-

Notifications

You must be signed in to change notification settings - Fork 422

Fix importer intrinsics #518

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Fix importer intrinsics #518

Conversation

libs/mve/depthmap.h

Outdated

| inline void | ||

| depthmap_convert_conventions (typename Image<T>::Ptr dm, | ||

| math::Matrix3f const& invproj, bool to_mve) | ||

| math::Matrix3f const& invproj, bool to_mve, bool add_pixel_offset) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm curious, why did you add this? This pixel offset is always necessary when converting from image coordinates (where the center of the top left pixel is (0,0)) to 3D image plane coordinates (where the center of the top left pixel is (0.5,0.5)).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

From the following comment in colmap/blob/dev/src/base/reconstruction.cc,

// Bundler output assumes image coordinate system origin

// in the lower left corner of the image with the center of

// the lower left pixel being (0, 0). Our coordinate system

// starts in the upper left corner with the center of the

// upper left pixel being (0.5, 0.5).

I understand that the image coordinate system of Colmap also starts at (0.5,0.5). I made some experiments a few weeks ago, which "confirmed" that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Quick question: Does MVE offer some way to associate something like view_0006.mve with the original image name? Since the images are also reordered it is not easy to match the results with the original images

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why is it relevant what convention Colmap uses internally for images? You're inputting images into MVE, and the pixels of that depth image correspond to the pixels of the color image. Now you're treating these images with MVE's convention to convert to a depth map in MVE's convention.

Unless you're telling me that Colmap's depth map is shifted by 0.5 pixels compared to the color image, no change should be necessary here.

Each MVE view has a meta.ini file that has a name key. Typically you put the original image name (such as IMG_123.JPG) into that key. Does that help?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

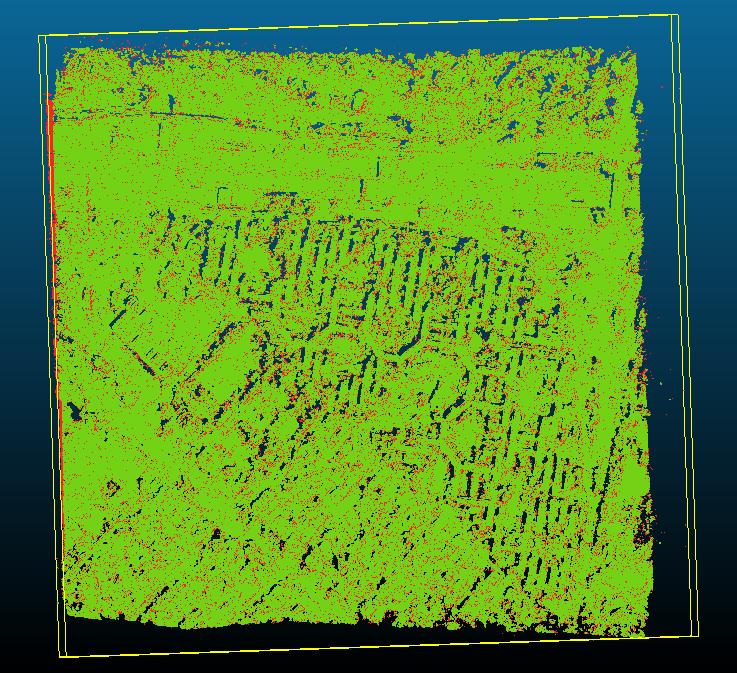

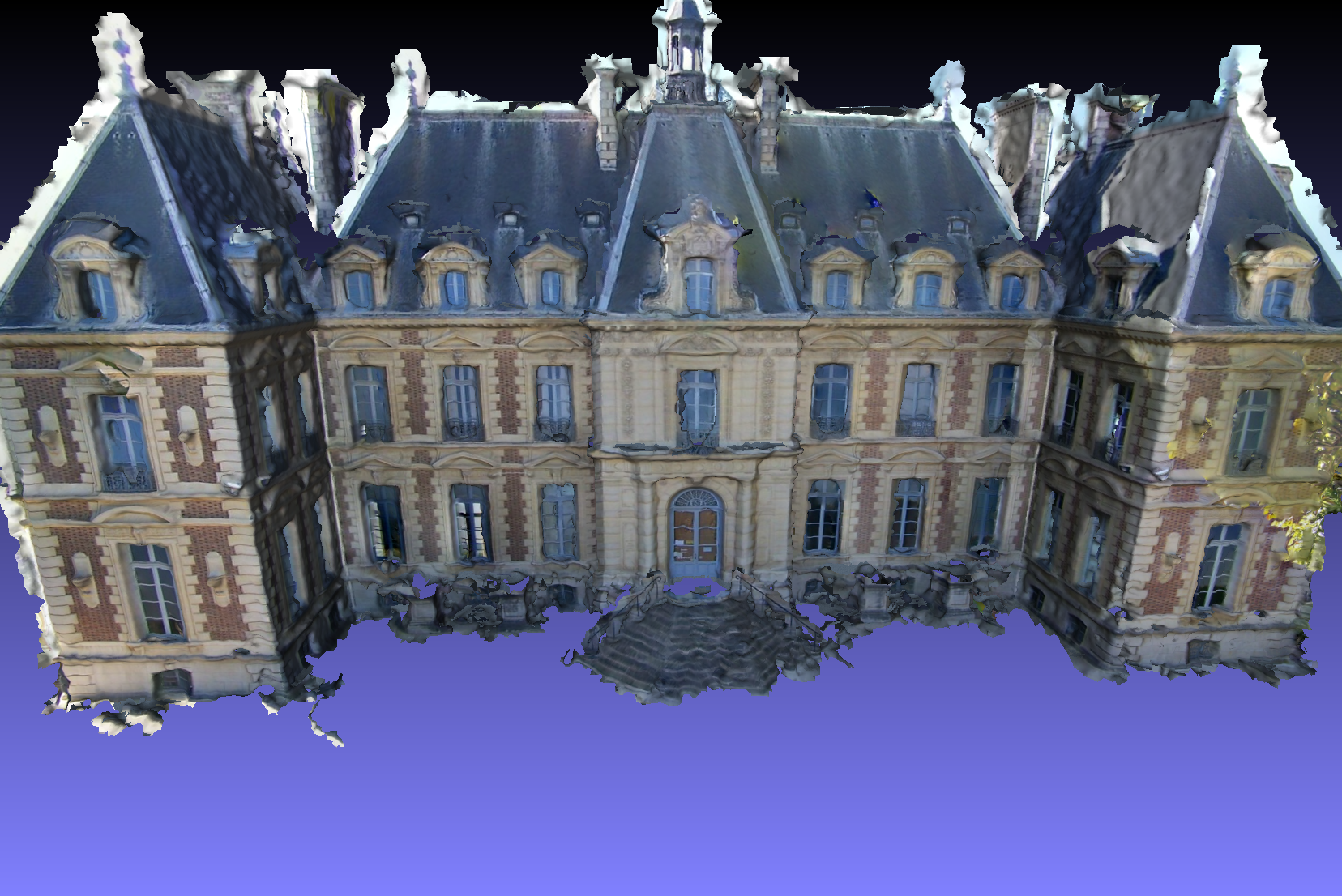

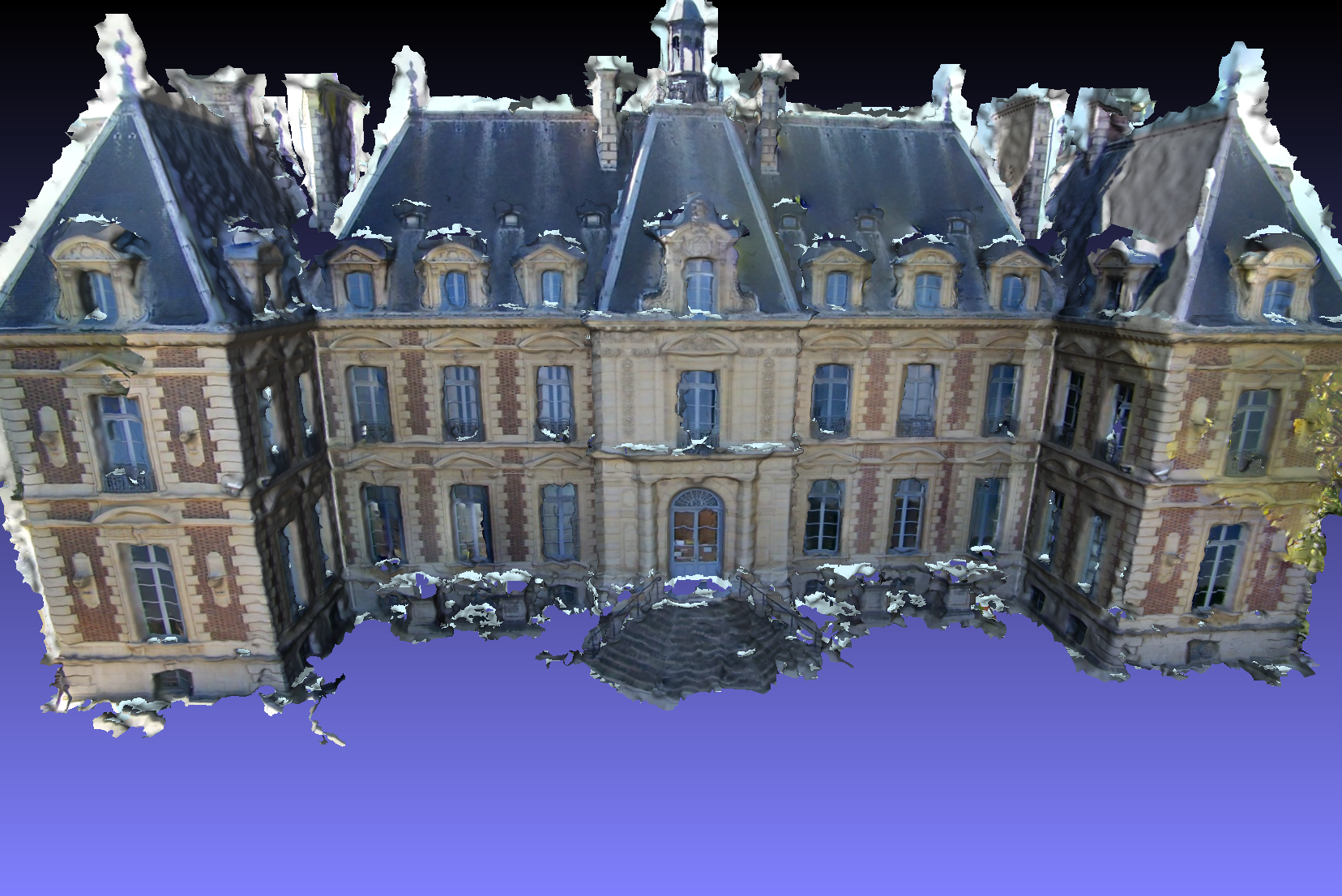

The Colmap Fork I'm working with provides some matrices, which transform the reparameterized depth maps back into world coordinates. In the following, I'll use the corresponding point clouds as reference (shown in red).

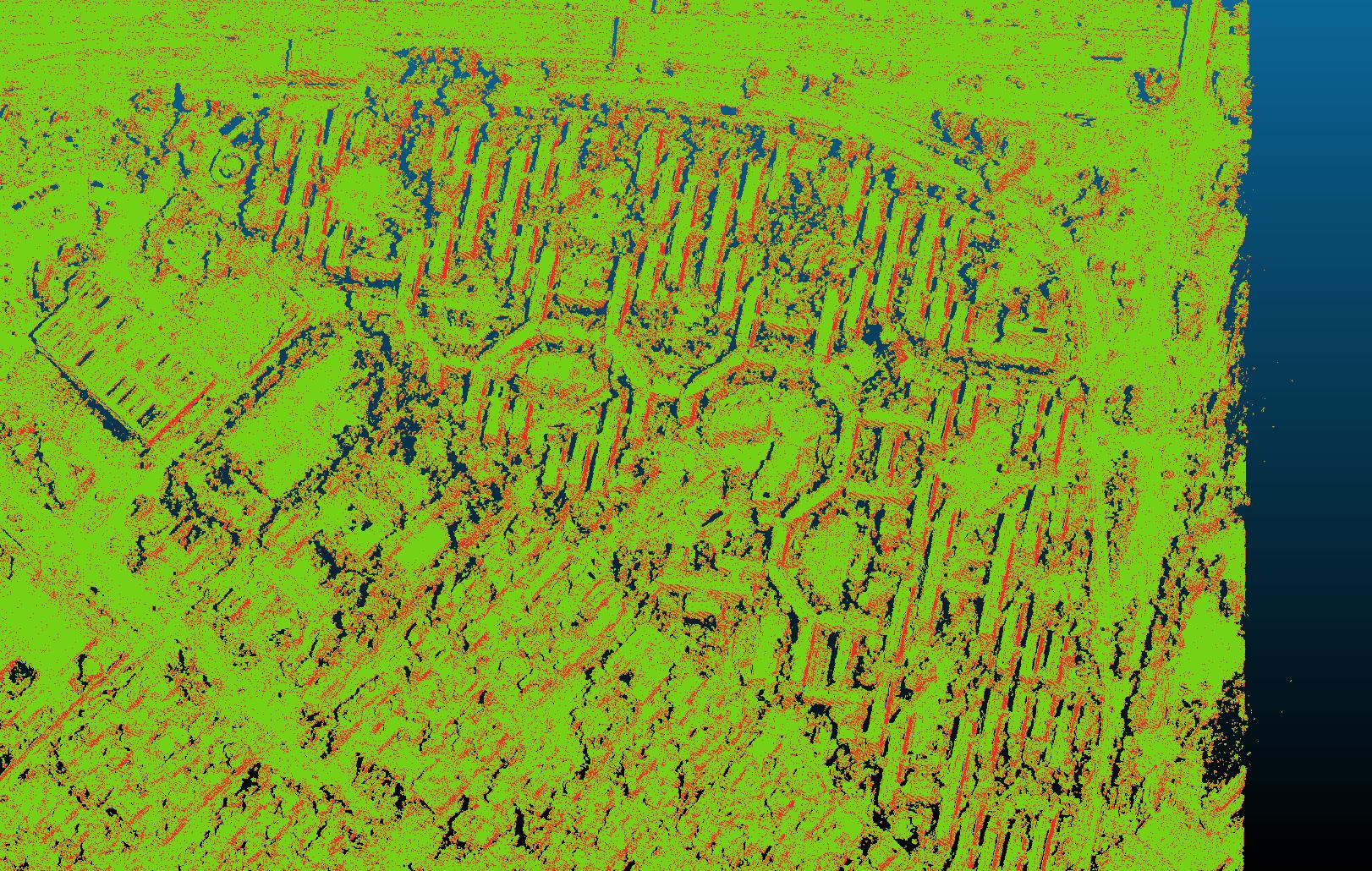

Here, an overlay of the reference point cloud and the point cloud generated with offset=0.0 (green). The reason it does not completely match is the fact that the computations in the reparameterized space are numerically more stable. But one can see that the differences (the noise) appears to be random and non-systematic.

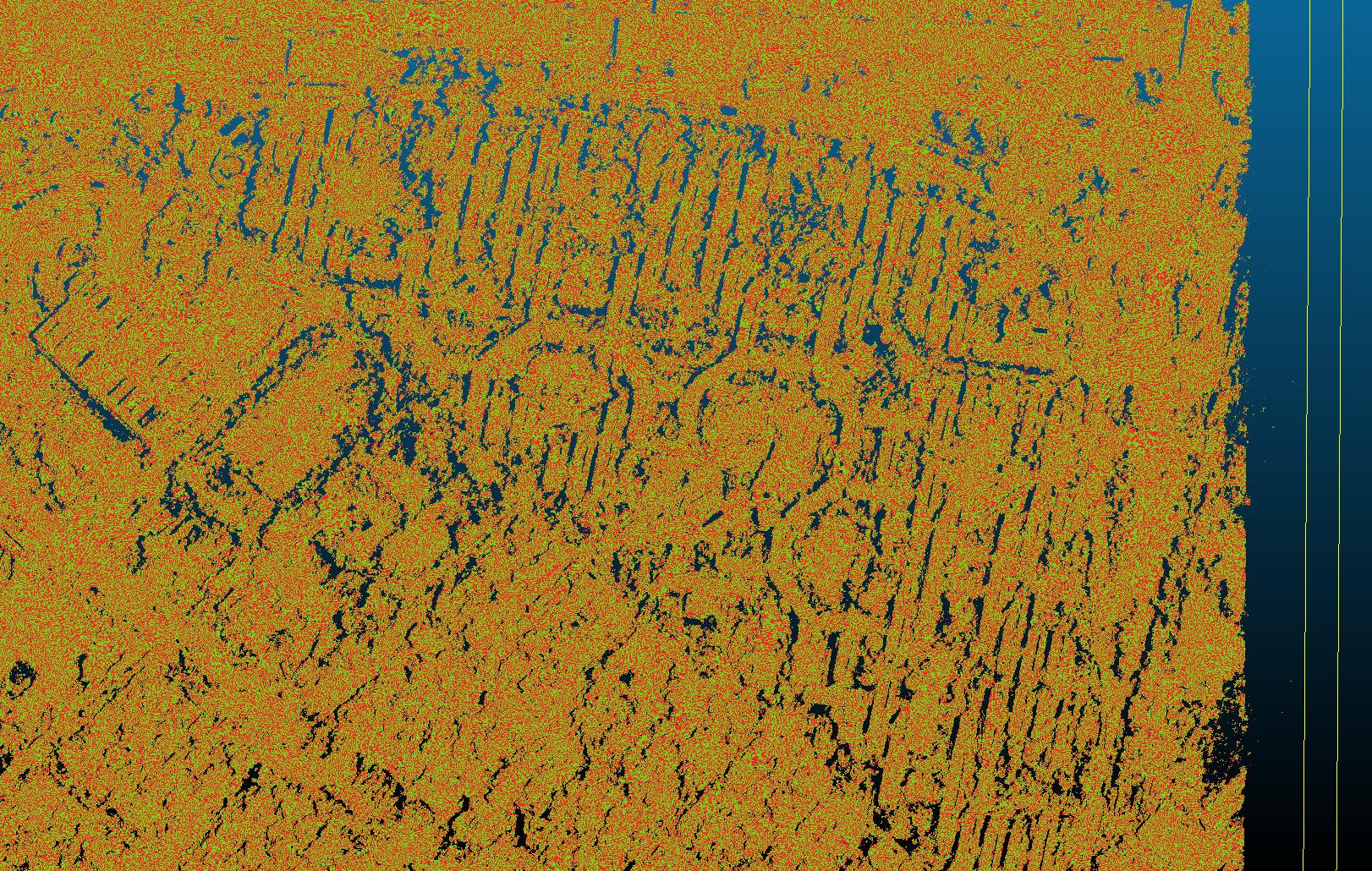

Here, an overlay of the reference point cloud and the point cloud generated with offset=0.5 (green)

On can clearly see that there is a systematic difference between the point clouds.

I think that makes sense, or am I missing something here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

But hold on a second. The offset we're talking about here is solely used to compute the scaling factor for converting depth values. I would assume that this offset has a negligible effect on the depth actually.Why? With this offset the position of the 3D point changes. And MVE uses the distance to the point as depth, so the value will be different. No?

This referred to the scaling value

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In this code

math::Vec3f px((float)x + 0.5f, (float)y + 0.5f, 1.0f);

px = invproj * px;

double len = px.norm();

dm->at(pos) *= (to_mve ? len : 1.0 / len);

the position of px does change with the offset, but it's really only used to scale the depth value (px.norm()), i.e., changes the final point cloud position in viewing direction, not orthogonal to it. Have you tried adding/subtracting 0.5 from the principal point before normalizing it (as per my previous comment). Maybe this fixes this issue you're seeing?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is to say, if the 0.5 offset was the problem, there would be more places you'd have to fix.

Most notably this one.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Have you tried adding/subtracting 0.5 from the principal point before normalizing it (as per my previous comment). Maybe this fixes this issue you're seeing

No, but this could / should have the same effect.

This is to say, if the 0.5 offset was the problem, there would be more places you'd have to fix.

I'm afraid that's the case.

Oh boy this importer is killing me ... :D

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I feel you man. These two things are among the hardest in human history: Converting between conventions, and rotations. Literally the hardest thing in the world is converting rotation conventions.

|

Offset reverted and commit squashed |

|

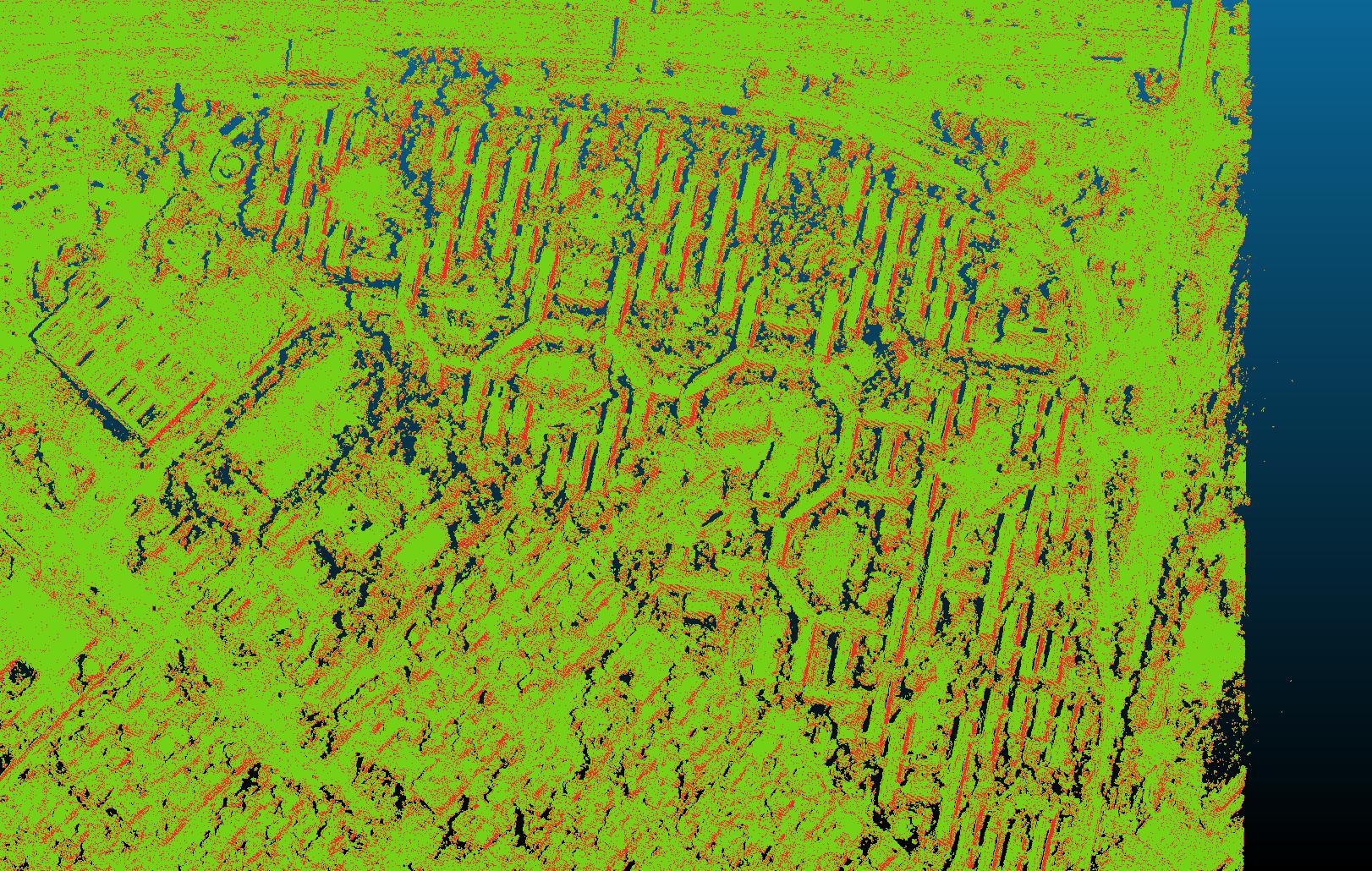

I've conducted some more experiments and I think you are right with

The previous results were created with a python script, I already had to convert the depth maps to world points. In addition, I run some texturing experiments with the Sceaux dataset that showed that adjusting the principal point (with -0.5f) leads to a mesh with untextured faces. Thus, I think the master branch is already in a good shape. Sorry for the noise and thank you for merging. |

|

No worries, I'm glad it works. Just to reiterate on the principal point though. When you subtract 0.5 from it, it has to be before normalization. For example, this

Will become

|

|

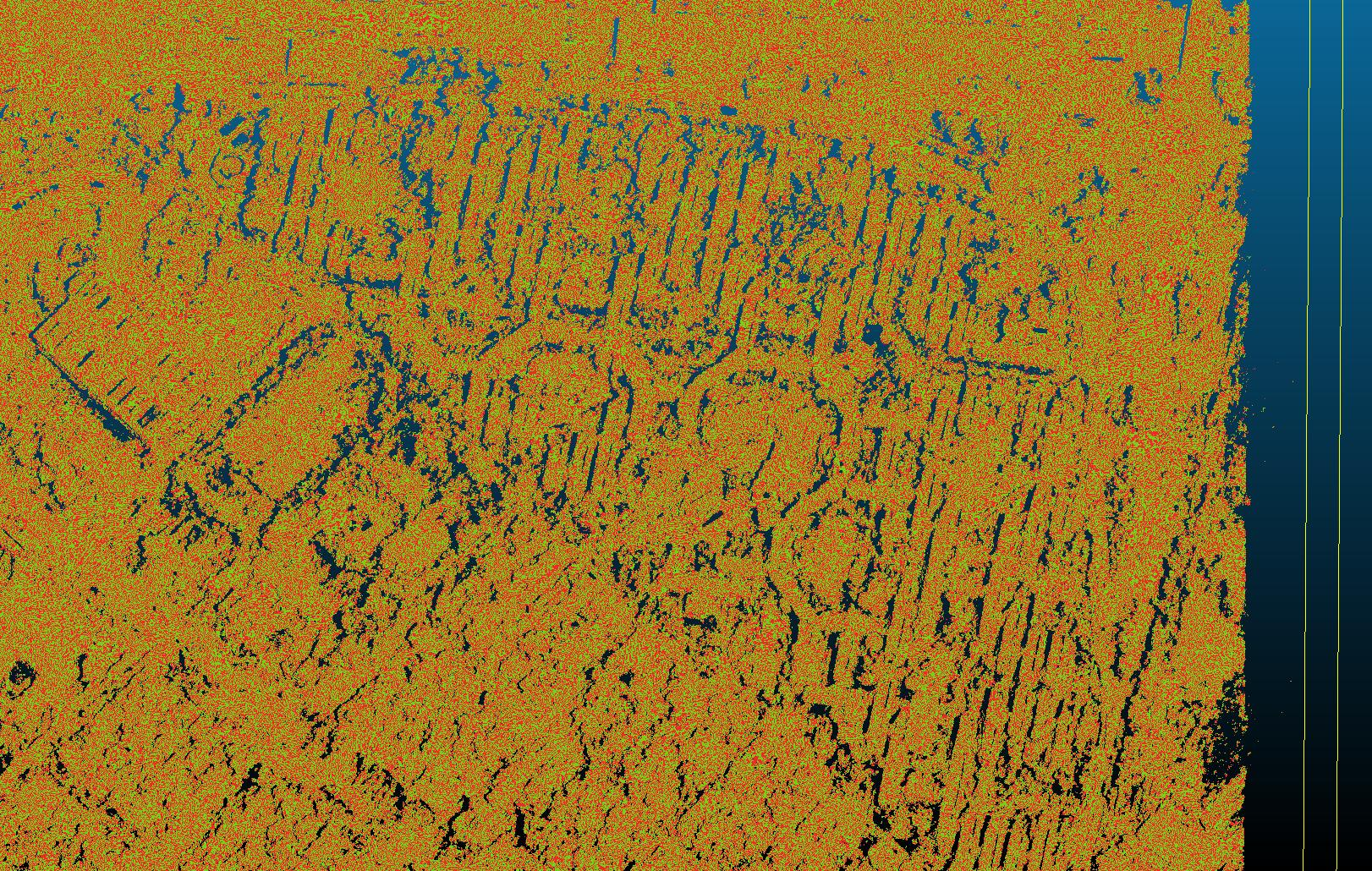

So, by using

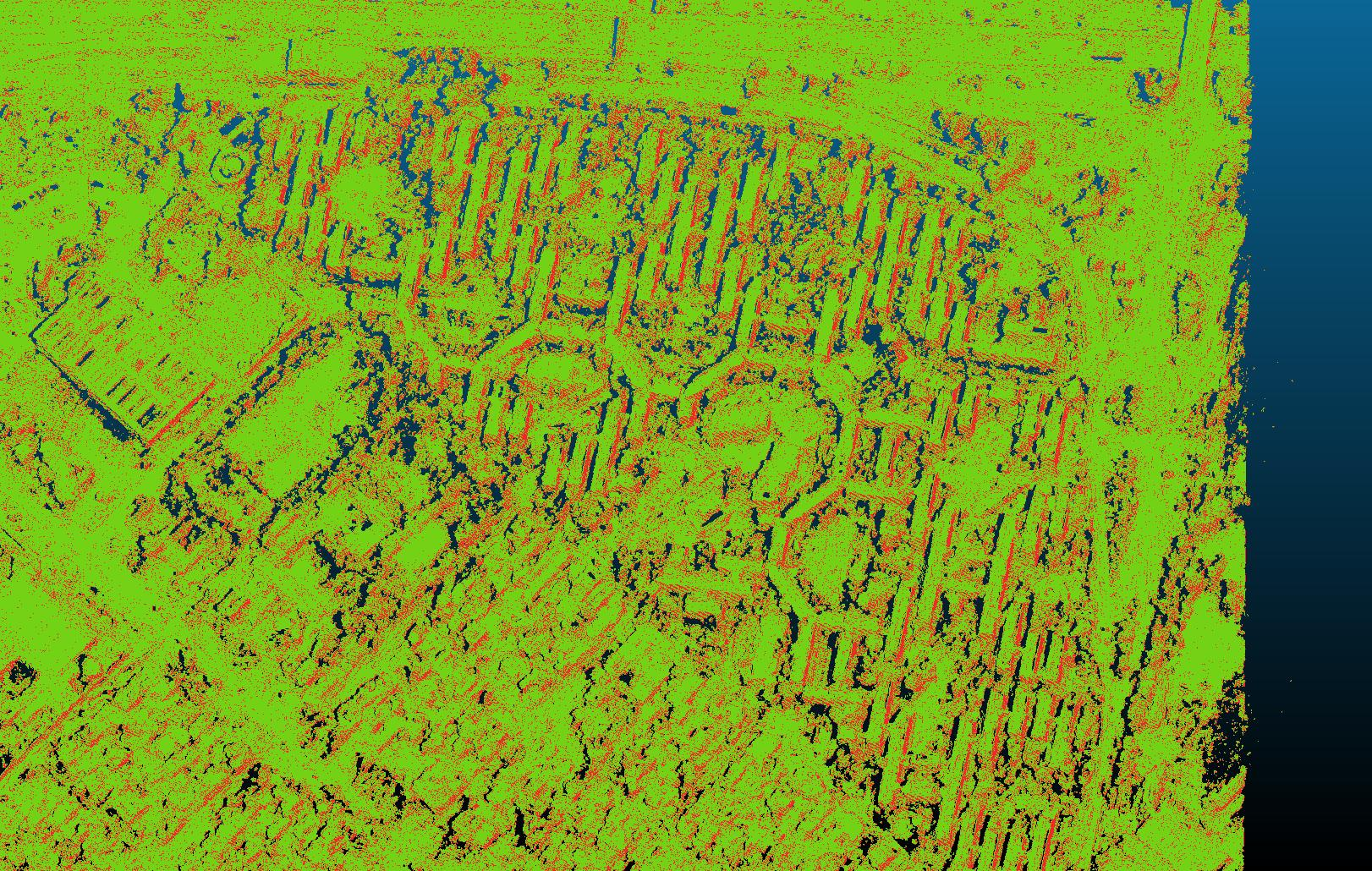

that would mean that there is a shift between the image and the depth map, which sounds really unreasonable to me. However, looking again at the results in (see results below - copied from a previous post) But then again, that would mean that there is this (non-intuitive) shift ... |

Fix Colmap importer intrinsic conversion and remove redundant depth map conversion code