-

Notifications

You must be signed in to change notification settings - Fork 422

Fix fill_calibration and fill_inverse_calibration #517

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Maybe one additional note: Why this did not appear earlier? I think in many situation the case differentiation in |

|

Yes I think we very rarely, if at all, had the case where |

|

If you check the original implementation of If one substitutes |

|

I am a little bit more skeptical about this change. It is correct that we rarely have to deal with pixel aspect rations != 1. But we have tested datasets with pixel aspect ratios != 1, for example, the Strecha datasets, and when dataset came out that's also when we implemented this code. The reason why I'm skeptical is because what Sebastian uses as empirical proof, while it does look convincing, does come from a different SfM and stereo system, which may use a different convention to what we use. So I would at least like to try to reconstruct the fountain with both implementations (the baseline and this PR), and see what the results look like. Problem is, the ground truth camera parameters for the image seem to be gone? Edit: @pmoulon seems to have this dataset in his repo. But WHO KNOWS what the format of the camera files is. |

|

In MVE conventions, if I remember correctly, the focal length corresponds to the (inverse of the) field of view of the longer side of the image. And longer side doesn't mean more pixels, but it means the image plane is wider than tall. And that has to take the aspect ratio of the pixels into account, hence we have code Here, width/height is the image dimensions in pixels, and pwidth/pheight is the pixel size in some (irrelevant) unit. |

|

Ok yeah, I think I remember. So if "longer side" is the convention then we need to take that into account. @SBCV is right in that we always have I guess this is not taken into account when the parameters are imported and that causes the issues? |

I absolutely understand that, since it affects such a basic component of the library.

Okay, I'll make a comparison tomorrow and post the results here.

Before submitting this PR, I tried to modify the |

|

Question -- did you consider that our focal length is relative to max(width, height), because I can't see that here. For example, our focal length is focal_length_in_px / max(width, height), essentially to make the focal length independent of the image size. How is the focal length from Colmap defined? Crazy idea, but I think this might actually be the issue you're running into, and the code modified in the PR might actually be correct. So, the values Does this make sense? |

|

Also, comparing our old code: with your new code, the code is equivalent if the image is wider than tall, so evaluating it on the fountain dataset is probably not very useful. :( Sidenote: |

I think that this is only true with

is not true. And this also illustrates why I find the original if-else-condition a bit odd. We are testing for |

I think that the focal lengths @ahojnnes Could you shed some light on this? This would help a lot. Especially, it would be useful to know, if largest image dimension means |

Can you elaborate this a little bit. Like I said, before creating this PR, I tried already something like the following without success. |

|

I just realized that this issue is actually more complicated as expected... |

Converting the depth map conventions is relatively easy. For each pixel Actually we have a function for that here. |

Let me try. Because I don't see where you normalize Edit: I can see that MVE's code is not ideally structured, where parts of the conversion happen in In any case, when the normalization happens here, you have to skip the normalization in And that means your code and my code are really not that different except that I have a cast to compute the aspect ratio... :( |

Thank you! I'll give it a try the next time I have access to my desktop PC - which will be on Monday. |

|

Could confirm that the problem has been caused by the missing adjustment in See #518 for the corresponding adjustments. |

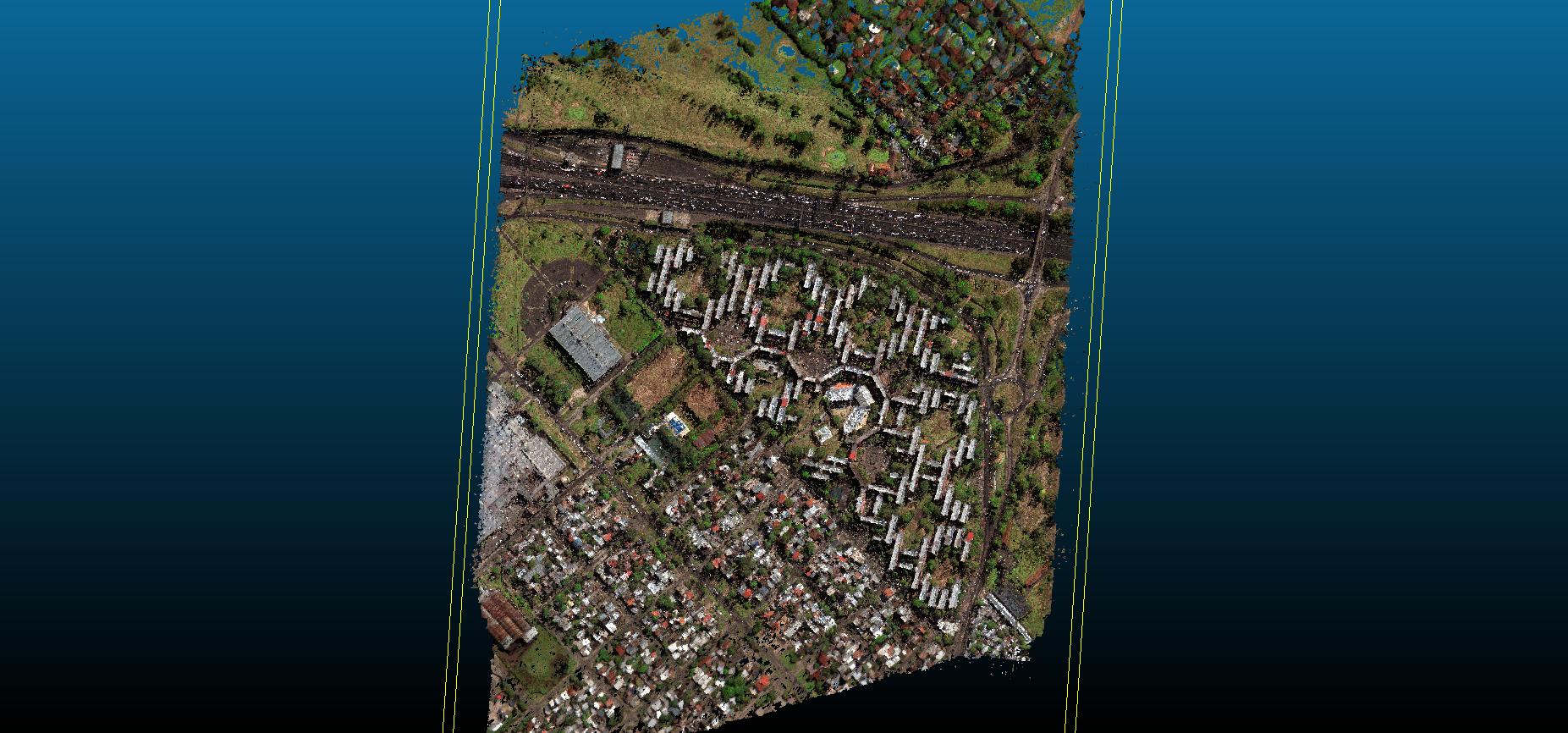

When running an experiment with some satellite images, I observed that the

fill_calibrationandfill_inverse_calibrationmethods are (probably) incorrect.I tried to compute a dense point cloud using some depth maps imported with the recently added Colmap-Importer. The fused result showed that the triangulation of the different depth maps have a huge displacement. The following image shows an example. The mesh in green is reconstructed with Colmap and serves as reference.

The reason for that is that the pinhole camera models used to approximate the pusbroom cameras of satellites are very special. They potentially have dramatically different focal length values

fxandfyas well as strange principal pointscxandcy. Therefore, they are suitable for testing the pipeline for cases with extreme values. In addition, already small errors in the camera models / poses result in large reconstruction point cloud errors, since the satellites are thousands of kilometers away from the reconstructed point cloud.Note, when running the same experiments with "standard" camera models (i.e.

fxalmost identical tofy,cxandcyclose to the image center), I did not observe cases like this.The following image shows the fused point cloud of all 50 cameras using the changes of this PR.

The next image shows an overlay with the green mesh. As one can see, the differences are very small (i.e. the pose and shape of the fused point cloud and the mesh are almost identical)

Here, a final textured mesh using the the different pipeline components of MVE except of the Structure from Motion and the Depth Map computation (for both steps it is necessary to use this Colmap fork to address specific properties of satellite images).

I'm aware that this is a fundamental change, but I guess that the experiments above nicely show that this change is correct. Any second thoughts on this?