-

Notifications

You must be signed in to change notification settings - Fork 1.1k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

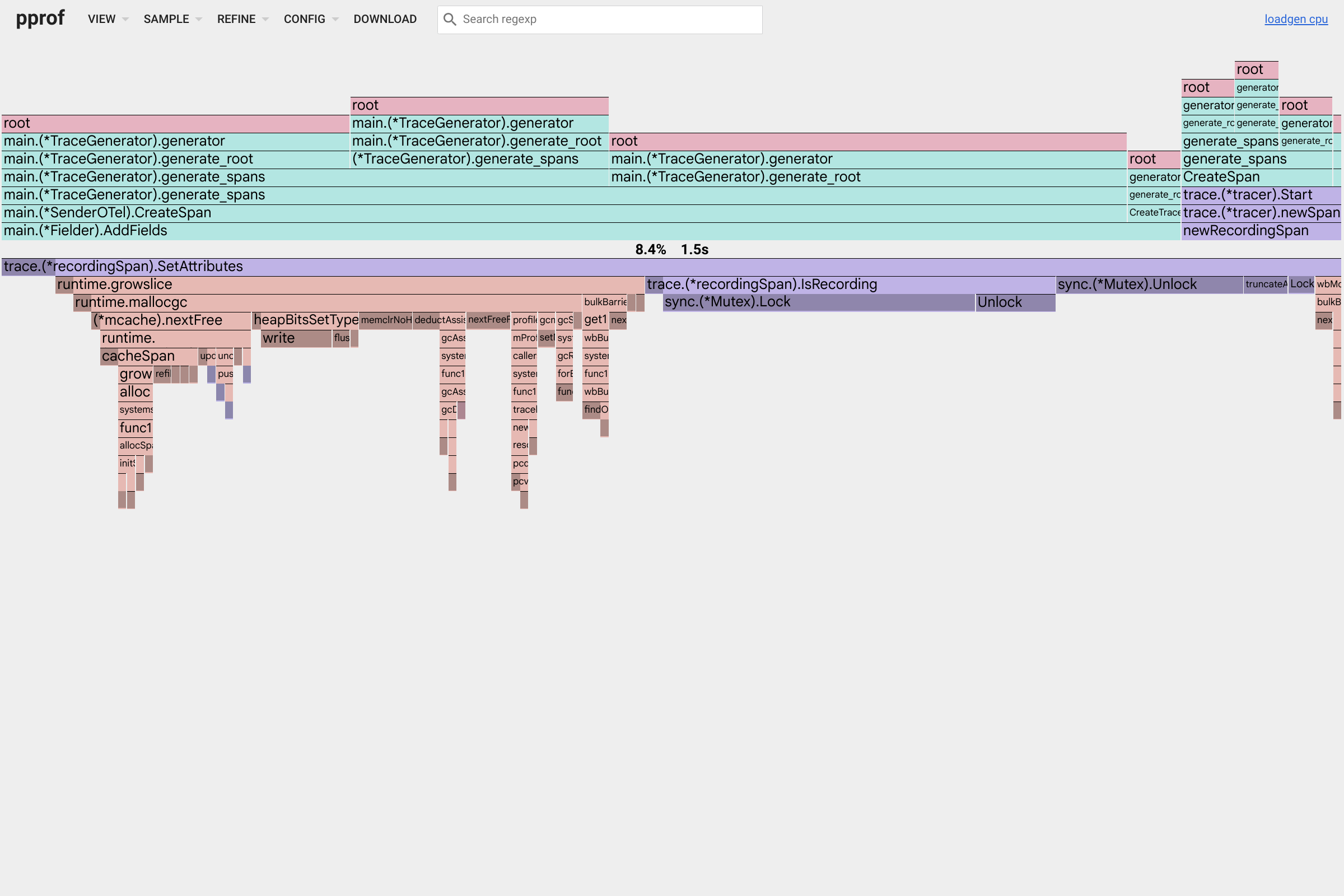

sdk/trace: use slices.Grow() to avoid excessive runtime.growslice() #4818

sdk/trace: use slices.Grow() to avoid excessive runtime.growslice() #4818

Conversation

Codecov ReportAttention:

Additional details and impacted files@@ Coverage Diff @@

## main #4818 +/- ##

=======================================

- Coverage 82.3% 82.3% -0.1%

=======================================

Files 226 226

Lines 18410 18421 +11

=======================================

+ Hits 15157 15164 +7

- Misses 2969 2971 +2

- Partials 284 286 +2

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do we have some benchmarks that would show the reduced number of allocations? Can you add a benchstat result in the description?

|

|

That's because I introduced a bug while inlining. It's fixed now. Description updated. |

## Which problem is this PR solving? - `fielder.AddFields()` is very inefficient, calling `span.SetAttributes()` once per field set. We can optimise/batch this.  ## Short description of the changes - Batches calls to `span.SetAttributes()` to ensure efficient memory allocations, decreasing garbage & growslice. Alternative to try first before pushing open-telemetry/opentelemetry-go#4818 forward.

## Which problem is this PR solving? - `span.SetAttributes()` even with batching of the attributes in one call from #34, will add them all to the inner `s.attributes` object one at a time, causing excess `growslice` calls. ## Short description of the changes - Cherry-picks in open-telemetry/opentelemetry-go#4818 for improved performance.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Out of curiosity, do you have access to profiling data after the change?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the feedback, I'll get that sorted out.

And yes, I have profiles before/after.

Co-authored-by: Robert Pająk <pellared@hotmail.com> Co-authored-by: Damien Mathieu <42@dmathieu.com>

|

Benchmark updated and bug in the pre-sizing fixed!! |

## Which problem is this PR solving? - Review found a bug in `addOverCapAttrs()` ## Short description of the changes - Import fixes from upstream open-telemetry/opentelemetry-go#4818

|

@MadVikingGod any chance of squeezing this into v1.22? It's ready to go otherwise (2 approvals) |

Profiling of our otel load generator shows excessive time in growslice. This indicates that we need to expand cap(s.attributes) all at once, rather than one element at a time in the loop.