-

Notifications

You must be signed in to change notification settings - Fork 8.5k

[NP] KibanaRequest provides request abortion event #55061

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| } | ||

|

|

||

| private getEvents(request: Request): KibanaRequestEvents { | ||

| const end$ = fromEvent<void>(request.raw.req, 'end').pipe(first()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added end$ to complete aborted$ observable to prevent memory leaks. I use the end event here to make the tests green https://github.com/elastic/kibana/pull/55061/files#diff-7ffe36ae4fa33e0fddf01a3182306eedR47

From my understanding end cannot be used here, as it's emitted when a request payload is fully consumed. It seems that I should use close instead, but switching to close fails the tests (end$ is not completed). I have a question: What is the correct way to understand that request won't emit anymore to complete aborted$?

Note: I don't set connection: keep-alive/close explicitly in tests, I assume the code should work for either case.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@elastic/kibana-platform @watson any thoughts?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not that familiar with rxjs, but I'll do my best to answer.

You stepped right into the most complicated part of Node.js 😅 Streams can "end" in many ways unfortunately, it differs with the type of stream, and in some cases also with the version of Node.js, and it's often hard to implement correctly without memory leaks - as you also found out.

A normal request/response event-lifecycle looks something like this:

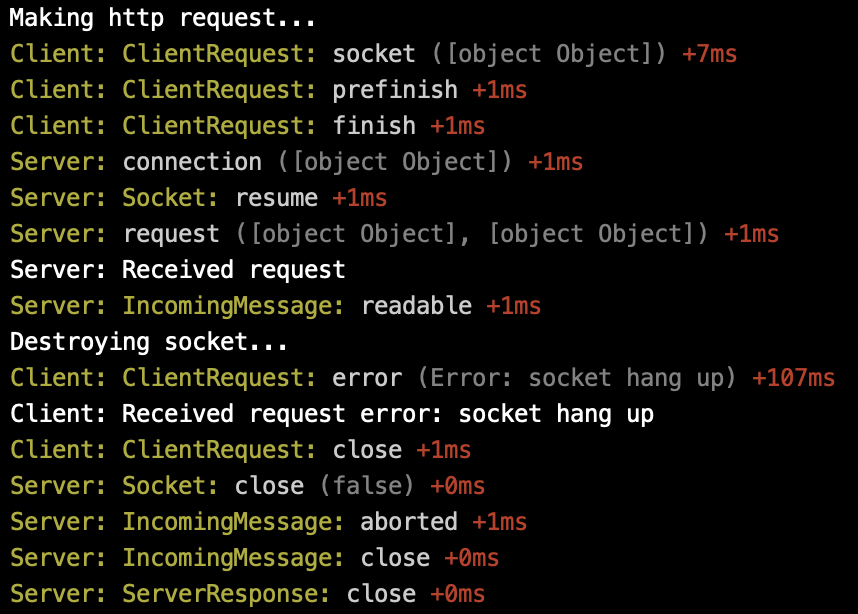

In case the underlying socket is destroyed the order of events are like so:

Note: If I want to be sure to be notified when a stream ends I always use the end-of-stream module.

Pipe only cares about the end event, so if you pipe source into target, but source then emits a close event, target will not know about it and the pipe will still be open:

Similar to the end-of-stream module, there's a pump module which takes care of this edge-case, so that the target stream is correctly closed if the source stream closes. I think that's the issue you're seeing

Note: I don't set

connection: keep-alive/closeexplicitly in tests, I assume the code should work for either case.

Correct, the events on the request and response objects shouldn't be affected by the Connection header.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Great explanation, really appreciate it!

I surprised to see Server: Socket: closed in both your examples. From my understanding server should keep the socket open since HTTP/1.1 uses connection: keep-alive by default. What I missed here?

https://www.w3.org/Protocols/rfc2616/rfc2616-sec8.html

A significant difference between HTTP/1.1 and earlier versions of HTTP is that persistent connections are the default behavior of any HTTP connection. That is, unless otherwise indicated, the client SHOULD assume that the server will maintain a persistent connection, even after error responses from the server.

What I missed here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@watson is this built-in nodejs logger?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nope, I'm using my own event debugging module: https://www.npmjs.com/package/event-debug

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Then end usage in the code looks correct:

- server handles an incoming request and responds.

endemitted -->aborted$completes -->end$completes - an incoming request aborted / socket destroyed.

abortedemitted -->aborted$completes -->end$unsubscribes fromendevent (there are no other subscriptions).

Therefore memory a memory leak should not be possible.

Additionally, I performed a loading test locally and everything looks good. load test

Using additional package https://github.com/mafintosh/end-of-stream looks a bit overkill for such a small use-case.

@elastic/kibana-platform are you okay with the current implementation or we should consider exposing the EventEmitter interface instead of Observables? Then we needn't to think about cleaning up after manually created Observable (GC removes all listeners)

|

Pinging @elastic/kibana-platform (Team:Platform) |

pgayvallet

left a comment

pgayvallet

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

implementation is looking good to me and follow consensus of #44302

| return { | ||

| aborted$: fromEvent<void>(request.raw.req, 'aborted').pipe(first(), takeUntil(end$)), | ||

| } as const; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm wondering if we should add areplay effect. Is there a risk for the request to already be aborded when entering the request handler?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That's a tricky part... the underlying framework should handle it. How to test it? No clue. We can add sharedReplay to be double-safe.

💚 Build SucceededHistory

To update your PR or re-run it, just comment with: |

* add aborted$ observable to KibanaRequest * complete observable on request end * update docs * update test suit names * always finish subscription * address comments

…t-of-legacy * 'master' of github.com:elastic/kibana: Restore 200 OK + response payload for save and delete endpoints (elastic#55535) [SIEM] [Detection Engine] Log time gaps as failures for now (elastic#55515) [NP] KibanaRequest provides request abortion event (elastic#55061)

|

Any possibility to backport to 7.6.1? (See #58749) |

* add aborted$ observable to KibanaRequest * complete observable on request end * update docs * update test suit names * always finish subscription * address comments

|

@lukasolson #58851 backport to 7.6 branch. which version label I should use? 7.6.1/7.6.2? |

Summary

Closes #44302

Provides an alternative to hapi request.disconnect event, which uses aborted under the hood.

The usage example in the Legacy platform

kibana/src/legacy/core_plugins/elasticsearch/lib/abortable_request_handler.js

Line 29 in f13adfa

The

aborted$event provided via Observable interface as proposed in #44302 (comment)Usage example:

Checklist

Use

strikethroughsto remove checklist items you don't feel are applicable to this PR.[ ] This was checked for cross-browser compatibility, including a check against IE11[ ] Any text added follows EUI's writing guidelines, uses sentence case text and includes i18n support[ ] Documentation was added for features that require explanation or tutorials[ ] This was checked for keyboard-only and screenreader accessibilityFor maintainers

Dev Docs

KibanaRequestprovidesaborted$observable that emits once if and when an incoming request has been aborted.Usage example: