-

Notifications

You must be signed in to change notification settings - Fork 16

Fix corrupt data when Deleting entries from zip files. #103

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

@grendello This is the problem related to #102 , I managed to narrow it down into a unit test. Still can't see what the problem is, even with the corrected API. |

|

@dellis1972 it's just occurred to me - do the test files shrink or grow when added to the archive? If the latter, then perhaps this is some sort of problem here? |

The are either smaller or the same size |

|

@grendello Interesting.... As per the original report changing the code from to fixes the issue. So by moving the file which is "stored" around we can seemingly fix the problem. |

|

Hmm, this starts to look like an issue with libzip - the weird error code, the rearranging of files fixing it. |

The error seems to be in here https://github.com/nih-at/libzip/blob/26ba5523db09213f532821875542dba7afa04b65/lib/zip_extra_field.c#L351 something to do with extra fields. |

|

specifically this line https://github.com/nih-at/libzip/blob/26ba5523db09213f532821875542dba7afa04b65/lib/zip_extra_field.c#L396 is the one which returns an error |

|

@dellis1972 Try modifying |

I tried that , it didn't change anything. But it did still end up in the |

Yes, it appears that |

|

@grendello the interesting thing is I don't think the error is in the creation of the zip file. Becuse I can repo the bug by opening a zip file created with just So I guess the problem is with loading of the zip. Looking at my debug output the values on https://github.com/nih-at/libzip/blob/26ba5523db09213f532821875542dba7afa04b65/lib/zip_extra_field.c#L381 look very weird. For a working file |

|

@dellis1972 Looking at |

|

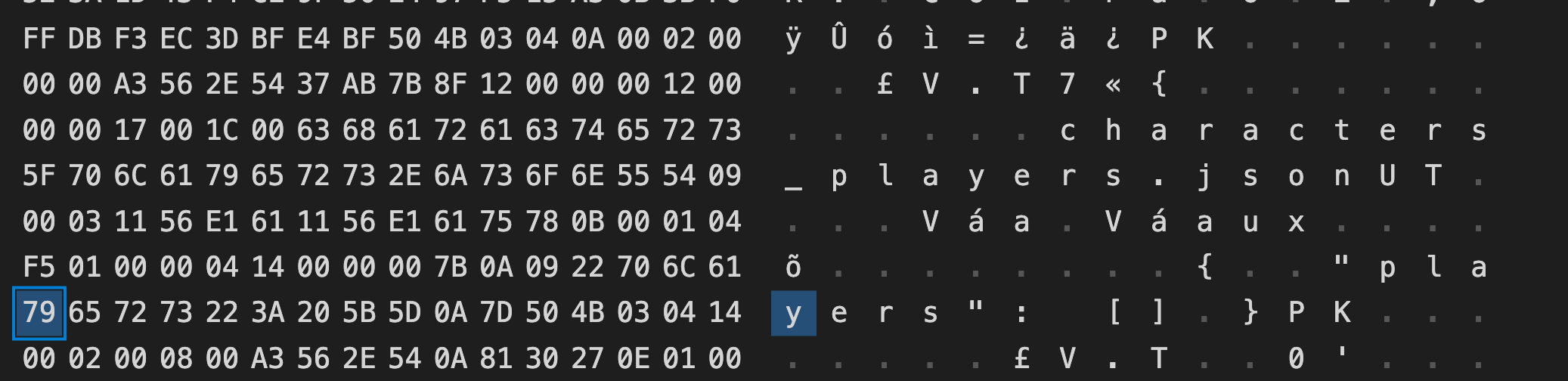

So we are reading data from what looks like the wrong location. This one of the example files. In this case libzip is trying to read the extra field data from the locaiton where the stored file data is. In both of the cases I have here. It is the stored file which has the problem. So the code on [1] is calculating the extra field offset as |

|

I just tried |

|

I'm wondering if this stored entry even has extra fields.... that might explain why this isn't working. |

So the issue might still be a problem with libzip - why does it think there's an extra field? |

So I just used the This seems to work as expected. So now it looks like its not libzip..... I'll try and write a C version of our test and see if I can get it to break. |

|

So I put this together and it works it seems. The int

main(int argc, char *argv[]) {

const char *archive;

zip_source_t *src;

zip_t *za;

zip_error_t error;

void *data;

size_t size;

if (argc < 2) {

fprintf(stderr, "usage: %s archive\n", argv[0]);

return 1;

}

archive = argv[1];

zip_error_init(&error);

za = zip_open (archive, 0, &error);

zip_error_fini(&error);

zip_int16_t index = zip_name_locate (za, "info.json", ZIP_FL_NOCASE);

// remove the entry

if (index >= 0) {

printf ("deleting info.json at %hu", index);

if (zip_delete (za, index) < 0) {

fprintf(stderr, "did not delete entry '%hu': %s\n", index, zip_strerror(za));

}

}

/* close archive */

if (zip_close(za) < 0) {

fprintf(stderr, "can't close zip archive '%s': %s\n", archive, zip_strerror(za));

return 1;

}

return 0;

} |

|

So my thinking is this... I wonder if our code is not seeking from the right locations? So we end up at the wrong place in the memory stream. There are a number of places where libzip will seek from the beginning of the file, and some where its from the current location. I'll start looking to make sure we don't change the |

It appears to be very likely, indeed |

Fixes dotnet#102 The initial report showed the following error ``` Unhandled exception. System.IO.IOException: An attempt was made to move the position before the beginning of the stream. at System.IO.MemoryStream.Seek(Int64 offset, SeekOrigin loc) at Xamarin.Tools.Zip.ZipArchive.stream_callback(IntPtr state, IntPtr data, UInt64 len, SourceCommand cmd) ``` The really odd thing was that this only happened if the example files were added to the archive in a certain order. This pointed to some kind of memory corruption or stream offset issue. Digging into the documentation for [zip_source_function]](https://libzip.org/documentation/zip_source_function.html#DESCRIPTION) we can being to see what the problem is. Our current implementation of this user callback treats the SourceCommand's of `ZIP_SOURCE_BEGIN_WRITE` `ZIP_SOURCE_COMMIT_WRITE`, `ZIP_SOURCE_TELL_WRITE` and `ZIP_SOURCE_SEEK_WRITE` in the same way as their non `WRITE` counter parts. In that when we get a `ZIP_SOURCE_SEEK_WRITE` we seek in the current archive `Stream` to the appropriate location. Similarly `ZIP_SOURCE_BEGIN_WRITE` seeks to the start of the current archive `Stream`. This implementation is incorrect. The documentation for `ZIP_SOURCE_BEGIN_WRITE` is as follows ``` Prepare the source for writing. Use this to create any temporary file(s). ``` This suggests that a new temporary file is expected to be created. Also if we look at the following descriptions ZIP_SOURCE_TELL Return the current read offset in the source, like ftell(3). ZIP_SOURCE_TELL_WRITE Return the current write offset in the source, like ftell(3). We can see that there are supposed to be two different offsets. One for reading and one for writing. This leads us to the reason why this problem was occurring. Because both the `READ` and `WRITE` are operating on the exact same `Stream` were are getting into a position where the original data was being overwritten by us deleting an entry. What we should have been doing was creating a temp `Stream` when we got the `ZIP_SOURCE_BEGIN_WRITE` SourceCommand. Then use this temp `Stream` to write all the required changes to before finally overwriting the original `Stream` when we get the `ZIP_SOURCE_COMMIT_WRITE` SourceCommand. This will ensure that the original archive data will remain intact until all the processing is done. So rather than passing in a `GCHandle` to the `Stream` directly , a new class has been introduced. The `CallbackContext` class will be used to pass data between the managed and unmanaged code. It will contain the properties for the `Source` stream as well as `Destination` stream. The `Source` will always be used for reading, the `Destination` (if present) will be used for writing. Now on `ZIP_SOURCE_BEGIN_WRITE` we create a new temp file stream which we will use to create the updated zip file. This new stream will be stored in the `CallbackContext.Destination` property so that all the other `WRITE` based commands will work on it. Finally when we get the `ZIP_SOURCE_COMMIT_WRITE` SourceCommand, we will copy the data to the `CallbackContext.Source` stream. Then finally we will dispose of the `CallbackContext.Destination`.

| context.Destination = File.Open (tempFile, FileMode.OpenOrCreate, FileAccess.ReadWrite); | ||

| context.DestinationFileName = tempFile; | ||

| } catch (IOException) { | ||

| // ok use a memory stream as a backup |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@grendello do you think this is a good idea? or should we just throw an exception and fail.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think it's fine, we should do our best to make the code work

Fixes #102

The initial report showed the following error

The really odd thing was that this only happened if the example files

were added to the archive in a certain order. This pointed to some kind

of memory corruption or stream offset issue.

Digging into the documentation for zip_source_function we can

being to see what the problem is. Our current implementation of this

user callback treats the SourceCommand's of

ZIP_SOURCE_BEGIN_WRITEZIP_SOURCE_COMMIT_WRITE,ZIP_SOURCE_TELL_WRITEandZIP_SOURCE_SEEK_WRITEin the same way as their non

WRITEcounter parts. In that when we geta

ZIP_SOURCE_SEEK_WRITEwe seek in the current archiveStreamtothe appropriate location. Similarly

ZIP_SOURCE_BEGIN_WRITEseeks tothe start of the current archive

Stream. This implementation is incorrect.The documentation for

ZIP_SOURCE_BEGIN_WRITEis as followsThis suggests that a new temporary file is expected to be created. Also

if we look at the following descriptions