-

Notifications

You must be signed in to change notification settings - Fork 28.6k

[SPARK-26260][Core]For disk store tasks summary table should show only successful tasks summary #26508

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

…sks metrics for disk store

|

Test build #113730 has finished for PR 26508 at commit

|

|

Test build #113732 has finished for PR 26508 at commit

|

|

Test build #113800 has finished for PR 26508 at commit

|

|

So, to solve this problem, you're changing the format of the data on disk. That breaks backwards compatibility (you'll run into problems if you run with an old disk store). So you have to update Given that I don't see a way to fix this without changing the disk format, I wonder if there are better alternatives than basically doubling the amount of disk space needed to store metrics. What if you use the same existing fields and indices, but record successful tasks with positive numbers, and in progress or failed tasks as negative? You need some slight adjustments (e.g. |

|

Thanks @vanzin for the input. Let me check and update. |

|

Test build #113942 has finished for PR 26508 at commit

|

|

Test build #113943 has finished for PR 26508 at commit

|

Thanks @vanzin , I have updated accordingly. It is working fine for both live and history UI |

|

Test build #113944 has finished for PR 26508 at commit

|

|

Test build #113946 has finished for PR 26508 at commit

|

|

Test build #113947 has finished for PR 26508 at commit

|

|

Test build #113958 has finished for PR 26508 at commit

|

|

Test build #113961 has finished for PR 26508 at commit

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Small test suggestion, otherwise looks ok.

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

|

@vanzin Is it ok to go in? Thanks |

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

|

Thanks @vanzin for the comments. I have updated accordingly |

|

Test build #114295 has finished for PR 26508 at commit

|

|

Test build #114294 has finished for PR 26508 at commit

|

|

Test build #114299 has finished for PR 26508 at commit

|

|

Test build #114302 has finished for PR 26508 at commit

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Couple of small nits, then good to go.

core/src/test/scala/org/apache/spark/status/AppStatusStoreSuite.scala

Outdated

Show resolved

Hide resolved

|

Test build #114317 has finished for PR 26508 at commit

|

|

Test build #114316 has finished for PR 26508 at commit

|

|

Merging to master. |

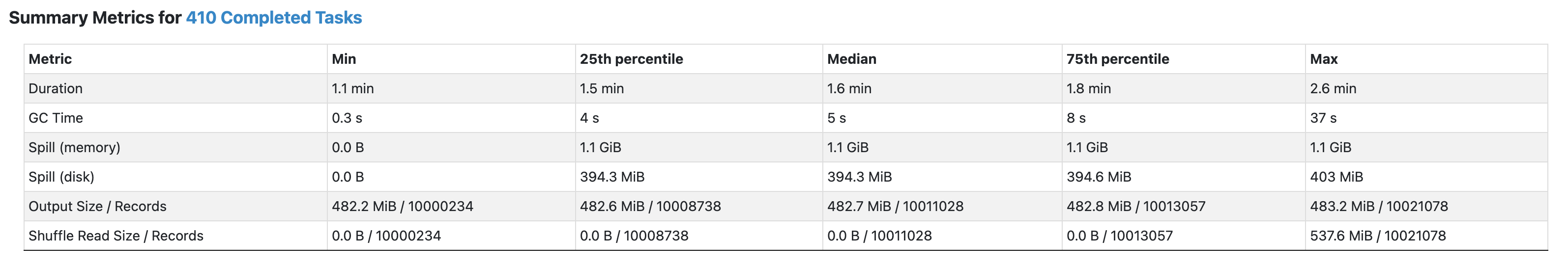

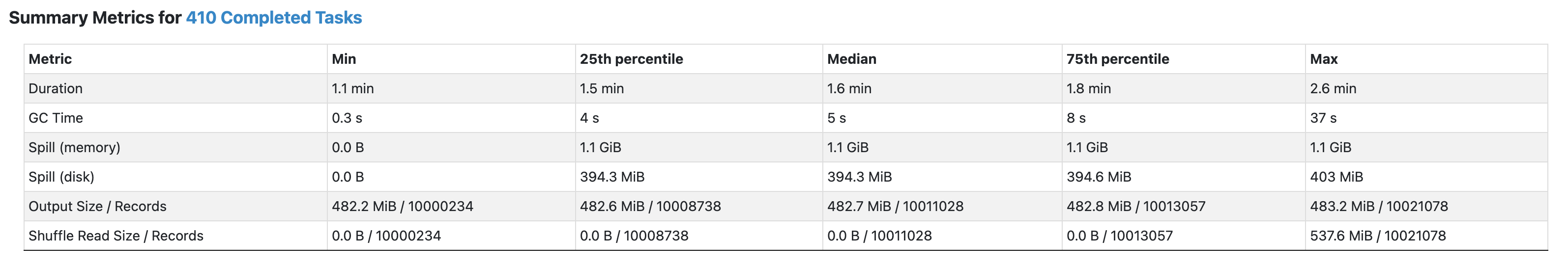

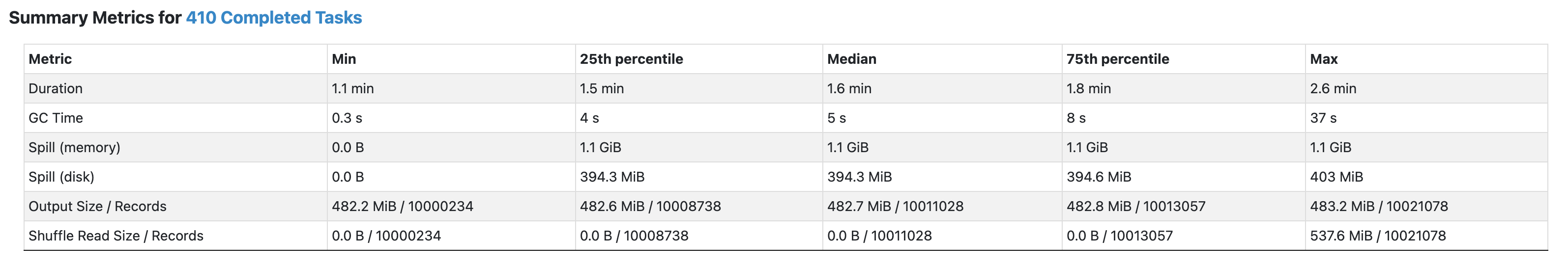

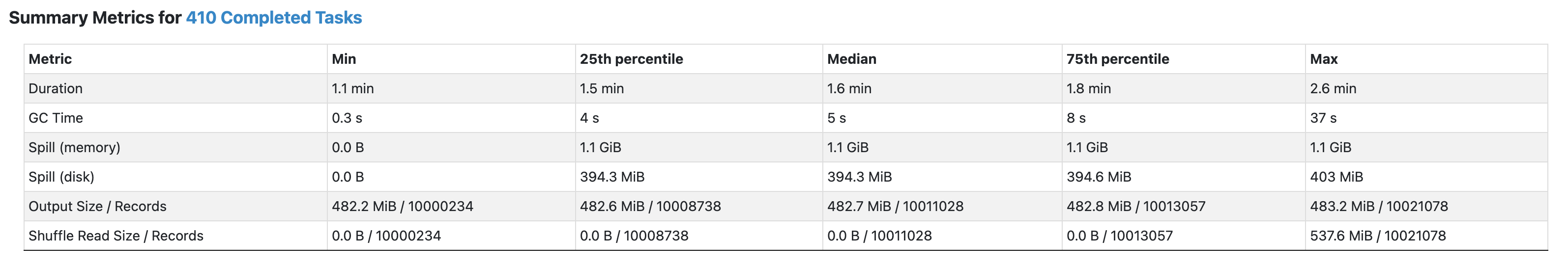

…ks` percentile metrics ### What changes were proposed in this pull request? #### Background In PR #26508 (SPARK-26260) the SHS stage metric percentiles were updated to only include successful tasks when using disk storage. It did this by making the values for each metric negative when the task is not in a successful state. This approach was chosen to avoid breaking changes to disk storage. See [this comment](#26508 (comment)) for context. To get the percentiles, it reads the metric values, starting at 0, in ascending order. This filters out all tasks that are not successful because the values are less than 0. To get the percentile values it scales the percentiles to the list index of successful tasks. For example if there are 200 tasks and you want percentiles [0, 25, 50, 75, 100] the lookup indexes in the task collection are [0, 50, 100, 150, 199]. #### Issue For metrics 1) shuffle total reads and 2) shuffle total blocks, PR #26508 incorrectly makes the metric indices positive. This means tasks that are not successful are included in the percentile calculations. The percentile lookup index calculation is still based on the number of successful task so the wrong task metric is returned for a given percentile. This was not caught because the unit test only verified values for one metric, `executorRunTime`. #### Fix The index values for `SHUFFLE_TOTAL_READS` and `SHUFFLE_TOTAL_BLOCKS` should not convert back to positive metric values for tasks that are not successful. I believe this was done because the metrics values are summed from two other metrics. Using the raw values still creates the desired outcome. `negative + negative = negative` and `positive + positive = positive`. There is no case where one metric will be negative and one will be positive. I also verified that these two metrics are only used in the percentile calculations where only successful tasks are used. ### Why are the changes needed? This change is required so that the SHS stage percentile metrics for shuffle read bytes and shuffle total blocks are correct. ### Does this PR introduce _any_ user-facing change? Yes. The user will see the correct percentile values for the stage summary shuffle read bytes. ### How was this patch tested? I updated the unit test to verify the percentile values for every task metric. I also modified the unit test to have unique values for every metric. Previously the test had the same metrics for every field. This would not catch bugs like the wrong field being read by accident. I manually validated the fix in the UI. **BEFORE**  **AFTER**  I manually validated the fix in the task summary API (`/api/v1/applications/application_123/1/stages/14/0/taskSummary\?quantiles\=0,0.25,0.5,0.75,1.0`). See `shuffleReadMetrics.readBytes` and `shuffleReadMetrics.totalBlocksFetched`. Before: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ -2.0, -2.0, -2.0, -2.0, 5.63718681E8 ], "totalBlocksFetched":[ -2.0, -2.0, -2.0, -2.0, 2.0 ], ... }, ... } ``` After: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ 5.62865286E8, 5.63779421E8, 5.63941681E8, 5.64327925E8, 5.7674183E8 ], "totalBlocksFetched":[ 2.0, 2.0, 2.0, 2.0, 2.0 ], ... } ... } ``` Closes #35637 from robreeves/SPARK-38309. Authored-by: Rob Reeves <roreeves@linkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>

…ks` percentile metrics ### What changes were proposed in this pull request? #### Background In PR #26508 (SPARK-26260) the SHS stage metric percentiles were updated to only include successful tasks when using disk storage. It did this by making the values for each metric negative when the task is not in a successful state. This approach was chosen to avoid breaking changes to disk storage. See [this comment](#26508 (comment)) for context. To get the percentiles, it reads the metric values, starting at 0, in ascending order. This filters out all tasks that are not successful because the values are less than 0. To get the percentile values it scales the percentiles to the list index of successful tasks. For example if there are 200 tasks and you want percentiles [0, 25, 50, 75, 100] the lookup indexes in the task collection are [0, 50, 100, 150, 199]. #### Issue For metrics 1) shuffle total reads and 2) shuffle total blocks, PR #26508 incorrectly makes the metric indices positive. This means tasks that are not successful are included in the percentile calculations. The percentile lookup index calculation is still based on the number of successful task so the wrong task metric is returned for a given percentile. This was not caught because the unit test only verified values for one metric, `executorRunTime`. #### Fix The index values for `SHUFFLE_TOTAL_READS` and `SHUFFLE_TOTAL_BLOCKS` should not convert back to positive metric values for tasks that are not successful. I believe this was done because the metrics values are summed from two other metrics. Using the raw values still creates the desired outcome. `negative + negative = negative` and `positive + positive = positive`. There is no case where one metric will be negative and one will be positive. I also verified that these two metrics are only used in the percentile calculations where only successful tasks are used. ### Why are the changes needed? This change is required so that the SHS stage percentile metrics for shuffle read bytes and shuffle total blocks are correct. ### Does this PR introduce _any_ user-facing change? Yes. The user will see the correct percentile values for the stage summary shuffle read bytes. ### How was this patch tested? I updated the unit test to verify the percentile values for every task metric. I also modified the unit test to have unique values for every metric. Previously the test had the same metrics for every field. This would not catch bugs like the wrong field being read by accident. I manually validated the fix in the UI. **BEFORE**  **AFTER**  I manually validated the fix in the task summary API (`/api/v1/applications/application_123/1/stages/14/0/taskSummary\?quantiles\=0,0.25,0.5,0.75,1.0`). See `shuffleReadMetrics.readBytes` and `shuffleReadMetrics.totalBlocksFetched`. Before: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ -2.0, -2.0, -2.0, -2.0, 5.63718681E8 ], "totalBlocksFetched":[ -2.0, -2.0, -2.0, -2.0, 2.0 ], ... }, ... } ``` After: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ 5.62865286E8, 5.63779421E8, 5.63941681E8, 5.64327925E8, 5.7674183E8 ], "totalBlocksFetched":[ 2.0, 2.0, 2.0, 2.0, 2.0 ], ... } ... } ``` Closes #35637 from robreeves/SPARK-38309. Authored-by: Rob Reeves <roreeves@linkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit 0ad7677) Signed-off-by: Mridul Muralidharan <mridulatgmail.com>

…ks` percentile metrics ### What changes were proposed in this pull request? #### Background In PR #26508 (SPARK-26260) the SHS stage metric percentiles were updated to only include successful tasks when using disk storage. It did this by making the values for each metric negative when the task is not in a successful state. This approach was chosen to avoid breaking changes to disk storage. See [this comment](#26508 (comment)) for context. To get the percentiles, it reads the metric values, starting at 0, in ascending order. This filters out all tasks that are not successful because the values are less than 0. To get the percentile values it scales the percentiles to the list index of successful tasks. For example if there are 200 tasks and you want percentiles [0, 25, 50, 75, 100] the lookup indexes in the task collection are [0, 50, 100, 150, 199]. #### Issue For metrics 1) shuffle total reads and 2) shuffle total blocks, PR #26508 incorrectly makes the metric indices positive. This means tasks that are not successful are included in the percentile calculations. The percentile lookup index calculation is still based on the number of successful task so the wrong task metric is returned for a given percentile. This was not caught because the unit test only verified values for one metric, `executorRunTime`. #### Fix The index values for `SHUFFLE_TOTAL_READS` and `SHUFFLE_TOTAL_BLOCKS` should not convert back to positive metric values for tasks that are not successful. I believe this was done because the metrics values are summed from two other metrics. Using the raw values still creates the desired outcome. `negative + negative = negative` and `positive + positive = positive`. There is no case where one metric will be negative and one will be positive. I also verified that these two metrics are only used in the percentile calculations where only successful tasks are used. ### Why are the changes needed? This change is required so that the SHS stage percentile metrics for shuffle read bytes and shuffle total blocks are correct. ### Does this PR introduce _any_ user-facing change? Yes. The user will see the correct percentile values for the stage summary shuffle read bytes. ### How was this patch tested? I updated the unit test to verify the percentile values for every task metric. I also modified the unit test to have unique values for every metric. Previously the test had the same metrics for every field. This would not catch bugs like the wrong field being read by accident. I manually validated the fix in the UI. **BEFORE**  **AFTER**  I manually validated the fix in the task summary API (`/api/v1/applications/application_123/1/stages/14/0/taskSummary\?quantiles\=0,0.25,0.5,0.75,1.0`). See `shuffleReadMetrics.readBytes` and `shuffleReadMetrics.totalBlocksFetched`. Before: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ -2.0, -2.0, -2.0, -2.0, 5.63718681E8 ], "totalBlocksFetched":[ -2.0, -2.0, -2.0, -2.0, 2.0 ], ... }, ... } ``` After: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ 5.62865286E8, 5.63779421E8, 5.63941681E8, 5.64327925E8, 5.7674183E8 ], "totalBlocksFetched":[ 2.0, 2.0, 2.0, 2.0, 2.0 ], ... } ... } ``` Closes #35637 from robreeves/SPARK-38309. Authored-by: Rob Reeves <roreeves@linkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit 0ad7677) Signed-off-by: Mridul Muralidharan <mridulatgmail.com>

…ks` percentile metrics ### What changes were proposed in this pull request? #### Background In PR apache#26508 (SPARK-26260) the SHS stage metric percentiles were updated to only include successful tasks when using disk storage. It did this by making the values for each metric negative when the task is not in a successful state. This approach was chosen to avoid breaking changes to disk storage. See [this comment](apache#26508 (comment)) for context. To get the percentiles, it reads the metric values, starting at 0, in ascending order. This filters out all tasks that are not successful because the values are less than 0. To get the percentile values it scales the percentiles to the list index of successful tasks. For example if there are 200 tasks and you want percentiles [0, 25, 50, 75, 100] the lookup indexes in the task collection are [0, 50, 100, 150, 199]. #### Issue For metrics 1) shuffle total reads and 2) shuffle total blocks, PR apache#26508 incorrectly makes the metric indices positive. This means tasks that are not successful are included in the percentile calculations. The percentile lookup index calculation is still based on the number of successful task so the wrong task metric is returned for a given percentile. This was not caught because the unit test only verified values for one metric, `executorRunTime`. #### Fix The index values for `SHUFFLE_TOTAL_READS` and `SHUFFLE_TOTAL_BLOCKS` should not convert back to positive metric values for tasks that are not successful. I believe this was done because the metrics values are summed from two other metrics. Using the raw values still creates the desired outcome. `negative + negative = negative` and `positive + positive = positive`. There is no case where one metric will be negative and one will be positive. I also verified that these two metrics are only used in the percentile calculations where only successful tasks are used. ### Why are the changes needed? This change is required so that the SHS stage percentile metrics for shuffle read bytes and shuffle total blocks are correct. ### Does this PR introduce _any_ user-facing change? Yes. The user will see the correct percentile values for the stage summary shuffle read bytes. ### How was this patch tested? I updated the unit test to verify the percentile values for every task metric. I also modified the unit test to have unique values for every metric. Previously the test had the same metrics for every field. This would not catch bugs like the wrong field being read by accident. I manually validated the fix in the UI. **BEFORE**  **AFTER**  I manually validated the fix in the task summary API (`/api/v1/applications/application_123/1/stages/14/0/taskSummary\?quantiles\=0,0.25,0.5,0.75,1.0`). See `shuffleReadMetrics.readBytes` and `shuffleReadMetrics.totalBlocksFetched`. Before: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ -2.0, -2.0, -2.0, -2.0, 5.63718681E8 ], "totalBlocksFetched":[ -2.0, -2.0, -2.0, -2.0, 2.0 ], ... }, ... } ``` After: ```json { "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ], "shuffleReadMetrics":{ "readBytes":[ 5.62865286E8, 5.63779421E8, 5.63941681E8, 5.64327925E8, 5.7674183E8 ], "totalBlocksFetched":[ 2.0, 2.0, 2.0, 2.0, 2.0 ], ... } ... } ``` Closes apache#35637 from robreeves/SPARK-38309. Authored-by: Rob Reeves <roreeves@linkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit 0ad7677) Signed-off-by: Mridul Muralidharan <mridulatgmail.com> (cherry picked from commit e067b12) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…sks metrics for disk store

What changes were proposed in this pull request?

After #23088 task Summary table in the stage page shows successful tasks metrics for lnMemory store. In this PR, it added for disk store also.

Why are the changes needed?

Now both InMemory and disk store will be consistent in showing the task summary table in the UI, if there are non successful tasks

Does this PR introduce any user-facing change?

no

How was this patch tested?

Added UT. Manually verified

Test steps:

sc.parallelize(1 to 1000, 2).map(x => throw new Exception("fail")).count