-

Notifications

You must be signed in to change notification settings - Fork 20

Fix sentence splitting with mecab #43

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Mmmmh I think I have a better understanding of the issue now. Basically, one line feed (\n) is swallowed by the parser, so you need two line feeds to get separated sentences, so it explains the big blobs of texts you get on the master branch. However, after more experiments it seems your text is a kind of LWT breaker, because the parsing gets really sensitive to side effects 😅 Currently, I think that the best solution is to review the MeCab parsing. It is getting increasingly complex, but yields poor results. I will try some improvements next week, if it does not work I will simply use your PR. In the meantime, texts with simple structure work fine, so a temporary solution may be manual editing... |

We use EOP (end-of-paragraph) markers instead of EOS (end-of-sentence). Parsing using PHP is a bit slower, but results are better. After 3 months trying to, I do not advise to use a SQL parsing.

|

Hi, long time no see! As expected, it took me a long time to review the Japanese parsing using SQL, and as it often happens with code not following KISS principle, the best solution was to destroy and redo everything. As far as I investigated, the code was using a lot of SQL glitches in order to assign variables, which led to very complicated debugging. I rewrote the parser using pure PHP, data are then sent to SQL. It's a bit slower, but texts are actually parsed as expected, which is better. I did not manage to get to such results with the SQL parser anyway. Thanks for the bug report and the PR. Have a great day! |

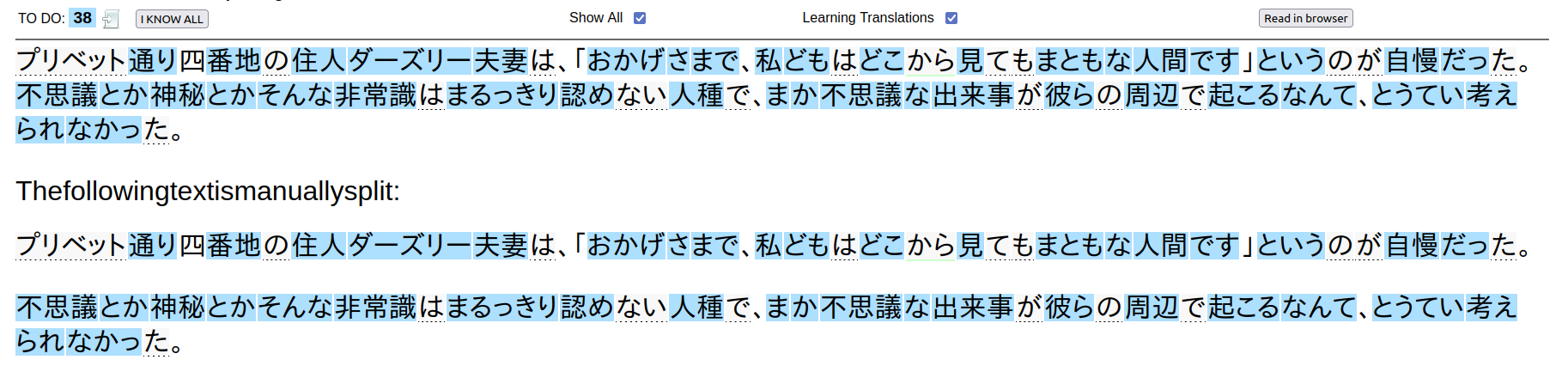

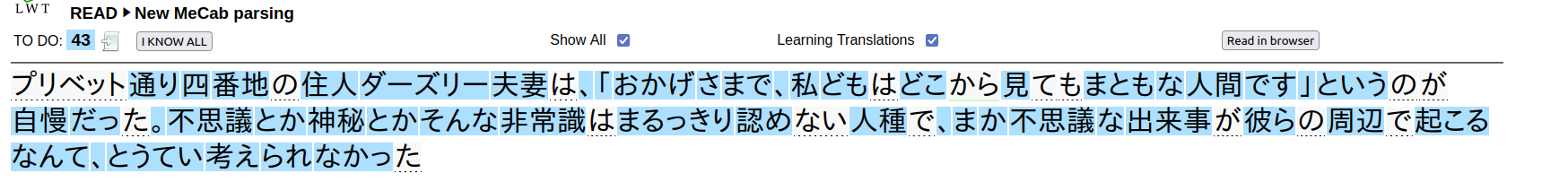

The sentence splitting wasn't working properly with mecab. It seems like the idea was to use the EOS markers, but their name is misleading and mecab doesn't actually split sentences. For example

will output

where the only EOS is at the very end to mark the end of that "chunk" of input, even though the text contains two sentences.

The workaround I wrote is pretty hacky but it seems to work well. The node_type 3 signifies a punctuation mark, and I wrote a check to skip characters that shouldn't end a sentence, like commas and quotation marks. The code also handles repeated punctiation marks properly, like a sentence ending in ?!. I'm not very experienced with mysql or php so the only way I could do this was to stuff the variable assignments into the calculations which doesn't look great...

I also had problems with the EOS markers appearing in the final text, so I just dropped them altogether since they're not used in this new code. If this looks acceptable, I can clean up the surrounding code a little and remove some of the EOS checks.

edit: I added a commit that handles more edge cases but now it looks even worse. It also doesn't handle sentences that are separated by others by newlines, such as a line that's surrounded entirely by quotation marks. I don't think there's any way to detect them from mecab's output since it doesn't retain newlines, so the proper solution to this would be to do the word splitting in php. I'm not sure how to implement that though.