- The library is faster than other libraries on most of the transformations.

- Based on numpy, OpenCV, and imgaug, picking the best from each of them.

- Simple, flexible API that allows the library to be used in any computer vision pipeline.

- Large, diverse set of transformations.

- Easy to extend the library to wrap around other libraries.

- Easy to extend to other tasks.

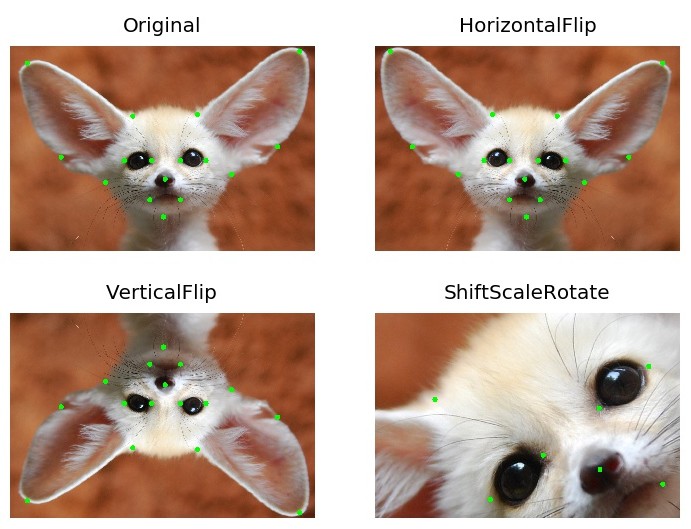

- Supports transformations on images, masks, keypoints, and bounding boxes.

- Supports Python 2.7-3.7.

- Easy integration with PyTorch.

- Easy transfer from torchvision.

- Used to achieve top results in many deep learning competitions at Kaggle, Topcoder, CVPR, and MICCAI.

- Written by Kaggle Masters.

All-in-one showcase notebook - showcase.ipynb

Classification - example.ipynb

Object detection - example_bboxes.ipynb

Non-8-bit images - example_16_bit_tiff.ipynb

Image segmentation - example_kaggle_salt.ipynb

Keypoints - example_keypoints.ipynb

Custom targets - example_multi_target.ipynb

You can use this Google Colaboratory notebook to adjust image augmentation parameters and see the resulting images.

You can use pip to install albumentations:

pip install albumentations

If you want the latest version of the code before it is released on PyPI, you can install the library from GitHub:

pip install -U git+https://github.com/albu/albumentations

It also works in Kaggle GPU kernels (proof):

!pip install albumentations > /dev/null

To install albumentations using conda, you first need to install imgaug with pip:

pip install imgaug

conda install albumentations -c albumentations

The full documentation is available at albumentations.readthedocs.io.

Pixel-level transforms change only the input image and leave any additional targets, such as masks, bounding boxes, and keypoints, unchanged. The list of pixel-level transforms:

- Blur

- CLAHE

- ChannelShuffle

- Cutout

- FromFloat

- GaussNoise

- HueSaturationValue

- IAAAdditiveGaussianNoise

- IAAEmboss

- IAASharpen

- IAASuperpixels

- InvertImg

- JpegCompression

- MedianBlur

- MotionBlur

- Normalize

- RGBShift

- RandomBrightness

- RandomBrightnessContrast

- RandomContrast

- RandomGamma

- ToFloat

- ToGray

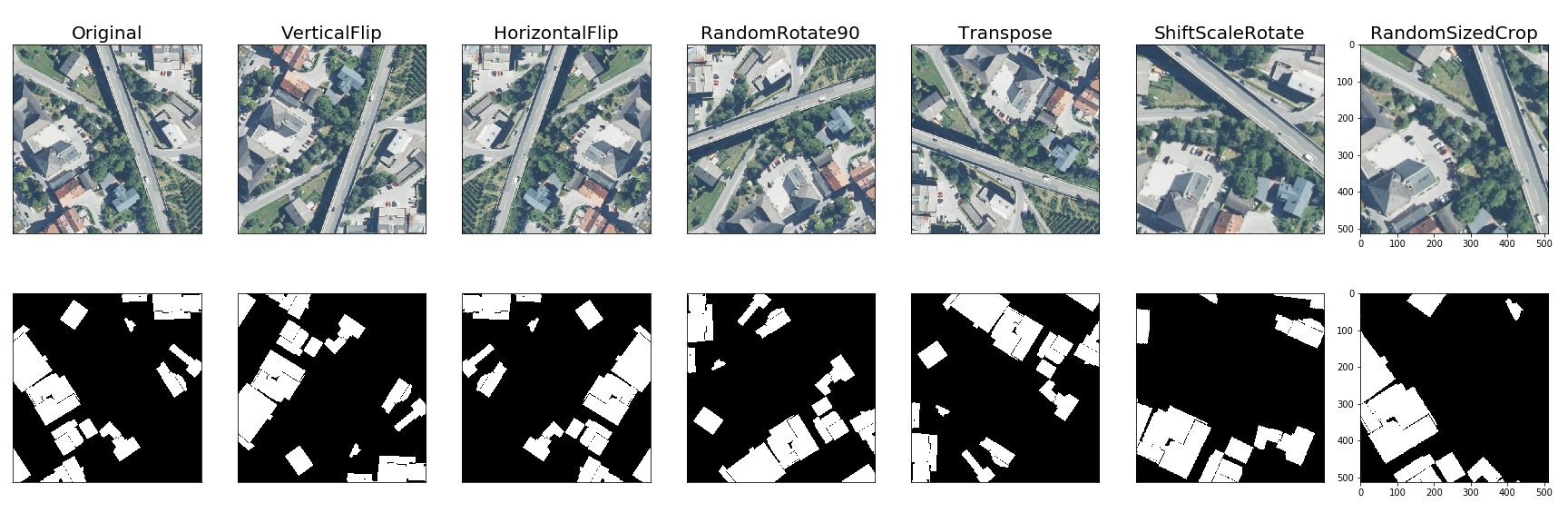
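To make the "image changes, other targets do not" contract concrete, here is a minimal NumPy sketch. The helpers `random_brightness` and `apply_pixel_level` are hypothetical and written only for illustration; they are not the library's implementation of RandomBrightness or of its internal dispatch, and the additive offset of up to `limit * 255` is an assumed brightness model.

```python
import numpy as np


def random_brightness(image, limit=0.2, rng=None):
    """Hypothetical helper: shift all uint8 pixels by one random offset in [-limit, limit] * 255."""
    rng = np.random.default_rng() if rng is None else rng
    offset = rng.uniform(-limit, limit) * 255
    # Compute in float32 so the addition cannot wrap around, then clip back to the uint8 range.
    shifted = image.astype(np.float32) + offset
    return np.clip(shifted, 0, 255).astype(np.uint8)


def apply_pixel_level(transform, **targets):
    """Hypothetical helper: apply `transform` to targets["image"] only; everything else passes through."""
    result = dict(targets)
    result["image"] = transform(targets["image"])
    return result


# Usage: the image is modified, while the mask and boxes come back as the very same objects.
image = np.full((4, 4, 3), 100, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 1
bboxes = [(1, 1, 3, 3)]
rng = np.random.default_rng(0)
result = apply_pixel_level(lambda img: random_brightness(img, rng=rng),
                           image=image, mask=mask, bboxes=bboxes)
```

Because the mask and boxes are returned untouched, a pixel-level transform can be placed anywhere in a pipeline without any bookkeeping for the other targets.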

Spatial-level transforms simultaneously change both the input image and additional targets such as masks, bounding boxes, and keypoints. The following table shows which additional targets are supported by each transform.

| Transform | Image | Masks | BBoxes | Keypoints |

|---|---|---|---|---|

| CenterCrop | ✓ | ✓ | ✓ | ✓ |

| Crop | ✓ | ✓ | ✓ | |

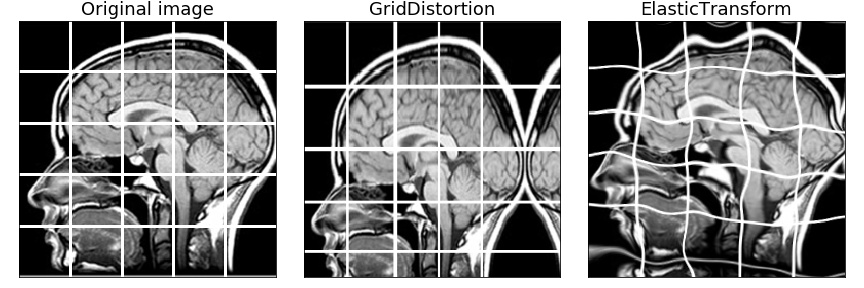

| ElasticTransform | ✓ | ✓ | ||

| Flip | ✓ | ✓ | ✓ | ✓ |

| GridDistortion | ✓ | ✓ | ||

| HorizontalFlip | ✓ | ✓ | ✓ | ✓ |

| IAAAffine | ✓ | ✓ | ✓ | ✓ |

| IAACropAndPad | ✓ | ✓ | ✓ | ✓ |

| IAAFliplr | ✓ | ✓ | ✓ | ✓ |

| IAAFlipud | ✓ | ✓ | ✓ | ✓ |

| IAAPerspective | ✓ | ✓ | ✓ | ✓ |

| IAAPiecewiseAffine | ✓ | ✓ | ✓ | ✓ |

| LongestMaxSize | ✓ | ✓ | ✓ | |

| NoOp | ✓ | ✓ | ✓ | ✓ |

| OpticalDistortion | ✓ | ✓ | ||

| PadIfNeeded | ✓ | ✓ | ✓ | ✓ |

| RandomCrop | ✓ | ✓ | ✓ | ✓ |

| RandomCropNearBBox | ✓ | ✓ | ✓ | |

| RandomRotate90 | ✓ | ✓ | ✓ | ✓ |

| RandomScale | ✓ | ✓ | ✓ | ✓ |

| RandomSizedBBoxSafeCrop | ✓ | ✓ | ✓ | |

| RandomSizedCrop | ✓ | ✓ | ✓ | ✓ |

| Resize | ✓ | ✓ | ✓ | |

| Rotate | ✓ | ✓ | ✓ | ✓ |

| ShiftScaleRotate | ✓ | ✓ | ✓ | ✓ |

| SmallestMaxSize | ✓ | ✓ | ✓ | |

| Transpose | ✓ | ✓ | ✓ | |

| VerticalFlip | ✓ | ✓ | ✓ | ✓ |
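What "simultaneously change" means for a horizontal flip can be sketched in plain NumPy. This is an illustration under assumed conventions, not the library's code: boxes are taken as `(x_min, y_min, x_max, y_max)` in pixel-edge coordinates (the image spans `[0, width]`), and keypoints as integer `(x, y)` pixel indices. Albumentations supports other box formats and keypoint attributes, so its exact arithmetic may differ; `hflip_targets` is a hypothetical name.

```python
import numpy as np


def hflip_targets(image, mask, bboxes, keypoints):
    """Hypothetical helper: horizontally flip an image and keep its spatial targets in sync."""
    width = image.shape[1]
    flipped_image = image[:, ::-1].copy()
    flipped_mask = mask[:, ::-1].copy()  # the mask is flipped exactly like the image
    # A box edge at x moves to width - x, so x_min and x_max swap roles.
    flipped_bboxes = [(width - x_max, y_min, width - x_min, y_max)
                      for x_min, y_min, x_max, y_max in bboxes]
    # Pixel column x moves to column width - 1 - x; rows (y) are unchanged.
    flipped_keypoints = [(width - 1 - x, y) for x, y in keypoints]
    return flipped_image, flipped_mask, flipped_bboxes, flipped_keypoints


# Usage: one object described three ways (mask, box, keypoint) stays consistent after the flip.
image = np.zeros((4, 6), dtype=np.uint8)
image[2, 1] = 255            # bright pixel at row y=2, column x=1
mask = np.zeros((4, 6), dtype=np.uint8)
mask[0:2, 0:3] = 1           # object covering columns 0..2 of rows 0..1
bboxes = [(0, 0, 3, 2)]      # the same object as a pixel-edge box
keypoints = [(1, 2)]         # the bright pixel as an (x, y) keypoint

img_f, mask_f, bboxes_f, kps_f = hflip_targets(image, mask, bboxes, keypoints)
```

The point of the sketch is that every target must be updated with the same geometric mapping; a transform missing a check mark in the table above simply does not implement that mapping for the corresponding target.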

Migrating from torchvision to albumentations is simple - you just need to change a few lines of code.

Albumentations has equivalents for common torchvision transforms, as well as plenty of transforms that are not present in torchvision.

migrating_from_torchvision_to_albumentations.ipynb shows how one can migrate code from torchvision to albumentations.

To run the benchmark yourself, follow the instructions in benchmark/README.md.

Results for running the benchmark on the first 2000 images from the ImageNet validation set using an Intel Core i7-7800X CPU. The table shows how many images per second can be processed on a single core; higher is better.

| | albumentations 0.1.11 | imgaug 0.2.7 | torchvision (Pillow backend) 0.2.1 | torchvision (Pillow-SIMD backend) 0.2.1 | Keras 2.2.4 | Augmentor 0.2.3 | solt 0.1.3 |
|---|---|---|---|---|---|---|---|
| RandomCrop64 | 754387 | 6730 | 94557 | 97446 | - | 69562 | 7932 |
| PadToSize512 | 7516 | - | 798 | 772 | - | - | 3102 |
| Resize512 | 2898 | 1272 | 379 | 1441 | - | 378 | 1822 |
| HorizontalFlip | 1320 | 1008 | 6475 | 5972 | 1093 | 6346 | 1154 |
| VerticalFlip | 11048 | 5429 | 7845 | 8213 | 10760 | 7677 | 3823 |
| Rotate | 1079 | 772 | 124 | 206 | 37 | 52 | 267 |
| ShiftScaleRotate | 2198 | 1223 | 107 | 184 | 40 | - | - |
| Brightness | 772 | 884 | 425 | 563 | 199 | 425 | 134 |
| Contrast | 894 | 826 | 304 | 401 | - | 303 | 1028 |
| BrightnessContrast | 690 | 408 | 173 | 229 | - | 173 | 119 |
| ShiftHSV | 216 | 151 | 57 | 74 | - | - | 142 |
| ShiftRGB | 728 | 884 | - | - | 665 | - | - |
| Gamma | 1151 | - | 1655 | 1692 | - | - | 918 |
| Grayscale | 2710 | 509 | 1183 | 1515 | - | 2891 | 3872 |

Python and library versions: Python 3.6.8 | Anaconda, numpy 1.16.1, pillow 5.4.1, pillow-simd 5.3.0.post0, opencv-python 4.0.0.21, scikit-image 0.14.2, scipy 1.2.0.
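The images-per-second metric can be reproduced in spirit with a small timing loop. This is a hypothetical sketch, not the code in benchmark/: `images_per_second` is an illustrative name, synthetic arrays stand in for the ImageNet subset, and the sketch does not itself pin execution to a single core. Taking the best of several repeats is a design choice that reduces noise from other processes.

```python
import time

import numpy as np


def images_per_second(transform, images, repeats=3):
    """Hypothetical helper: time `transform` over `images`, return the best throughput seen."""
    best = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        for img in images:
            transform(img)
        # Guard against a zero timer delta on very fast runs.
        elapsed = max(time.perf_counter() - start, 1e-9)
        best = max(best, len(images) / elapsed)
    return best


# Usage with synthetic uint8 images and a flip-like operation.
rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8) for _ in range(20)]
hflip_ips = images_per_second(lambda img: np.ascontiguousarray(img[:, ::-1]), images)
print(f"flip-like op: {hflip_ips:.0f} images/sec")
```

Absolute numbers from such a loop depend heavily on hardware, image size, and library versions, so they are only comparable when measured under the same conditions, as in the table above.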

- Clone the repository:

git clone git@github.com:albu/albumentations.git
cd albumentations

- Install the library in development mode:

pip install -e .[tests]

- Run tests:

pytest

- Run flake8 to perform PEP8 and PEP257 style checks and to check the code for lint errors:

flake8

- Go to the docs/ directory:

cd docs

- Install the required libraries:

pip install -r requirements.txt

- Build the HTML files:

make html

- Open _build/html/index.html in a browser.

Alternatively, you can start a web server that automatically rebuilds the documentation whenever a change is detected by running:

make livehtml

On some systems, in multi-GPU mode, PyTorch may deadlock the DataLoader if OpenCV was compiled with OpenCL optimizations. Adding the following lines before importing the library may help. For more details, see pytorch/pytorch#1355.

import cv2

cv2.setNumThreads(0)

cv2.ocl.setUseOpenCL(False)

If you find this library useful for your research, please consider citing:

@article{2018arXiv180906839B,

author = {A. Buslaev and A. Parinov and E. Khvedchenya and V.~I. Iglovikov and A.~A. Kalinin},

title = "{Albumentations: fast and flexible image augmentations}",

journal = {ArXiv e-prints},

eprint = {1809.06839},

year = 2018

}