Deep learning has no theoretical limit on what it can learn, and it routinely beats its benchmarks. This repository includes project implementations that I couldn't have imagined before. You can find the source code and documentation as step-by-step tutorials.

1- Facial Expression Recognition Code, Tutorial

This is a custom CNN model trained on the Kaggle FER 2013 data set. It runs fast and produces satisfactory results.

2- Real Time Facial Expression Recognition Code, Tutorial

This adapts the same model to real-time video.

3- Face Recognition With Oxford VGG-Face Model Code, Tutorial

The Oxford Visual Geometry Group's VGG model is famous for its strong scores in the ImageNet contest. The group retrained (almost) the same network structure for face recognition. This implementation is mainly based on convolutional neural networks, autoencoders and transfer learning. The model has ranked at the top of the leaderboard in face recognition challenges.

4- Real Time Deep Face Recognition Implementation with VGG-Face Code, Video

This is the real-time implementation of the VGG-Face model for face recognition.

5- Face Recognition with Google FaceNet Model Code, Tutorial

This is an alternative to the Oxford VGG-Face model. Even though FaceNet has a more complex structure, it ran slower and was less accurate than VGG-Face in my observations and experiments.

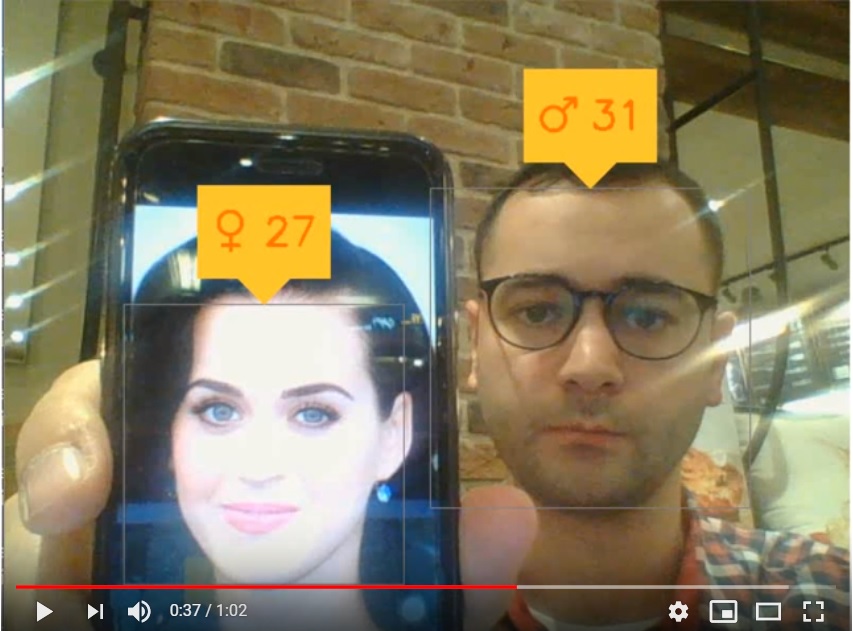

6- Apparent Age and Gender Prediction Code for age, Code for gender, Tutorial

This time we used the VGG-Face model for apparent age prediction by applying transfer learning. Locking (freezing) the weights of the early layers means only the later layers are trained, so results come quickly.

7- Real Time Age and Gender Prediction Code, Video

This is a real-time implementation of apparent age and gender prediction.

8- Making Arts with Deep Learning: Artistic Style Transfer Code, Tutorial

What if Vincent van Gogh had painted the Bosphorus in Istanbul? Today we can answer this question. A deep learning technique named artistic style transfer can transform ordinary images into masterpieces.

9- Autoencoder and clustering Code, Tutorial

We can use neural networks to represent data. If you design a neural network that is symmetric about its middle layer and it can restore the input data with an acceptable loss, then the output of that middle layer can represent the input data. Such representations can contribute to any field of deep learning, such as face recognition, style transfer or just clustering.
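As a rough illustration of this idea, the sketch below trains a tiny linear autoencoder in plain Python: two inputs are squeezed into a single middle value and then restored. It is not the repository's Keras model. The names `train_autoencoder` and `encode`, the toy data, the starting weights, the learning rate and the epoch count are all assumptions chosen for the example.

```python
# Toy data: 2-D points that all lie on the line y = 2x (assumed for illustration).
DATA = [(x, 2 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(enc, point):
    """Middle-layer output: a single number that represents the 2-D point."""
    return enc[0] * point[0] + enc[1] * point[1]


def reconstruction_loss(enc, dec, data):
    """Mean squared reconstruction error over the data set."""
    total = 0.0
    for point in data:
        z = encode(enc, point)
        total += sum((dec[i] * z - point[i]) ** 2 for i in range(2))
    return total / len(data)


def train_autoencoder(data, lr=0.02, epochs=2000):
    enc = [0.5, 0.5]  # encoder weights: 2 inputs -> 1 code value
    dec = [0.5, 0.5]  # decoder weights: 1 code value -> 2 outputs
    initial = reconstruction_loss(enc, dec, data)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for point in data:
            z = encode(enc, point)
            err = [dec[i] * z - point[i] for i in range(2)]
            # Gradient of the squared error with respect to the code value z.
            dz = sum(2 * err[i] * dec[i] for i in range(2))
            for i in range(2):
                g_dec[i] += 2 * err[i] * z
                g_enc[i] += dz * point[i]
        # Full-batch gradient descent step on both halves of the network.
        for i in range(2):
            enc[i] -= lr * g_enc[i] / len(data)
            dec[i] -= lr * g_dec[i] / len(data)
    return enc, dec, initial, reconstruction_loss(enc, dec, data)


if __name__ == "__main__":
    enc, dec, initial, final = train_autoencoder(DATA)
    print("loss before:", initial, "after:", final)
    print("code for (1, 2):", encode(enc, (1.0, 2.0)))
```

Once the reconstruction loss is small, the single value returned by `encode` stands in for the original pair, which is the kind of compact representation a clustering step could work on.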

10- Convolutional Autoencoder and clustering Code, Tutorial

We can adapt the same representation approach to convolutional neural networks, too.

11- Transfer Learning: Consuming InceptionV3 to Classify Cat and Dog Images in Keras Code, Tutorial

We can reuse the results of other researchers with little effort. Google researchers competed in the ImageNet competition and reached 97% accuracy. We adapt Google's Inception V3 model to classify cat and dog images.

12- Handwritten Digit Classification Using Neural Networks Code, Tutorial

In the past, we had to apply feature extraction to a data set before we could use neural networks. Deep learning lets us skip this step: we just feed the data, and the deep neural network extracts the features itself. Here, we feed handwritten digit data (MNIST) to a deep neural network and expect it to learn the digits.

13- Handwritten Digit Recognition Using Convolutional Neural Networks with Keras Code, Tutorial

Convolutional neural networks work similarly to the human brain. People look for certain patterns when classifying objects. For example, the shape of the mouth, nose and ears is enough to recognize a cat. We don't look at all the pixels; we focus on some areas. Likewise, a CNN applies filters to detect these kinds of shapes. CNNs perform better than conventional neural networks: here, we got almost 2% higher accuracy than with fully connected neural networks.
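To make the idea of a filter concrete, here is a minimal sketch in plain Python rather than the repository's Keras code. The helper `convolve2d`, the toy image and the hand-picked kernel are assumptions for illustration; in a real CNN the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning frameworks, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 image: dark (0) on the left half, bright (1) on the right half.
IMAGE = [[0, 0, 1, 1] for _ in range(4)]

# A 2x2 kernel that responds where brightness increases from left to right.
VERTICAL_EDGE = [[-1, 1], [-1, 1]]

if __name__ == "__main__":
    for row in convolve2d(IMAGE, VERTICAL_EDGE):
        print(row)
```

The output is non-zero only in the column where the dark and bright halves meet, so the filter reacts to one local shape and ignores the rest of the pixels.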

The following curriculum includes the source code and notebooks covered in the TensorFlow 101: Introduction to Deep Learning online course published on Udemy.

1- Installing TensorFlow and Prerequisites Video

2- Jupyter notebook Video

3- Hello, TensorFlow! Building Deep Neural Networks Classifier Model Code

1- Restoring and Working on Already Trained DNN In TensorFlow Code

The costly operation in neural networks is learning. You may spend hours training a neural network model. On the other hand, you can run the model in seconds after training. We'll cover how to store trained models and restore them.

2- Importing Saved TensorFlow DNN Classifier Model in Java Code

You can handle training with TensorFlow in Python and then call the trained model from Java in your production pipeline.

1- Monitoring Model Evaluation Metrics in TensorFlow and TensorBoard Code

1- Building a DNN Regressor for Non-Linear Time Series in TensorFlow Code

2- Visualizing ML Results with Matplotlib and Embed them in TensorBoard Code

1- Unsupervised learning and k-means clustering with TensorFlow Code

We are strong at supervised learning, but today most of the data we have is unlabeled. Unsupervised learning is an essential field of machine learning.

2- Applying k-means clustering to n-dimensional datasets in TensorFlow Code

We can visualize clustering results in 2 and 3 dimensions, but the algorithm still works in higher dimensions even though we cannot visualize them. Here, we apply k-means to an n-dimensional data set but visualize it in 3 dimensions.
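The point about dimensionality can be sketched in plain Python. This is not the repository's TensorFlow code: the function `kmeans`, its naive initialization from the first k points, the fixed iteration count and the toy 4-dimensional data are assumptions for illustration.

```python
import math


def kmeans(points, k, iterations=20):
    """Basic k-means for points of any dimension.

    Assumption: centroids start at the first k points, which is simple
    but not a robust initialization for real data.
    """
    centroids = [list(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignments


# Toy 4-dimensional data with two well-separated groups (assumed values).
POINTS = [
    (0.0, 0.0, 0.0, 0.0),
    (10.0, 10.0, 10.0, 10.0),
    (0.2, 0.0, 0.0, 0.2),
    (10.2, 10.0, 9.8, 10.0),
]

if __name__ == "__main__":
    centroids, assignments = kmeans(POINTS, k=2)
    print(assignments)
    print(centroids)
```

Nothing in the distance or mean calculation depends on the number of coordinates, which is why the same code works for 2, 3 or n dimensions; only the plotting step is limited to 3.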

1- Optimization Algorithms in TensorFlow Code

2- Activation Functions in TensorFlow Code

1- Installing Keras Code

2- Building DNN Classifier with Keras Code

3- Storing and restoring a trained neural network model with Keras Code

1- How single layer perceptron works Code

This is an implementation of the 1957 perceptron model.
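A minimal sketch of the classic perceptron learning rule, assuming a step activation and the logical AND function as toy training data. The function names, the learning rate and the epoch count are illustrative and not taken from the repository's code.

```python
def predict(weights, bias, inputs):
    """Step activation: fire (1) only if the weighted sum is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron rule: nudge the weights by the error on each sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Truth table of logical AND, which is linearly separable.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_perceptron(AND_GATE)
    for inputs, target in AND_GATE:
        print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

A single-layer perceptron can only learn linearly separable functions such as AND; the same loop would never settle on XOR.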

2- Gradient Vanishing Problem Code, Tutorial

Why have legacy activation functions such as sigmoid and tanh faded into the pages of history?
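One way to see the problem, as a simplified sketch: backpropagation multiplies one activation derivative per layer, and the derivative of sigmoid never exceeds 0.25. The code below ignores the weights and assumes every layer sees the same pre-activation value `z` (both are simplifying assumptions). ReLU is included only as a point of comparison and is my addition, not something named in the text above.

```python
import math


def sigmoid_grad(z):
    """Derivative of the sigmoid function; its maximum is 0.25 at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def tanh_grad(z):
    """Derivative of tanh; it shrinks quickly as |z| grows."""
    return 1.0 - math.tanh(z) ** 2


def relu_grad(z):
    """Derivative of ReLU; exactly 1 for any positive input."""
    return 1.0 if z > 0 else 0.0


def gradient_scale(grad_fn, layers, z=2.0):
    """Product of activation derivatives across a stack of layers.

    Assumption: every layer has the same pre-activation value z and the
    weights are ignored, so this only shows the trend, not a real network.
    """
    scale = 1.0
    for _ in range(layers):
        scale *= grad_fn(z)
    return scale


if __name__ == "__main__":
    for name, fn in (("sigmoid", sigmoid_grad), ("tanh", tanh_grad), ("relu", relu_grad)):
        print(name, gradient_scale(fn, layers=10))
```

Under these assumptions the sigmoid and tanh products collapse toward zero within ten layers, so the early layers receive almost no learning signal, while the ReLU product stays at 1.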

This repository is licensed under the MIT license. See LICENSE for more details.