
Disable test(s) that fail often on GitHub CI for Windows/MPI #1398


Codecov Report

| Coverage diff | main | #1398 | +/- |
|---|---|---|---|
| Coverage | 95.44% | 95.97% | +0.54% |
| Files | 351 | 351 | |
| Lines | 29122 | 29122 | |
| Hits | 27793 | 27949 | +156 |
| Misses | 1329 | 1173 | -156 |


Alright... after a lot of trial and error, I am now relatively confident that this represents a working set of MPI-parallel tests for GitHub Actions on Windows. The good news is that this set has run successfully at least 4 times in a row without failure. The bad news? I had to completely disable all parallel tests of the P4estMesh.

Where do we go from here? My working assumption is still that there might be a fundamental problem with memory usage, although I find this less and less convincing. Alternatively, there might be an issue with p4est on Windows, or a more fundamental issue with MicrosoftMPI. None of these causes can fully explain why the failures were intermittent, why they affected only some tests rather than all of them, and why TreeMesh tests are failing as well.

My suggestion would be to merge this PR (possibly after cleaning up the p4est tests file, since it can be excluded in toto) and then to create an issue so that this can be investigated more thoroughly in the future. Thoughts, suggestions?


Thanks a lot for looking into this issue! I just have a minor suggestion to discuss.

Co-authored-by: Hendrik Ranocha <ranocha@users.noreply.github.com>


Thanks!

In the past weeks/months, we have had a very high failure rate for CI tests on Windows with MPI. These failures are intermittent and cannot reasonably be traced back to any actual programming error. Some examples:

https://github.com/trixi-framework/Trixi.jl/actions/runs/4703123828/jobs/8341232910

https://github.com/trixi-framework/Trixi.jl/actions/runs/4710627754/jobs/8362973268

https://github.com/trixi-framework/Trixi.jl/actions/runs/4714298641/jobs/8360570746

https://github.com/trixi-framework/Trixi.jl/actions/runs/4698811234/jobs/8351234657

https://github.com/trixi-framework/Trixi.jl/actions/runs/4694820492/jobs/8323339119

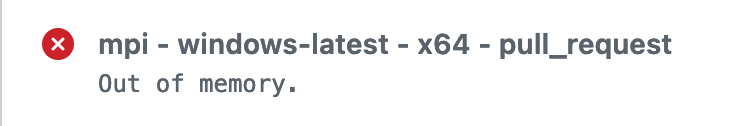

Our working hypothesis is that the GitHub runners run out of memory. At least some of the failed tests support this: for them, the test failure overview shows the following statement:

This happened, e.g., here:

https://github.com/trixi-framework/Trixi.jl/actions/runs/4702374974

The goal of this PR is to disable those tests that fail only on Windows so that the MPI tests pass reliably again. The underlying problem should then be fixed properly (e.g., by reducing the test size) in a subsequent PR.