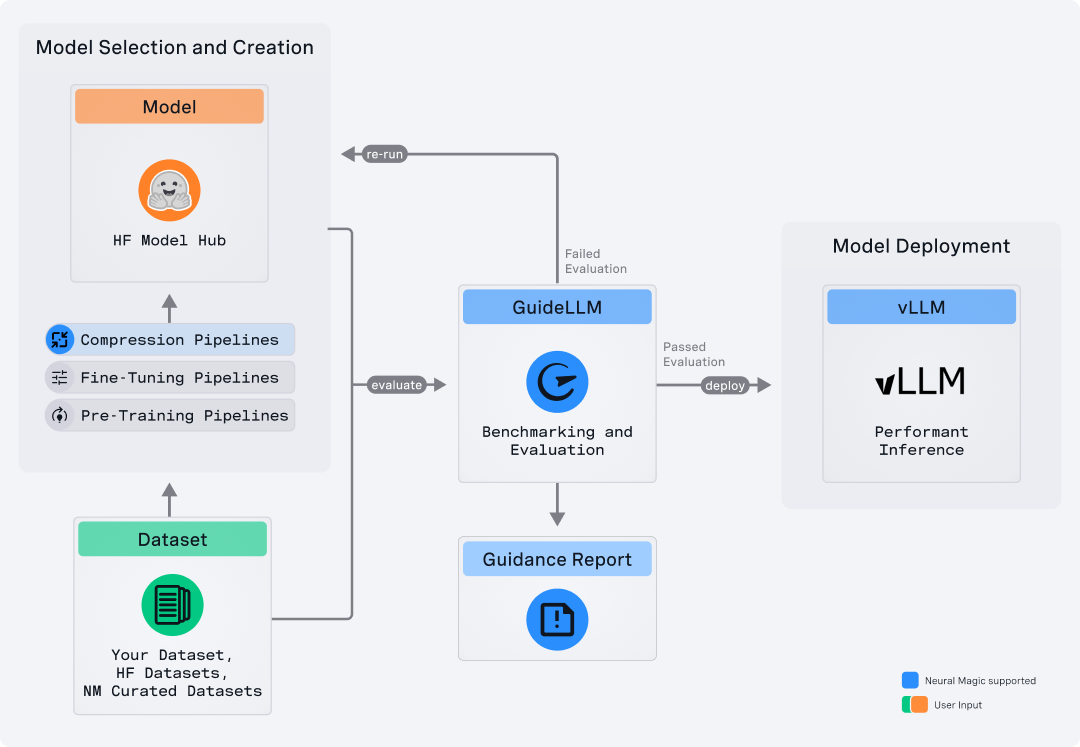

GuideLLM is a platform for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality.

- Performance Evaluation: Analyze LLM inference under different load scenarios to ensure your system meets your service level objectives (SLOs).

- Resource Optimization: Determine the most suitable hardware configurations for running your models effectively.

- Cost Estimation: Understand the financial impact of different deployment strategies and make informed decisions to minimize costs.

- Scalability Testing: Simulate scaling to handle large numbers of concurrent users without degradation in performance.

Before installing, ensure you have the following prerequisites:

- OS: Linux or macOS

- Python: 3.9 – 3.13
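To check these prerequisites before installing, you can run a short standalone script with the interpreter you plan to use. This is a minimal sketch and not part of GuideLLM. It only encodes the OS and version range listed above, and the helper names `is_supported_python` and `is_supported_os` are hypothetical.

```python
import sys

# Supported range taken from the prerequisites above: Python 3.9 through 3.13.
MIN_PYTHON = (3, 9)
MAX_PYTHON = (3, 13)


def is_supported_python(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor) version falls within the supported range."""
    major_minor = tuple(version_info[:2])
    return MIN_PYTHON <= major_minor <= MAX_PYTHON


def is_supported_os(platform: str = sys.platform) -> bool:
    """Return True on Linux ("linux...") or macOS ("darwin")."""
    return platform.startswith("linux") or platform == "darwin"


if __name__ == "__main__":
    version = ".".join(str(part) for part in sys.version_info[:3])
    print(f"Python {version} supported: {is_supported_python()}")
    print(f"OS {sys.platform!r} supported: {is_supported_os()}")
```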

The latest GuideLLM release can be installed using pip:

```bash
pip install guidellm
```

Or from source code using pip:

```bash
pip install git+https://github.com/neuralmagic/guidellm.git
```

For detailed installation instructions and requirements, see the Installation Guide.

GuideLLM requires an OpenAI-compatible server to run evaluations. vLLM is recommended for this purpose. After installing vLLM on your desired server (`pip install vllm`), start a vLLM server with a quantized Llama 3.1 8B model by running the following command:

```bash
vllm serve "neuralmagic/Meta-Llama-3.1-8B-Instruct-quantized.w4a16"
```

For more information on starting a vLLM server, see the vLLM Documentation.

For information on starting other supported inference servers or platforms, see the Supported Backends documentation.

To run a GuideLLM benchmark, use the `guidellm benchmark` command with the target set to an OpenAI-compatible server. In this example, the target is `http://localhost:8000`, which assumes vLLM is running on the same machine; update it to match your server. By default, GuideLLM automatically detects the model available on the server and uses it. To target a different model, pass its name with the `--model` argument. The `--rate-type` is set to `sweep`, which runs a range of benchmarks after automatically determining the minimum and maximum rates the server and model can support. Each benchmark in the sweep runs for 30 seconds, as set by `--max-seconds`. Finally, `--data` is set to a synthetic dataset with 256 prompt tokens and 128 output tokens per request. For more arguments, supported scenarios, and configurations, jump to the Configurations section or run `guidellm benchmark --help`.
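If you want to see which model names the server reports (for example, to pick a value for `--model`), you can query it yourself before benchmarking. The sketch below assumes the server exposes the standard OpenAI-compatible `GET /v1/models` endpoint, which vLLM's OpenAI-compatible server provides. It uses only the Python standard library, the helper names are hypothetical, and it is not necessarily how GuideLLM itself detects the model.

```python
import json
import urllib.request


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model IDs from an OpenAI-style model list response ({"data": [{"id": ...}]})."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(target: str = "http://localhost:8000", timeout: float = 5.0) -> list[str]:
    """Fetch GET {target}/v1/models and return the model IDs it lists."""
    url = target.rstrip("/") + "/v1/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return parse_model_ids(payload)


if __name__ == "__main__":
    # Requires a running OpenAI-compatible server at the target URL.
    print(list_models())
```

With the vLLM server from the previous step running with default settings, the returned list should contain the served model name, which by default is the model argument passed to `vllm serve`.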

Now, to start benchmarking, run the following command:

```bash
guidellm benchmark \
  --target "http://localhost:8000" \
  --rate-type sweep \
  --max-seconds 30 \
  --data "prompt_tokens=256,output_tokens=128"
```

The above command will begin the evaluation and provide progress updates similar to the following:

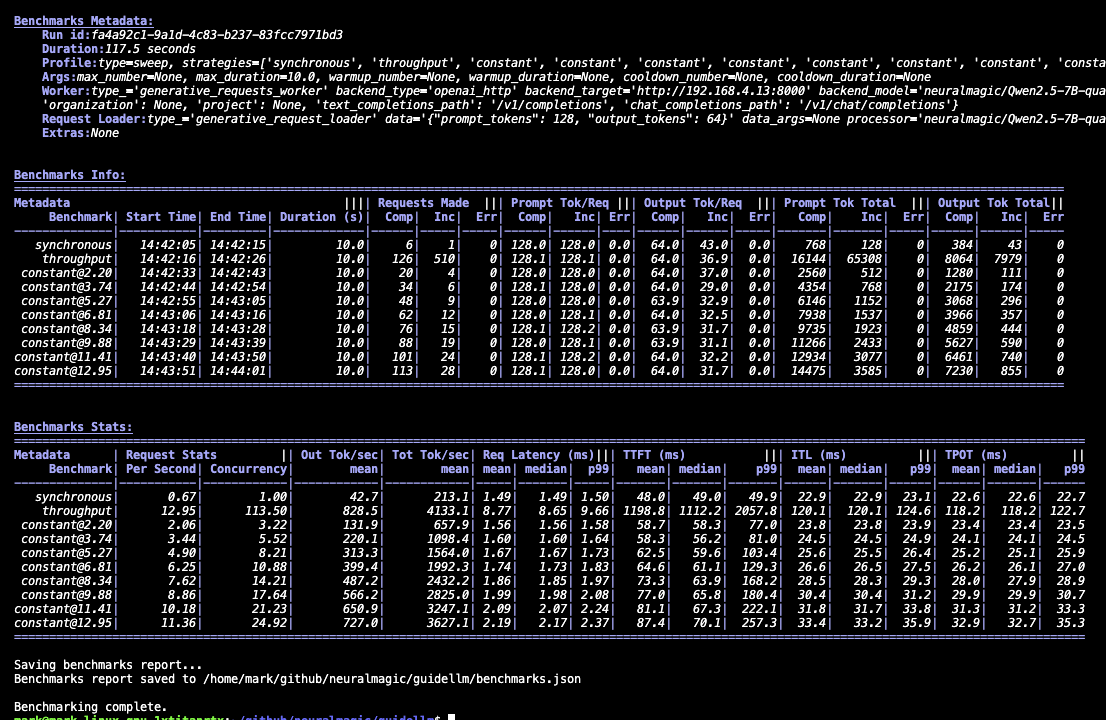

After the evaluation is completed, GuideLLM will summarize the results into three sections:

- Benchmarks Metadata: A summary of the benchmark run and the arguments used to create it, including the server, data, profile, and more.

- Benchmarks Info: A high-level view of each benchmark and the requests that were run, including the type, duration, request statuses, and number of tokens.

- Benchmarks Stats: A summary of the statistics for each benchmark run, including the request rate, concurrency, latency, and token-level metrics such as TTFT, ITL, and more.

The sections will look similar to the following:

For further details about the metrics and definitions, view the Metrics documentation.

By default, the full results, including full statistics and request data, are saved to a file named `benchmarks.json` in the current working directory. This file can be used for reporting or reloaded into Python for further analysis using the `guidellm.benchmark.GenerativeBenchmarksReport` class. You can specify a different file name and extension with the `--output-path` argument.
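For a quick look at the file without depending on the `GenerativeBenchmarksReport` API, you can also read it with the standard library. This is a minimal sketch: it assumes the JSON output holds a top-level object with a `benchmarks` list, which you should confirm against your own file, and `load_report` and `summarize` are hypothetical helper names.

```python
import json
from pathlib import Path


def load_report(path="benchmarks.json") -> dict:
    """Parse a GuideLLM results file saved in JSON format."""
    with Path(path).open(encoding="utf-8") as handle:
        return json.load(handle)


def summarize(report: dict) -> list[str]:
    """Describe each entry under the (assumed) top-level "benchmarks" key."""
    lines = []
    for index, benchmark in enumerate(report.get("benchmarks", [])):
        # List the field names so you can see what each benchmark records.
        keys = ", ".join(sorted(benchmark))
        lines.append(f"benchmark {index}: {keys}")
    return lines


if __name__ == "__main__":
    for line in summarize(load_report()):
        print(line)
```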

For further details about the supported output file types, view the Outputs documentation.

The results from GuideLLM can be used to optimize your LLM deployment for performance, resource efficiency, and cost. By analyzing the performance metrics, you can identify bottlenecks, determine the optimal request rate, and select the most cost-effective hardware configuration for your deployment.

For example, if we are deploying a chat application, we likely want to guarantee that time to first token (TTFT) and inter-token latency (ITL) stay under certain thresholds to meet our service level objectives (SLOs) or agreements (SLAs). Setting TTFT to 200ms and ITL to 25ms for the sample data in the example above, we can see that even though the server can handle up to 13 requests per second, we would only be able to meet our SLOs for 99% of users at a request rate of 3.5 requests per second. If we relax the ITL constraint to 50ms, we can meet our SLOs for 99% of users at a request rate of roughly 10 requests per second.
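The selection described above amounts to filtering the benchmarks in a sweep by their 99th percentile latencies and keeping the highest rate that passes. The sketch below illustrates that logic only. `RateResult` and `max_rate_meeting_slos` are hypothetical names, the numbers are invented to mirror the narrative above rather than measured GuideLLM output, and extracting p99 TTFT and ITL from a real report depends on its actual field names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RateResult:
    rate: float         # requests per second for one benchmark in the sweep
    p99_ttft_ms: float  # 99th percentile time to first token, in ms
    p99_itl_ms: float   # 99th percentile inter-token latency, in ms


def max_rate_meeting_slos(results, ttft_slo_ms: float, itl_slo_ms: float) -> Optional[float]:
    """Highest rate whose p99 TTFT and p99 ITL both stay within the SLOs, or None."""
    passing = [
        r.rate
        for r in results
        if r.p99_ttft_ms <= ttft_slo_ms and r.p99_itl_ms <= itl_slo_ms
    ]
    return max(passing) if passing else None


# Invented numbers for illustration only; substitute values from your own report.
example = [
    RateResult(rate=1.0, p99_ttft_ms=80.0, p99_itl_ms=12.0),
    RateResult(rate=3.5, p99_ttft_ms=150.0, p99_itl_ms=22.0),
    RateResult(rate=7.0, p99_ttft_ms=180.0, p99_itl_ms=38.0),
    RateResult(rate=10.0, p99_ttft_ms=195.0, p99_itl_ms=48.0),
    RateResult(rate=13.0, p99_ttft_ms=900.0, p99_itl_ms=70.0),
]

print(max_rate_meeting_slos(example, ttft_slo_ms=200, itl_slo_ms=25))  # 3.5
print(max_rate_meeting_slos(example, ttft_slo_ms=200, itl_slo_ms=50))  # 10.0
```

Taking the maximum passing rate assumes latency grows with load. If noise lets a higher rate pass after a lower one fails, a more conservative choice is the highest passing rate below the first failure.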

For further details about determining the optimal request rate and SLOs, view the SLOs documentation.

GuideLLM provides a variety of configurations through both the `guidellm benchmark` CLI command and environment variables, which handle default values and more granular controls. The most common configurations are listed below. For a complete list, run `guidellm benchmark --help` for CLI arguments or `guidellm config` for environment variables.

The guidellm benchmark command is used to run benchmarks against a generative AI backend/server. The command accepts a variety of arguments to customize the benchmark run. The most common arguments include:

- `--target`: Specifies the target path for the backend to run benchmarks against, for example `http://localhost:8000`. This is required to define the server endpoint.

- `--model`: Allows selecting a specific model from the server. If not provided, it defaults to the first model available on the server. Useful when multiple models are hosted on the same server.

- `--processor`: Used only for synthetic data creation or when the token source configuration is set to local for calculating token metrics locally. It must match the model's processor/tokenizer to ensure compatibility and correctness. This supports either a HuggingFace model ID or a local path to a processor/tokenizer.

- `--data`: Specifies the dataset to use. This can be a HuggingFace dataset ID, a local path to a dataset, or standard text files such as CSV, JSONL, and more. Additionally, synthetic data configurations can be provided using JSON or key-value strings. Synthetic data options include:
  - `prompt_tokens`: Average number of tokens for prompts.
  - `output_tokens`: Average number of tokens for outputs.
  - `TYPE_stdev`, `TYPE_min`, `TYPE_max`: Standard deviation, minimum, and maximum values for the specified type (e.g., `prompt_tokens`, `output_tokens`). If not provided, only the specified token count is used.
  - `samples`: Number of samples to generate, defaults to 1000.
  - `source`: Source text data for generation, defaults to a local copy of Pride and Prejudice.

- `--data-args`: A JSON string used to specify the columns to source data from (e.g., `prompt_column`, `output_tokens_count_column`) and additional arguments to pass into the HuggingFace datasets constructor.

- `--data-sampler`: Enables applying `random` shuffling or sampling to the dataset. If not set, no sampling is applied.

- `--rate-type`: Defines the type of benchmark to run (default `sweep`). Supported types include:
  - `synchronous`: Runs a single stream of requests one at a time. `--rate` must not be set for this mode.
  - `throughput`: Runs all requests in parallel to measure the maximum throughput for the server (bounded by the `GUIDELLM__MAX_CONCURRENCY` config setting). `--rate` must not be set for this mode.
  - `concurrent`: Runs a fixed number of streams of requests in parallel. `--rate` must be set to the desired concurrency level/number of streams.
  - `constant`: Sends requests asynchronously at a constant rate set by `--rate`.
  - `poisson`: Sends requests at a rate following a Poisson distribution with the mean set by `--rate`.
  - `sweep`: Automatically determines the minimum and maximum rates the server can support by running synchronous and throughput benchmarks, then runs a series of benchmarks equally spaced between the two rates. The number of benchmarks is set by `--rate` (default 10).

- `--max-seconds`: Sets the maximum duration (in seconds) for each benchmark run. If not provided, the benchmark will run until the dataset is exhausted or `--max-requests` is reached.

- `--max-requests`: Sets the maximum number of requests for each benchmark run. If not provided, the benchmark will run until `--max-seconds` is reached or the dataset is exhausted.

- `--warmup-percent`: Specifies the percentage of the benchmark to treat as a warmup phase. Requests during this phase are excluded from the final results.

- `--cooldown-percent`: Specifies the percentage of the benchmark to treat as a cooldown phase. Requests during this phase are excluded from the final results.

- `--output-path`: Defines the path to save the benchmark results. Supports JSON, YAML, or CSV formats. If a directory is provided, the results will be saved as `benchmarks.json` in that directory. If not set, the results will be saved as `benchmarks.json` in the current working directory.
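The `sweep`, `constant`, and `poisson` rate types can be pictured as request schedules. The sketch below is a simplified model under stated assumptions, not GuideLLM's scheduler. It assumes the sweep's `--rate` count covers only the rates strictly between the synchronous and throughput endpoints (whether GuideLLM counts the endpoints is not stated here), and it models Poisson arrivals with exponentially distributed gaps of mean `1/rate`, using Python's `random.expovariate(lambd)`, whose mean is `1/lambd`. All function names are hypothetical.

```python
import random


def sweep_rates(min_rate: float, max_rate: float, count: int = 10) -> list[float]:
    """Evenly spaced rates strictly between the synchronous and throughput rates.

    Assumption: `count` covers only the interpolated rates, not the two endpoints.
    """
    step = (max_rate - min_rate) / (count + 1)
    return [min_rate + step * i for i in range(1, count + 1)]


def constant_send_times(rate: float, duration_s: float) -> list[float]:
    """Request start times (seconds) for a fixed rate: one every 1/rate seconds."""
    interval = 1.0 / rate
    times = []
    index = 0
    while index * interval < duration_s:
        times.append(index * interval)
        index += 1
    return times


def poisson_send_times(rate: float, duration_s: float, seed=None) -> list[float]:
    """Request start times for a Poisson process: exponential gaps with mean 1/rate."""
    rng = random.Random(seed)
    times = []
    t = rng.expovariate(rate)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(rate)
    return times


if __name__ == "__main__":
    print(sweep_rates(1.0, 11.0, count=4))  # [3.0, 5.0, 7.0, 9.0]
    print(constant_send_times(2.0, 3.0))    # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
    # Roughly rate * duration requests on average, but bursty rather than evenly spaced.
    print(len(poisson_send_times(10.0, 30.0, seed=0)))
```

Exponential gaps are what make the number of arrivals in any window Poisson-distributed, so a `poisson` schedule keeps the same average load as a `constant` one while adding more realistic burstiness.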

Our comprehensive documentation provides detailed guides and resources to help you get the most out of GuideLLM. Whether you are just getting started or looking to dive deeper into advanced topics, you can find what you need in our full documentation.

- Installation Guide - This guide provides step-by-step instructions for installing GuideLLM, including prerequisites and setup tips.

- Backends Guide - A comprehensive overview of supported backends and how to set them up for use with GuideLLM.

- Metrics Guide - Detailed explanations of the metrics used in GuideLLM, including definitions and how to interpret them.

- Outputs Guide - Information on the different output formats supported by GuideLLM and how to use them.

- Architecture Overview - A detailed look at GuideLLM's design, components, and how they interact.

- vLLM Documentation - Official vLLM documentation provides insights into installation, usage, and supported models.

Visit our GitHub Releases page and review the release notes to stay updated with the latest releases.

GuideLLM is licensed under the Apache License 2.0.

We appreciate contributions to the code, examples, integrations, documentation, bug reports, and feature requests! Your feedback and involvement are crucial in helping GuideLLM grow and improve. Below are some ways you can get involved:

- DEVELOPING.md - Development guide for setting up your environment and making contributions.

- CONTRIBUTING.md - Guidelines for contributing to the project, including code standards, pull request processes, and more.

- CODE_OF_CONDUCT.md - Our expectations for community behavior to ensure a welcoming and inclusive environment.

We invite you to join our growing community of developers, researchers, and enthusiasts passionate about LLMs and optimization. Whether you're looking for help, want to share your own experiences, or stay up to date with the latest developments, there are plenty of ways to get involved:

- vLLM Slack - Join the vLLM Slack community to connect with other users, ask questions, and share your experiences.

- GitHub Issues - Report bugs, request features, or browse existing issues. Your feedback helps us improve GuideLLM.

If you find GuideLLM helpful in your research or projects, please consider citing it:

```bibtex
@misc{guidellm2024,
  title={GuideLLM: Scalable Inference and Optimization for Large Language Models},
  author={Neural Magic, Inc.},
  year={2024},
  howpublished={\url{https://github.com/neuralmagic/guidellm}},
}
```