-

Notifications

You must be signed in to change notification settings - Fork 6.8k

[rllib] Adding DDPG (adapted from baselines) #1868

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test FAILed. |

|

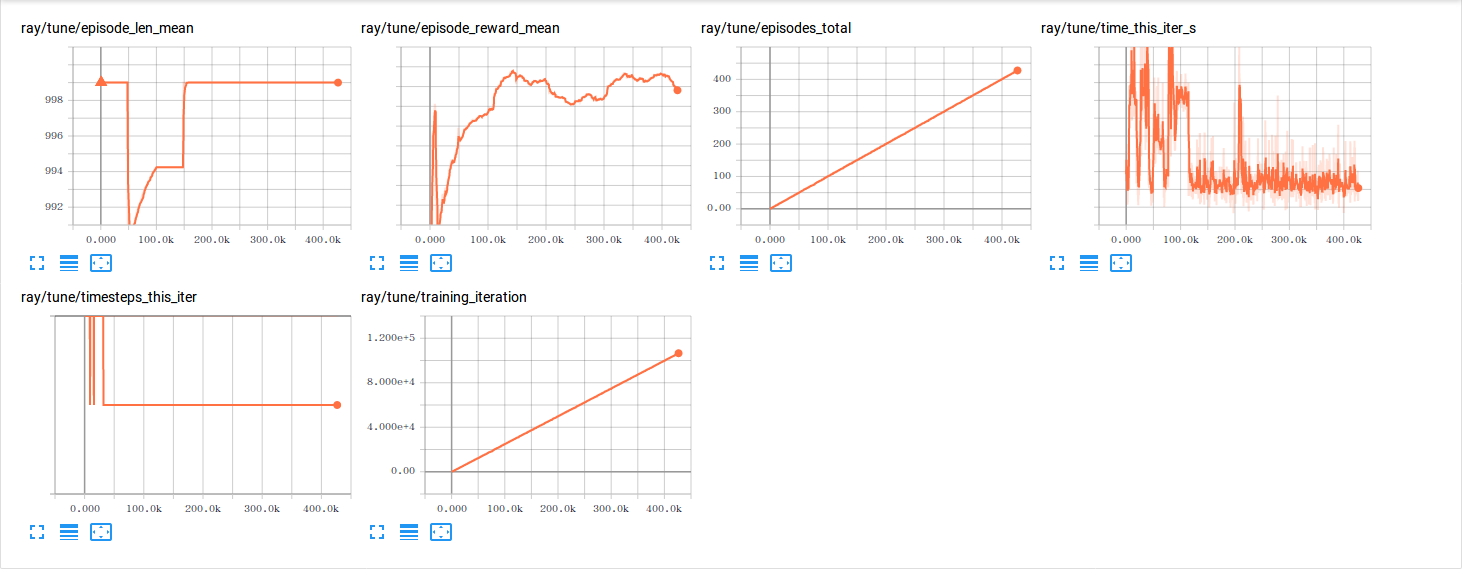

Thanks for contributing this! Do you have any performance numbers/charts? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks a bunch for contributing this! Do you mind running flake8 on rllib/ddpg/ and fixing the errors that show up?

python/ray/rllib/__init__.py

Outdated

| def _register_all(): | ||

| for key in ["PPO", "ES", "DQN", "APEX", "A3C", "BC", "PG", "__fake", | ||

| "__sigmoid_fake_data", "__parameter_tuning"]: | ||

| "__sigmoid_fake_data", "__parameter_tuning", "DDPG"]: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

do you mind moving this before the private keys?

python/ray/rllib/ddpg/models.py

Outdated

|

|

||

| def _build_q_network(self, registry, inputs, state_space, ac_space, act_t, config): | ||

| x = inputs | ||

| x = tf.layers.dense(x, 64) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

can you use tf.slim instead? it would be great to avoid mixing higher level libraries

| import os | ||

|

|

||

| import numpy as np | ||

| import tensorflow as tf |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would it be possible to move all of the TF code out of this file? We would like to keep the main algorithm framework agnostic

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for contributing this! I think this is good to merge after we add it to the test files mentioned below, and check that it passes those.

| @@ -0,0 +1,56 @@ | |||

| # -------------------------------------- | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Shall we move this into rllib/utils ?

| from ray.rllib.ddpg.ou_noise import AdaptiveParamNoiseSpec | ||

|

|

||

|

|

||

| def _huber_loss(x, delta=1.0): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Some of this seems duplicated with DQN, we should probably move it to a util file.

|

|

||

| """The base DDPG Evaluator that does not include the replay buffer. | ||

| TODO(rliaw): Support observation/reward filters?""" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This comment seems out of date and could be removed.

python/ray/rllib/ddpg/ddpg.py

Outdated

|

|

||

| return result | ||

|

|

||

| def _populate_replay_buffer(self): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Unused function.

python/ray/rllib/agent.py

Outdated

| return _ParameterTuningAgent | ||

| elif alg == "DDPG": | ||

| from ray.rllib import ddpg | ||

| return ddpg.DDPGAgent |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Along with registering it here, could you also register DDPG in these automated tests?

-

The generic test for supported obs/action spaces:

https://github.com/ray-project/ray/blob/master/python/ray/rllib/test/test_supported_spaces.py#L132 -

The regression tests folder: https://github.com/ray-project/ray/tree/master/python/ray/rllib/tuned_examples/regression_tests

(probably MountainCarContinuous-v0 is the right env for this test)

- The checkpoint/restore test https://github.com/ray-project/ray/blob/master/python/ray/rllib/test/test_checkpoint_restore.py

You should be able to basically copy paste an existing entry for e.g., DQN in each of these cases. The tests can be run locally (and will also be run by travis/jenkins once pushed here).

python/ray/rllib/ddpg/ddpg.py

Outdated

| # Whether to compute priorities on workers. | ||

| worker_side_prioritization=False, | ||

| # Whether to force evaluator actors to be placed on remote machines. | ||

| force_evaluators_remote=False) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we drop this config? It used to be used for Ape-X but is no longer needed due to improvements in Ray's actor scheduling.

move all of the TF code out of ddpg.py remove all unused functions use tf.slim

|

Test PASSed. |

|

Test FAILed. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks close. I just ran ./train.py -f tuned_examples/regression_tests/mountaincarcontinuous-ddpg.yaml and got

Remote function __init__ failed with:

Traceback (most recent call last):

File "/home/eric/Desktop/ray-private/python/ray/worker.py", line 832, in _process_task

*arguments)

File "/home/eric/Desktop/ray-private/python/ray/actor.py", line 212, in actor_method_executor

method_returns = method(actor, *args)

File "/home/eric/Desktop/ray-private/python/ray/rllib/agent.py", line 84, in __init__

Trainable.__init__(self, config, registry, logger_creator)

File "/home/eric/Desktop/ray-private/python/ray/tune/trainable.py", line 89, in __init__

self._setup()

File "/home/eric/Desktop/ray-private/python/ray/rllib/agent.py", line 107, in _setup

self._init()

File "/home/eric/Desktop/ray-private/python/ray/rllib/ddpg/ddpg.py", line 105, in _init

for i in range(self.config["num_workers"])]

File "/home/eric/Desktop/ray-private/python/ray/rllib/ddpg/ddpg.py", line 105, in <listcomp>

for i in range(self.config["num_workers"])]

File "/home/eric/Desktop/ray-private/python/ray/actor.py", line 822, in remote

dependency=actor_cursor)

File "/home/eric/Desktop/ray-private/python/ray/actor.py", line 646, in _actor_method_call

args = signature.extend_args(function_signature, args, kwargs)

File "/home/eric/Desktop/ray-private/python/ray/signature.py", line 212, in extend_args

.format(function_name))

Exception: Too many arguments were passed to the function '__init__'

I think this is since the evaluator class doesn't take a logdir argument? Could you make sure that that example runs to -15 result?

python/ray/rllib/ddpg/ddpg.py

Outdated

| # Number of env steps to optimize for before returning | ||

| timesteps_per_iteration=1000, | ||

|

|

||

| exploration_noise=0.2, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Comment for exploration_noise and action_noise?

python/ray/rllib/ddpg/ddpg.py

Outdated

| # Smooth the current average reward over this many previous episodes. | ||

| smoothing_num_episodes=100, | ||

|

|

||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

one newline

python/ray/rllib/ddpg/ddpg.py

Outdated

|

|

||

| return result | ||

|

|

||

| # def _populate_replay_buffer(self): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Remove?

python/ray/rllib/ddpg/ou_noise.py

Outdated

| import numpy as np | ||

|

|

||

|

|

||

| class OUNoise: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think you forgot to remove this file after moving it to rllib/utils

python/ray/rllib/__init__.py

Outdated

|

|

||

| def _register_all(): | ||

| for key in ["PPO", "ES", "DQN", "APEX", "A3C", "BC", "PG", "__fake", | ||

| for key in ["PPO", "ES", "DQN", "APEX", "A3C", "BC", "PG", "DDPG", "__fake", |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we rename this to "DDPG_baselines", and change the directory to rllib/ddpg_baselines in order to not conflict with the other DDPG pr here https://github.com/ray-project/ray/pull/1877/files ?

The main feature of this PR is that it's adapted from the baselines code, so we should probably keep that in the naming (or, could also call it DDPG2 and just leave the details in the README).

|

Test FAILed. |

|

Test FAILed. |

|

Test FAILed. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Bunch of lint errors here: https://api.travis-ci.org/v3/job/365429239/log.txt

Could you also make sure the HalfCheetah environment trains, and add a tuned example for this env? This one should be fairly fast and so is a good sanity check for DDPG.

python/ray/rllib/__init__.py

Outdated

|

|

||

| for key in ["PPO", "ES", "DQN", "APEX", "A3C", "BC", "PG", "DDPG", | ||

| "__fake", "__sigmoid_fake_data", "__parameter_tuning"]: | ||

| "DDPG_beselines", "__fake", "__sigmoid_fake_data", "__parameter_tuning"]: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

typo: baselines

| self.set_weights(objs["weights"]) | ||

|

|

||

| def sync_filters(self, new_filters): | ||

| """Changes self's filter to given and rebases any accumulated delta. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Indentation here is off

|

Test FAILed. |

|

Test FAILed. |

|

Test FAILed. |

|

Test FAILed. |

|

Test PASSed. |

|

Test PASSed. |

|

Test FAILed. |

|

Test FAILed. |

|

Test FAILed. |

|

Test PASSed. |

|

Test FAILed. |

|

Test FAILed. |

|

Test PASSed. |

|

why does continuous-integration/travis-ci/pr always fail ? I don‘t konw if my code is wrong |

|

@qyccc unfortunately we have a bunch of flaky tests right now. Really need to address this.. Keeping track of them at https://github.com/ray-project/ray/issues?q=is%3Aissue+is%3Aopen+label%3A%22test+failure%22. |

|

Hm now that the other two DDPG's have been consolidated (as they achieve the exact same performance), it probably makes sense for this one to as well. |

What do these changes do?

Add the algorithm ddpg in rllib.

It can run using the same command as 'python ray/python/ray/rllib/train.py --run DDPG --env MountainCarContinuous-v0'.

Related issue number