Implementation of some simple neural networks for binary classification with the newff function of the Neural Network Toolbox in MATLAB

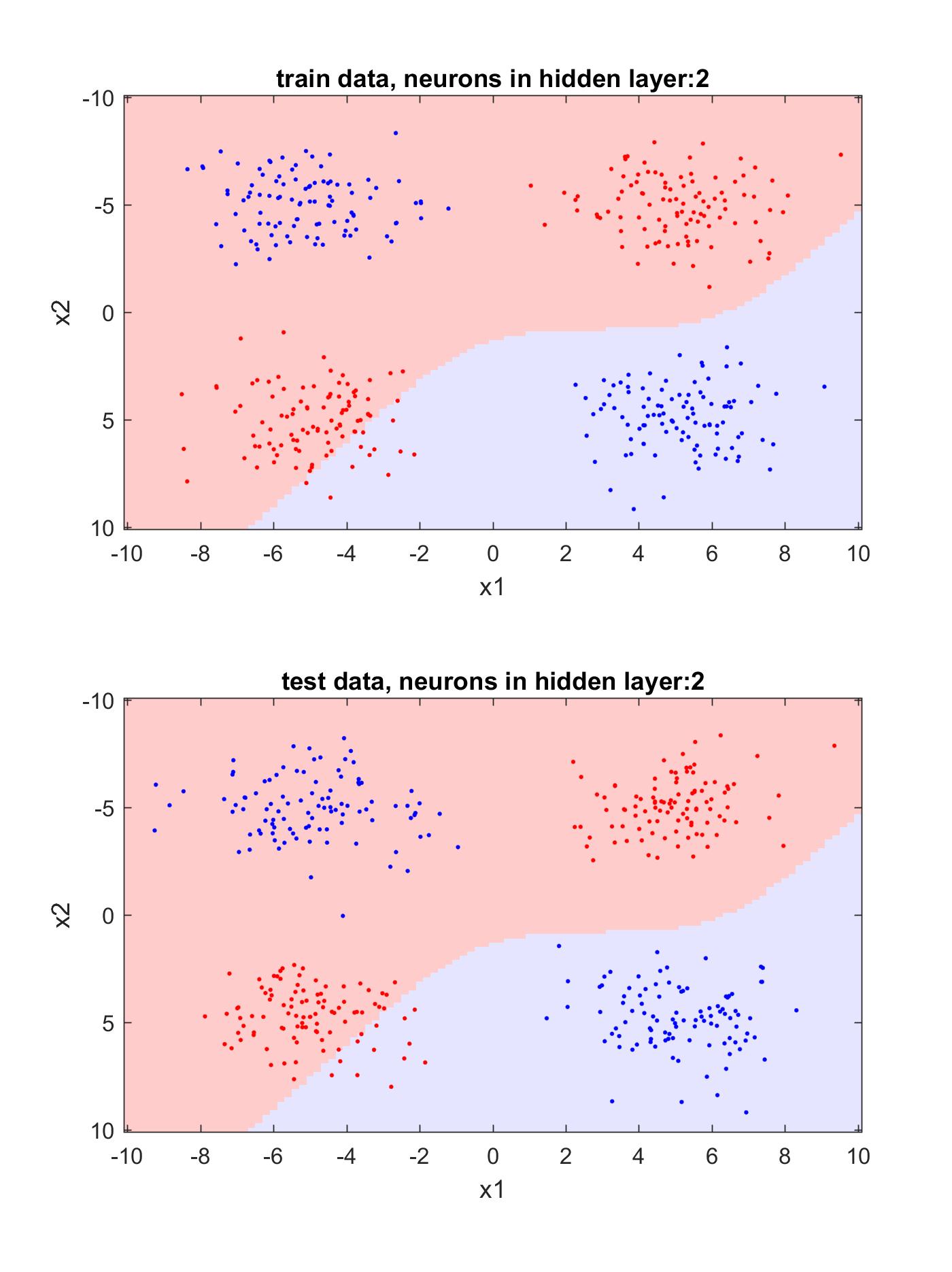

Figure 1 (2 neurons in the hidden layer)

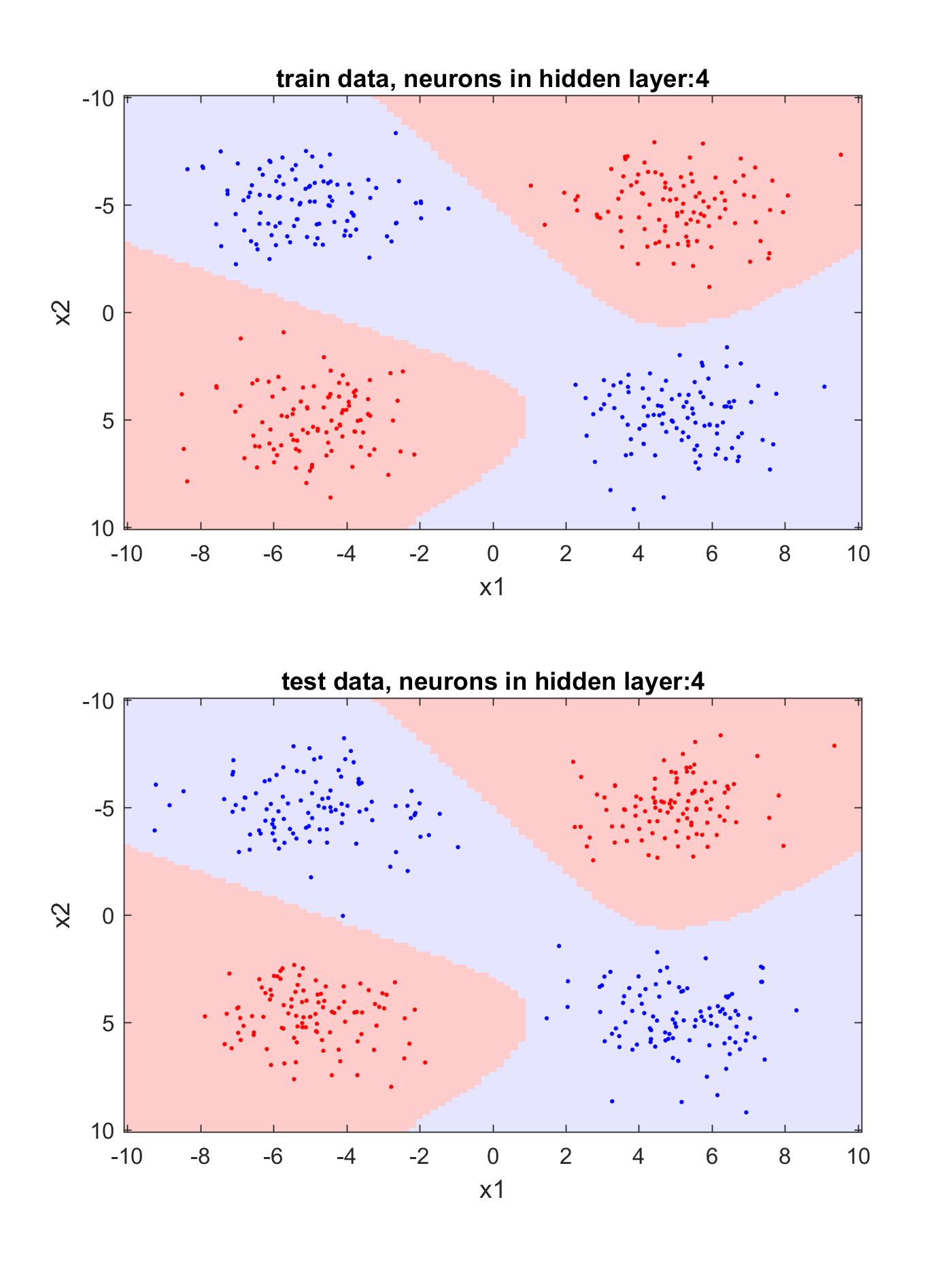

Figure 2 (4 neurons in the hidden layer)

Figure 3 (15 neurons in the hidden layer)

With a single hidden layer of two nodes (neurons) trained by the standard gradient descent backpropagation algorithm, the network cannot classify the data correctly: around 40 percent of the data points are misclassified.

When the number of nodes increases to 4, the network classifies the data much better. This does not mean, however, that classification accuracy keeps improving as neurons are added: as can be seen, the network with 15 neurons in the hidden layer still misclassifies some data points.
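The comparison above can be sketched roughly as follows. This is only an illustration under stated assumptions: the data set is synthetic (points inside a disc versus outside it) and is not the data shown in the figures, the `newff(P, T, S, TF, BTF)` calling form from later toolbox releases is assumed, and the epoch count and learning rate are arbitrary choices. Results will differ from run to run and from the figures.

```matlab
% Hypothetical two-class data (NOT the data set used for the figures):
% points inside a disc are class 1, points outside are class 0.
rng(0);                                 % fix the seed for repeatability
P = 2*rand(2, 400) - 1;                 % 2-by-N inputs in [-1, 1]^2
T = double(sum(P.^2, 1) < 0.4);         % 1-by-N targets (0 or 1)

hiddenSizes = [2 4 15];                 % hidden layer sizes from the text
errRate = zeros(size(hiddenSizes));     % misclassified fraction per size

for k = 1:numel(hiddenSizes)
    % One hidden layer, trained with plain gradient descent ('traingd').
    net = newff(P, T, hiddenSizes(k), {'tansig', 'purelin'}, 'traingd');
    net.trainParam.epochs = 2000;       % assumed value
    net.trainParam.lr     = 0.05;       % assumed value
    net = train(net, P, T);

    Y = sim(net, P);                    % raw network outputs
    errRate(k) = mean((Y > 0.5) ~= T);  % threshold at 0.5, compare to targets
end

% First row: hidden layer sizes; second row: misclassification in percent.
disp([hiddenSizes; 100*errRate]);
```

Thresholding the linear output at 0.5 is one simple way to turn the network output into a class label; other output functions and thresholds are equally possible.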

For better results, we can use two hidden layers instead of one, or use a backpropagation algorithm with an adaptive learning rate.
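Both options might look like the sketch below. The assumptions are the same kind as before: the data is synthetic and hypothetical, the hidden layer sizes `[4 3]` are arbitrary, and `'traingda'` (gradient descent with adaptive learning rate) is taken as the adaptive variant, although the toolbox also offers others such as `'traingdx'`. It is not a record of the settings actually used for the figures.

```matlab
% Hypothetical two-class data (NOT the data set used for the figures).
rng(0);
P = 2*rand(2, 400) - 1;                 % 2-by-N inputs in [-1, 1]^2
T = double(sum(P.^2, 1) < 0.4);         % 1-by-N targets (0 or 1)

% Option A: two hidden layers (sizes are arbitrary), plain gradient descent.
netA = newff(P, T, [4 3], {'tansig', 'tansig', 'purelin'}, 'traingd');
netA.trainParam.epochs = 2000;          % assumed value
netA = train(netA, P, T);

% Option B: one hidden layer, gradient descent with adaptive learning rate.
netB = newff(P, T, 4, {'tansig', 'purelin'}, 'traingda');
netB.trainParam.epochs = 2000;          % assumed value
netB.trainParam.lr_inc = 1.05;          % factor applied when the error falls
netB.trainParam.lr_dec = 0.7;           % factor applied when the error grows too much
netB = train(netB, P, T);

% Fraction of misclassified points for each option.
errA = mean((sim(netA, P) > 0.5) ~= T);
errB = mean((sim(netB, P) > 0.5) ~= T);
fprintf('two hidden layers: %.1f%%, adaptive rate: %.1f%%\n', 100*errA, 100*errB);
```

Whether either option actually beats a single hidden layer trained with `'traingd'` depends on the data and the random initial weights, so it is worth comparing several runs.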