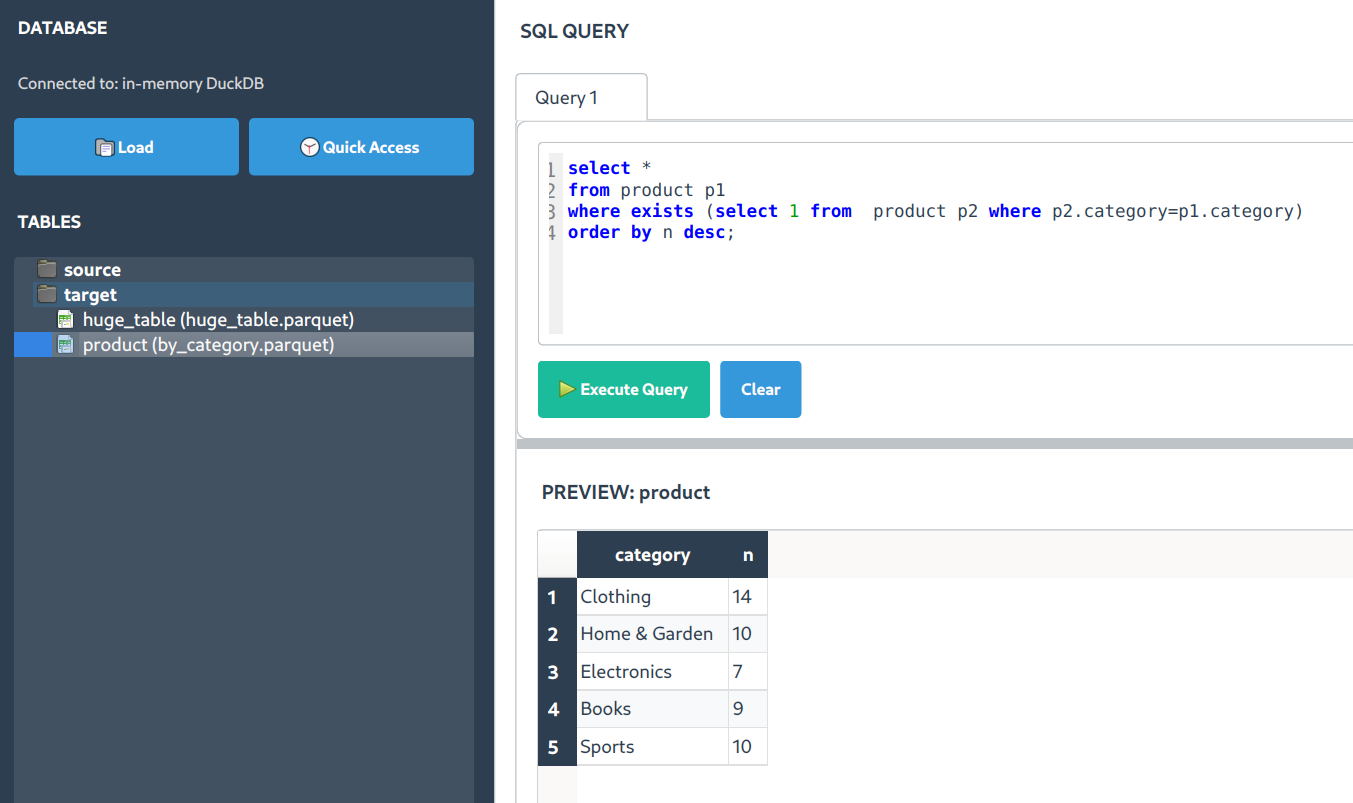

A fast SQL interface for analyzing data files ✨

Query CSV, Parquet, Excel files with SQL • DuckDB powered • No database setup required

SQLShell is a desktop SQL interface specifically designed for analyzing data files. It's not a database client - instead, it lets you load CSV, Parquet, Excel, and other data files and query them with SQL using DuckDB's fast analytical engine.

|

⚡ Fast File Analysis Load data files and search through millions of rows quickly. Built on DuckDB for analytical performance. |

🎯 Smart Execution

|

🧠 SQL Autocompletion Context-aware suggestions that understand your loaded tables and column names. |

Important: SQLShell works with data files, not live databases. It's designed for:

- 📊 Data Files - CSV, Parquet, Excel, TSV, JSON files

- 🗃️ Local Analysis - Load files from your computer for SQL analysis

- ⚡ Fast Queries - DuckDB engine optimized for analytical workloads

- 🔍 Data Exploration - Search and filter capabilities across your datasets

Not supported: Live database connections (MySQL, PostgreSQL, etc.). Use dedicated database clients for those.

- 🏎️ DuckDB Powered - Fast analytical queries on data files

- 📊 Multiple File Formats - CSV, Parquet, Excel, Delta, TSV, JSON support

- 🎨 Clean Interface - Simple SQL editor with result display

- 🔍 Search Functionality - Find data across result sets quickly

- 🚀 Zero Database Setup - No server installation or configuration needed

Get up and running in 30 seconds:

pip install sqlshell

sqlsThat's it! 🎉 SQLShell opens and you can start loading data files.

🐧 Linux Users - One-Time Setup for Better Experience

# Create dedicated environment (recommended)

python3 -m venv ~/.venv/sqlshell

source ~/.venv/sqlshell/bin/activate

pip install sqlshell

# Add convenient alias

echo 'alias sqls="~/.venv/sqlshell/bin/sqls"' >> ~/.bashrc

source ~/.bashrc💻 Alternative Launch Methods

If sqls doesn't work immediately:

python -c "import sqlshell; sqlshell.start()"- Launch:

sqls - Load Data: Click "Load Files" to import your CSV, Parquet, or Excel files

- Query: Write SQL queries against your loaded data

- Execute: Hit

Ctrl+EnterorF5to run queries - Search: Press

Ctrl+Fto search through results

Once you have query results, use Ctrl+F to search across all columns:

- Cross-column search - Finds terms across all visible columns

- Case-insensitive - Flexible text matching

- Instant feedback - Filter results as you type

- Numeric support - Search numbers and dates

| Use Case | Search Term | What It Finds |

|---|---|---|

| Error Analysis | "error" |

Error messages in log files |

| Data Quality | "null" |

Missing data indicators |

| ID Tracking | "CUST_12345" |

Specific customer records |

| Pattern Matching | "*.com" |

Email domains |

Workflow: Load file → Query data → Ctrl+F → Search → ESC to clear

Right-click text columns to create binary indicator columns for analysis:

-- Original data

SELECT category FROM products;

-- "Electronics", "Books", "Clothing"

-- After encoding

SELECT

category_Electronics,

category_Books,

category_Clothing

FROM products_encoded;Right-click columns for quick statistical analysis and correlation insights.

F5- Execute all SQL statements in sequenceF9- Execute only the current statement (where cursor is positioned)- Useful for: Testing queries step by step

- Press

Ctrl+Spacefor suggestions - After SELECT: Available columns from loaded tables

- After FROM/JOIN: Loaded table names

- After WHERE: Column names with appropriate operators

SQLShell can load and query:

- CSV/TSV - Comma and tab-separated files

- Parquet - Column-oriented format

- Excel - .xlsx and .xls files

- JSON - Structured JSON data

- Delta - Delta Lake format files

-- Load and explore your CSV data

SELECT * FROM my_data LIMIT 10;

-- Aggregate analysis

SELECT

category,

AVG(price) as avg_price,

COUNT(*) as count

FROM sales_data

GROUP BY category

ORDER BY avg_price DESC;-- Join data from multiple loaded files

SELECT

c.customer_name,

SUM(o.order_total) as total_spent

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

GROUP BY c.customer_name

ORDER BY total_spent DESC

LIMIT 10;|

📊 Data Analysts

🔬 Data Scientists

|

💼 Business Analysts

🛠️ Developers

|

- Python 3.8+

- Auto-installed dependencies: PyQt6, DuckDB, Pandas, NumPy

System Requirements: SQLShell is a desktop application that works on Windows, macOS, and Linux.

|

|

- Load files (drag & drop or Load Files button)

- Explore structure (

SELECT * FROM table_name LIMIT 5) - Build analysis (use F9 to test statements)

- Search results (Ctrl+F for specific data)

- Export findings (copy results or save queries)

📊 Table Analysis Tools

Right-click loaded tables for:

- Column profiling - Data types, null counts, unique values

- Quick statistics - Min, max, average for numeric columns

- Sample data preview - Quick look at table contents

🔮 Column Operations

Right-click column headers in results:

- Text encoding - Create binary columns from categories

- Statistical summary - Distribution and correlation info

- Data type conversion - Format suggestions

⚡ Performance Tips

- File format matters - Parquet files load faster than CSV

- Use LIMIT - for initial exploration of large files

- Column selection - Select only needed columns for better performance

- Indexing - DuckDB automatically optimizes common query patterns

SQLShell is open source and welcomes contributions!

git clone https://github.com/oyvinrog/SQLShell.git

cd SQLShell

pip install -e .Ways to contribute:

- 🐛 Report bugs and issues

- 💡 Suggest new features

- 📖 Improve documentation

- 🔧 Submit pull requests

- ⭐ Star the repo to show support

MIT License - feel free to use SQLShell in your projects!

Ready to analyze your data files with SQL?

pip install sqlshell && sqls⭐ Star us on GitHub if SQLShell helps with your data analysis!

🚀 Get Started Now • 📖 Documentation • 🐛 Report Issues

A simple tool for SQL-based file analysis