WritableStream: example, fixed block character in the output #14371

I can’t reproduce that in Chrome in my macOS environment — though I can reproduce it in Safari. But regardless, it seems to me the cause may actually be browser bugs, not a problem with the code.

While that’s true about how JavaScript encodes strings internally, that’s not true at the API layer for

The code here is doing So the |

@sideshowbarker

Some browsers hide non-printable characters. The JSFiddle above, run in Chrome and Edge on Windows, shows all extended ASCII characters, with non-printable characters rendered as empty boxes. The same JSFiddle in Safari and Chrome on iPad doesn't show non-printable characters.

We can easily verify whether it's a browser bug. In the JSFiddle above, the received message length in the output is 26 on both Windows and iPadOS. Can you check the same in Chrome on macOS?

You are right.

    var buffer = new ArrayBuffer(2);
    var view = new Uint16Array(buffer);
    view[0] = chunk;
    var decoded = decoder.decode(view, { stream: true });

By using Uint16Array, the

    var buffer = new ArrayBuffer(1);
    var view = new Uint8Array(buffer);
    view[0] = chunk;
    var decoded = decoder.decode(view, { stream: true });

This solved the issue on Windows and iPadOS. Let me know if it works OK on your end: https://jsfiddle.net/onqp6xvt/
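For anyone who wants to check the length observation without a browser, here is a minimal sketch. It assumes the message is "Hello, world." and that each chunk is a single UTF-8 byte from `TextEncoder`; `decodeByteByByte` and `makeView` are hypothetical names used only for illustration, not part of the MDN example.

```javascript
// Sketch: decode UTF-8 bytes one at a time with a streaming TextDecoder,
// placing each byte in a fresh typed-array view before decoding.
// decodeByteByByte and makeView are illustrative names (assumptions).
function decodeByteByByte(bytes, makeView) {
  const decoder = new TextDecoder("utf-8");
  let result = "";
  for (const byte of bytes) {
    const view = makeView();
    view[0] = byte;
    // decode() reads every byte of the view's underlying buffer
    result += decoder.decode(view, { stream: true });
  }
  return result;
}

// Assumed message; encode() yields one byte per ASCII character
const bytes = new TextEncoder().encode("Hello, world.");

// 2-byte view: each chunk carries one extra zero byte, decoded as U+0000
const withUint16 = decodeByteByByte(bytes, () => new Uint16Array(new ArrayBuffer(2)));

// 1-byte view: each chunk is exactly the byte that was written
const withUint8 = decodeByteByByte(bytes, () => new Uint8Array(new ArrayBuffer(1)));

console.log(withUint16.length); // 26
console.log(withUint8.length); // 13
```

The extra U+0000 characters would account for the length of 26 reported above, and whether they show up as boxes or as nothing depends on how the browser renders non-printable characters.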

Summary

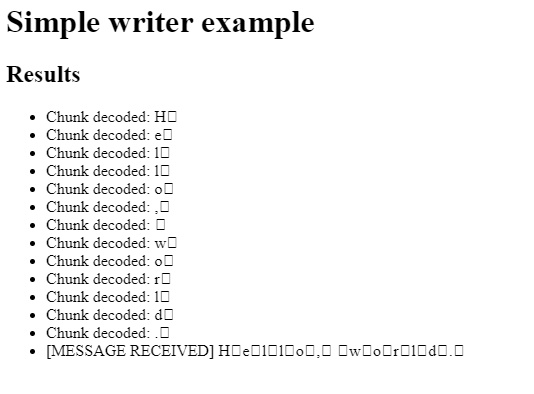

In the example https://developer.mozilla.org/en-US/docs/Web/API/WritableStream#examples ,

there are unwanted block characters in the output:

In JavaScript, strings use UTF-16 encoding.

With

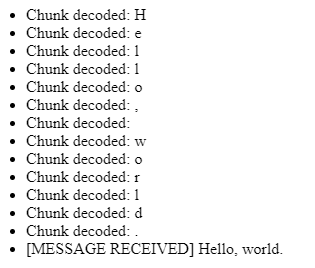

utf-16encoding for the decoder, the issue is fixed:After:

Tested in Chrome on Windows 10.

Note: I've created a PR for the same change in the mdn/dom-examples repo: mdn/dom-examples#97

I don't know who the reviewer is there.