A CDK example of a Glue Crawler based on S3 event notifications.

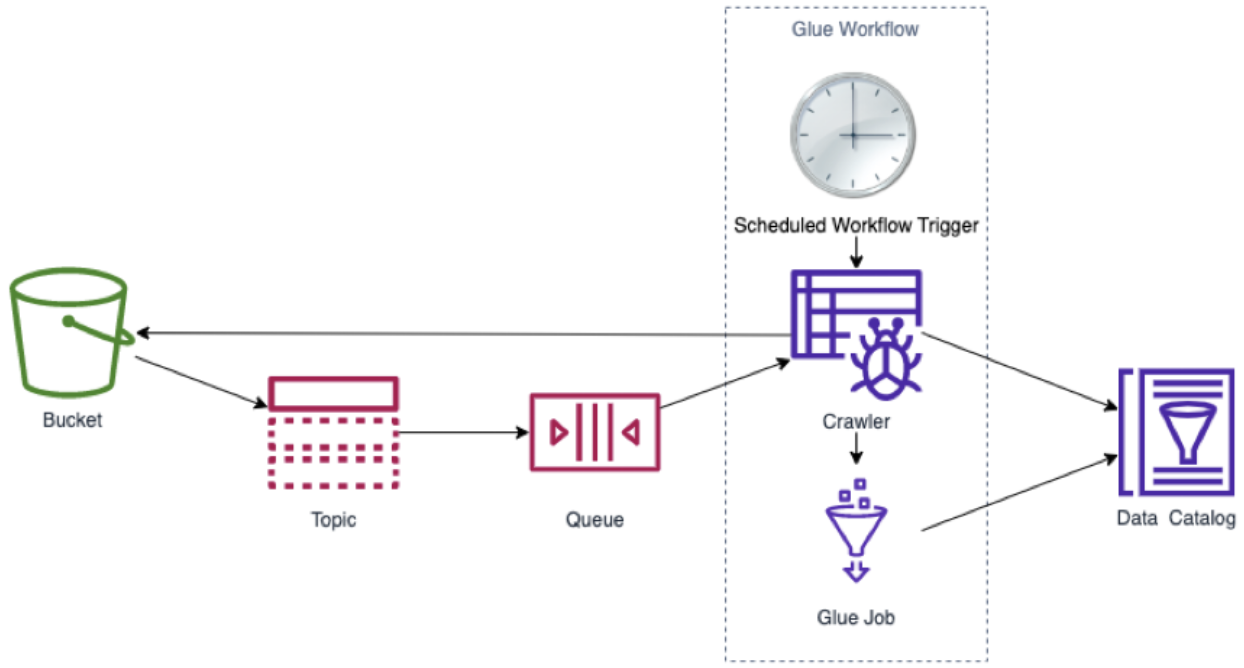

Here is a look at what is being deployed by this stack :

Install poetry:

curl -sSL https://install.python-poetry.org | python3 -

Create Python environment:

# This project requires Python 3.10 or later.

poetry install

Activate the virtual environment:

poetry shell

Deploy the stack containing the crawler, a bucket and a Glue workflow to run the crawler:

# It is assumed that you have valid AWS credentials

cdk deploy

To test the solution, upload a JSON file (for example, example_json) to the bucket:

aws s3 cp example_json s3://<your bucket name>
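The same upload can be scripted from Python. This is only a minimal sketch: it assumes `boto3` is installed and that an S3 client created with `boto3.client("s3")` is passed in. The helper name `upload_sample` is hypothetical and not part of this project.

```python
from pathlib import Path


def upload_sample(s3_client, bucket: str, path: str = "example_json") -> str:
    """Upload the local file at `path` to `bucket`, using its file name as the key.

    `s3_client` is expected to behave like boto3.client("s3").
    """
    file_path = Path(path)
    key = file_path.name
    # upload_file(Filename, Bucket, Key) is the boto3 S3 client transfer call.
    s3_client.upload_file(str(file_path), bucket, key)
    return key


# Example call (assumes valid AWS credentials):
#   import boto3
#   upload_sample(boto3.client("s3"), "<your bucket name>")
```

Passing the client in as an argument keeps the helper easy to exercise without touching AWS.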

Then run the Glue workflow a first time. For the following commands, the names of the workflow and of the crawler are displayed in the outputs of the stack:

aws glue start-workflow-run --name <your workflow name>
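If you prefer to script this step, the stack outputs can be read from CloudFormation and the workflow started through the Glue API. The sketch below assumes `boto3` clients are passed in. The function names are hypothetical, and so are the stack name and the output key in the commented example, so check what your own deployment prints.

```python
def stack_outputs(cfn_client, stack_name: str) -> dict:
    """Return the outputs of a CloudFormation stack as {OutputKey: OutputValue}.

    `cfn_client` is expected to behave like boto3.client("cloudformation").
    """
    response = cfn_client.describe_stacks(StackName=stack_name)
    outputs = response["Stacks"][0].get("Outputs", [])
    return {item["OutputKey"]: item["OutputValue"] for item in outputs}


def start_workflow(glue_client, workflow_name: str) -> str:
    """Start a Glue workflow run and return its run id.

    `glue_client` is expected to behave like boto3.client("glue").
    """
    return glue_client.start_workflow_run(Name=workflow_name)["RunId"]


# Example call (assumes valid AWS credentials; names below are placeholders):
#   import boto3
#   outputs = stack_outputs(boto3.client("cloudformation"), "<your stack name>")
#   run_id = start_workflow(boto3.client("glue"), outputs["<workflow output key>"])
```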

To see the results of this first launch:

# This command might fail at first, because the log group is only created the first time the crawler runs

aws logs tail /aws-glue/crawlers --log-stream-names <your crawler name> --follow
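Since the log group may not exist yet on the very first run, a scripted version can simply retry. The following is a rough sketch, not a replacement for `aws logs tail --follow`: it assumes a `boto3` CloudWatch Logs client is passed in, reads only the first page of events, and uses a hypothetical function name and retry settings.

```python
import time


def read_crawler_logs(logs_client, crawler_name: str, attempts: int = 5,
                      delay_seconds: float = 10.0, sleep=time.sleep) -> list:
    """Return crawler log messages, retrying while the log group or stream is missing.

    `logs_client` is expected to behave like boto3.client("logs").
    """
    for attempt in range(attempts):
        try:
            response = logs_client.filter_log_events(
                logGroupName="/aws-glue/crawlers",
                logStreamNames=[crawler_name],
            )
            # Only the first page of results is read in this sketch.
            return [event["message"] for event in response["events"]]
        except logs_client.exceptions.ResourceNotFoundException:
            # Raised while the log group or stream has not been created yet.
            if attempt == attempts - 1:
                raise
            sleep(delay_seconds)
    return []


# Example call (assumes valid AWS credentials):
#   import boto3
#   for line in read_crawler_logs(boto3.client("logs"), "<your crawler name>"):
#       print(line)
```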

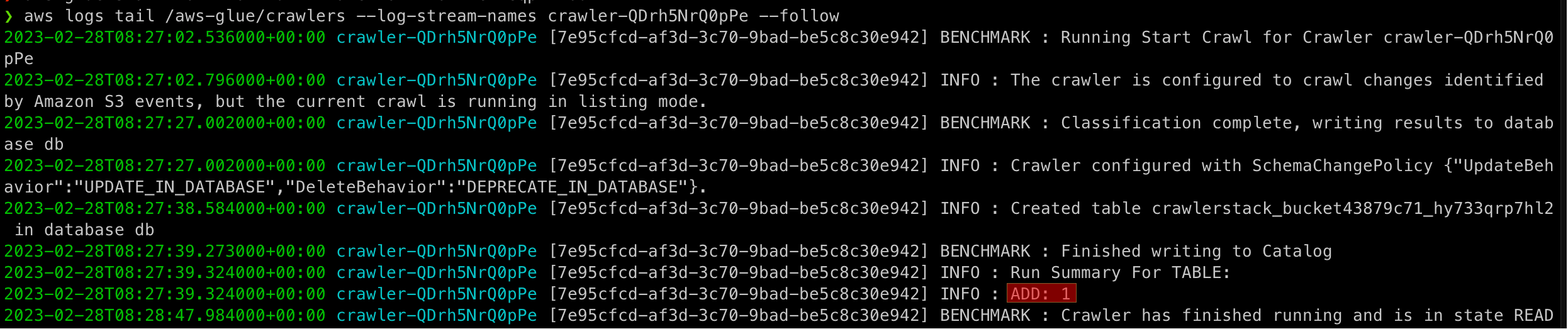

If all went right, you should have something like that:

You can then rerun these two commands to see what the second execution does:

aws glue start-workflow-run --name <your workflow name>

aws logs tail /aws-glue/crawlers --log-stream-names <your crawler name> --follow

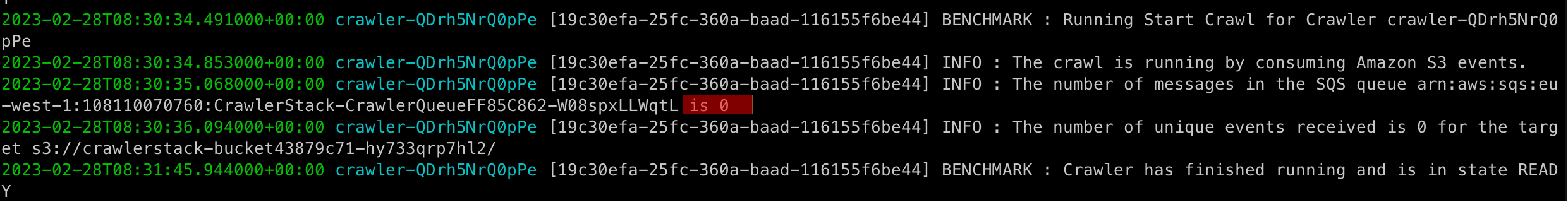

Now the number of unique events should be 0 and the crawler should have done nothing:

To delete the stack:

cdk destroy