In this machine learning experiment, I analyzed the effect of using floating-point (continuous) actions instead of integer (discrete) actions for an AI agent.
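To make the comparison concrete, here is a minimal, hypothetical sketch of how a single jump decision could be read from each action type. It assumes a continuous action in the range [-1, 1] that scales the jump force, and a discrete action where 0 means "don't jump" and 1 means "jump with a fixed force". `MAX_JUMP_FORCE` and both functions are illustrative names, not ML-Agents APIs, and this is not necessarily how the agent in this experiment maps its actions.

```python
# Hypothetical sketch: reading one jump decision from each action type.
# MAX_JUMP_FORCE and the functions below are illustrative, not ML-Agents APIs.
MAX_JUMP_FORCE = 10.0  # assumed upper bound on the jump impulse


def jump_force_continuous(action: float) -> float:
    """Continuous (float) action: the policy outputs a value in [-1, 1].

    Values at or below zero mean "don't jump"; positive values scale the
    jump force, so the agent can choose how strongly to jump.
    """
    clipped = max(-1.0, min(1.0, action))  # keep the action inside [-1, 1]
    return max(0.0, clipped) * MAX_JUMP_FORCE


def jump_force_discrete(action: int) -> float:
    """Discrete (integer) action: 0 = don't jump, 1 = jump with a fixed force."""
    if action not in (0, 1):
        raise ValueError(f"unexpected discrete action: {action}")
    return MAX_JUMP_FORCE if action == 1 else 0.0


if __name__ == "__main__":
    for a in (-0.5, 0.25, 1.0):
        print("continuous", a, "->", jump_force_continuous(a))
    for a in (0, 1):
        print("discrete", a, "->", jump_force_discrete(a))
```

The design difference is that the continuous version lets the policy pick any jump strength, while the discrete version only offers an all-or-nothing jump.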

I created a very basic machine learning scenario: the AI has to avoid colliding with obstacles and the ceiling. It gets a small reward for each successful dodge and a large punishment for every collision.

Tools used: PyTorch, ML-Agents (1.1.0-preview 3), TensorBoard, Unity3D.

Eight separate playgrounds are used to speed up the learning process. Each agent has a child object with a vertically attached "Ray Perception Sensor" component; the agent receives no other observations.

Behind every obstacle there is a rewarding wall that the agent has to collide with.

(Obstacles are spawned randomly.)

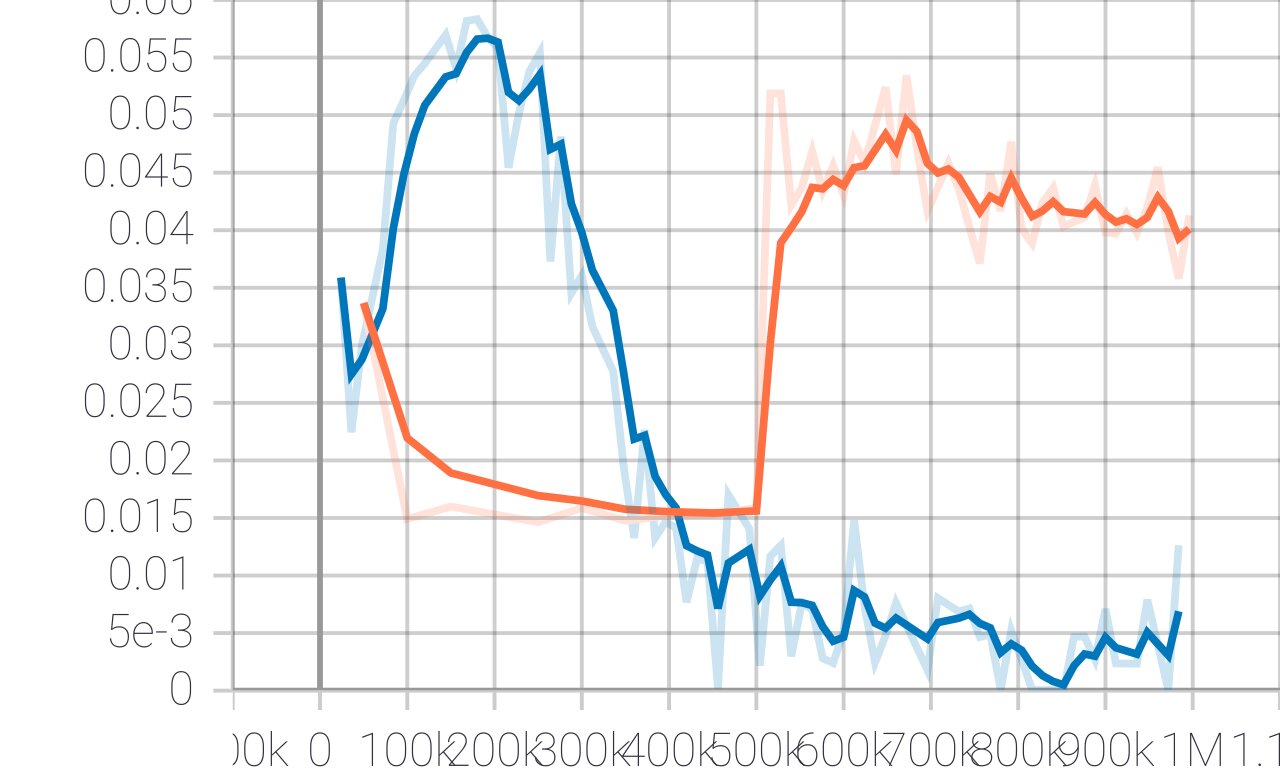

Both tests ran for one million steps. The blue and orange lines show the mean reward for float and integer actions, respectively.

(Each reward is worth 0.1f; each punishment is -1f.)
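The reward scheme can be summarized as a small lookup: +0.1 for hitting a rewarding wall behind an obstacle and -1.0 for hitting an obstacle or the ceiling. The sketch below is hypothetical: the event names, `episode_return`, and `mean_reward` are illustrative helpers (in Unity the values would typically be passed to the agent's `AddReward` call from its collision handlers), and it assumes the mean reward is the average of per-episode cumulative rewards.

```python
from statistics import mean

# Reward values from the experiment; the event names are hypothetical labels.
DODGE_REWARD = 0.1        # hitting a rewarding wall behind an obstacle
COLLISION_PENALTY = -1.0  # hitting an obstacle or the ceiling

EVENT_REWARDS = {
    "reward_wall": DODGE_REWARD,
    "obstacle": COLLISION_PENALTY,
    "ceiling": COLLISION_PENALTY,
}


def episode_return(events: list[str]) -> float:
    """Sum the rewards for the sequence of collision events in one episode."""
    return sum(EVENT_REWARDS[e] for e in events)


def mean_reward(episodes: list[list[str]]) -> float:
    """Average cumulative reward over several episodes (assumed definition)."""
    return mean(episode_return(ep) for ep in episodes)


if __name__ == "__main__":
    runs = [["reward_wall"] * 5, ["reward_wall", "reward_wall", "ceiling"]]
    print(mean_reward(runs))
```

With these values, a single collision cancels out ten successful dodges, which is why collisions dominate the reward curve early in training.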

Controlling the jumps was hard even when the agent was under human control. As the graph above shows, using the float data type for the AI's actions shortened the learning period dramatically. After around 800k steps, test runs started to complete with few or no mistakes, although these test runs did not contribute to the mean reward graph.

The mean loss of the value update correlates with how well the model is able to predict the value of each state. It should increase while the agent is learning and then decrease once the reward stabilizes.
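As a rough illustration of what "value loss" measures, the sketch below computes a plain mean squared error between the critic's predicted state values and the returns actually observed. This is an assumed, simplified form: `value_loss` is a hypothetical helper, and the ML-Agents PPO trainer may add refinements such as value clipping on top of this basic idea.

```python
def value_loss(value_estimates: list[float], returns: list[float]) -> float:
    """Mean squared error between predicted state values and observed returns.

    A smaller result means the model predicts the value of each state more
    accurately. Simplified sketch; real trainers may clip or mask this loss.
    """
    if len(value_estimates) != len(returns) or not returns:
        raise ValueError("need two non-empty sequences of equal length")
    squared_errors = ((r - v) ** 2 for v, r in zip(value_estimates, returns))
    return sum(squared_errors) / len(returns)


if __name__ == "__main__":
    # The first prediction is off by 0.5, the second is exact.
    print(value_loss([0.5, 0.0], [1.0, 0.0]))
```

When the agent starts collecting new kinds of rewards, the returns shift and the predictions lag behind, so this error grows; once the reward stabilizes, the predictions catch up and it shrinks.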

Running a test with the brain of the float-action AI after 1 million training steps: