
fix: provider the latest duckduckgo_search API #5184


duckduckgo_search readme attention: Versions before v2.9.4 no longer work as of May 12, 2023

@vowelparrot I wonder why

The

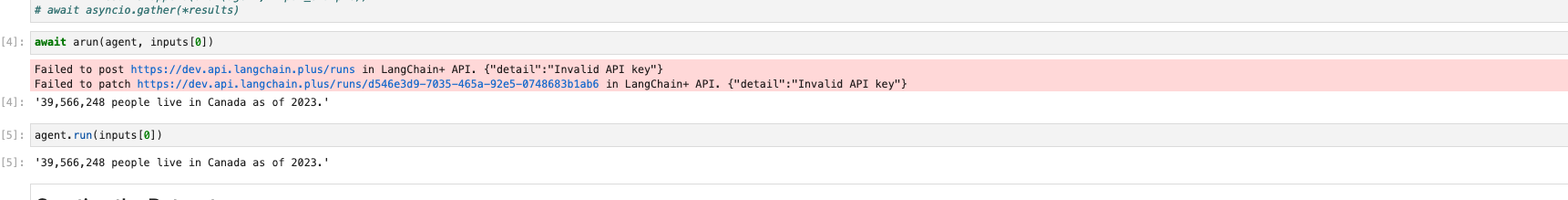

@vowelparrot I got the following problem. Do you have any ideas on how to solve it?

I'll try to get back to this later. I opened a PR on the weaviate client to bump the upper bound. The dependency resolution issue seems fairly common: python-poetry/poetry#697

Oh I see. It's a very common problem.

We can harness the

    for i, res in enumerate(results, 1):
        snippets.append(res['body'])
        if i == self.max_results:
            break
    return snippets

    ...

    for i, res in enumerate(results, 1):
        yield res['body']
        if i == self.max_results:
            return

    ...

In the latest version of duckduckgo_search, it is recommended to use a context manager:

    with DDGS() as ddgs:
        ddgs.text(...)
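The two loops in this comment can be combined into one lazy helper. This is a minimal sketch, not the code merged in the PR. The names `iter_snippets` and `search_snippets` are hypothetical, and the sketch assumes that `DDGS().text(...)` yields dictionaries with a `body` key, as the snippets above imply.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List


def iter_snippets(results: Iterable[Dict[str, str]], max_results: int) -> Iterator[str]:
    """Lazily yield the 'body' field of at most max_results results."""
    # islice stops pulling from the underlying iterator once the cap is reached.
    for res in islice(results, max_results):
        yield res["body"]


def search_snippets(query: str, max_results: int = 4) -> List[str]:
    """Hypothetical wrapper: run a DuckDuckGo text search and collect snippets."""
    # Imported lazily so iter_snippets can be used without the package installed.
    from duckduckgo_search import DDGS

    # Assumption: DDGS supports the context-manager protocol, as the comment states.
    with DDGS() as ddgs:
        return list(iter_snippets(ddgs.text(query), max_results))
```

Because `iter_snippets` only reads from an iterable, it can be exercised with fake results and no network access.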

OK, I'll fix it later. Previously, I was unable to pass the tests because of dependency version conflicts.

# Fix for docstring in faiss.py vectorstore (load_local)

The docstring should reflect that load_local loads something FROM the disk.

…-ai#5449)

This removes duplicate code presumably introduced by a cut-and-paste error, spotted while reviewing the code in `langchain/client/langchain.py`. The original code had back-to-back occurrences of the following code block:

```
response = self._get(
    path,
    params=params,
)
raise_for_status_with_text(response)
```

# What does this PR do?

Bring support of `encode_kwargs` for `HuggingFaceInstructEmbeddings`, change the docstring example and add a test to illustrate with `normalize_embeddings`.

Fixes langchain-ai#3605 (Similar to langchain-ai#3914)

Use case:

```python
from langchain.embeddings import HuggingFaceInstructEmbeddings

model_name = "hkunlp/instructor-large"
model_kwargs = {'device': 'cpu'}
encode_kwargs = {'normalize_embeddings': True}
hf = HuggingFaceInstructEmbeddings(
    model_name=model_name,
    model_kwargs=model_kwargs,
    encode_kwargs=encode_kwargs
)
```

…#5432) # Handles the edge scenario in which the action input is a well formed SQL query which ends with a quoted column There may be a cleaner option here (or indeed other edge scenarios) but this seems to robustly determine if the action input is likely to be a well formed SQL query in which we don't want to arbitrarily trim off `"` characters Fixes langchain-ai#5423 ## Who can review? Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested: For a quicker response, figure out the right person to tag with @ @hwchase17 - project lead Agents / Tools / Toolkits - @vowelparrot

# docs cleaning

Changed docs to consistent format (probably, we need an official doc integration template):

- ClearML - added product descriptions; changed title/headers
- Rebuff - added product descriptions; changed title/headers
- WhyLabs - added product descriptions; changed title/headers
- Docugami - changed title/headers/structure
- Airbyte - fixed title
- Wolfram Alpha - added descriptions, fixed title
- OpenWeatherMap - added product descriptions; changed title/headers
- Unstructured - changed description

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested: @hwchase17 @dev2049

# Added Async _acall to FakeListLLM FakeListLLM is handy when unit testing apps built with langchain. This allows the use of FakeListLLM inside concurrent code with [asyncio](https://docs.python.org/3/library/asyncio.html). I also changed the pydocstring which was out of date. ## Who can review? @hwchase17 - project lead @agola11 - async

# Add batching to Qdrant

Several people requested a batching mechanism while uploading data to Qdrant. It is important, as there are some limits for the maximum size of the request payload, and without batching implemented in Langchain, users need to implement it on their own. This PR exposes a new optional `batch_size` parameter, so all the documents/texts are loaded in batches of the expected size (64, by default).

The integration tests of Qdrant are extended to cover two cases:

1. Documents are sent in separate batches.
2. All the documents are sent in a single request.
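The batching idea in this commit message can be illustrated independently of Qdrant. The sketch below is not the code from that commit. `batched` is a hypothetical helper, and only the default size of 64 is taken from the message.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int = 64) -> Iterator[List[T]]:
    """Yield consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        # Pull the next chunk; an empty chunk means the input is exhausted.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch
```

Each yielded list could then be sent as one upload request, which keeps every payload under a size limit.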

Update [psychicapi](https://pypi.org/project/psychicapi/) python package dependency to the latest version 0.5. The newest python package version addresses breaking changes in the Psychic http api.

# Add maximal relevance search to SKLearnVectorStore This PR implements the maximum relevance search in SKLearnVectorStore. Twitter handle: jtolgyesi (I submitted also the original implementation of SKLearnVectorStore) ## Before submitting Unit tests are included. Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

…loader (langchain-ai#5466) # Adds ability to specify credentials when using Google BigQuery as a data loader Fixes langchain-ai#5465. Adds ability to set credentials which must be of the `google.auth.credentials.Credentials` type. This argument is optional and will default to `None`. Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

…the class BooleanOutputParser (langchain-ai#5397) When the LLM outputs 'yes|no', BooleanOutputParser can parse it to 'True|False'; this fixes the ValueError in parse(). Previously, when BooleanOutputParser was used in chain_filter.py and the LLM output 'yes|no', the function 'parse' would throw a ValueError. Fixes langchain-ai#5396 --------- Co-authored-by: gaofeng27692 <gaofeng27692@hundsun.com>

# Added support for modifying the number of threads in the GPT4All model

I have added the capability to modify the number of threads used by the GPT4All model. This allows users to adjust the model's parallel processing capabilities based on their specific requirements.

## Changes Made

- Updated the `validate_environment` method to set the number of threads for the GPT4All model using the `values["n_threads"]` parameter from the `GPT4All` class constructor.

## Context

Useful in scenarios where users want to optimize the model's performance by leveraging multi-threading capabilities. Please note that the `n_threads` parameter was included in the `GPT4All` class constructor but was previously unused. This change ensures that the specified number of threads is utilized by the model.

## Dependencies

There are no new dependencies introduced by this change. It only utilizes existing functionality provided by the GPT4All package.

## Testing

Since this is a minor change testing is not required.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Allow for async use of SelfAskWithSearchChain Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

…bject (langchain-ai#5321)

This PR adds a new method `from_es_connection` to the `ElasticsearchEmbeddings` class allowing users to use Elasticsearch clusters outside of Elastic Cloud. Users can create an Elasticsearch Client object and pass that to the new function. The returned object is identical to the one returned by calling `from_credentials`.

```
# Create Elasticsearch connection
es_connection = Elasticsearch(
    hosts=['https://es_cluster_url:port'],
    basic_auth=('user', 'password')
)

# Instantiate ElasticsearchEmbeddings using es_connection
embeddings = ElasticsearchEmbeddings.from_es_connection(
    model_id,
    es_connection,
)
```

I also added examples to the elasticsearch jupyter notebook

Fixes # langchain-ai#5239

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# SQLite-backed Entity Memory Following the initiative of langchain-ai#2397 I think it would be helpful to be able to persist Entity Memory on disk by default Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

Co-authored-by: David Revillas <26328973+r3v1@users.noreply.github.com>

# Support Qdrant filters Qdrant has an [extensive filtering system](https://qdrant.tech/documentation/concepts/filtering/) with rich type support. This PR makes it possible to use the filters in Langchain by passing an additional param to both the `similarity_search_with_score` and `similarity_search` methods. ## Who can review? @dev2049 @hwchase17 --------- Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

Example (would log several times if not for the helper fn. Would emit no logs due to multithreading previously)

# Introduces embaas document extraction api endpoints In this PR, we add support for embaas document extraction endpoints to Text Embedding Models (with LLMs, in different PRs coming). We currently offer the MTEB leaderboard top performers, will continue to add top embedding models and soon add support for customers to deploy their own models. Additional Documentation + Information can be found [here](https://embaas.io). While developing this integration, I closely followed the patterns established by other langchain integrations. Nonetheless, if there are any aspects that require adjustments or if there's a better way to present a new integration, let me know! :) Additionally, I fixed some docs in the embeddings integration. Related PR: langchain-ai#5976 #### Who can review? DataLoaders - @eyurtsev

Update the Run object in the tracer to extend that in the SDK to include the parameters necessary for tracking/tracing

…gchain-ai#5833 (langchain-ai#6077) This PR fixes the error `ModuleNotFoundError: No module named 'langchain.cli'` Fixes langchain-ai#5833 (issue)

…in-ai#6069) Add test and update notebook for `MarkdownHeaderTextSplitter`.

langchain-ai#6056)

This adds implementation of MMR search in pinecone; and I have two semi-related observations about this vector store class:

- Maybe we should also have a `similarity_search_by_vector_returning_embeddings` like in supabase, but it's not in the base `VectorStore` class so I didn't implement
- Talking about the base class, there's `similarity_search_with_relevance_scores`, but in pinecone it is called `similarity_search_with_score`; maybe we should consider renaming it to align with other `VectorStore` base and sub classes (or add that as an alias for backward compatibility)

#### Who can review?

Tag maintainers/contributors who might be interested:

- VectorStores / Retrievers / Memory - @dev2049


Makes it easier to then run evals w/o thinking about specifying a session

Add a callback handler that can collect nested run objects. Useful for evaluation.

Minor fix in documentation. Change URL in wget call to proper one.

Co-authored-by: thiswillbeyourgithub <github@32mail.33mail.com>


## Add Solidity programming language support for code splitter.

Twitter: @0xjord4n_

#### Who can review?

Tag maintainers/contributors who might be interested: @hwchase17

…ai#5922) The Confluence API supports different formats of page content. The storage format is the raw XML representation used for storage. The view format is the HTML representation for viewing, with macros rendered as users would see them. Add the `content_format` parameter to `ConfluenceLoader.load()` to specify the content format; it is set to `ContentFormat.STORAGE` by default. #### Who can review? Tag maintainers/contributors who might be interested: @eyurtsev --------- Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

@Undertone0809 is attempting to deploy a commit to the LangChain Team on Vercel. A member of the Team first needs to authorize it.

Oh, I don't know why there are so many commits. Perhaps I should create a new PR #6409

Provide the latest duckduckgo_search API

The commit touches two files related to DuckDuckGo query operations and upgrades the DuckDuckGo module to version 3.2.0. A suitable commit message would be "Upgrade DuckDuckGo module to version 3.2.0, including query operations". Specifically, in the duckduckgo_search.py file, a DDGS() class instance replaces the previous ddg() function, and the time parameter in the get_snippets() and results() methods is renamed from "time" to "timelimit" to accommodate recent changes. In the pyproject.toml file, the duckduckgo-search module is upgraded to version 3.2.0.
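For callers that still pass the old keyword, the rename from "time" to "timelimit" could be bridged with a small shim. This is only an illustrative sketch. `migrate_search_kwargs` is a hypothetical helper and is not part of this PR or of duckduckgo_search.

```python
from typing import Any, Dict


def migrate_search_kwargs(kwargs: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of kwargs with the legacy 'time' key renamed to 'timelimit'."""
    migrated = dict(kwargs)  # copy so the caller's dict is left untouched
    if "time" in migrated:
        legacy_value = migrated.pop("time")
        # If both keys were supplied, the explicit 'timelimit' wins.
        migrated.setdefault("timelimit", legacy_value)
    return migrated
```

The returned dictionary can then be unpacked into the new-style call, so older call sites keep working while they are updated.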

Who can review?

@vowelparrot