
Description

I noticed that when I moved this solution from OpenAI to AzureOpenAI (same model), it produced unexpected results.

After digging into it, I discovered that there may be a problem with the way `RetrievalQAWithSourcesChain.from_chain_type` uses the LLM, specifically with the `map_reduce` chain.

(It does not seem to occur with the `refine` chain, which works as expected.)

Here is the full example:

I ingested a website and embedded it into the ChromaDB vector database.

I am using the same DB for both of my tests side by side.

I created a "loader" (text interface) pointed at OpenAI, using the `text-davinci-003` model with a temperature of 0 and the ChromaDB embeddings.

Asking this question: "What paid holidays do staff get?"

I get this answer:

Answer: Staff get 12.5 paid holidays, including New Year's Day, Martin Luther King, Jr. Day, Presidents' Day, Memorial Day, Juneteenth, Independence Day, Labor Day, Columbus Day/Indigenous Peoples' Day, Veterans Day, Thanksgiving, the Friday after Thanksgiving, Christmas Eve 1/2 day, and Christmas Day, plus a Winter Recess during the week between Christmas and New Year.

Source: https://website-redacted/reference1, https://website-redacted/reference2

After moving this loader over to AzureOpenAI:

Asking the same question: "What paid holidays do staff get?"

I get this answer:

I don't know what paid holidays staff get.

There are only three changes to move from OpenAI to AzureOpenAI:

1.) Removing this:

```python
import os

oaikey = "..."
os.environ["OPENAI_API_KEY"] = oaikey
```

And switching it out for:

```shell
export OPENAI_API_TYPE="azure"
export OPENAI_API_VERSION="2023-03-15-preview"
export OPENAI_API_BASE="https://endpoit-redacted.openai.azure.com/"
export OPENAI_API_KEY="..."
```

2.) Changing my OpenAI initializer to use `deployment_name` instead of `model_name`, from:

```python
temperature = 0
embedding_model = "text-embedding-ada-002"

openai = OpenAI(
    model_name="text-davinci-003",
    temperature=temperature,
)
```

to:

```python
deployment_id = "davinci"  # Azure deployment name (set correctly; see the screenshot below)
embedding_model = "text-embedding-ada-002"
temperature = 0

openai = AzureOpenAI(
    deployment_name=deployment_id,
    temperature=temperature,
)
```

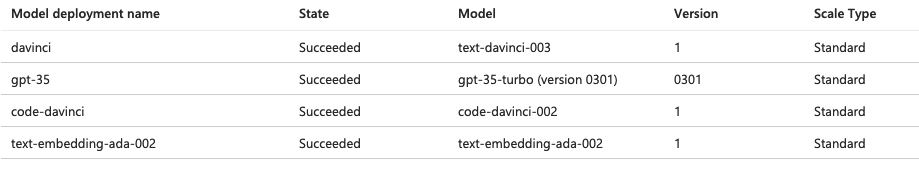

Here are the Azure models we have:

3.) Changing the LangChain import from:

```python
from langchain.llms import OpenAI
```

to:

```python
from langchain.llms import AzureOpenAI
```

Everything else stays the same.
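To run both tests from one script, the three changes can be gathered into a single switch. The following is a minimal, library-free sketch, not a LangChain API: `configure_provider` is a hypothetical helper that sets the same `OPENAI_*` variables through `os.environ` (instead of shell `export`s) and returns the class name plus the constructor keyword arguments (`model_name` for OpenAI, `deployment_name` for Azure) that would then be passed to `OpenAI(...)` or `AzureOpenAI(...)`. The key, API version, endpoint, and deployment name are the placeholders from this issue.

```python
import os


# Hypothetical helper (not part of LangChain): centralizes the three
# OpenAI -> AzureOpenAI changes. It only prepares the environment and returns
# the LLM class name and the kwargs to construct it with.
def configure_provider(provider, api_key, temperature=0):
    if provider == "openai":
        os.environ["OPENAI_API_KEY"] = api_key
        # Drop any Azure settings so they cannot leak into an OpenAI run.
        for var in ("OPENAI_API_TYPE", "OPENAI_API_VERSION", "OPENAI_API_BASE"):
            os.environ.pop(var, None)
        return "OpenAI", {"model_name": "text-davinci-003", "temperature": temperature}
    if provider == "azure":
        os.environ["OPENAI_API_TYPE"] = "azure"
        os.environ["OPENAI_API_VERSION"] = "2023-03-15-preview"
        os.environ["OPENAI_API_BASE"] = "https://endpoit-redacted.openai.azure.com/"
        os.environ["OPENAI_API_KEY"] = api_key
        # Azure addresses the model by its deployment name, not the model name.
        return "AzureOpenAI", {"deployment_name": "davinci", "temperature": temperature}
    raise ValueError(f"unknown provider: {provider!r}")


if __name__ == "__main__":
    cls_name, kwargs = configure_provider("azure", api_key="...")
    print(cls_name, kwargs)
```

Call it before the LLM object is constructed. It may also need to run before `openai` is imported, since the pre-1.0 `openai` package appears to read some of these variables once at import time (an assumption worth checking against the installed version).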

The key point is that it seems to fail specifically with the `map_reduce` chain on Azure:

```python
qa = RetrievalQAWithSourcesChain.from_chain_type(
    llm=openai,
    chain_type="map_reduce",
    retriever=retriever,
    return_source_documents=True,
)
```

I have tried this both with custom `chain_type_kwargs` overriding the question and combine prompts, and without them (using the defaults).

It fails in both cases with Azure, but works exactly as expected with OpenAI.

Again, with Azure this seems to fail specifically in the `map_reduce` chain, while `refine` does produce results.

With OpenAI, it works as expected with both chain types.
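To make the structural difference between the two chain types concrete, here is a simplified, library-free sketch of both strategies driven by a stub LLM. It is not LangChain's implementation; `map_reduce`, `refine`, and `RecordingLLM` are hypothetical names. It assumes (worth verifying against the installed LangChain version) that `map_reduce` sends all per-document "map" prompts to the LLM together as one batched request and then makes a single "combine" call over the extracts, whereas `refine` makes one sequential call per document. In `map_reduce`, the final answer can only draw on what the map step extracted.

```python
from typing import Callable, List

# Hypothetical stub interface: takes a batch of prompts and returns one
# completion per prompt, like a batched completion request.
BatchLLM = Callable[[List[str]], List[str]]


def map_reduce(llm: BatchLLM, question: str, docs: List[str]) -> str:
    # Map step: one prompt per document, all sent together in one batched call.
    map_prompts = [f"Question: {question}\nText:\n{d}\nRelevant extract:" for d in docs]
    extracts = llm(map_prompts)
    # Combine step: a single call over the non-empty extracts. If every
    # extract is empty, this prompt has no context left to answer from.
    context = "\n".join(e for e in extracts if e.strip())
    return llm([f"Question: {question}\nExtracts:\n{context}\nAnswer:"])[0]


def refine(llm: BatchLLM, question: str, docs: List[str]) -> str:
    if not docs:
        raise ValueError("refine needs at least one document")
    # Initial answer from the first document, then one call per remaining
    # document; every call is a batch of size one.
    answer = llm([f"Question: {question}\nText:\n{docs[0]}\nAnswer:"])[0]
    for d in docs[1:]:
        answer = llm([f"Question: {question}\nExisting answer: {answer}\n"
                      f"New text:\n{d}\nRefined answer:"])[0]
    return answer


class RecordingLLM:
    """Stub LLM that records the size of each batch it receives."""

    def __init__(self) -> None:
        self.batch_sizes: List[int] = []

    def __call__(self, prompts: List[str]) -> List[str]:
        self.batch_sizes.append(len(prompts))
        return [f"completion {i}" for i in range(len(prompts))]


if __name__ == "__main__":
    docs = ["doc A", "doc B", "doc C", "doc D"]
    question = "What paid holidays do staff get?"

    llm = RecordingLLM()
    map_reduce(llm, question, docs)
    print(llm.batch_sizes)  # [4, 1]

    llm = RecordingLLM()
    refine(llm, question, docs)
    print(llm.batch_sizes)  # [1, 1, 1, 1]
```

In this toy, only `map_reduce` ever sends more than one prompt in a single call.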