-

Notifications

You must be signed in to change notification settings - Fork 1

Binary Search

Note

Objectives

- Identify a logarithmic sequence.

- Analyze the runtime of a recursive algorithm using recurrences.

Code: BinarySearch.java

Cool: Nonverbal instructions for Binary Search

Sequential search returns the index of an element in an array in worst case linear time by scanning across the array and comparing each element to the target. Although linear time algorithms are much more efficient than quadratic time algorithms, there are many situations where we need to make a large number of searches on a large amount of data. A linear time algorithm like worst case sequential search might have the following real-world runtimes.

- When

$N$ = 1 million, about 1 second. - When

$N$ = 10 million, about 10 seconds. - When

$N$ = 100 million, about 100 seconds.

Imagine how differently we would interact with technologies if search results took 100 or more seconds to process. Sorting elements is one way to enable more efficient searching.

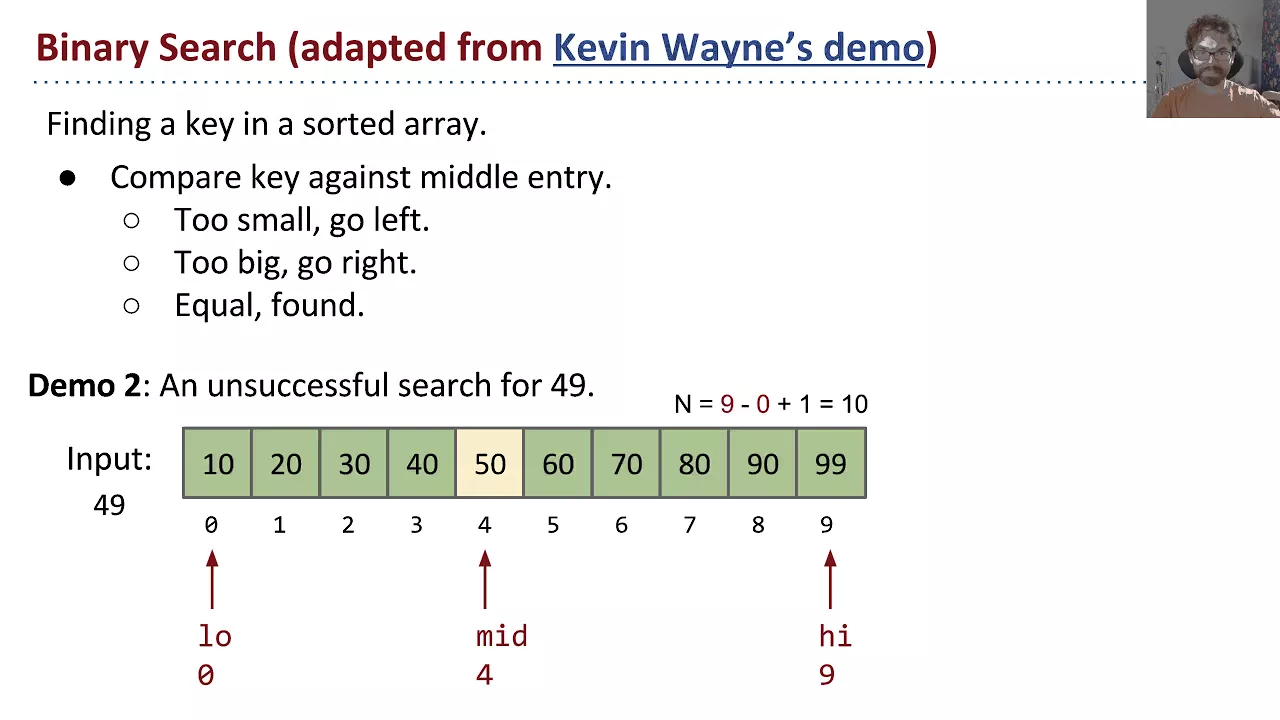

Binary search returns the index of an element in a sorted array in worst case logarithmic time by using the sorted order to discard half the remaining elements after each comparison. Instead of checking each element one-by-one from left-to-right in the array, binary search instead starts at the middle of the current problem and compares to the middle element to decide whether the proceed left or right. Watch the video through the 3:08 mark.

public static int binarySearch(int[] sorted, int target) {

return binarySearch(sorted, target, 0, sorted.length);

}

private static int binarySearch(int[] sorted, int target, int low, int high) {

if (low > high) {

return -1;

}

int N = high - low;

int mid = low + (high - low) / 2;

if (sorted[mid] < target) {

return binarySearch(sorted, target, mid + 1, high);

} else if (sorted[mid] > target) {

return binarySearch(sorted, target, low, mid - 1);

} else {

return mid;

}

}When does the best case runtime occur for binary search?

In the best case, the target is the exact middle element in the sorted array, which can be found in constant time.

How about the worst case? In the worst case, we don't find the target immediately. In fact, we might not even find the target at all in the array, and instead return -1. In each recursive call, half the remaining elements under consideration can be discarded. In other words, the number of recursive calls is given by the answer to the question, How many times do we need to divide

This is the definition of the base-2 logarithm, often written as either binarySearch is logarithmic with respect to target or report that it's not found by returning -1.

How long would a logarithmic time algorithm take to process large amounts of data? Let's compute the base-2 logarithm for big data.

- The base-2 logarithm of

$N$ = 1 million is about 20. - The base-2 logarithm of

$N$ = 10 million is about 23. - The base-2 logarithm of

$N$ = 100 million is about 27.

The runtime of a recursive program like binary search can be more effectively modeled using recurrence relations. Recurrence relations (aka recurrences) are recursive equations that represent the order of growth for a function in two parts: (1) non-recursive work and (2) recursive work. A recurrence relation describing the worst-case asymptotic runtime for binary search is

- On the left hand side of the equals sign,

$T(N)$ refers to the runtime for binary search in terms of the size of the current subproblem,$N$ . In the code above, note thatN = high - low. - On the right hand side of the equals sign, there are two components. The recursive work is given by the expression

$T(N / 2)$ because binary search makes a single recursive call to itself on a subproblem of half the size. The non-recursive work is given by the expression 1 because, ignoring the recursive calls, binary search spends a constant amount of time comparingsorted[mid] < target.

One way to solve a recurrence is by unrolling the recurrence, an approach that works by plugging the recurrence back into itself.

$T(N) = T(N / 2) + 1$ $T(N) = [T(N / 4) + 1] + 1$ $T(N) = [[T(N / 8) + 1] + 1] + 1$ $T(N) = [[[T(N / 16) + 1] + 1] + 1] + 1$

We can start to see a pattern emerge. The recursive work will eventually go down to sorted[mid] to the target? Put another way, we can ask: How many times do we need to divide

Kevin Lin ©️ 2025 CC BY-NC-SA 4.0