This project aims to provide a guide for generating Llama.cpp GGUF model files from base Llama v2 model weights.

To run this project locally, you have two options:

- Use VS Code's Dev Container [Preferred].
- Create your own virtual environment.

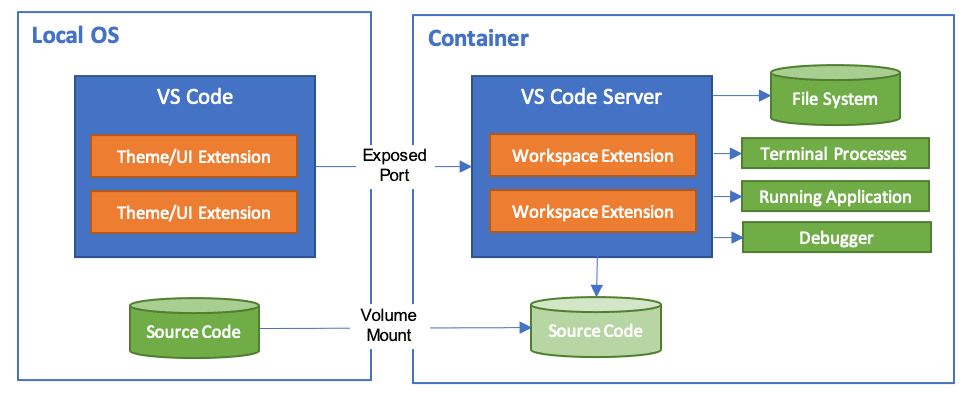

The Visual Studio Code Dev Containers extension lets you use a container as a full-featured development environment. It allows you to open any folder inside (or mounted into) a container and take advantage of Visual Studio Code's full feature set.

- Docker installed locally.
- VS Code Dev Containers Extension.
  - Name: Dev Containers
  - Id: ms-vscode-remote.remote-containers
  - Description: Open any folder or repository inside a Docker container and take advantage of Visual Studio Code's full feature set.
  - Version: 0.347.0
  - Publisher: Microsoft
  - VS Marketplace Link: https://marketplace.visualstudio.com/items?itemName=ms-vscode-remote.remote-containers

For more, please refer to: System Requirements

If you meet the requirements, you can get started by just opening this project in VS Code, and selecting Open in Dev Container.

If the message above doesn't appear, press Ctrl + Shift + P on your keyboard and type: dev container.

Select: Open in Dev Container.

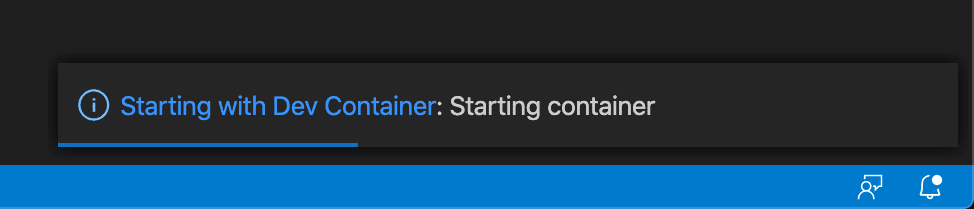

This should display a status bar like the example below:

After the container starts successfully, you are ready to use and add features to the code.

Open the terminal and run the following commands:

- Create virtual environment: `python -m venv .venv`
- Activate virtual environment
  - For Windows: `.venv\Scripts\activate`
  - For Mac/Linux: `source .venv/bin/activate`
- Install dependencies: `python -m pip install -r .devcontainer/requirements.txt`

After the dependencies install successfully, you are ready to use and add features to the code.
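To confirm that the virtual environment is actually active before you start working, a minimal check like the one below can help. It is only a sketch: the function name `in_virtual_env` is made up for illustration, and it relies on the standard-library behavior that `sys.prefix` differs from `sys.base_prefix` inside an environment created with `python -m venv`.

```python
import sys


def in_virtual_env() -> bool:
    """Return True when the interpreter runs inside a venv-created environment."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtual_env())
    print("interpreter:", sys.executable)
```

If the first line prints `False`, the activation step was probably skipped in the current terminal session.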

- Hugging Face account. Can be created here.
- Hugging Face API Token. Can be requested here.
- Request access to Meta's Llama v2 models. Can be requested here.

📝 NOTE: A Hugging Face Token is needed to access gated models like Llama v2 and to authenticate with the Hugging Face CLI.
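One possible way to hand the token to Python code without hard-coding it is to read it from the `HF_TOKEN` environment variable and pass it to `huggingface_hub.login`. The sketch below is not taken from the project's notebooks: the helper names `resolve_hf_token` and `login_with_token` are hypothetical, and it assumes the `huggingface_hub` package is installed.

```python
import os
from typing import Mapping, Optional


def resolve_hf_token(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the token stored in HF_TOKEN, or raise if it is missing."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError("HF_TOKEN is not set; create a Hugging Face token first.")
    return token


def login_with_token(token: str) -> None:
    """Authenticate this machine with the Hugging Face Hub."""
    # Imported lazily so the helper above works without huggingface_hub installed.
    from huggingface_hub import login

    login(token=token)
```

Keeping the token in an environment variable also keeps it out of notebook outputs and version control.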

To download a model from Hugging Face, run the notebook Model Downloader.

The output of this notebook should be the desired Hugging Face model, saved inside the models directory.
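For readers who want to see what such a download might look like outside the notebook, here is a minimal sketch using `huggingface_hub.snapshot_download`. The notebook itself may work differently: the helper names, the one-folder-per-model layout under `models`, and the example repository id `meta-llama/Llama-2-7b-hf` are all assumptions made for illustration.

```python
from pathlib import Path


def target_dir(repo_id: str, root: str = "models") -> Path:
    """Map a repo id such as 'org/name' to a folder under the models directory."""
    # Assumed layout: models/<name>, dropping the organization prefix.
    return Path(root) / repo_id.split("/")[-1]


def download_model(repo_id: str, token: str, root: str = "models") -> Path:
    """Download every file of a (possibly gated) model repository."""
    # Imported lazily so target_dir works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    destination = target_dir(repo_id, root)
    snapshot_download(repo_id=repo_id, local_dir=str(destination), token=token)
    return destination
```

Under these assumptions, calling `download_model("meta-llama/Llama-2-7b-hf", token)` would place the files in `models/Llama-2-7b-hf`, provided access to the gated repository has been granted.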

To convert the downloaded Llama model from the step above, run the notebook GGUF Converter.

This cell is responsible for converting the model to a GGUF-compatible format.
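A quick sanity check after conversion is to confirm that the output file starts with the GGUF magic bytes. The sketch below is not part of the notebook; the function name `looks_like_gguf` is hypothetical. It only inspects the first four bytes, so it cannot tell whether the rest of the file is valid.

```python
GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def looks_like_gguf(path: str) -> bool:
    """Return True when the file at `path` begins with the GGUF magic bytes."""
    with open(path, "rb") as handle:
        return handle.read(4) == GGUF_MAGIC
```

Passing the path of the converted model file should return `True`; a `False` result suggests the conversion step wrote something else or did not finish.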

The remaining part of the notebook quantizes the model (to reduce its memory footprint, though this may degrade response quality) and uploads it to Hugging Face.
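To get a feel for why quantization reduces the memory footprint, the back-of-the-envelope estimate below multiplies the parameter count by the number of bits stored per weight. It is a rough sketch: the 7 billion parameter count is an assumed example, and real quantized formats also store scaling data, so actual files are somewhat larger than these figures.

```python
def weight_size_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    n_params = 7e9  # assumed example: a model with 7 billion parameters
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: {weight_size_gib(n_params, bits):.1f} GiB")
```

For this example, the estimate drops from roughly 13 GiB at 16 bits per weight to roughly 3.3 GiB at 4 bits per weight.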