[autoparallel] Patch meta information of torch.tanh() and torch.nn.Dropout

#2773

Merged

YuliangLiu0306

merged 61 commits into

hpcaitech:main

from

Cypher30:feature/tanh_metainfo

Feb 22, 2023

Merge ColossalAI

Daily merge

torch.tanh() and torch.nn.Dropout

The code coverage for the changed files is 45%.

The code coverage for the changed files is 44%.

YuliangLiu0306

approved these changes

Feb 22, 2023

📌 Checklist before creating the PR

[doc/gemini/tensor/...]: A concise description

🚨 Issue number

📝 What does this PR do?

In this PR, I patch meta information of

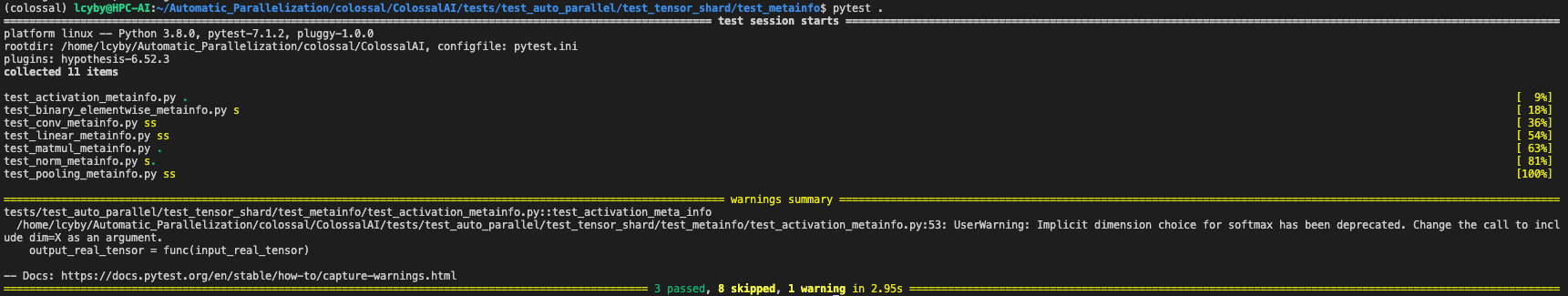

torch.tanh()andtorch.nn.Dropout. I also modify all the meta information generators inauto_parallel/meta_profiler/meta_registry/activation.pyand turn them into the same template so that we have cleaner code. We could think about refactoring more code inmeta_registryin the future.Again, the test is not supported on torch 1.11.0, so I attach the results here
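For readers unfamiliar with the idea, here is a rough sketch of what a shared template for elementwise meta information generators could look like. It is not the code from this PR and does not use the Colossal-AI API: `MetaInfo`, `_elementwise_meta`, `tanh_meta`, and `dropout_meta` are hypothetical names. The cost model is also an assumption: one FLOP per element in each direction, tanh keeping its output for the backward pass, and dropout keeping a one-byte-per-element mask.

```python
from dataclasses import dataclass
from math import prod
from typing import Sequence


@dataclass(frozen=True)
class MetaInfo:
    """Hypothetical container for the estimated cost of one operator."""
    fwd_flops: int         # estimated forward compute
    bwd_flops: int         # estimated backward compute
    saved_bytes: int       # memory kept alive between forward and backward
    grad_input_bytes: int  # memory for the gradient w.r.t. the input


def _elementwise_meta(shape: Sequence[int], dtype_bytes: int,
                      saved_bytes_per_elem: int) -> MetaInfo:
    """Shared template: every elementwise op differs only in what it saves."""
    numel = prod(shape)
    return MetaInfo(
        fwd_flops=numel,   # assumption: one FLOP per element
        bwd_flops=numel,   # assumption: one FLOP per element
        saved_bytes=numel * saved_bytes_per_elem,
        grad_input_bytes=numel * dtype_bytes,
    )


def tanh_meta(shape: Sequence[int], dtype_bytes: int = 4) -> MetaInfo:
    # Assumption: tanh saves its output, which has the input's dtype.
    return _elementwise_meta(shape, dtype_bytes, saved_bytes_per_elem=dtype_bytes)


def dropout_meta(shape: Sequence[int], dtype_bytes: int = 4) -> MetaInfo:
    # Assumption: dropout saves a boolean mask, one byte per element.
    return _elementwise_meta(shape, dtype_bytes, saved_bytes_per_elem=1)
```

With a single helper, adding another activation only means stating what it saves for the backward pass, which is the kind of deduplication the description above aims for.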

💥 Checklist before requesting a review

⭐️ Do you enjoy contributing to Colossal-AI?

Tell us more if you don't enjoy contributing to Colossal-AI.