
eth/downloader: refactor downloader queue #20236


|

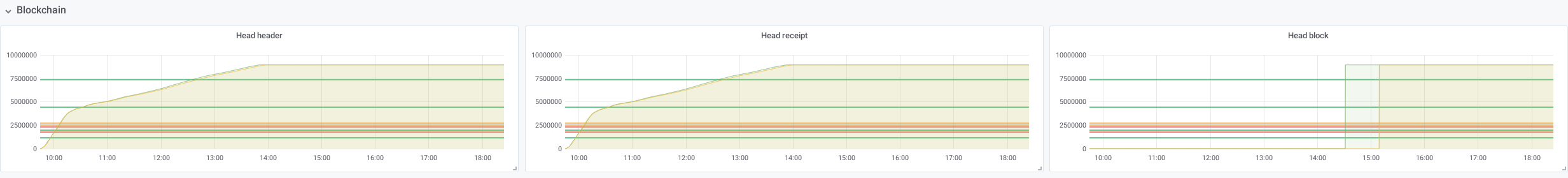

This is now doing a fast-sync on the benchmarkers: https://geth-bench.ethdevops.io/d/Jpk-Be5Wk/dual-geth?orgId=1&var-exp=mon08&var-master=mon09&var-percentile=50&from=1572876506497&to=now |

7d58a97 to eed7fe6

|

Finally got greenlighted by travis. Will do one more fastsync-benchmark and post results |

|

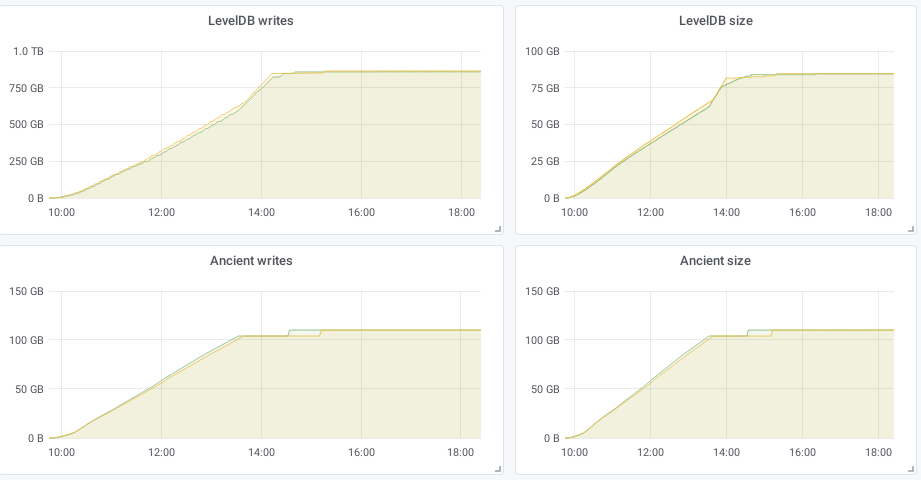

Fast-sync done (https://geth-bench.ethdevops.io/d/Jpk-Be5Wk/dual-geth?orgId=1&from=1573721066023&to=1573752540000&var-exp=mon06&var-master=mon07&var-percentile=50) , some graphs (this PR in yellow) Also, totally unrelated, it's interesting to see that there's a 10x write amplification on leveldb (750Gb written, 75G stored), and a perfect 1x on ancients: |

    throttleThreshold := uint64((common.StorageSize(blockCacheMemory) + q.resultSize - 1) / q.resultSize)
    q.resultCache.SetThrottleThreshold(throttleThreshold)
    // log some info at certain times
    if time.Now().Second()&0xa == 0 {

bleh

indeed :P

eth/downloader/queue.go

Outdated

    delete(q.receiptDonePool, hash)
    closed = q.closed
    q.lock.Unlock()
    results = q.resultCache.GetCompleted(maxResultsProcess)

I think the condition variable should be in resultStore. This means closed needs to move into the resultStore as well.

Well, that totally makes sense, but it means an even larger refactor. Then closed would have to move in there, and the things that call Signal would need to somehow trigger that via the resultStore.

Let's leave that for a future refactor (I'd be happy to continue iterating on the downloader)
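For reference, a rough sketch of what that future refactor could look like, with the condition variable and the closed flag both living inside the store. This is not code from this PR: the type layout and the `Deliver`, `Close` and `WaitCompleted` methods are hypothetical, and plain ints stand in for `*fetchResult`.

```go
package main

import (
	"fmt"
	"sync"
)

// resultStore is a hypothetical, simplified stand-in for the type discussed
// above. It owns its lock, its condition variable and its closed flag.
type resultStore struct {
	lock   sync.Mutex
	cond   *sync.Cond
	done   []int // completed results in order (ints stand in for *fetchResult)
	closed bool
}

func newResultStore() *resultStore {
	r := &resultStore{}
	r.cond = sync.NewCond(&r.lock)
	return r
}

// Deliver records a completed result and wakes up one waiting consumer.
func (r *resultStore) Deliver(v int) {
	r.lock.Lock()
	r.done = append(r.done, v)
	r.lock.Unlock()
	r.cond.Signal()
}

// Close marks the store as closed and wakes up all waiters.
func (r *resultStore) Close() {
	r.lock.Lock()
	r.closed = true
	r.lock.Unlock()
	r.cond.Broadcast()
}

// WaitCompleted blocks until at least one result is available or the store
// is closed, then returns up to limit results together with the closed flag.
func (r *resultStore) WaitCompleted(limit int) ([]int, bool) {
	r.lock.Lock()
	defer r.lock.Unlock()
	for len(r.done) == 0 && !r.closed {
		r.cond.Wait()
	}
	n := len(r.done)
	if n > limit {
		n = limit
	}
	out := append([]int(nil), r.done[:n]...)
	r.done = r.done[n:]
	return out, r.closed
}

func main() {
	rs := newResultStore()
	go func() {
		rs.Deliver(1)
		rs.Deliver(2)
		rs.Close()
	}()
	for {
		res, closed := rs.WaitCompleted(10)
		fmt.Println(res, closed)
		if closed && len(res) == 0 {
			break
		}
	}
}
```

With this shape, callers never touch the condition variable directly: anything that currently calls Signal would go through `Deliver` or `Close` instead.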

cfd3f18 to a0f30ba

core/types/block.go

Outdated

    }

    // EmptyBody returns true if there is no additional 'body' to complete the header
    // that is: no transactions and no uncles

.

core/types/block.go

Outdated

    	return h.TxHash == EmptyRootHash && h.UncleHash == EmptyUncleHash
    }

    // EmptyReceipts returns true if there are no receipts for this header/block

.

eth/downloader/downloader.go

Outdated

      headers := packet.(*headerPack).headers
      if len(headers) != 1 {
    -     p.log.Debug("Multiple headers for single request", "headers", len(headers))
    +     p.log.Info("Multiple headers for single request", "headers", len(headers))

I think we should possibly raise this to Warn

eth/downloader/downloader.go

Outdated

      headers := packer.(*headerPack).headers
      if len(headers) != 1 {
    -     p.log.Debug("Multiple headers for single request", "headers", len(headers))
    +     p.log.Info("Multiple headers for single request", "headers", len(headers))

I think we should possibly raise this to Warn

eth/downloader/downloader.go

Outdated

      header := d.lightchain.GetHeaderByHash(h) // Independent of sync mode, header surely exists
      if header.Number.Uint64() != check {
    -     p.log.Debug("Received non requested header", "number", header.Number, "hash", header.Hash(), "request", check)
    +     p.log.Info("Received non requested header", "number", header.Number, "hash", header.Hash(), "request", check)

I think we should possibly raise this to Warn

eth/downloader/downloader.go

Outdated

    	delay = n
    }
    headers = headers[:n-delay]
    ignoredHeaders = delay

Probably simpler to replace delay altogether with ignoredHeaders, instead of defining a separate delay variable and then just assigning it at the end.
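Something along these lines, as a standalone sketch rather than the actual downloader code. The `trimTail` helper and its `maxIgnored` parameter are made up for illustration, and `uint64` values stand in for headers.

```go
package main

import "fmt"

// trimTail drops up to maxIgnored items from the end of headers and reports
// how many were dropped. It writes straight into ignoredHeaders instead of
// going through a temporary delay variable. maxIgnored is assumed to be >= 0.
func trimTail(headers []uint64, maxIgnored int) (kept []uint64, ignoredHeaders int) {
	n := len(headers)
	ignoredHeaders = maxIgnored
	if ignoredHeaders > n {
		ignoredHeaders = n
	}
	return headers[:n-ignoredHeaders], ignoredHeaders
}

func main() {
	kept, ignored := trimTail([]uint64{1, 2, 3, 4, 5}, 2)
	fmt.Println(kept, ignored) // prints: [1 2 3] 2
}
```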

…tandalone resultcache

… in state requests

99d1503 to 060e2c0

Rebased

Closing in favour of #21263

This PR contains a massive refactoring in the downloader + queue area. It's not quite ready to be merged yet; I'd like to see how the tests perform first.

Todo: add some more unit-tests regarding the resultstore implementation, and the queue.

Throttling

Previously, we had a `doneQueue`, which was a map where we kept track of all downloaded items (receipts, block bodies). This map was updated when deliveries came in, and cleaned when results were pulled from the `resultCache`. It was quite finicky, and modifications to how the download functioned were dangerous: if these were not kept in check, it was possible that the `doneQueue` would blow up.

It was also quite resource intensive, where a lot of counting and cross-checking was going on between the various pools and queues.

This has now been reworked, so that `resultCache` maintains (like previously) a slice of `*fetchResult`s, with a length of `blockCacheLimit * 2`. `resultCache` also knows that it should only consider the first `75%` of available slots to be up for filling. Thus, when a `reserve` request comes in (we want to give a task to a peer), the `resultCache` checks if the proposed download-task is in that priority segment. Otherwise, it flags for throttling.

This means I could drop all `donePool` thingies, which simplified things a bit.

Concurrency

Previously, the queue maintained one lock to rule them all. Now, the `resultCache` has its own lock, and can handle concurrency internally. This means that body and receipt fetch/delivery can happen simultaneously, and also that verification (hashing) of the bodies/receipts doesn't block other threads waiting for the lock.
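To illustrate the throttling and locking scheme described above, here is a minimal sketch under simplifying assumptions. It is not the code in this PR: the `Reserve` signature, the `offset` field and the constructor are hypothetical, and the real `fetchResult` carries far more state.

```go
package main

import (
	"fmt"
	"sync"
)

// fetchResult is a placeholder for one in-flight download result.
type fetchResult struct{ number uint64 }

// resultStore is a simplified sketch of a result cache that owns its lock
// and only hands out slots inside a "priority segment" of its window.
type resultStore struct {
	lock              sync.Mutex
	items             []*fetchResult // fixed-size window of result slots
	offset            uint64         // block number that maps to items[0]
	throttleThreshold uint64         // only slot indexes below this may be filled
}

// newResultStore creates a store where the first 75% of slots can be filled.
func newResultStore(size int) *resultStore {
	return &resultStore{
		items:             make([]*fetchResult, size),
		throttleThreshold: uint64(size) * 3 / 4,
	}
}

// Reserve tries to allocate the slot for the given block number.
// stale is true if the number lies before the window; throttle is true if
// it lies beyond the priority segment and the caller should back off.
func (r *resultStore) Reserve(number uint64) (res *fetchResult, throttle, stale bool) {
	r.lock.Lock()
	defer r.lock.Unlock()

	if number < r.offset {
		return nil, false, true
	}
	index := number - r.offset
	if index >= r.throttleThreshold {
		return nil, true, false
	}
	if r.items[index] == nil {
		r.items[index] = &fetchResult{number: number}
	}
	return r.items[index], false, false
}

func main() {
	rs := newResultStore(8) // threshold is 6 slots
	_, t5, _ := rs.Reserve(5)
	_, t6, _ := rs.Reserve(6)
	fmt.Println(t5, t6) // prints: false true
}
```

Because the store takes only its own lock, callers that deliver bodies and callers that deliver receipts contend on this small critical section rather than on a queue-wide lock.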

Previously, I think it was kind of racy when setting the `Pending` on the `fetchResult`. This has been fixed.

Tests

The downloader tests failed quite often; when receipts are added in the backend, the headers (`ownHeaders`) were deleted and moved into `ancientHeaders`. If this happened quickly enough, the next batch of headers errored with `unknown parent`. This has been fixed so the backend also queries `ancientHeaders` for header existence.

Minor changes

`rtt` measurements are a bit closer to the truth.