An Unreal Engine plugin that helps you use AI and ML techniques in your Unreal Engine project.

News | Document | Download | M4U Remoting Android App (Open Source) | Speech Model Packages

Free Edition vs Commercial Edition

Demo Projects: Full Demo (Windows) | Android Demo

MediaPipe4U provides a suite of libraries and tools that allow you to quickly apply artificial intelligence (AI) and machine learning (ML) techniques to your Unreal Engine projects. You can integrate these solutions into your UE project immediately and customize them to meet your needs. The suite includes motion capture, facial expression capture for your 3D avatar, text-to-speech (TTS), speech recognition (ASR), and more. All features are real-time, offline, low-latency, and easy to use.

- [new] Unreal Engine 5.6 support.

- [fix] When using NvAR to capture head rotation, negative angle values were handled incorrectly. #122

- [fix] Packaging failed on Unreal Engine 5.3 or earlier. #237

- [new] 🌈 The free version now supports packaging all features, including voice and facial expression capture.

- [new] 🌈 Ollama support: Integrated with Ollama for large language model inference, enabling support for various LLMs such as DeepSeek, LLaMA, Phi, Qwen, QWQ, and more.

- [new] 🌈 Dialogue component LLMSpeechChatRuntime: integrates LLM, TTS, and ASR, making it easy to build chatbot functionality within Blueprints.

- [new] 🌈 Added new TTS support: Kokoro, Melo.

- [new] 🌈 Added new ASR support: FunASR (Chinese-English with hotword support), FireRedASR (Chinese-English/Dialects), MoonShine (English), SenseVoice (Multilingual: Chinese/English/Japanese/Korean/Cantonese).

- [new] 🌈 Added a Transformer-based TTS model: F5-TTS, capable of zero-shot voice cloning (supports inference with DirectML/CUDA on both AMD and Nvidia GPUs).

- [new] 🌈 Voice wake-up: lightweight model-based wake-up that supports custom wake words to activate ASR, plus a separate voice command trigger.

- [new] 🔥 Upgraded to the latest version of Google MediaPipe.

- [new] 🔥 Added support for Unreal Engine 5.5.

- [new] 🌈 Integrated NvAR pose tracking, allowing switching between MediaPipe and Nvidia Maxine algorithms.

- [new] 🌈 Open-sourced MediaPipe4U Remoting (Android facial module for MediaPipe4U).

- [new] 💫 Added custom MediaPipe connector (C++): lets you completely replace Google MediaPipe with an implementation of your choice (#195, #204).

- [new] 💫 Added custom MediaPipe feature (C++): lets you partially replace Google MediaPipe with an implementation of your choice (#195, #204).

- [new] 🌈 Added a new Android Demo project (GitLab).

- [improve] 👣 Demo project upgraded to UE 5.5, and added Fake Demo, a C++ extension example that reads local files instead of using MediaPipe.

- [improve] 👣 Demo project now includes voice wake-up examples and a speaker selection demo.

- [improve] 👣 Demo project now includes LLM integration example.

- [improve] 👣 Demo project now includes voice chatbot example.

- [improve] 👣 Demo project now supports packaging.

- [breaking change] 💥 ⚠️ ⚠️ ⚠️ Motion capture features have been moved to a new plugin: MediaPipe4UMotion. This may break your existing Blueprints; please update them after upgrading.

- [breaking change] 💥 ⚠️ ⚠️ ⚠️ Due to changes in the license format, old licenses are no longer valid. You can now obtain a free license from here.

Speech model downloads have moved to Hugging Face.

Currently, only the Unreal Engine 5.5 build is available; builds for other versions will be packaged and released later. Sorry for the inconvenience.

- The new Google Holistic Task API does not support GPU inference. As a result, the Android platform relies on CPU inference, while Windows continues to use CPU inference as usual.

- Starting from Unreal Engine 5.4, the built-in OpenCV plugin no longer includes precompiled libraries (DLL files). Because M4U depends on the OpenCV plugin, the first time you launch the UE Editor it will try to download the OpenCV source code and compile it on your machine. This can take a long time and may make the engine appear stuck at 75% while loading. Please be patient, and check the logs in the Saved directory under your project's root folder to see whether the process has completed. Users in China may need a VPN connection. Alternatively, you can follow the steps in #166 to resolve this issue manually.
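To check that progress from a shell (for example Git Bash on Windows), you could use a small helper like the one below. It is a minimal sketch that assumes the editor writes its logs to `Saved/Logs/*.log` under the project root, the usual Unreal Engine layout. The function name `m4u_opencv_log` is hypothetical. The helper prints OpenCV-related lines from the newest log file. It does not rely on any particular log message wording.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of M4U): show OpenCV-related lines
# from the newest Unreal Editor log of a project.
# Assumption: logs live in <project root>/Saved/Logs/*.log.
m4u_opencv_log() {
  local project_dir="$1"
  local log_dir="$project_dir/Saved/Logs"
  local latest

  if [ ! -d "$log_dir" ]; then
    echo "Log folder not found: $log_dir" >&2
    return 1
  fi

  # ls -t sorts by modification time, newest first; keep only the first entry.
  latest=$(ls -t "$log_dir"/*.log 2>/dev/null | head -n 1) || true
  if [ -z "$latest" ]; then
    echo "No log files found in $log_dir" >&2
    return 1
  fi

  echo "== $latest =="
  # Case-insensitive search; no matches is not treated as an error.
  grep -i "opencv" "$latest" | tail -n 20 || true
}
```

Usage, assuming your project lives in `/c/Projects/MyProject`: `m4u_opencv_log /c/Projects/MyProject`. Run it again after a while to see whether new lines have appeared.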

For the release notes, see below:

💚 All features are implemented in pure C++; no Python or external programs are required.

- Motion Capture
  - Motion of the body
  - Motion of the fingers
  - Movement
  - Drive 3D avatar
  - Real-time
  - RGB webcam supported
  - ControlRig supported
- Face Capture
  - Facial expression
  - ARKit blendshape compatible (52 expressions)
  - Live Link compatible
  - Real-time
  - RGB webcam supported

- Multi-source Capture
  - RGB webcam
  - Video file
  - Image
  - Live stream (RTMP/RTSP)
  - Android device (M4U Remoting)

- LLM
  - Ollama support
- TTS
  - Offline
  - Real-time
  - Lip-sync
  - Multiple models (Model Zoo)
- ASR
  - Offline
  - Real-time
  - Multiple models (Model Zoo)
  - Wake word (like "Hey Siri")
- Animation Data Export
  - BVH export
- Pure plugins
  - No external programs required
  - All in Unreal Engine

| Unreal Engine | China Site | Global Site | Updated |
|---|---|---|---|
| UE 5.1 | Baidu Netdisk | Google Drive | 2025-08-18 |
| UE 5.2 | Baidu Netdisk | Google Drive | 2025-08-18 |
| UE 5.3 | Baidu Netdisk | Google Drive | 2025-08-18 |
| UE 5.4 | Baidu Netdisk | Google Drive | 2025-08-18 |
| UE 5.5 | Baidu Netdisk | Google Drive | 2025-08-18 |
| UE 5.6 | Baidu Netdisk | Google Drive | 2025-08-18 |

Because the plugin is precompiled and contains a large number of C++ link symbols and debug symbols, it takes about 10 GB of disk space after extraction (most of it is UE-generated binaries in the Intermediate folder).

You don't need to worry about this: the space is only used during development. After the project is packaged, the plugin takes up only about 300 MB (mostly GStreamer dynamic libraries and speech models).

M4U currently supports Android and Windows (Linux is coming soon).

| Plugins (Modules) | Windows | Android | Linux |
|---|---|---|---|
| MediaPipe4U | ✔️ | ✔️ | Coming Soon |
| MediaPipe4ULiveLink | ✔️ | ✔️ | Coming Soon |
| GStreamer | ✔️ | ❌ | Coming Soon |
| MediaPipe4UGStreamer | ✔️ | ❌ | Coming Soon |
| MediaPipe4UBVH | ✔️ | ✔️ | Coming Soon |
| MediaPipe4USpeech | ✔️ | ❌ | Coming Soon |

The license file will be published in the Discussions, and the plugin package will automatically include a license file.

About M4U Remoting

This is an open-source Android application that can send facial expression data to the MediaPipe4U program on Windows.

With it, you can perform facial expression capture using an Android mobile device and visualize it on an avatar in Unreal Engine.

Clone the following repositories to get the demo projects:

Windows Demo: https://gitlab.com/endink/mediapipe4u-demo

Android Demo: https://gitlab.com/endink/mediapipe4u-android-demo

Use a Git client to get the demo projects (requires Git and Git LFS):

The Windows Demo is a full-featured demo; we recommend it if you are learning how to use MediaPipe4U.

Windows Demo:

git lfs clone https://gitlab.com/endink/mediapipe4u-demo.git

Android Demo:

git lfs clone https://gitlab.com/endink/mediapipe4u-android-demo.git

The demo projects do not include the plugins. To run them, download the plugin package and copy its contents into the project's Plugins folder.

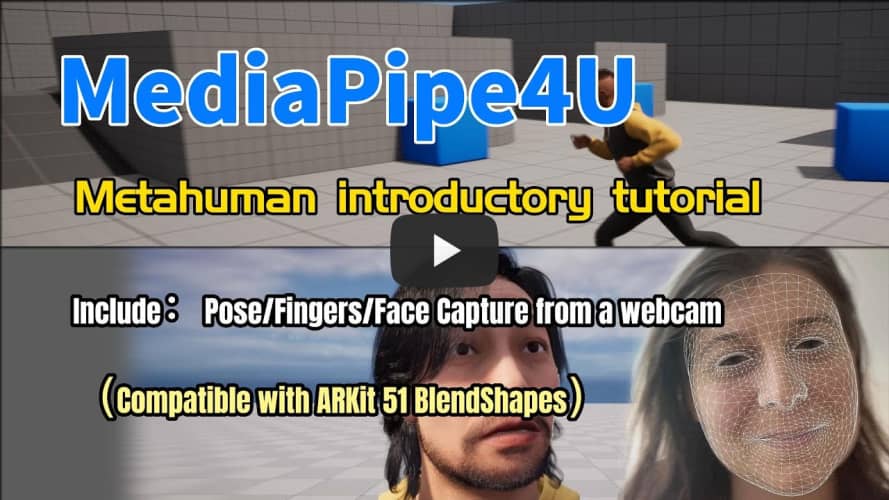
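The copy step for the demo projects could be scripted as below. This is a minimal shell sketch that assumes you have already extracted the plugin package into a local folder whose top level holds the plugin directories (for example MediaPipe4U). The function name `install_m4u_plugins` and every path are hypothetical, so adjust them to your own layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of M4U): copy an extracted plugin package
# into an Unreal Engine project's Plugins folder.
#   $1 = folder containing the extracted plugin directories
#   $2 = project root (the folder that holds the .uproject file)
install_m4u_plugins() {
  local src="$1"
  local project_dir="$2"

  if [ ! -d "$src" ]; then
    echo "Plugin folder not found: $src" >&2
    return 1
  fi

  # Sanity check: make sure the target really is a UE project root.
  if ! ls "$project_dir"/*.uproject >/dev/null 2>&1; then
    echo "No .uproject file in: $project_dir" >&2
    return 1
  fi

  # Create Plugins/ if the project has none yet, then copy the package
  # contents (including hidden files) while preserving the directory tree.
  mkdir -p "$project_dir/Plugins"
  cp -R "$src"/. "$project_dir/Plugins"/
}
```

Usage, with hypothetical paths: `install_m4u_plugins ~/Downloads/M4U-UE5.5 ~/work/mediapipe4u-demo`. The `.uproject` check is there so that a mistyped target path fails loudly instead of silently creating a stray Plugins folder.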

Video Tutorials (English)

Video Tutorials (Chinese)

If you have any questions, please check the FAQ first; your problem may already be listed there. If you can't find an answer in the FAQ, please open an issue. Questions sent by private message or email may be missed.

Because the Windows version of MediaPipe does not support GPU inference, Windows relies on the CPU for human pose estimation (see the official MediaPipe site for details).

Evaluation

Frame rate: 18-24 fps

CPU usage: 20% (based on the demo project)

Testing Environment

CPU: AMD 3600

RAM: 32 GB

GPU: Nvidia 1660S

We acknowledge the contributions of the following open-source projects and frameworks, which have significantly influenced the development of M4U:

- M4U utilizes MediaPipe for motion and facial capture.

- M4U utilizes the NVIDIA Maxine AR SDK for advanced facial tracking and capture.

- M4U utilizes PaddleSpeech for text-to-speech (TTS) synthesis.

- M4U utilizes FunASR for automatic speech recognition (ASR).

- M4U utilizes whisper.cpp as an ASR solution.

- M4U utilizes Sherpa Onnx to enhance ASR capabilities.

- M4U utilizes F5-TTS-ONNX for exporting the F5-TTS model.

- M4U utilizes GStreamer to facilitate video processing and real-time streaming.

- M4U utilizes code from PowerIK for inverse kinematics (IK) and ground adaptation.

- M4U utilizes concepts from Kalidokit in the domain of motion capture.

- M4U utilizes code from wongfei to enhance GStreamer and MediaPipe interoperability.

We extend our gratitude to the developers and contributors of these projects for their valuable innovations and open-source contributions, which have greatly facilitated the development of MediaPipe4U.
