-

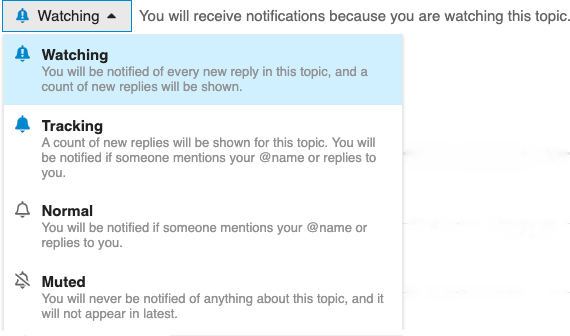

Notifications

You must be signed in to change notification settings - Fork 1.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

something wrong with tablet size calculation #5408

I haven't been able to reproduce this exact issue, but it looks like something else is wrong. When calculating the sizes, the function skips all the tables with the following errors. The error happens when trying to read the biggest key of the table and parse it into a Dgraph key. Note that the smallest (left) key can be read without issue. Also, the value is the same in all four tables. Maybe there's a special key at the end of a Badger table? I don't think there's an error in Dgraph itself because my cluster is working fine. @jarifibrahim Do you have any insight into why the right keys of the tables might differ from what Dgraph expects?

@martinmr

Each level 0 table has one |

@jarifibrahim OK. So, to deal with this, should I iterate through the table backwards until I find a valid key? Can I do something like dgraph-io/badger#1309, but from the Dgraph side?

@martinmr, the last time we spoke to @manishrjain he suggested that it's okay to skip some tables. @parasssh would also remember this discussion.

The tables are not accessible outside of Badger. To perform a reverse iteration, you would need access to the table and the table iterator. The |

Correct. The tablet size is really just a rough estimate. Unless the entire table consists of the keys from the same predicate, dgraph will skip it in the tablet size calculation. Having said that, I think we should have the Alternatively or Additionally, on dgraph side, we can make our tablet size calculation only rely on the Left field of each |

@jarifibrahim I implemented what @parasssh suggested above. When I load the 1 million dataset, I get a total size of 3.4GB. However, the size of the p directory (in a cluster with only one alpha running, for simplicity) is 210MB. One thing I don't know is whether the estimated size knows how to deal with compaction. Is the reported size that of the uncompressed or the compressed data? Maybe that could explain the difference I am seeing. Otherwise, I think there's something wrong with the values EstimatedSz is reporting. The logic on the Dgraph side is fairly simple, and I haven't seen any issue other than the one mentioned above (which, in any case, under-reports the numbers, so it doesn't explain what the user is seeing).

In badger, the right key might be a badger specific key that Dgraph cannot understand. To deal with these keys, a table is included in the size calculation if the next table starts with the same key. Related to DGRAPH-1358 and #5408.
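The rule described above can be sketched roughly as follows. Everything here is an illustrative assumption rather than the actual Dgraph code: `tableInfo` is a simplified stand-in for the per-table metadata Badger reports (string keys instead of byte slices), `parsePredicate` is a hypothetical parser that treats `predicate|suffix` as a valid key, and "starts with the same key" is read as "the next table's left key belongs to the same predicate". The sketch also assumes the tables are sorted by their left key.

```go
package main

import (
	"fmt"
	"strings"
)

// tableInfo is a simplified stand-in for Badger's per-table metadata.
type tableInfo struct {
	Left        string // smallest key in the table
	Right       string // biggest key in the table; may not be a Dgraph key
	EstimatedSz uint64 // estimated size in bytes
}

// parsePredicate is a hypothetical key parser: a valid key has the form
// "predicate|suffix". Anything else is treated as unreadable.
func parsePredicate(key string) (string, bool) {
	pred, _, found := strings.Cut(key, "|")
	if !found || pred == "" {
		return "", false
	}
	return pred, true
}

// tabletSizes sums EstimatedSz per predicate. The tables must be sorted by
// their Left key.
func tabletSizes(tables []tableInfo) map[string]uint64 {
	sizes := make(map[string]uint64)
	for i, t := range tables {
		left, ok := parsePredicate(t.Left)
		if !ok {
			continue
		}
		// Both ends readable: count the table only if it holds one predicate.
		if right, ok := parsePredicate(t.Right); ok {
			if right == left {
				sizes[left] += t.EstimatedSz
			}
			continue
		}
		// Right key unreadable: count the table if the next table starts
		// with a key of the same predicate.
		if i+1 < len(tables) {
			if next, ok := parsePredicate(tables[i+1].Left); ok && next == left {
				sizes[left] += t.EstimatedSz
			}
		}
	}
	return sizes
}

func main() {
	tables := []tableInfo{
		{Left: "age|0001", Right: "age|0900", EstimatedSz: 100},
		{Left: "age|0901", Right: "!badger!internal", EstimatedSz: 50},
		{Left: "age|1500", Right: "name|0200", EstimatedSz: 70},
		{Left: "name|0201", Right: "!badger!internal", EstimatedSz: 30},
	}
	fmt.Println(tabletSizes(tables))
}
```

In this sketch the third table is skipped because it spans two predicates, and the last one is skipped because there is no following table to compare against, so the result still under-reports. That matches the thread's view of the tablet size as a rough estimate.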

@martinmr How did you test this? Do you have steps that I can follow? This could be a Badger bug, maybe an issue with how we compute estimates in Badger. The size is the estimated size of the uncompressed data, but compression cannot make such a huge difference. This is definitely a bug. Let me know how you tested it and I can verify it in Badger.

For simplicity, I used a cluster with 1 alpha and 1 zero. EDIT: master now contains all the changes you need.

@martinmr Can you look at the Badger code and figure out what's wrong? The calculations are done here: https://github.com/dgraph-io/badger/blob/dd332b04e6e7fe06e4f213e16025128b1989c491/table/builder.go#L228

GitHub issues have been deprecated.

What version of Dgraph are you using?

Dgraph v20.03.1

Have you tried reproducing the issue with the latest release?

Yes

What is the hardware spec (RAM, OS)?

CentOS (126G)

Steps to reproduce the issue (command/config used to run Dgraph).

I noticed that the reported tablet size is larger than the disk capacity.

my machine disk capacity is

the p directory size is

when I used /state endpoint, I got the result

from the ratel, I got the result

The size of md5id and gid is about 6.1TB, but my disk capacity is 2.9TB.

Expected behaviour and actual result.

The tablet size should be calculated correctly.

Related to https://discuss.dgraph.io/t/ratel-predicate-capactiy