-

Notifications

You must be signed in to change notification settings - Fork 6

DOPE(Deep Object Pose Estimate)

EN|CN

We updated the code in our group, please check here.(Remember to change the branch to

dev)

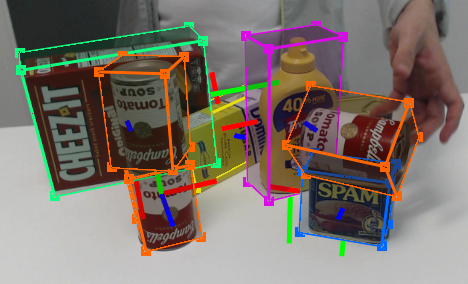

DOPE(Deep Object Pose Estimation)system is developed by NVIDIA Lab. You can use the official DOPE ROS package for detection and 6-DoF pose estimation of known objects from an RGB camera. The network has been trained on several YCB objects. For more details, see the original GitHub repo.

However, based on the official DOPE ROS package, we fixed some codes according to the paper. Besides, we created launch file dope_image_demo.launch、dope_camera_demo.launch to quickly start the demo, image_read.py script to get the images from the folder and publish them, feature_map_visualization.py script to save the output of the neural network as a image. Moreover, lots of useful links were added through the main codes to help you understand the codes more easily.

Any questions are welcome!

- Ubuntu 16.04 with full ROS Kinetic install(tested,it may works on other systems);

- NVIDIA GPU Supported(tested);

- CUDA 8.0(tested);

- CUDNN 6.0(tested);

To install CUDA && CUDNN, you can check here.(This blog was writen in chinese. If you have ang questions, feel free to contact us.)*

Note: The name of workspace(catkin_ws) below should be changed into yours.

$ cd ~/catkin_ws/src

$ git clone https://github.com/birlrobotics/Deep_Object_Pose.git

$ git checkout dev

$ cd ~/catkin_ws/src/dope

$ pip install -r requirements.txt

$ cd ~/catkin_ws

$ catkin_make

-

Edit config info (if desired) in

~/catkin_ws/src/dope/config/config_pose.yaml-

topic_camera: RGB topic to listen to -

topic_publishing: topic name for publishing -

weights: dictionary of object names and their weights path name, comment out any line to disable detection/estimation of that object -

dimension: dictionary of dimensions for the objects (key values must match theweightsnames) -

draw_colors: dictionary of object colors (key values must match theweightsnames) -

camera_settings: dictionary for the camera intrinsics; edit these values to match your camera -

thresh_points: Thresholding the confidence for object detection; increase this value if you see too many false positives, reduce it if objects are not detected.

-

-

Download the weights

Download the weights and save them to the

weightsfolder, -

Run launch file to start

To test in real time with camera:

$ roslaunch dope dope_camera_demo.launchTo test off-line with images:

$ roslaunch dope dope_image_demo.launch -

Visualize in RViz

Add > Imageto view the raw RGB image or the image with cuboids overlaid by changing the topic; -

Ros topics published

$ rostopic list/dope/webcam_rgb_raw # RGB images from camera /dope/dimension_[obj_name] # dimensions of object /dope/pose_[obj_name] # timestamped pose of object /dope/rgb_points # RGB images with detected cuboids overlaidNote:

[obj_name]is in {cracker, gelatin, meat, mustard, soup, sugar} -

Train the model:

Please refer to

python train.py --helpfor specific details about the training code.$ python train.py --data path/to/dataset --object soup --outf soup --gpuids 0 1 2 3 4 5 6 7This will create a folder called

train_soupwhere the weights will be saved after each 10 epochs. It will use the 8 gpus using pytorch data parallel.

A brief overview of this work is following:

- Put captured image into a deep neural network to extract the feature.

- The output of the neural network is two target maps named

beilef mapandaffinity map.- In order to extract the individual objects from the belied maps and retrieve the pose of the object, they used greedy algorithm as well as the PnP algorithm to process the two target maps.

The structure of the repository are following:

The neural network used in this work are following:

You can watch the official video here

For common question, please check here.