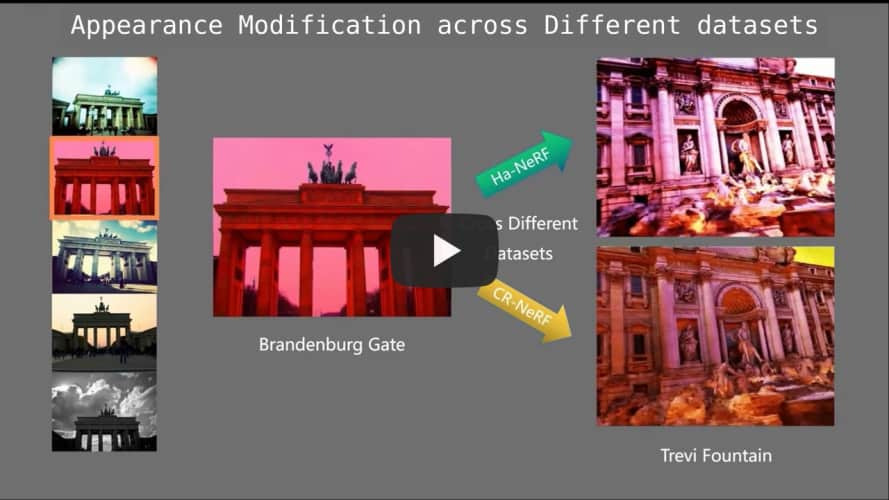

CR-NeRF: Cross-Ray Neural Radiance Fields for Novel-view Synthesis from Unconstrained Image Collections

Yifan Yang · Shuhai Zhang · Zixiong Huang · Yubing Zhang · Mingkui Tan

Pipeline of CR-NeRF

If you want to train and evaluate CR-NeRF, check docs/dataset.md to prepare the dataset, then see Training and testing to train and benchmark CR-NeRF on the Brandenburg Gate training set.

During evaluation, given:

- An RGB image in the desired image style
- A camera position

CR-NeRF renders an image:

- from the given camera position
- with the same image style as the given image

For more details on CR-NeRF, see the architecture visualizations of our encoder, transformation net, and decoder.

- See docs/installation.md to install all the required packages and set up the models.

- See docs/dataset.md to prepare the in-the-wild datasets.

- See Training and testing to train and benchmark CR-NeRF on the Brandenburg Gate training set.
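Before training, it can help to confirm that the files this README points to are in place. A minimal sketch, assuming you run it from (or pass it) the repository root; the helper name `preflight` is hypothetical, and the file list is simply the paths mentioned in this README:

```bash
# Hypothetical preflight check: prints each documented file that is missing
# under the given repo root (default: current directory) and returns 1 if
# any are missing, 0 otherwise.
preflight() {
  local root="${1:-.}"
  local missing=0
  for f in docs/installation.md docs/dataset.md \
           command/train.sh command/test.sh \
           command/get_video_demo.sh command/get_rendered_images.sh; do
    if [ ! -f "$root/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `preflight . || echo "fix the missing files first"` lists anything absent before you start a long run.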

Download the trained checkpoints from Google Drive or Baidu Drive (password: z6wd).

If you want a video demo:

# Set $scene_name, $save_dir1, and the CUDA devices in command/get_video_demo.sh

bash command/get_video_demo.sh

The rendered video (in .gif format) will be saved in "{$save_dir1}/appearance_modification/{$scene_name}".
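The output location follows directly from the two variables set in the script. A small sketch that rebuilds that path and lists any GIFs already there; the values of `scene_name` and `save_dir1` below are hypothetical placeholders, so replace them with the ones in your copy of command/get_video_demo.sh:

```bash
# Hypothetical example values: use the ones set in command/get_video_demo.sh.
scene_name="brandenburg_gate"
save_dir1="./demo_out"

# Path pattern documented in this README for the video demo output.
video_dir="${save_dir1}/appearance_modification/${scene_name}"
echo "GIFs are expected in: ${video_dir}"

# List GIFs already rendered there; skipped if the directory does not exist yet.
if [ -d "$video_dir" ]; then
  find "$video_dir" -maxdepth 1 -name '*.gif'
fi
```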

If you want rendered images for computing evaluation metrics:

bash command/get_rendered_images.sh

The rendered images will be saved in "{$save_dir1}/{$exp_name1}".
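Before computing metrics, a quick count of the rendered files can catch an interrupted run. A sketch, assuming the renders are saved as .png or .jpg files directly inside "{$save_dir1}/{$exp_name1}"; the helper `count_rendered` and the example values are hypothetical:

```bash
# Hypothetical helper: prints the number of .png/.jpg files directly inside
# a directory (the extensions are an assumption). Prints 0 and returns 1 if
# the directory does not exist.
count_rendered() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo 0
    return 1
  fi
  find "$dir" -maxdepth 1 -type f \( -name '*.png' -o -name '*.jpg' \) | wc -l | tr -d ' '
}

save_dir1="./eval_out"   # hypothetical: match command/get_rendered_images.sh
exp_name1="my_exp"       # hypothetical: match command/get_rendered_images.sh
echo "rendered images: $(count_rendered "${save_dir1}/${exp_name1}")"
```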

# Set the experiment name and the CUDA devices in command/train.sh

bash command/train.sh
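Training runs can be long, so keeping the full output in a log file is convenient. A minimal sketch of a hypothetical wrapper `run_step` that checks the script exists, writes its stdout and stderr to a log, and returns the script's exit status; the `logs/` paths in the usage line are placeholders:

```bash
# Hypothetical wrapper: run a repo script with bash, capture all output in a
# log file, and return the script's own exit status (127 if it is missing).
run_step() {
  local script="$1" log="$2"
  if [ ! -f "$script" ]; then
    echo "script not found: $script" >&2
    return 127
  fi
  mkdir -p "$(dirname "$log")"
  bash "$script" >"$log" 2>&1
}
```

For example, `run_step command/train.sh logs/train.log && run_step command/test.sh logs/test.log` runs testing only if training exits successfully.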

# Set the experiment name to match the one used for training, and set the CUDA devices in command/test.sh

bash command/test.sh

@inproceedings{yang2023cross,
  title={Cross-Ray Neural Radiance Fields for Novel-view Synthesis from Unconstrained Image Collections},
  author={Yang, Yifan and Zhang, Shuhai and Huang, Zixiong and Zhang, Yubing and Tan, Mingkui},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={15901--15911},
  year={2023}
}

We thank Dong Liu for help in making the video demo.

Here are some great resources we benefited from:

By downloading and using the code and model you agree to the terms in the LICENSE.