This is a ready-to-use Colab tutorial for fine-tuning the ruDialoGpt3 model on your Telegram chat using Hugging Face and PyTorch.

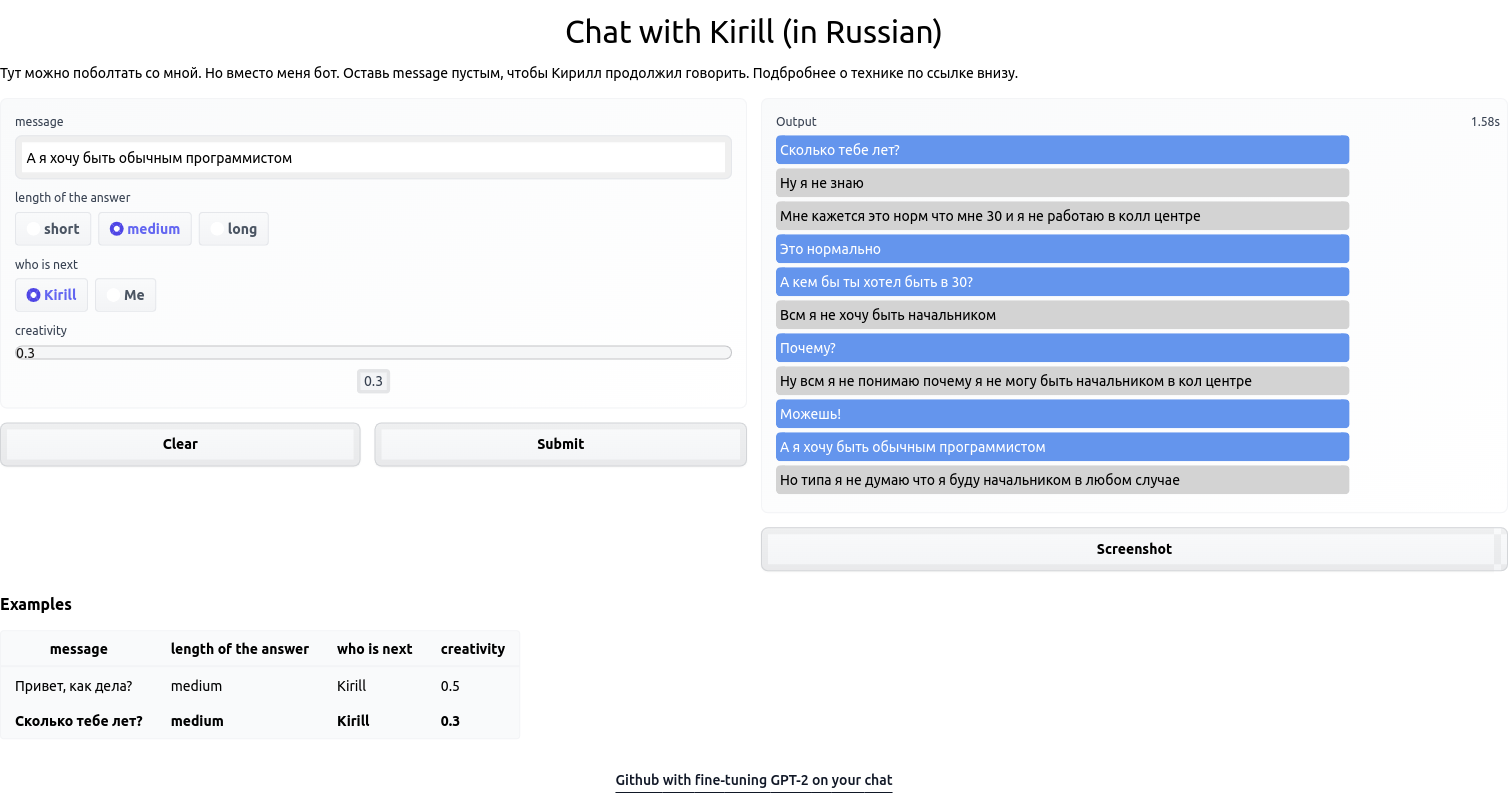

Here is a picture of a demo you can also set up with Gradio and Hugging Face Spaces:


🤗 Spaces interactive demo of my model using Gradio

As the base model for fine-tuning I used RuDialoGPT-3, which was trained by @Grossmend on Russian forums. The procedure for training the model for dialogue is described in Grossmend's blog post (in Russian). I created a simple pipeline and fine-tuned that model on my own exported Telegram chat (~30 MB of JSON, about 3 hours of fine-tuning).
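Most of the data preparation happens on the exported JSON, so here is a minimal sketch of reading a Telegram Desktop export in plain Python. It assumes a single-chat export: a top-level `messages` list whose items carry `type`, `from` and `text` fields, where `text` is either a plain string or a list mixing strings and formatting-entity dicts. The function names `flatten_text` and `load_telegram_messages` are hypothetical and are not taken from the notebook.

```python
import json
from typing import Any

# Assumption: single-chat Telegram Desktop export layout.
# Both function names are hypothetical, not the notebook's own code.


def flatten_text(text: Any) -> str:
    """Telegram stores formatted messages as a list of plain strings and
    entity dicts (e.g. {"type": "link", "text": "..."}); join them into one string."""
    if isinstance(text, str):
        return text
    parts = []
    for piece in text:
        if isinstance(piece, str):
            parts.append(piece)
        elif isinstance(piece, dict):
            parts.append(piece.get("text", ""))
    return "".join(parts)


def load_telegram_messages(raw_json: str) -> list[tuple[str, str]]:
    """Return (sender, text) pairs from a single-chat export."""
    data = json.loads(raw_json)
    messages = []
    for msg in data.get("messages", []):
        # Skip service events (pinned messages, calls, members joining, ...).
        if msg.get("type") != "message":
            continue
        text = flatten_text(msg.get("text", "")).strip()
        # Skip stickers, photos and other media-only messages with no text.
        if not text:
            continue
        # "from" can be null (e.g. deleted accounts), so fall back to a placeholder.
        messages.append((msg.get("from") or "unknown", text))
    return messages


example = json.dumps({
    "name": "Alice",
    "type": "personal_chat",
    "messages": [
        {"id": 1, "type": "message", "from": "Alice", "text": "Привет!"},
        {"id": 2, "type": "service", "actor": "Bob", "action": "pin_message", "text": ""},
        {"id": 3, "type": "message", "from": "Bob",
         "text": ["See ", {"type": "link", "text": "https://example.com"}]},
        {"id": 4, "type": "message", "from": "Bob", "text": "", "media_type": "sticker"},
    ],
})
print(load_telegram_messages(example))
# [('Alice', 'Привет!'), ('Bob', 'See https://example.com')]
```

Filtering out service events and media-only messages keeps the training text limited to things people actually typed.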

It is in fact very easy to get the data from Telegram and fine-tune a model:

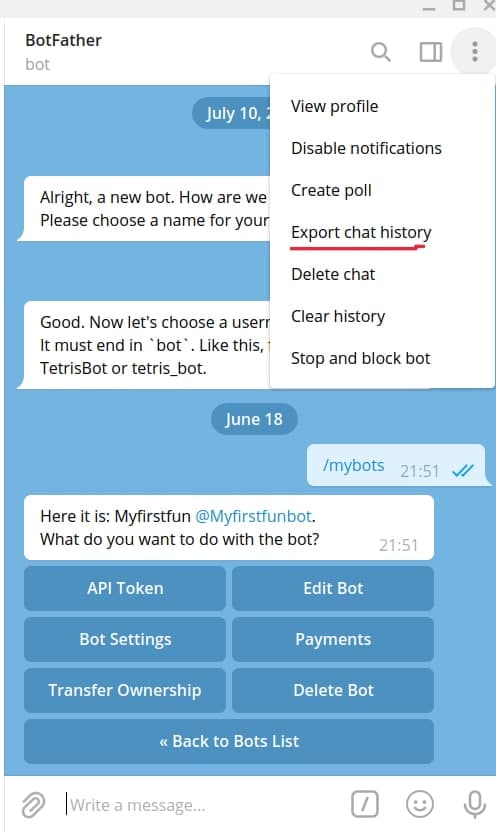

Using a Telegram chat for fine-tuning

1) Export your Telegram chat as JSON.
2) Upload it to Colab.
3) The code will create a dataset for you.
4) Wait a bit!
5) 🎉 (Inference and smile)
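The "create a dataset" step can be sketched roughly as follows, assuming the chat has already been reduced to a list of `(sender, text)` pairs. This is not the notebook's own code: the helpers `merge_turns` and `make_samples` and the `context_size` parameter are hypothetical. The exact prompt format RuDialoGPT-3 expects is described in Grossmend's blog post and is not reproduced here. The idea is to merge consecutive messages from one sender into a single turn, then pair each turn with a few preceding turns as context.

```python
from itertools import groupby

# Hypothetical helpers: a sketch of turning chat messages into training
# samples, not the notebook's actual dataset code.


def merge_turns(messages: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Join consecutive messages from the same sender into a single turn."""
    return [
        (sender, "\n".join(text for _, text in group))
        for sender, group in groupby(messages, key=lambda m: m[0])
    ]


def make_samples(
    turns: list[tuple[str, str]], context_size: int = 2
) -> list[dict]:
    """Pair every turn (except the first) with up to `context_size`
    preceding turns, which serve as the dialogue context."""
    samples = []
    for i in range(1, len(turns)):
        context = turns[max(0, i - context_size):i]
        samples.append({"context": context, "response": turns[i]})
    return samples


chat = [
    ("Alice", "Привет!"),
    ("Alice", "Как дела?"),
    ("Bob", "Нормально"),
    ("Alice", "Отлично"),
]
turns = merge_turns(chat)
print(turns)
# [('Alice', 'Привет!\nКак дела?'), ('Bob', 'Нормально'), ('Alice', 'Отлично')]
print(len(make_samples(turns, context_size=2)))  # 2
```

Merging is useful because people often split one thought across several Telegram messages; without it, many "responses" would just be continuations of the same speaker's previous message. A larger `context_size` gives the model more history per sample at the cost of longer input sequences.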