Repository of pre-trained Language Models.

WARNING: a Bidirectional LM using the MultiFiT configuration is a good model for text classification, but with only 46 million parameters it is far from being an LM that can compete with GPT-2 or BERT on NLP tasks like text generation. This is my next step ;-)

Note: the training times shown in the tables on this page are the sum of the time needed to create the fastai DataBunch (forward and backward) and the time needed to train the bidirectional model over 10 epochs. They do not include the time needed to download the Wikipedia corpus or to prepare it.
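A bidirectional LM here consists of two separate language models, which is why there is a forward and a backward DataBunch. The plain-Python sketch below shows the (input, target) pairs each direction learns from. It assumes, as in ULMFiT, that the backward model is an ordinary next-token model trained on the reversed token sequence. The helper `lm_pairs` is hypothetical and is not code from the notebooks.

```python
from typing import List, Tuple


def lm_pairs(tokens: List[str], backwards: bool = False) -> List[Tuple[str, str]]:
    """Return (current token, token to predict) pairs.

    Hypothetical helper: the backward LM is assumed to be a next-token model
    trained on the reversed sequence. In a real RNN LM the hidden state also
    carries all earlier context, not just the current token.
    """
    seq = tokens[::-1] if backwards else list(tokens)
    # Each token is paired with the one that follows it in `seq`.
    return list(zip(seq[:-1], seq[1:]))


tokens = ["o", "gato", "dorme"]
print(lm_pairs(tokens))                  # [('o', 'gato'), ('gato', 'dorme')]
print(lm_pairs(tokens, backwards=True))  # [('dorme', 'gato'), ('gato', 'o')]
```

Because the backward model only sees reversed text, the two models learn complementary context. Their predictions can later be combined.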

I trained one Portuguese Bidirectional Language Model (PBLM) with the MultiFiT configuration on 1 NVIDIA V100 GPU on GCP.

MultiFiT configuration (architecture: 4 QRNN layers with 1550 hidden units per layer / tokenizer: SentencePiece with 15 000 tokens)
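QRNN layers compute their candidate values and gates for all time steps at once with a convolution. Only a cheap element-wise recurrence then remains sequential. The NumPy sketch below shows that recurrence ("fo-pooling", as described in the QRNN paper by Bradbury et al.). It assumes the gates `z`, `f` and `o` have already been computed. It illustrates the idea and is not the fastai QRNN implementation used in the notebooks. The function name is hypothetical.

```python
import numpy as np


def qrnn_fo_pool(z: np.ndarray, f: np.ndarray, o: np.ndarray) -> np.ndarray:
    """fo-pooling: the only sequential step of a QRNN layer (illustrative).

    z, f, o have shape (seq_len, n_hid): candidate values, forget gates and
    output gates, assumed to be precomputed for every time step.
    """
    c = np.zeros(z.shape[1])
    hs = []
    for z_t, f_t, o_t in zip(z, f, o):
        c = f_t * c + (1.0 - f_t) * z_t  # element-wise cell-state update
        hs.append(o_t * c)               # gated hidden output
    return np.stack(hs)


rng = np.random.default_rng(0)
seq_len, n_hid = 5, 1550  # 1550 hidden units per layer, as in the MultiFiT configuration
z = np.tanh(rng.standard_normal((seq_len, n_hid)))
f = 1 / (1 + np.exp(-rng.standard_normal((seq_len, n_hid))))  # sigmoid gates
o = 1 / (1 + np.exp(-rng.standard_normal((seq_len, n_hid))))
h = qrnn_fo_pool(z, f, o)
print(h.shape)  # (5, 1550)
```

The loop contains no matrix products, only element-wise operations. This is what makes QRNNs faster to train than LSTMs of similar size.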

- notebook lm3-portuguese.ipynb (nbviewer of the notebook): code used to train a Portuguese Bidirectional LM with the MultiFiT configuration on a 100-million-token corpus extracted from Wikipedia.

- link to download the pre-trained parameters and vocabulary in models

| PBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 39.68% | 21.76 | 8h |
| backward | 43.67% | 22.16 | 8h |
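The two metrics in the table are usually defined as follows. Perplexity is the exponential of the mean per-token cross-entropy, measured in nats. Accuracy is the share of positions where the most probable token is the correct next token. The sketch below assumes the reported numbers were computed that way on the validation set. The helper names are hypothetical.

```python
import math
from typing import Sequence


def perplexity(mean_cross_entropy: float) -> float:
    """Perplexity from the mean per-token cross-entropy loss (natural log)."""
    return math.exp(mean_cross_entropy)


def next_token_accuracy(probs: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Share of positions whose most probable token is the target token."""
    hits = sum(
        max(range(len(p)), key=p.__getitem__) == t  # arg-max token vs. target
        for p, t in zip(probs, targets)
    )
    return hits / len(targets)


# A perplexity of 21.76 (forward PBLM) corresponds to a mean
# cross-entropy of ln(21.76), i.e. about 3.08 nats per token.
print(perplexity(3.08))

# Toy example: 3-token vocabulary, 2 positions, 1 correct prediction.
print(next_token_accuracy([[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]], [1, 2]))  # 0.5
```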

- Applications:
  - notebook lm3-portuguese-classifier-TCU-jurisprudencia.ipynb (nbviewer of the notebook): code used to fine-tune a Portuguese Bidirectional LM and a Text Classifier on the "(reduzido) TCU jurisprudência" dataset.
  - notebook lm3-portuguese-classifier-olist.ipynb (nbviewer of the notebook): code used to fine-tune a Portuguese Bidirectional LM and a Sentiment Classifier on the "Brazilian E-Commerce Public Dataset by Olist" dataset.
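Fine-tuning a bidirectional LM yields two classifiers, one forward and one backward. The ULMFiT paper obtains a single prediction by averaging their class probabilities. The sketch below assumes each classifier already returns one probability vector per text. The helper name and the numbers are hypothetical, and the notebooks may combine predictions differently.

```python
from typing import List, Sequence, Tuple


def ensemble_bidirectional(
    fwd_probs: Sequence[float], bwd_probs: Sequence[float], classes: Sequence[str]
) -> Tuple[str, List[float]]:
    """Average forward and backward class probabilities and return the top class."""
    if not (len(fwd_probs) == len(bwd_probs) == len(classes)):
        raise ValueError("probability vectors and class list must have the same length")
    avg = [(pf + pb) / 2 for pf, pb in zip(fwd_probs, bwd_probs)]
    best = max(range(len(avg)), key=avg.__getitem__)  # index of the highest average
    return classes[best], avg


# Hypothetical outputs of the two classifiers for one review.
label, probs = ensemble_bidirectional([0.40, 0.60], [0.80, 0.20], ["neg", "pos"])
print(label, probs)
```

Averaging lets a confident backward model outweigh a hesitant forward one. In this example, "neg" wins even though the forward classifier alone slightly preferred "pos".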

[ WARNING ] The code of the notebook lm3-portuguese-classifier-olist.ipynb must be updated to use the SentencePiece model and vocabulary already trained for the Portuguese Language Model in the notebook lm3-portuguese.ipynb, as was done in the notebook lm3-portuguese-classifier-TCU-jurisprudencia.ipynb (see the explanations at the top of that notebook).

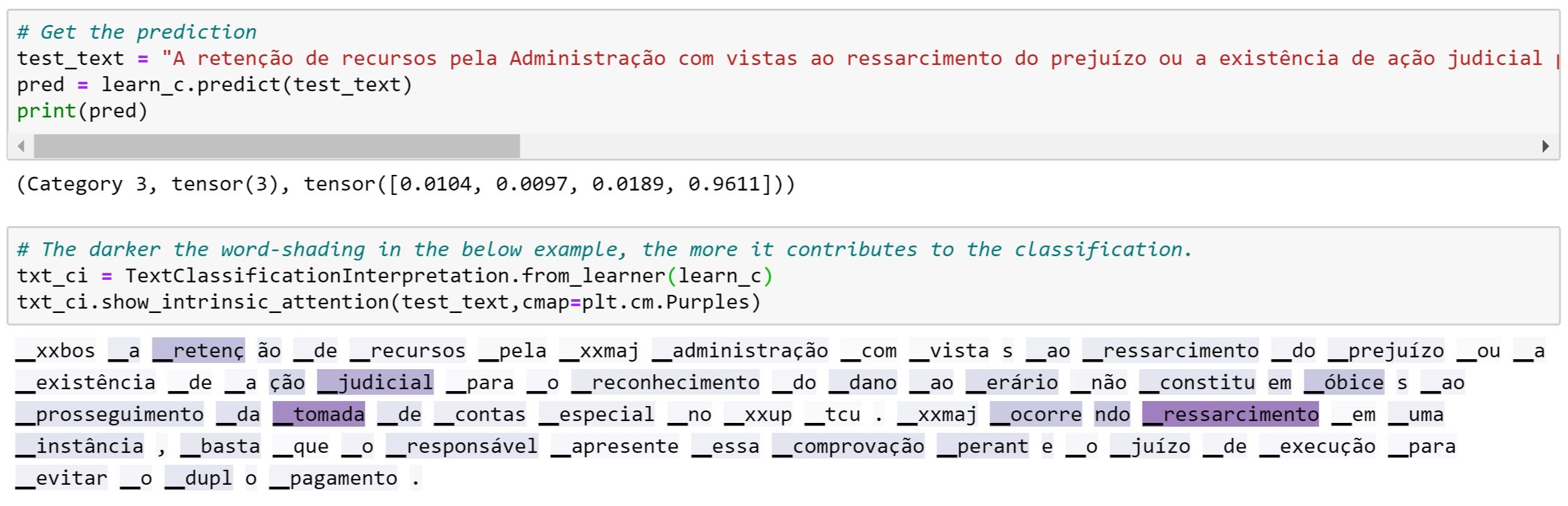

Here's an example of using the classifier to predict the category of a TCU legal text:

I trained 3 French Bidirectional Language Models (FBLM) on 1 NVIDIA V100 GPU on GCP. The best one is the model trained with the MultiFiT configuration.

| French Bidirectional Language Models (FBLM) | direction | accuracy | perplexity | training time |
|---|---|---|---|---|
| MultiFiT with 4 QRNN + SentencePiece (15 000 tokens) | forward | 43.77% | 16.09 | 8h40 |
| | backward | 49.29% | 16.58 | 8h10 |
| ULMFiT with 3 QRNN + SentencePiece (15 000 tokens) | forward | 40.99% | 19.96 | 5h30 |
| | backward | 47.19% | 19.47 | 5h30 |
| ULMFiT with 3 AWD-LSTM + spaCy (60 000 tokens) | forward | 36.44% | 25.62 | 11h |
| | backward | 42.65% | 27.09 | 11h |
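The ranking in the table can be checked directly from its numbers. The sketch below averages the forward and backward values for each configuration and sorts the configurations by mean perplexity, where lower is better. The values are copied from the table. The forward/backward averaging is only a simple summary chosen for this illustration, not a metric from the notebooks.

```python
from typing import Dict, Tuple

# (forward, backward) values copied from the French comparison table above.
RESULTS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "MultiFiT, 4 QRNN + SentencePiece (15 000 tokens)": {"acc": (43.77, 49.29), "ppl": (16.09, 16.58)},
    "ULMFiT, 3 QRNN + SentencePiece (15 000 tokens)": {"acc": (40.99, 47.19), "ppl": (19.96, 19.47)},
    "ULMFiT, 3 AWD-LSTM + spaCy (60 000 tokens)": {"acc": (36.44, 42.65), "ppl": (25.62, 27.09)},
}


def mean(pair: Tuple[float, float]) -> float:
    return sum(pair) / len(pair)


# Rank the configurations by mean perplexity (lower is better).
ranking = sorted(RESULTS, key=lambda name: mean(RESULTS[name]["ppl"]))
for name in ranking:
    r = RESULTS[name]
    print(f"{name}: mean perplexity {mean(r['ppl']):.2f}, mean accuracy {mean(r['acc']):.2f}%")
```

Only the first two configurations share the same 15 000-token SentencePiece vocabulary. Perplexity over the 60 000-token spaCy vocabulary is not directly comparable with theirs, so the position of the last row should be read with care.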

1. MultiFiT configuration (architecture: 4 QRNN layers with 1550 hidden units per layer / tokenizer: SentencePiece with 15 000 tokens)

- notebook lm3-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM with the MultiFiT configuration on a 100-million-token corpus extracted from Wikipedia.

- link to download the pre-trained parameters and vocabulary in models

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 43.77% | 16.09 | 8h40 |
| backward | 49.29% | 16.58 | 8h10 |

- Application: notebook lm3-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.

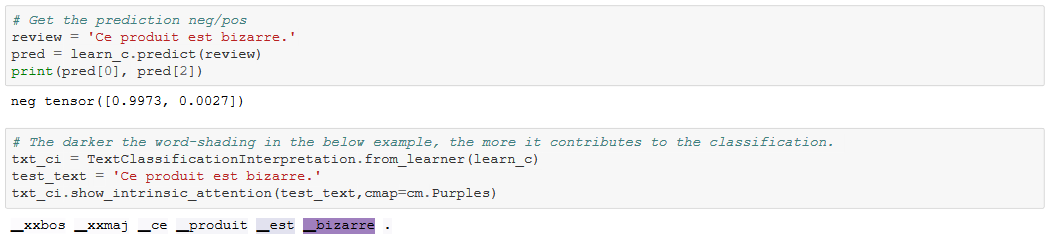

Here's an example of using the classifier to predict the feeling of comments on an amazon product:

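2. ULMFiT configuration (architecture: 3 QRNN layers / tokenizer: SentencePiece with 15 000 tokens)

- notebook lm2-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM on a 100-million-token corpus extracted from Wikipedia.

- link to download the pre-trained parameters and vocabulary in models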

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 40.99% | 19.96 | 5h30 |
| backward | 47.19% | 19.47 | 5h30 |

- Application: notebook lm2-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.

3. ULMFiT configuration (architecture: 3 AWD-LSTM layers / tokenizer: spaCy with 60 000 tokens)

- notebook lm-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM on a 100-million-token corpus extracted from Wikipedia.

- link to download the pre-trained parameters and vocabulary in models

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 36.44% | 25.62 | 11h |
| backward | 42.65% | 27.09 | 11h |

- Application: notebook lm-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.