Explainability in Process Outcome Prediction: Guidelines to Obtain Interpretable and Faithful Models

Complementary code to reproduce the work of "Explainable Predictive Process Monitoring: Evaluation Metrics and Guidelines for Process Outcome Prediction"

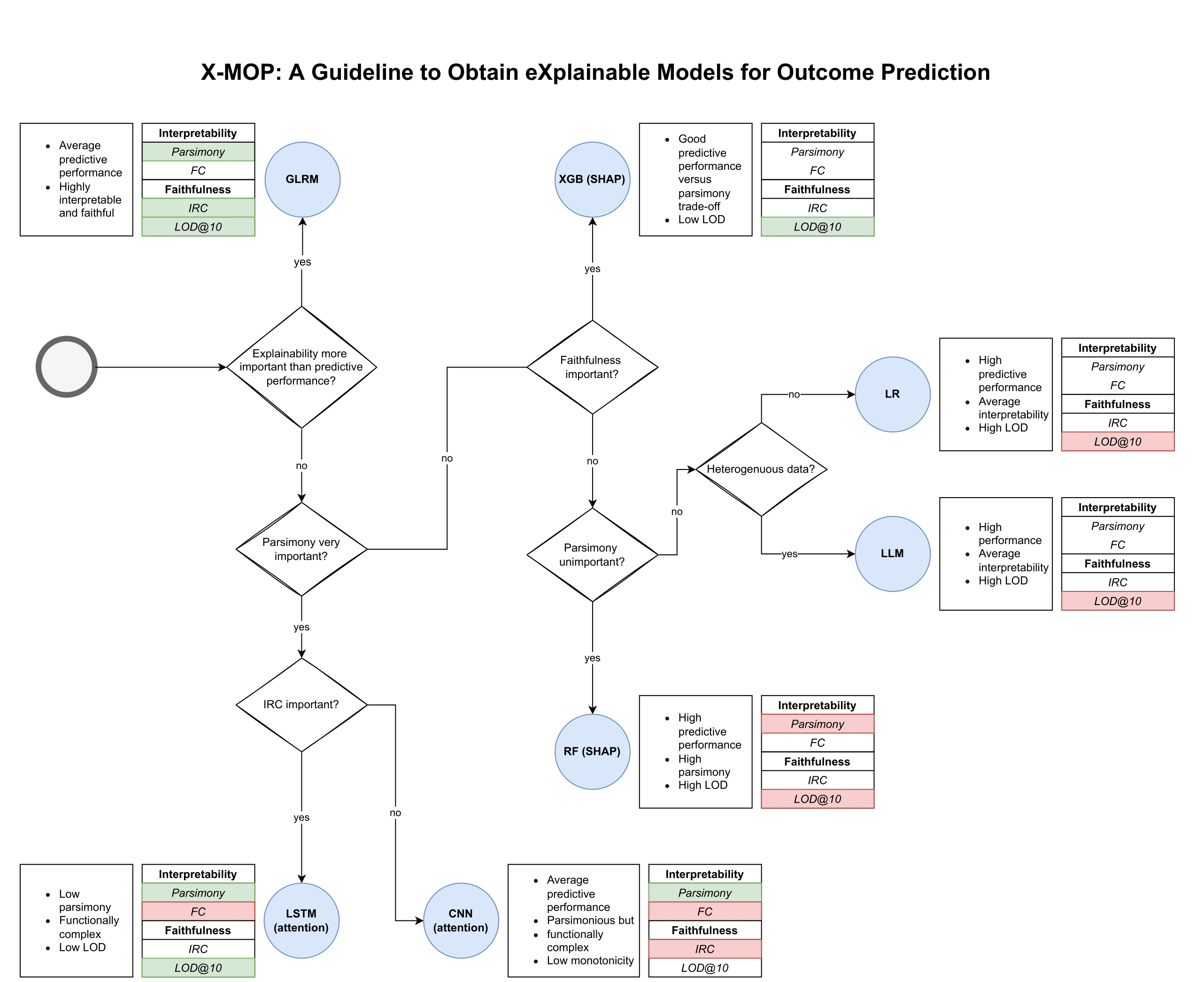

This figure contains the guideline to obtain eXplainable Models for Outcome Prediction (X-MOP).

An overview of the files and folders:

The folder figures contains the high-resolution figures (PDF format) used in the paper.

This folder contains cleaned and preprocessed event logs that are made available by this GitHub repository: Benchmark for outcome-oriented predictive process monitoring. That repository provides 22 event logs, of which we selected 13. Its authors provide a Google Drive link to download these event logs.

The metrics introduced in this paper are implemented as a class in a separate .py file to support reproducibility. The metrics are intended to work with all the benchmark models mentioned in the paper. If there are any problems or questions, feel free to contact me (the corresponding author and the email address related to the experiments of this study can be found on this site).

This folder contains only one file (LLM.py), in which the LLM model (originally written in R) is translated to Python. Note that some design choices differ from those in the original paper (link: here).

The different hyperparameter files (in .pickle and .csv)

The different result files (in .csv) for the ML models (results_dir_ML) and the DL models (results_dir_DL).

This folder contains the files to perform the aggregation sequence encoding (code stems from Teinemaa et al. (2019)).
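As a rough illustration of what aggregation sequence encoding does, the sketch below turns one trace prefix into a fixed-length feature vector by counting activity occurrences and summarising a numeric attribute, ignoring the order of events. It is a minimal standard-library sketch, not the code in this folder: the function name `aggregate_encode`, the event keys `activity` and `amount`, and the choice of summary statistics are all assumptions made for the example.

```python
from collections import Counter
from statistics import mean


def aggregate_encode(prefix, activity_vocab, numeric_key="amount"):
    """Encode one trace prefix as a fixed-length dict of features.

    Hypothetical helper for illustration; the event keys are assumed.
    """
    # Frequency of each activity in the prefix (event order is discarded).
    counts = Counter(event["activity"] for event in prefix)
    features = {f"count_{a}": counts.get(a, 0) for a in activity_vocab}

    # Summary statistics of one numeric event attribute.
    values = [event[numeric_key] for event in prefix]
    features[f"{numeric_key}_mean"] = mean(values)
    features[f"{numeric_key}_max"] = max(values)
    features[f"{numeric_key}_min"] = min(values)
    features[f"{numeric_key}_sum"] = sum(values)
    return features


# Toy prefix with three events (made-up values).
prefix = [
    {"activity": "A", "amount": 10.0},
    {"activity": "B", "amount": 30.0},
    {"activity": "A", "amount": 20.0},
]
encoded = aggregate_encode(prefix, ["A", "B", "C"])
```

Because only counts and summary statistics are kept, two prefixes with the same events in a different order receive the same encoding, and every prefix maps to a vector of the same length regardless of how many events it contains.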

The preprocessing and hyperparameter optimisation are derivative work based on the code provided by Outcome-Oriented Predictive Process Monitoring: Review and Benchmark. We would like to thank the authors for their high-quality code, which allowed us to quickly reproduce the provided work.

- dataset_confs.py

- DatasetManager.py

- EncoderFactory.py

- Hyperopt_ML.py

- Hyperopt_DL (GC).ipynb

Logistic Regression (LR), Logit Leaf Model (LLM), Generalized Logistic Rule Regression (GLRM), Random Forest (RF) and XGBoost (XGB)

- Experiment_ML.py

Long short-term memory neural networks (LSTM) and Convolutional Neural Networks (CNN)

- experiment_DL (GC).ipynb

We acknowledge the authors of Building accurate and interpretable models for predictive process analytics for their attention-based bidirectional LSTM architecture, which we used to create the long short-term memory neural networks with attention-layer visualisations.