diff --git a/benchmark/ppo.sh b/benchmark/ppo.sh

index be7c59bd4..70f374785 100644

--- a/benchmark/ppo.sh

+++ b/benchmark/ppo.sh

@@ -5,58 +5,122 @@ OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

--command "poetry run python cleanrl/ppo.py --no_cuda --track --capture_video" \

--num-seeds 3 \

- --workers 9

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

--command "poetry run python cleanrl/ppo_atari.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E mujoco

+OMP_NUM_THREADS=1 xvfb-run -a python -m cleanrl_utils.benchmark \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

+ --command "poetry run python cleanrl/ppo_continuous_action.py --no_cuda --track --capture_video" \

+ --num-seeds 3 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E "mujoco dm_control"

+OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+ --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

+ --command "poetry run python cleanrl/ppo_continuous_action.py --exp-name ppo_continuous_action_8M --total-timesteps 8000000 --no_cuda --track" \

+ --num-seeds 10 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

--command "poetry run python cleanrl/ppo_atari_lstm.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E envpool

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+poetry run python -m cleanrl_utils.benchmark \

--env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

--command "poetry run python cleanrl/ppo_atari_envpool.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install -E "mujoco_py mujoco"

-poetry run python -c "import mujoco_py"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v2 Walker2d-v2 Hopper-v2 InvertedPendulum-v2 Humanoid-v2 Pusher-v2 \

- --command "poetry run python cleanrl/ppo_continuous_action.py --no_cuda --track --capture_video" \

+poetry install -E "envpool jax"

+poetry run python -m cleanrl_utils.benchmark \

+ --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

+ --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

--num-seeds 3 \

- --workers 6

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

+

+poetry install -E "envpool jax"

+python -m cleanrl_utils.benchmark \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --command "poetry run python cleanrl/ppo_atari_envpool_xla_jax_scan.py --track --capture_video" \

+ --num-seeds 3 \

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E procgen

-xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

+poetry run python -m cleanrl_utils.benchmark \

--env-ids starpilot bossfight bigfish \

--command "poetry run python cleanrl/ppo_procgen.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

poetry install -E atari

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

- --command "poetry run torchrun --standalone --nnodes=1 --nproc_per_node=2 cleanrl/ppo_atari_multigpu.py --track --capture_video" \

+ --command "poetry run torchrun --standalone --nnodes=1 --nproc_per_node=2 cleanrl/ppo_atari_multigpu.py --local-num-envs 4 --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

-poetry install "pettingzoo atari"

+poetry install -E "pettingzoo atari"

poetry run AutoROM --accept-license

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids pong_v3 surround_v2 tennis_v3 \

--command "poetry run python cleanrl/ppo_pettingzoo_ma_atari.py --track --capture_video" \

--num-seeds 3 \

- --workers 3

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

# IMPORTANT: see specific Isaac Gym installation at

# https://docs.cleanrl.dev/rl-algorithms/ppo/#usage_8

@@ -65,56 +129,17 @@ xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids Cartpole Ant Humanoid BallBalance Anymal \

--command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture_video" \

--num-seeds 3 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

--env-ids AllegroHand ShadowHand \

--command "poetry run python cleanrl/ppo_continuous_action_isaacgym/ppo_continuous_action_isaacgym.py --track --capture_video --num-envs 8192 --num-steps 8 --update-epochs 5 --num-minibatches 4 --reward-scaler 0.01 --total-timesteps 600000000 --record-video-step-frequency 3660" \

--num-seeds 3 \

- --workers 1

-

-

-poetry install "envpool jax"

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-poetry run python -m cleanrl_utils.benchmark \

- --env-ids PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

- --command "poetry run python ppo_atari_envpool_xla_jax.py --track --wandb-project-name envpool-atari --wandb-entity openrlbenchmark" \

- --num-seeds 3 \

- --workers 1

-

-# gymnasium support

-poetry install -E mujoco

-OMP_NUM_THREADS=1 xvfb-run -a python -m cleanrl_utils.benchmark \

- --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 \

- --command "poetry run python cleanrl/gymnasium_support/ppo_continuous_action.py --no_cuda --track" \

- --num-seeds 3 \

- --workers 1

-

-poetry install "dm_control mujoco"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

- --command "poetry run python cleanrl/gymnasium_support/ppo_continuous_action.py --no_cuda --track" \

- --num-seeds 3 \

- --workers 9

-

-poetry install "envpool jax"

-python -m cleanrl_utils.benchmark \

- --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

- --command "poetry run python cleanrl/ppo_atari_envpool_xla_jax_scan.py --track --capture_video" \

- --num-seeds 3 \

- --workers 1

-

-poetry install "mujoco dm_control"

-OMP_NUM_THREADS=1 xvfb-run -a poetry run python -m cleanrl_utils.benchmark \

- --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

- --command "poetry run python cleanrl/ppo_continuous_action.py --exp-name ppo_continuous_action_8M --total-timesteps 8000000 --no_cuda --track" \

- --num-seeds 10 \

- --workers 1

+ --workers 9 \

+ --slurm-gpus-per-task 1 \

+ --slurm-ntasks 1 \

+ --slurm-total-cpus 10 \

+ --slurm-template-path benchmark/cleanrl_1gpu.slurm_template

diff --git a/benchmark/ppo_plot.sh b/benchmark/ppo_plot.sh

new file mode 100644

index 000000000..95678d986

--- /dev/null

+++ b/benchmark/ppo_plot.sh

@@ -0,0 +1,117 @@

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo?tag=pr-424' \

+ --env-ids CartPole-v1 Acrobot-v1 MountainCar-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_continuous_action?tag=pr-424' \

+ --env-ids HalfCheetah-v4 Walker2d-v4 Hopper-v4 InvertedPendulum-v4 Humanoid-v4 Pusher-v4 dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_continuous_action \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_continuous_action?tag=v1.0.0-13-gcbd83f6' \

+ --env-ids dm_control/acrobot-swingup-v0 dm_control/acrobot-swingup_sparse-v0 dm_control/ball_in_cup-catch-v0 dm_control/cartpole-balance-v0 dm_control/cartpole-balance_sparse-v0 dm_control/cartpole-swingup-v0 dm_control/cartpole-swingup_sparse-v0 dm_control/cartpole-two_poles-v0 dm_control/cartpole-three_poles-v0 dm_control/cheetah-run-v0 dm_control/dog-stand-v0 dm_control/dog-walk-v0 dm_control/dog-trot-v0 dm_control/dog-run-v0 dm_control/dog-fetch-v0 dm_control/finger-spin-v0 dm_control/finger-turn_easy-v0 dm_control/finger-turn_hard-v0 dm_control/fish-upright-v0 dm_control/fish-swim-v0 dm_control/hopper-stand-v0 dm_control/hopper-hop-v0 dm_control/humanoid-stand-v0 dm_control/humanoid-walk-v0 dm_control/humanoid-run-v0 dm_control/humanoid-run_pure_state-v0 dm_control/humanoid_CMU-stand-v0 dm_control/humanoid_CMU-run-v0 dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 dm_control/manipulator-bring_ball-v0 dm_control/manipulator-bring_peg-v0 dm_control/manipulator-insert_ball-v0 dm_control/manipulator-insert_peg-v0 dm_control/pendulum-swingup-v0 dm_control/point_mass-easy-v0 dm_control/point_mass-hard-v0 dm_control/quadruped-walk-v0 dm_control/quadruped-run-v0 dm_control/quadruped-escape-v0 dm_control/quadruped-fetch-v0 dm_control/reacher-easy-v0 dm_control/reacher-hard-v0 dm_control/stacker-stack_2-v0 dm_control/stacker-stack_4-v0 dm_control/swimmer-swimmer6-v0 dm_control/swimmer-swimmer15-v0 dm_control/walker-stand-v0 dm_control/walker-walk-v0 dm_control/walker-run-v0 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_continuous_action_dm_control \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari_lstm?tag=pr-424' \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_lstm \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool?tag=pr-424' \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=envpool-atari&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool_xla_jax' \

+ --filters '?we=openrlbenchmark&wpn=baselines&ceik=env&cen=exp_name&metric=charts/episodic_return' \

+ 'baselines-ppo2-cnn' \

+ --env-ids Alien-v5 Amidar-v5 Assault-v5 Asterix-v5 Asteroids-v5 Atlantis-v5 BankHeist-v5 BattleZone-v5 BeamRider-v5 Berzerk-v5 Bowling-v5 Boxing-v5 Breakout-v5 Centipede-v5 ChopperCommand-v5 CrazyClimber-v5 Defender-v5 DemonAttack-v5 DoubleDunk-v5 Enduro-v5 FishingDerby-v5 Freeway-v5 Frostbite-v5 Gopher-v5 Gravitar-v5 Hero-v5 IceHockey-v5 Jamesbond-v5 Kangaroo-v5 Krull-v5 KungFuMaster-v5 MontezumaRevenge-v5 MsPacman-v5 NameThisGame-v5 Phoenix-v5 Pitfall-v5 Pong-v5 PrivateEye-v5 Qbert-v5 Riverraid-v5 RoadRunner-v5 Robotank-v5 Seaquest-v5 Skiing-v5 Solaris-v5 SpaceInvaders-v5 StarGunner-v5 Surround-v5 Tennis-v5 TimePilot-v5 Tutankham-v5 UpNDown-v5 Venture-v5 VideoPinball-v5 WizardOfWor-v5 YarsRevenge-v5 Zaxxon-v5 \

+ --env-ids AlienNoFrameskip-v4 AmidarNoFrameskip-v4 AssaultNoFrameskip-v4 AsterixNoFrameskip-v4 AsteroidsNoFrameskip-v4 AtlantisNoFrameskip-v4 BankHeistNoFrameskip-v4 BattleZoneNoFrameskip-v4 BeamRiderNoFrameskip-v4 BerzerkNoFrameskip-v4 BowlingNoFrameskip-v4 BoxingNoFrameskip-v4 BreakoutNoFrameskip-v4 CentipedeNoFrameskip-v4 ChopperCommandNoFrameskip-v4 CrazyClimberNoFrameskip-v4 DefenderNoFrameskip-v4 DemonAttackNoFrameskip-v4 DoubleDunkNoFrameskip-v4 EnduroNoFrameskip-v4 FishingDerbyNoFrameskip-v4 FreewayNoFrameskip-v4 FrostbiteNoFrameskip-v4 GopherNoFrameskip-v4 GravitarNoFrameskip-v4 HeroNoFrameskip-v4 IceHockeyNoFrameskip-v4 JamesbondNoFrameskip-v4 KangarooNoFrameskip-v4 KrullNoFrameskip-v4 KungFuMasterNoFrameskip-v4 MontezumaRevengeNoFrameskip-v4 MsPacmanNoFrameskip-v4 NameThisGameNoFrameskip-v4 PhoenixNoFrameskip-v4 PitfallNoFrameskip-v4 PongNoFrameskip-v4 PrivateEyeNoFrameskip-v4 QbertNoFrameskip-v4 RiverraidNoFrameskip-v4 RoadRunnerNoFrameskip-v4 RobotankNoFrameskip-v4 SeaquestNoFrameskip-v4 SkiingNoFrameskip-v4 SolarisNoFrameskip-v4 SpaceInvadersNoFrameskip-v4 StarGunnerNoFrameskip-v4 SurroundNoFrameskip-v4 TennisNoFrameskip-v4 TimePilotNoFrameskip-v4 TutankhamNoFrameskip-v4 UpNDownNoFrameskip-v4 VentureNoFrameskip-v4 VideoPinballNoFrameskip-v4 WizardOfWorNoFrameskip-v4 YarsRevengeNoFrameskip-v4 ZaxxonNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 4 \

+ --pc.ncols-legend 2 \

+ --rliable \

+ --rc.score_normalization_method atari \

+ --rc.normalized_score_threshold 8.0 \

+ --rc.sample_efficiency_plots \

+ --rc.sample_efficiency_and_walltime_efficiency_method Median \

+ --rc.performance_profile_plots \

+ --rc.aggregate_metrics_plots \

+ --rc.sample_efficiency_num_bootstrap_reps 50000 \

+ --rc.performance_profile_num_bootstrap_reps 50000 \

+ --rc.interval_estimates_num_bootstrap_reps 50000 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool_xla_jax \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/avg_episodic_return' \

+ 'ppo_atari_envpool_xla_jax?tag=pr-424' \

+ 'ppo_atari_envpool_xla_jax_scan?tag=pr-424' \

+ --env-ids Pong-v5 BeamRider-v5 Breakout-v5 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_envpool_xla_jax_scan \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_procgen?tag=pr-424' \

+ --env-ids starpilot bossfight bigfish \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_procgen \

+ --scan-history

+

+python -m openrlbenchmark.rlops \

+ --filters '?we=openrlbenchmark&wpn=cleanrl&ceik=env_id&cen=exp_name&metric=charts/episodic_return' \

+ 'ppo_atari_multigpu?tag=pr-424' \

+ 'ppo_atari?tag=pr-424' \

+ --env-ids PongNoFrameskip-v4 BeamRiderNoFrameskip-v4 BreakoutNoFrameskip-v4 \

+ --no-check-empty-runs \

+ --pc.ncols 3 \

+ --pc.ncols-legend 2 \

+ --output-filename benchmark/cleanrl/ppo_atari_multigpu \

+ --scan-history

diff --git a/docs/benchmark/ppo_atari_envpool.md b/docs/benchmark/ppo_atari_envpool.md

new file mode 100644

index 000000000..4f6f20afc

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool.md

@@ -0,0 +1,5 @@

+| | openrlbenchmark/cleanrl/ppo_atari_envpool ({'tag': ['pr-424']}) | openrlbenchmark/cleanrl/ppo_atari ({'tag': ['pr-424']}) |

+|:-------------|:------------------------------------------------------------------|:----------------------------------------------------------|

+| Pong-v5 | 20.45 ± 0.09 | 20.36 ± 0.20 |

+| BeamRider-v5 | 2501.85 ± 210.52 | 1915.93 ± 484.58 |

+| Breakout-v5 | 211.24 ± 151.84 | 414.66 ± 28.09 |

\ No newline at end of file

diff --git a/docs/benchmark/ppo_atari_envpool_runtimes.md b/docs/benchmark/ppo_atari_envpool_runtimes.md

new file mode 100644

index 000000000..dcd106cbe

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool_runtimes.md

@@ -0,0 +1,5 @@

+| | openrlbenchmark/cleanrl/ppo_atari_envpool ({'tag': ['pr-424']}) | openrlbenchmark/cleanrl/ppo_atari ({'tag': ['pr-424']}) |

+|:-------------|------------------------------------------------------------------:|----------------------------------------------------------:|

+| Pong-v5 | 178.375 | 281.071 |

+| BeamRider-v5 | 182.944 | 284.941 |

+| Breakout-v5 | 151.384 | 264.077 |

\ No newline at end of file

diff --git a/docs/benchmark/ppo_atari_envpool_xla_jax.md b/docs/benchmark/ppo_atari_envpool_xla_jax.md

new file mode 100644

index 000000000..e85cd8e55

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool_xla_jax.md

@@ -0,0 +1,59 @@

+| | openrlbenchmark/envpool-atari/ppo_atari_envpool_xla_jax ({}) | openrlbenchmark/baselines/baselines-ppo2-cnn ({}) |

+|:--------------------|:---------------------------------------------------------------|:----------------------------------------------------|

+| Alien-v5 | 1736.39 ± 68.65 | 1705.80 ± 439.74 |

+| Amidar-v5 | 653.53 ± 44.06 | 585.99 ± 52.92 |

+| Assault-v5 | 6791.74 ± 420.03 | 4878.67 ± 815.64 |

+| Asterix-v5 | 4820.33 ± 1091.83 | 3738.50 ± 745.13 |

+| Asteroids-v5 | 1633.67 ± 247.21 | 1556.90 ± 151.20 |

+| Atlantis-v5 | 3778458.33 ± 117680.68 | 2036749.00 ± 95929.75 |

+| BankHeist-v5 | 1195.44 ± 18.54 | 1213.47 ± 14.46 |

+| BattleZone-v5 | 24283.75 ± 1841.94 | 19980.00 ± 1355.21 |

+| BeamRider-v5 | 2478.44 ± 336.55 | 2835.71 ± 387.92 |

+| Berzerk-v5 | 992.88 ± 196.90 | 1049.77 ± 144.58 |

+| Bowling-v5 | 51.62 ± 13.53 | 59.66 ± 0.62 |

+| Boxing-v5 | 92.68 ± 1.41 | 93.32 ± 0.36 |

+| Breakout-v5 | 430.09 ± 8.12 | 405.73 ± 11.47 |

+| Centipede-v5 | 3309.34 ± 325.05 | 3688.54 ± 412.24 |

+| ChopperCommand-v5 | 5642.83 ± 802.34 | 816.33 ± 114.14 |

+| CrazyClimber-v5 | 118763.04 ± 4915.34 | 119344.67 ± 4902.83 |

+| Defender-v5 | 48558.98 ± 4466.76 | 50161.67 ± 4477.49 |

+| DemonAttack-v5 | 29283.83 ± 7007.31 | 13788.43 ± 1313.44 |

+| DoubleDunk-v5 | -6.81 ± 0.24 | -12.96 ± 0.31 |

+| Enduro-v5 | 1297.23 ± 143.71 | 986.69 ± 25.28 |

+| FishingDerby-v5 | 21.21 ± 6.73 | 26.23 ± 2.76 |

+| Freeway-v5 | 33.10 ± 0.31 | 32.97 ± 0.37 |

+| Frostbite-v5 | 1137.34 ± 1192.05 | 933.60 ± 885.92 |

+| Gopher-v5 | 6505.29 ± 7655.20 | 3672.53 ± 1749.20 |

+| Gravitar-v5 | 1099.33 ± 603.06 | 881.67 ± 33.73 |

+| Hero-v5 | 26429.65 ± 924.74 | 24746.88 ± 3530.10 |

+| IceHockey-v5 | -4.33 ± 0.43 | -4.12 ± 0.20 |

+| Jamesbond-v5 | 496.08 ± 24.60 | 536.50 ± 82.33 |

+| Kangaroo-v5 | 6582.12 ± 5395.44 | 5325.33 ± 3464.80 |

+| Krull-v5 | 9718.09 ± 649.15 | 8737.10 ± 294.58 |

+| KungFuMaster-v5 | 26000.25 ± 1965.22 | 30451.67 ± 5515.45 |

+| MontezumaRevenge-v5 | 0.08 ± 0.12 | 1.00 ± 1.41 |

+| MsPacman-v5 | 2345.67 ± 185.94 | 2152.83 ± 152.80 |

+| NameThisGame-v5 | 5750.00 ± 181.32 | 6815.63 ± 1098.95 |

+| Phoenix-v5 | 14474.11 ± 1794.83 | 9517.73 ± 1176.62 |

+| Pitfall-v5 | 0.00 ± 0.00 | -0.76 ± 0.55 |

+| Pong-v5 | 20.39 ± 0.24 | 20.45 ± 0.81 |

+| PrivateEye-v5 | 100.00 ± 0.00 | 31.83 ± 43.74 |

+| Qbert-v5 | 17246.27 ± 605.40 | 15228.25 ± 920.95 |

+| Riverraid-v5 | 8275.25 ± 256.63 | 9023.57 ± 1386.85 |

+| RoadRunner-v5 | 33040.38 ± 16488.95 | 40125.33 ± 7249.13 |

+| Robotank-v5 | 14.43 ± 4.98 | 16.45 ± 3.37 |

+| Seaquest-v5 | 1240.30 ± 419.36 | 1518.33 ± 400.35 |

+| Skiing-v5 | -18483.46 ± 8684.71 | -22978.48 ± 9894.25 |

+| Solaris-v5 | 2198.36 ± 147.23 | 2365.33 ± 157.75 |

+| SpaceInvaders-v5 | 1188.82 ± 80.52 | 1019.75 ± 49.08 |

+| StarGunner-v5 | 43519.12 ± 4709.23 | 44457.67 ± 3031.86 |

+| Surround-v5 | -2.58 ± 2.31 | -4.97 ± 0.99 |

+| Tennis-v5 | -17.64 ± 4.60 | -16.44 ± 1.46 |

+| TimePilot-v5 | 6476.46 ± 993.30 | 6346.67 ± 663.31 |

+| Tutankham-v5 | 249.05 ± 16.56 | 190.73 ± 12.00 |

+| UpNDown-v5 | 487495.41 ± 39751.49 | 156143.70 ± 70620.88 |

+| Venture-v5 | 0.00 ± 0.00 | 109.33 ± 61.57 |

+| VideoPinball-v5 | 43133.94 ± 6362.12 | 53121.26 ± 2580.70 |

+| WizardOfWor-v5 | 6353.58 ± 116.59 | 5346.33 ± 277.11 |

+| YarsRevenge-v5 | 55757.68 ± 7467.49 | 9394.97 ± 2743.74 |

+| Zaxxon-v5 | 3689.67 ± 2477.25 | 5532.67 ± 2607.65 |

\ No newline at end of file

diff --git a/docs/benchmark/ppo_atari_envpool_xla_jax_runtimes.md b/docs/benchmark/ppo_atari_envpool_xla_jax_runtimes.md

new file mode 100644

index 000000000..09fe628f0

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool_xla_jax_runtimes.md

@@ -0,0 +1,59 @@

+| | openrlbenchmark/envpool-atari/ppo_atari_envpool_xla_jax ({}) | openrlbenchmark/baselines/baselines-ppo2-cnn ({}) |

+|:--------------------|---------------------------------------------------------------:|----------------------------------------------------:|

+| Alien-v5 | 50.3275 | 117.397 |

+| Amidar-v5 | 42.8176 | 114.093 |

+| Assault-v5 | 35.9245 | 108.094 |

+| Asterix-v5 | 37.7117 | 113.386 |

+| Asteroids-v5 | 39.9731 | 114.409 |

+| Atlantis-v5 | 40.1527 | 123.05 |

+| BankHeist-v5 | 38.7443 | 137.308 |

+| BattleZone-v5 | 45.0654 | 138.489 |

+| BeamRider-v5 | 42.0778 | 119.437 |

+| Berzerk-v5 | 38.7173 | 135.316 |

+| Bowling-v5 | 35.0156 | 131.365 |

+| Boxing-v5 | 48.8149 | 151.607 |

+| Breakout-v5 | 42.3547 | 122.828 |

+| Centipede-v5 | 43.6886 | 150.112 |

+| ChopperCommand-v5 | 45.9308 | 131.192 |

+| CrazyClimber-v5 | 36.0841 | 127.942 |

+| Defender-v5 | 35.1029 | 132.29 |

+| DemonAttack-v5 | 35.41 | 128.476 |

+| DoubleDunk-v5 | 41.4521 | 108.028 |

+| Enduro-v5 | 44.9909 | 142.046 |

+| FishingDerby-v5 | 51.6075 | 151.286 |

+| Freeway-v5 | 50.7103 | 154.163 |

+| Frostbite-v5 | 47.5474 | 146.092 |

+| Gopher-v5 | 36.2977 | 139.496 |

+| Gravitar-v5 | 41.9322 | 138.746 |

+| Hero-v5 | 50.5106 | 152.413 |

+| IceHockey-v5 | 43.0228 | 144.455 |

+| Jamesbond-v5 | 38.8264 | 137.321 |

+| Kangaroo-v5 | 44.4304 | 142.436 |

+| Krull-v5 | 47.7748 | 147.313 |

+| KungFuMaster-v5 | 43.1534 | 141.903 |

+| MontezumaRevenge-v5 | 44.8838 | 146.777 |

+| MsPacman-v5 | 42.6463 | 138.382 |

+| NameThisGame-v5 | 43.8473 | 136.264 |

+| Phoenix-v5 | 36.7586 | 129.716 |

+| Pitfall-v5 | 44.6369 | 137.36 |

+| Pong-v5 | 36.7657 | 118.745 |

+| PrivateEye-v5 | 43.3399 | 143.957 |

+| Qbert-v5 | 40.1475 | 135.255 |

+| Riverraid-v5 | 44.2555 | 142.627 |

+| RoadRunner-v5 | 46.1059 | 145.451 |

+| Robotank-v5 | 48.3364 | 149.681 |

+| Seaquest-v5 | 38.3639 | 136.942 |

+| Skiing-v5 | 38.6402 | 132.061 |

+| Solaris-v5 | 50.2944 | 136.9 |

+| SpaceInvaders-v5 | 39.4931 | 125.83 |

+| StarGunner-v5 | 33.7096 | 119.18 |

+| Surround-v5 | 33.923 | 132.017 |

+| Tennis-v5 | 39.6194 | 97.019 |

+| TimePilot-v5 | 37.0124 | 130.693 |

+| Tutankham-v5 | 36.9677 | 139.694 |

+| UpNDown-v5 | 52.9895 | 140.876 |

+| Venture-v5 | 37.9828 | 144.236 |

+| VideoPinball-v5 | 47.1716 | 179.866 |

+| WizardOfWor-v5 | 37.5751 | 142.086 |

+| YarsRevenge-v5 | 36.5889 | 127.358 |

+| Zaxxon-v5 | 41.9785 | 133.922 |

\ No newline at end of file

diff --git a/docs/benchmark/ppo_atari_envpool_xla_jax_scan.md b/docs/benchmark/ppo_atari_envpool_xla_jax_scan.md

new file mode 100644

index 000000000..7fca897b7

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool_xla_jax_scan.md

@@ -0,0 +1,5 @@

+| | openrlbenchmark/cleanrl/ppo_atari_envpool_xla_jax ({'tag': ['pr-424']}) | openrlbenchmark/cleanrl/ppo_atari_envpool_xla_jax_scan ({'tag': ['pr-424']}) |

+|:-------------|:--------------------------------------------------------------------------|:-------------------------------------------------------------------------------|

+| Pong-v5 | 20.82 ± 0.21 | 20.52 ± 0.32 |

+| BeamRider-v5 | 2678.73 ± 426.42 | 2860.61 ± 801.30 |

+| Breakout-v5 | 420.92 ± 16.75 | 423.90 ± 5.49 |

\ No newline at end of file

diff --git a/docs/benchmark/ppo_atari_envpool_xla_jax_scan_runtimes.md b/docs/benchmark/ppo_atari_envpool_xla_jax_scan_runtimes.md

new file mode 100644

index 000000000..7c77fc420

--- /dev/null

+++ b/docs/benchmark/ppo_atari_envpool_xla_jax_scan_runtimes.md

@@ -0,0 +1,5 @@

+| | openrlbenchmark/cleanrl/ppo_atari_envpool_xla_jax ({'tag': ['pr-424']}) | openrlbenchmark/cleanrl/ppo_atari_envpool_xla_jax_scan ({'tag': ['pr-424']}) |

+|:-------------|--------------------------------------------------------------------------:|-------------------------------------------------------------------------------:|

+| Pong-v5 | 34.3237 | 34.701 |

+| BeamRider-v5 | 37.1076 | 37.2449 |

+| Breakout-v5 | 39.576 | 39.775 |

\ No newline at end of file
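The runtime table above can be summarized as a relative difference between the two variants. This is a minimal sketch, assuming both columns use the same time unit (the table does not state it); `relative_change` is a hypothetical helper name, and the numbers are copied from the table.

```python
# Runtimes copied from ppo_atari_envpool_xla_jax_scan_runtimes.md in this diff:
# (ppo_atari_envpool_xla_jax, ppo_atari_envpool_xla_jax_scan)
RUNTIMES = {
    "Pong-v5": (34.3237, 34.701),
    "BeamRider-v5": (37.1076, 37.2449),
    "Breakout-v5": (39.576, 39.775),
}


def relative_change(baseline, candidate):
    """Percentage change of candidate relative to baseline."""
    return (candidate - baseline) / baseline * 100.0


# Percentage runtime change of the scan variant per environment.
changes = {
    env: relative_change(base, scan) for env, (base, scan) in RUNTIMES.items()
}

for env, pct in changes.items():
    print(f"{env}: {pct:+.2f}%")
```

A positive value means the `_scan` variant took longer in that row.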

diff --git a/docs/benchmark/ppo_atari_multigpu.md b/docs/benchmark/ppo_atari_multigpu.md

index 6e1b62fb6..7cec5206e 100644

--- a/docs/benchmark/ppo_atari_multigpu.md

+++ b/docs/benchmark/ppo_atari_multigpu.md

@@ -1,5 +1,5 @@

-| | openrlbenchmark/cleanrl/ppo_atari_multigpu ({'tag': ['pr-424']}) |

-|:------------------------|:-------------------------------------------------------------------|

-| PongNoFrameskip-v4 | 20.83 ± 0.10 |

-| BeamRiderNoFrameskip-v4 | 2281.64 ± 392.71 |

-| BreakoutNoFrameskip-v4 | 426.99 ± 13.80 |

\ No newline at end of file

+| | openrlbenchmark/cleanrl/ppo_atari_multigpu ({'tag': ['pr-424']}) | openrlbenchmark/cleanrl/ppo_atari ({'tag': ['pr-424']}) |

+|:------------------------|:-------------------------------------------------------------------|:----------------------------------------------------------|

+| PongNoFrameskip-v4 | 20.34 ± 0.43 | 20.36 ± 0.20 |

+| BeamRiderNoFrameskip-v4 | 2414.65 ± 643.74 | 1915.93 ± 484.58 |

+| BreakoutNoFrameskip-v4 | 414.94 ± 20.60 | 414.66 ± 28.09 |

\ No newline at end of file
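The `docs/rl-algorithms/ppo.md` changes in this diff replace inline commands with snippet includes such as `--8<-- "benchmark/ppo.sh:3:8"` and `--8<-- "benchmark/ppo_plot.sh::9"`. The sketch below shows how such a `path:start:end` range can be read, assuming 1-indexed inclusive line numbers and an empty start meaning "from the first line". It is not the snippet extension's implementation, and `select_lines` is a hypothetical helper.

```python
def select_lines(text, spec):
    """Return (path, lines) for a 'path:start:end' spec, 1-indexed and inclusive.

    An empty or missing start means line 1; an empty or missing end means
    the last line. Paths that themselves contain ':' are not handled here.
    """
    parts = spec.split(":")
    path = parts[0]
    start = int(parts[1]) if len(parts) > 1 and parts[1] else 1
    end = int(parts[2]) if len(parts) > 2 and parts[2] else None
    lines = text.splitlines()
    return path, lines[start - 1:end]


# Stand-in file content; the real benchmark/ppo.sh is not reproduced here.
sample = "\n".join(f"line {i}" for i in range(1, 13))

path, body = select_lines(sample, "benchmark/ppo.sh:3:8")
print(path, body[0], body[-1], len(body))

_, head = select_lines(sample, "benchmark/ppo_plot.sh::9")
print(head[0], head[-1], len(head))
```

Because the includes are line-based, any edit that shifts lines in `benchmark/ppo.sh` or `benchmark/ppo_plot.sh` would also require updating the ranges in the docs.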

diff --git a/docs/rl-algorithms/ppo.md b/docs/rl-algorithms/ppo.md

index cc4c7a809..6eb50cbe6 100644

--- a/docs/rl-algorithms/ppo.md

+++ b/docs/rl-algorithms/ppo.md

@@ -100,27 +100,28 @@ Running `python cleanrl/ppo.py` will automatically record various metrics such a

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

-

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:3:8"

+```

Below are the average episodic returns for `ppo.py`. To ensure the quality of the implementation, we compared the results against `openai/baselines`' PPO.

| Environment | `ppo.py` | `openai/baselines`' PPO (Huang et al., 2022)[^1] |

| ----------- | ----------- | ----------- |

-| CartPole-v1 | 492.40 ± 13.05 |497.54 ± 4.02 |

-| Acrobot-v1 | -89.93 ± 6.34 | -81.82 ± 5.58 |

+| CartPole-v1 | 490.04 ± 6.12 |497.54 ± 4.02 |

+| Acrobot-v1 | -86.36 ± 1.32 | -81.82 ± 5.58 |

| MountainCar-v0 | -200.00 ± 0.00 | -200.00 ± 0.00 |

Learning curves:

-

-

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh::9"

+```

-

-

-

+

+

Tracked experiments and game play videos:

@@ -186,27 +187,28 @@ See [related docs](/rl-algorithms/ppo/#explanation-of-the-logged-metrics) for `p

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:14:19"

+```

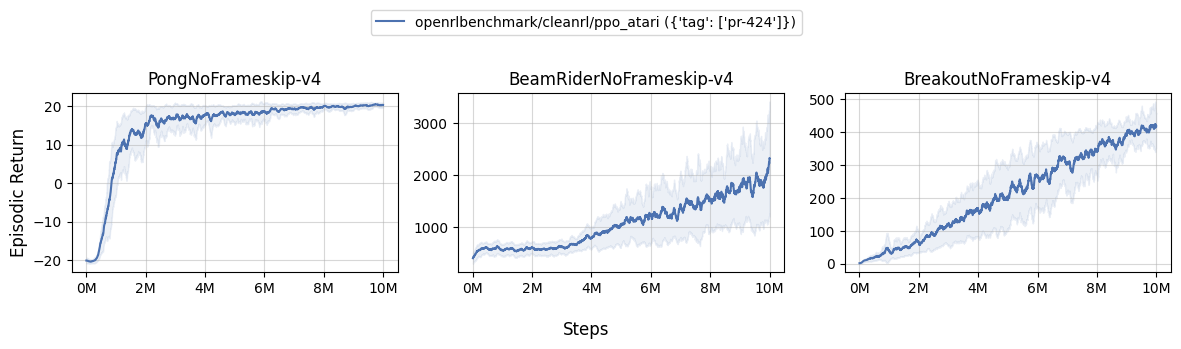

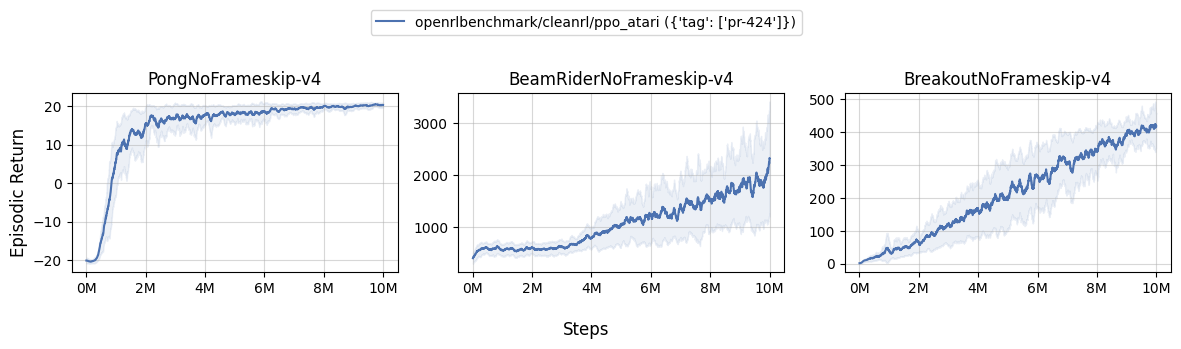

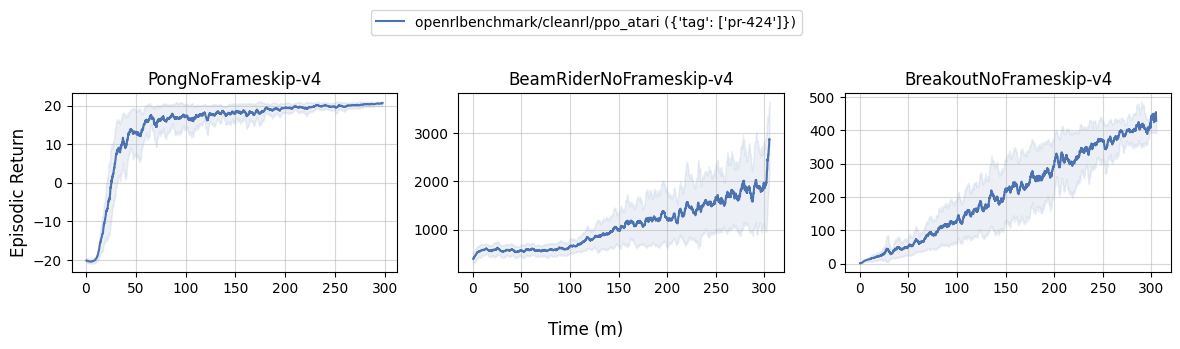

Below are the average episodic returns for `ppo_atari.py`. To ensure the quality of the implementation, we compared the results against `openai/baselines`' PPO.

| Environment | `ppo_atari.py` | `openai/baselines`' PPO (Huang et al., 2022)[^1] |

| ----------- | ----------- | ----------- |

-| BreakoutNoFrameskip-v4 | 416.31 ± 43.92 | 406.57 ± 31.554 |

-| PongNoFrameskip-v4 | 20.59 ± 0.35 | 20.512 ± 0.50 |

-| BeamRiderNoFrameskip-v4 | 2445.38 ± 528.91 | 2642.97 ± 670.37 |

+| BreakoutNoFrameskip-v4 | 414.66 ± 28.09 | 406.57 ± 31.554 |

+| PongNoFrameskip-v4 | 20.36 ± 0.20 | 20.512 ± 0.50 |

+| BeamRiderNoFrameskip-v4 | 1915.93 ± 484.58 | 2642.97 ± 670.37 |

Learning curves:

-

-

-

-

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:11:19"

+```

-

-

+

+

Tracked experiments and game play videos:

@@ -248,9 +250,6 @@ The [ppo_continuous_action.py](https://github.com/vwxyzjn/cleanrl/blob/master/cl

# dm_control environments

poetry install -E "mujoco dm_control"

python cleanrl/ppo_continuous_action.py --env-id dm_control/cartpole-balance-v0

- # backwards compatibility with mujoco v2 environments

- poetry install -E mujoco_py # only works in Linux

- python cleanrl/ppo_continuous_action.py --env-id Hopper-v2

```

=== "pip"

@@ -261,8 +260,6 @@ The [ppo_continuous_action.py](https://github.com/vwxyzjn/cleanrl/blob/master/cl

python cleanrl/ppo_continuous_action.py --env-id Hopper-v4

pip install -r requirements/requirements-dm_control.txt

python cleanrl/ppo_continuous_action.py --env-id dm_control/cartpole-balance-v0

- pip install -r requirements/requirements-mujoco_py.txt

- python cleanrl/ppo_continuous_action.py --env-id Hopper-v2

```

???+ warning "dm_control installation issue"

@@ -301,122 +298,97 @@ See [related docs](/rl-algorithms/ppo/#explanation-of-the-logged-metrics) for `p

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

-

-

-

-

-???+ note "Result tables, learning curves, and interactive reports"

-

- === "MuJoCo v2"

-

- Below are the average episodic returns for `ppo_continuous_action.py`. To ensure the quality of the implementation, we compared the results against `openai/baselies`' PPO.

-

- | | ppo_continuous_action ({'tag': ['v1.0.0-27-gde3f410']}) | `openai/baselies`' PPO (results taken from [here](https://wandb.ai/openrlbenchmark/openrlbenchmark/reports/MuJoCo-openai-baselines--VmlldzoyMTgyNjM0)) |

- |:--------------------|:----------------------------------------------------------|:---------------------------------------------------------------------------------------------|

- | HalfCheetah-v2 | 2262.50 ± 1196.81 | 1428.55 ± 62.40 |

- | Walker2d-v2 | 3312.32 ± 429.87 | 3356.49 ± 322.61 |

- | Hopper-v2 | 2311.49 ± 440.99 | 2158.65 ± 302.33 |

- | InvertedPendulum-v2 | 852.04 ± 17.04 | 901.25 ± 35.73 |

- | Humanoid-v2 | 676.34 ± 78.68 | 673.11 ± 53.02 |

- | Pusher-v2 | -60.49 ± 4.37 | -56.83 ± 13.33 |

-

- Learning curves:

-

-

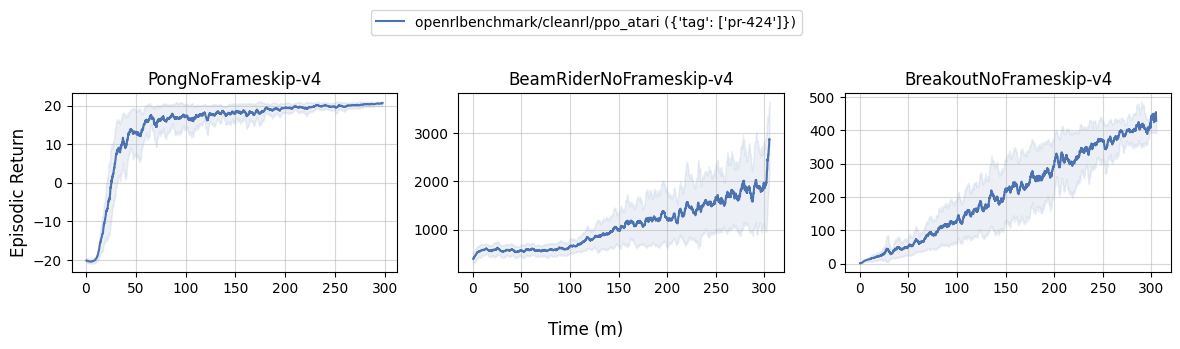

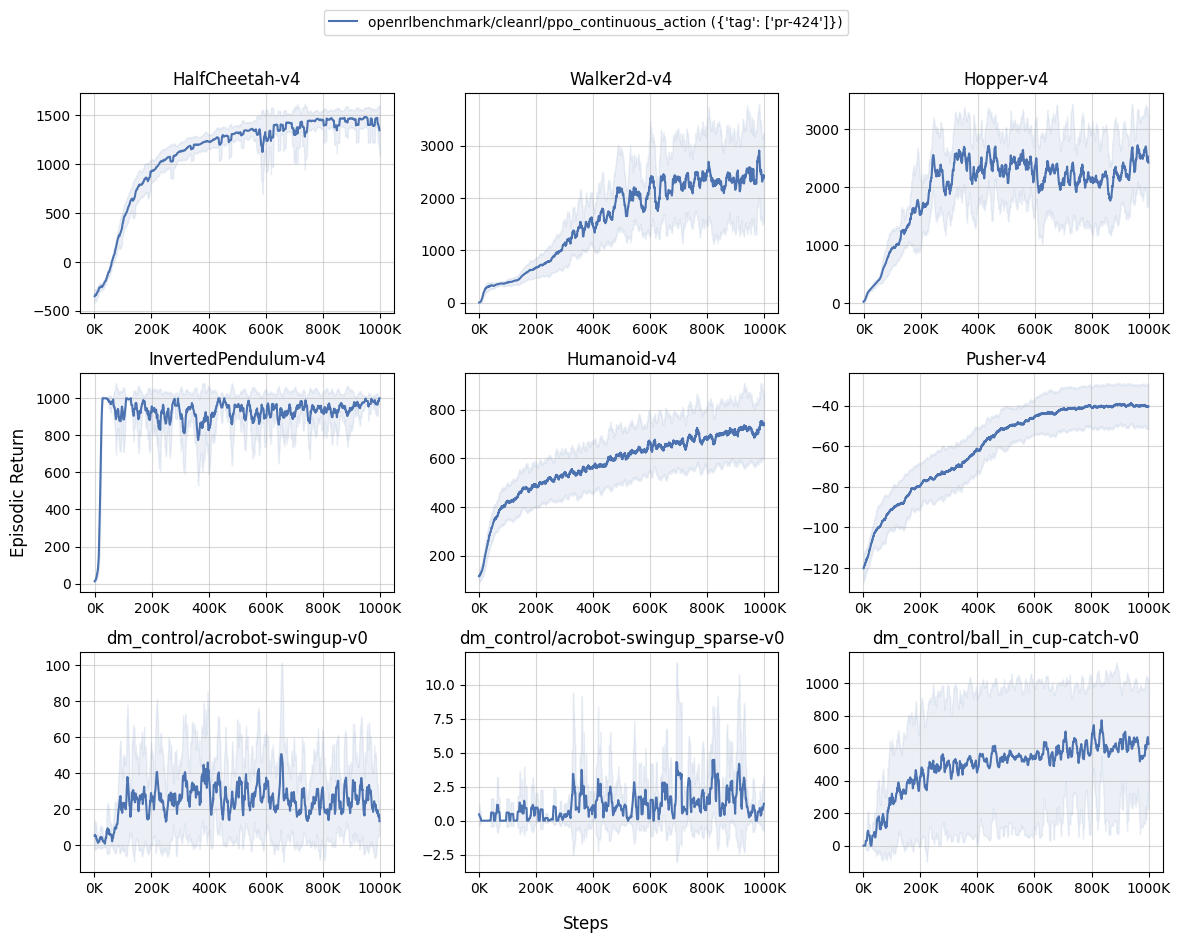

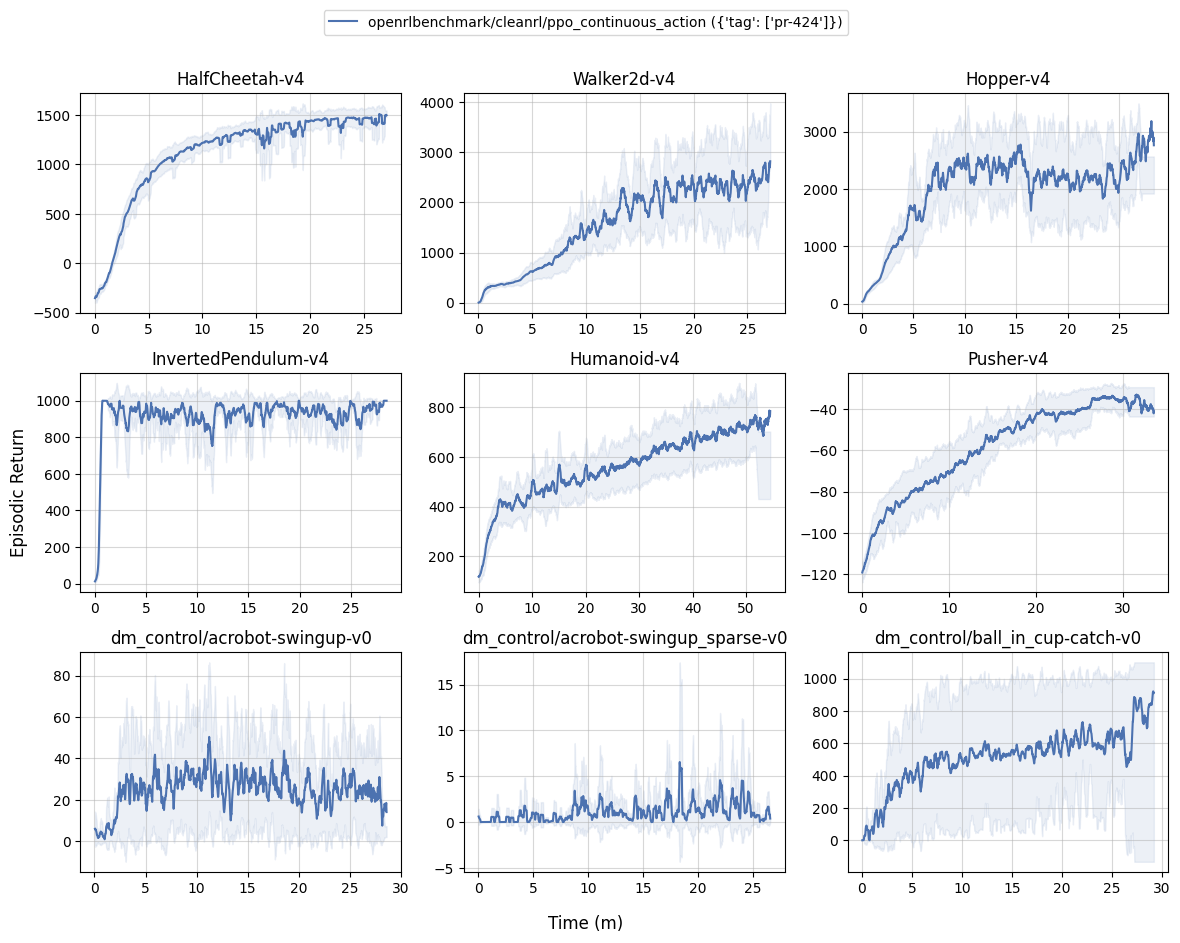

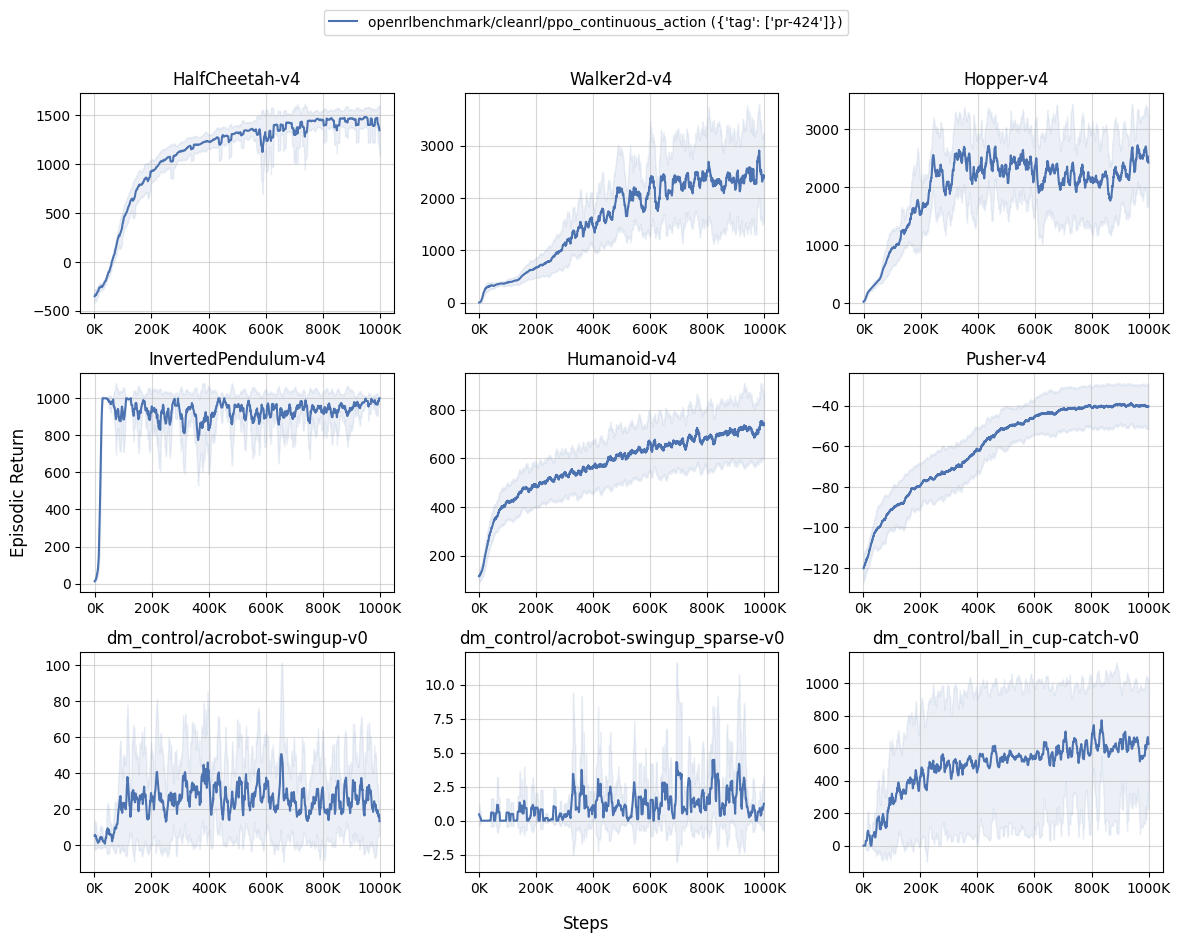

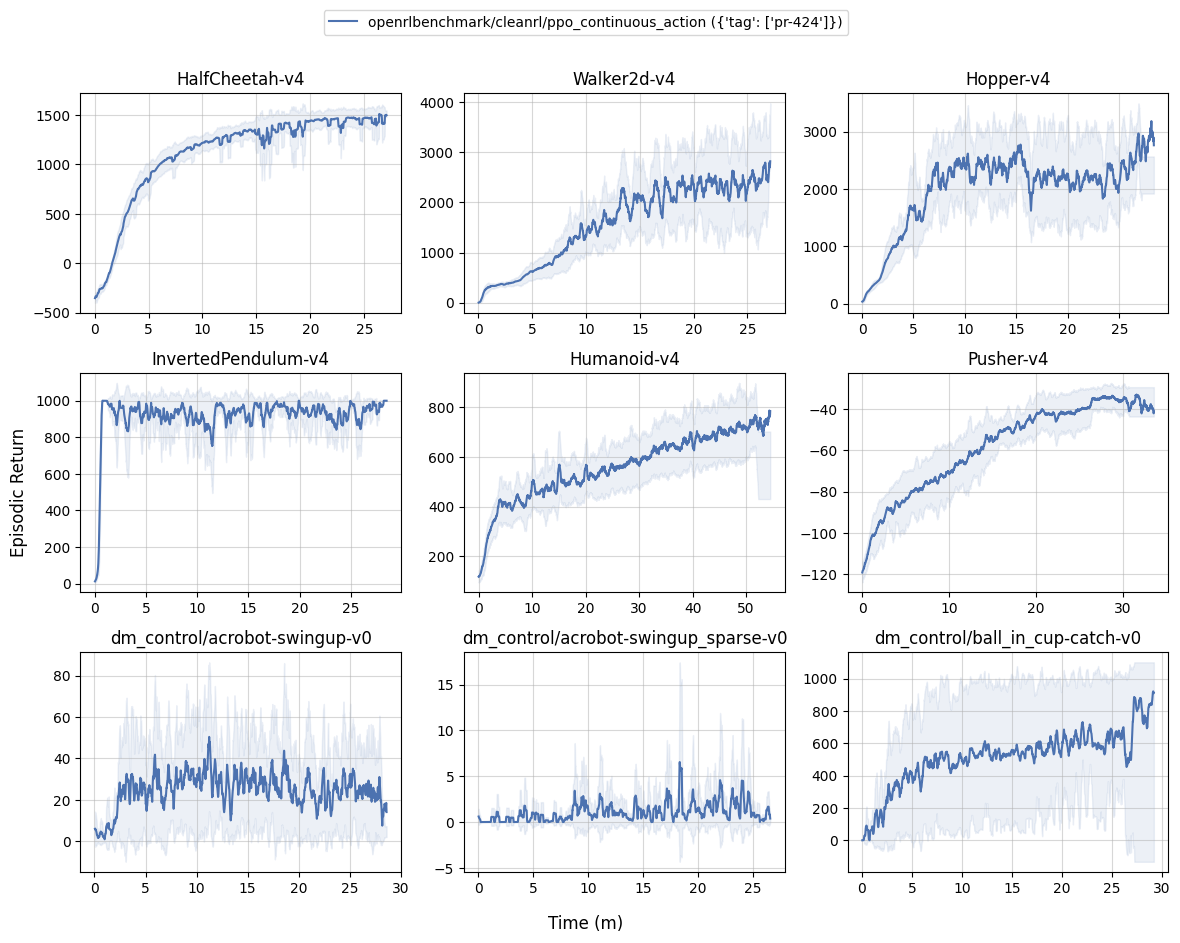

+MuJoCo v4

- Tracked experiments and game play videos:

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:25:30"

+```

-

+{!benchmark/ppo_continuous_action.md!}

- === "MuJoCo v4"

-

- Below are the average episodic returns for `ppo_continuous_action.py` in MuJoCo v4 environments and `dm_control` environments.

+Learning curves:

- | | ppo_continuous_action ({'tag': ['v1.0.0-12-g99f7789']}) |

- |:--------------------|:----------------------------------------------------------|

- | HalfCheetah-v4 | 2905.85 ± 1129.37 |

- | Walker2d-v4 | 2890.97 ± 231.40 |

- | Hopper-v4 | 2051.80 ± 313.94 |

- | InvertedPendulum-v4 | 950.98 ± 36.39 |

- | Humanoid-v4 | 742.19 ± 155.77 |

- | Pusher-v4 | -55.60 ± 3.98 |

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:11:19"

+```

+

+

+

- Learning curves:

+Tracked experiments and game play videos:

-

+

+

+

+

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:36:41"

+```

+

+Below are the average episodic returns for `ppo_continuous_action.py` in `dm_control` environments.

+

+| | ppo_continuous_action ({'tag': ['v1.0.0-13-gcbd83f6']}) |

+|:--------------------------------------|:----------------------------------------------------------|

+| dm_control/acrobot-swingup-v0 | 27.84 ± 9.25 |

+| dm_control/acrobot-swingup_sparse-v0 | 1.60 ± 1.17 |

+| dm_control/ball_in_cup-catch-v0 | 900.78 ± 5.26 |

+| dm_control/cartpole-balance-v0 | 855.47 ± 22.06 |

+| dm_control/cartpole-balance_sparse-v0 | 999.93 ± 0.10 |

+| dm_control/cartpole-swingup-v0 | 640.86 ± 11.44 |

+| dm_control/cartpole-swingup_sparse-v0 | 51.34 ± 58.35 |

+| dm_control/cartpole-two_poles-v0 | 203.86 ± 11.84 |

+| dm_control/cartpole-three_poles-v0 | 164.59 ± 3.23 |

+| dm_control/cheetah-run-v0 | 432.56 ± 82.54 |

+| dm_control/dog-stand-v0 | 307.79 ± 46.26 |

+| dm_control/dog-walk-v0 | 120.05 ± 8.80 |

+| dm_control/dog-trot-v0 | 76.56 ± 6.44 |

+| dm_control/dog-run-v0 | 60.25 ± 1.33 |

+| dm_control/dog-fetch-v0 | 34.26 ± 2.24 |

+| dm_control/finger-spin-v0 | 590.49 ± 171.09 |

+| dm_control/finger-turn_easy-v0 | 180.42 ± 44.91 |

+| dm_control/finger-turn_hard-v0 | 61.40 ± 9.59 |

+| dm_control/fish-upright-v0 | 516.21 ± 59.52 |

+| dm_control/fish-swim-v0 | 87.91 ± 6.83 |

+| dm_control/hopper-stand-v0 | 2.72 ± 1.72 |

+| dm_control/hopper-hop-v0 | 0.52 ± 0.48 |

+| dm_control/humanoid-stand-v0 | 6.59 ± 0.18 |

+| dm_control/humanoid-walk-v0 | 1.73 ± 0.03 |

+| dm_control/humanoid-run-v0 | 1.11 ± 0.04 |

+| dm_control/humanoid-run_pure_state-v0 | 0.98 ± 0.03 |

+| dm_control/humanoid_CMU-stand-v0 | 4.79 ± 0.18 |

+| dm_control/humanoid_CMU-run-v0 | 0.88 ± 0.05 |

+| dm_control/manipulator-bring_ball-v0 | 0.50 ± 0.29 |

+| dm_control/manipulator-bring_peg-v0 | 1.80 ± 1.58 |

+| dm_control/manipulator-insert_ball-v0 | 35.50 ± 13.04 |

+| dm_control/manipulator-insert_peg-v0 | 60.40 ± 21.76 |

+| dm_control/pendulum-swingup-v0 | 242.81 ± 245.95 |

+| dm_control/point_mass-easy-v0 | 273.95 ± 362.28 |

+| dm_control/point_mass-hard-v0 | 143.25 ± 38.12 |

+| dm_control/quadruped-walk-v0 | 239.03 ± 66.17 |

+| dm_control/quadruped-run-v0 | 180.44 ± 32.91 |

+| dm_control/quadruped-escape-v0 | 28.92 ± 11.21 |

+| dm_control/quadruped-fetch-v0 | 193.97 ± 22.20 |

+| dm_control/reacher-easy-v0 | 626.28 ± 15.51 |

+| dm_control/reacher-hard-v0 | 443.80 ± 9.64 |

+| dm_control/stacker-stack_2-v0 | 75.68 ± 4.83 |

+| dm_control/stacker-stack_4-v0 | 68.02 ± 4.02 |

+| dm_control/swimmer-swimmer6-v0 | 158.19 ± 10.22 |

+| dm_control/swimmer-swimmer15-v0 | 131.94 ± 0.88 |

+| dm_control/walker-stand-v0 | 564.46 ± 235.22 |

+| dm_control/walker-walk-v0 | 392.51 ± 56.25 |

+| dm_control/walker-run-v0 | 125.92 ± 10.01 |

+

+Note that the `dm_control/lqr-lqr_2_1-v0` and `dm_control/lqr-lqr_6_2-v0` environments are never terminated or truncated. See https://wandb.ai/openrlbenchmark/cleanrl/runs/3tm00923 and https://wandb.ai/openrlbenchmark/cleanrl/runs/1z9us07j for examples.

- Tracked experiments and game play videos:

-

-

+Learning curves:

- === "dm_control"

+

- Below are the average episodic returns for `ppo_continuous_action.py` in `dm_control` environments.

+Tracked experiments and game play videos:

- | | ppo_continuous_action ({'tag': ['v1.0.0-13-gcbd83f6']}) |

- |:--------------------------------------|:----------------------------------------------------------|

- | dm_control/acrobot-swingup-v0 | 27.84 ± 9.25 |

- | dm_control/acrobot-swingup_sparse-v0 | 1.60 ± 1.17 |

- | dm_control/ball_in_cup-catch-v0 | 900.78 ± 5.26 |

- | dm_control/cartpole-balance-v0 | 855.47 ± 22.06 |

- | dm_control/cartpole-balance_sparse-v0 | 999.93 ± 0.10 |

- | dm_control/cartpole-swingup-v0 | 640.86 ± 11.44 |

- | dm_control/cartpole-swingup_sparse-v0 | 51.34 ± 58.35 |

- | dm_control/cartpole-two_poles-v0 | 203.86 ± 11.84 |

- | dm_control/cartpole-three_poles-v0 | 164.59 ± 3.23 |

- | dm_control/cheetah-run-v0 | 432.56 ± 82.54 |

- | dm_control/dog-stand-v0 | 307.79 ± 46.26 |

- | dm_control/dog-walk-v0 | 120.05 ± 8.80 |

- | dm_control/dog-trot-v0 | 76.56 ± 6.44 |

- | dm_control/dog-run-v0 | 60.25 ± 1.33 |

- | dm_control/dog-fetch-v0 | 34.26 ± 2.24 |

- | dm_control/finger-spin-v0 | 590.49 ± 171.09 |

- | dm_control/finger-turn_easy-v0 | 180.42 ± 44.91 |

- | dm_control/finger-turn_hard-v0 | 61.40 ± 9.59 |

- | dm_control/fish-upright-v0 | 516.21 ± 59.52 |

- | dm_control/fish-swim-v0 | 87.91 ± 6.83 |

- | dm_control/hopper-stand-v0 | 2.72 ± 1.72 |

- | dm_control/hopper-hop-v0 | 0.52 ± 0.48 |

- | dm_control/humanoid-stand-v0 | 6.59 ± 0.18 |

- | dm_control/humanoid-walk-v0 | 1.73 ± 0.03 |

- | dm_control/humanoid-run-v0 | 1.11 ± 0.04 |

- | dm_control/humanoid-run_pure_state-v0 | 0.98 ± 0.03 |

- | dm_control/humanoid_CMU-stand-v0 | 4.79 ± 0.18 |

- | dm_control/humanoid_CMU-run-v0 | 0.88 ± 0.05 |

- | dm_control/manipulator-bring_ball-v0 | 0.50 ± 0.29 |

- | dm_control/manipulator-bring_peg-v0 | 1.80 ± 1.58 |

- | dm_control/manipulator-insert_ball-v0 | 35.50 ± 13.04 |

- | dm_control/manipulator-insert_peg-v0 | 60.40 ± 21.76 |

- | dm_control/pendulum-swingup-v0 | 242.81 ± 245.95 |

- | dm_control/point_mass-easy-v0 | 273.95 ± 362.28 |

- | dm_control/point_mass-hard-v0 | 143.25 ± 38.12 |

- | dm_control/quadruped-walk-v0 | 239.03 ± 66.17 |

- | dm_control/quadruped-run-v0 | 180.44 ± 32.91 |

- | dm_control/quadruped-escape-v0 | 28.92 ± 11.21 |

- | dm_control/quadruped-fetch-v0 | 193.97 ± 22.20 |

- | dm_control/reacher-easy-v0 | 626.28 ± 15.51 |

- | dm_control/reacher-hard-v0 | 443.80 ± 9.64 |

- | dm_control/stacker-stack_2-v0 | 75.68 ± 4.83 |

- | dm_control/stacker-stack_4-v0 | 68.02 ± 4.02 |

- | dm_control/swimmer-swimmer6-v0 | 158.19 ± 10.22 |

- | dm_control/swimmer-swimmer15-v0 | 131.94 ± 0.88 |

- | dm_control/walker-stand-v0 | 564.46 ± 235.22 |

- | dm_control/walker-walk-v0 | 392.51 ± 56.25 |

- | dm_control/walker-run-v0 | 125.92 ± 10.01 |

-

- Note that the dm_control/lqr-lqr_2_1-v0 dm_control/lqr-lqr_6_2-v0 environments are never terminated or truncated. See https://wandb.ai/openrlbenchmark/cleanrl/runs/3tm00923 and https://wandb.ai/openrlbenchmark/cleanrl/runs/1z9us07j as an example.

-

- Learning curves:

-

-

-

- Tracked experiments and game play videos:

-

-

-

+

+

???+ info

@@ -484,8 +456,9 @@ To help test out the memory, we remove the 4 stacked frames from the observation

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

-

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:47:52"

+```

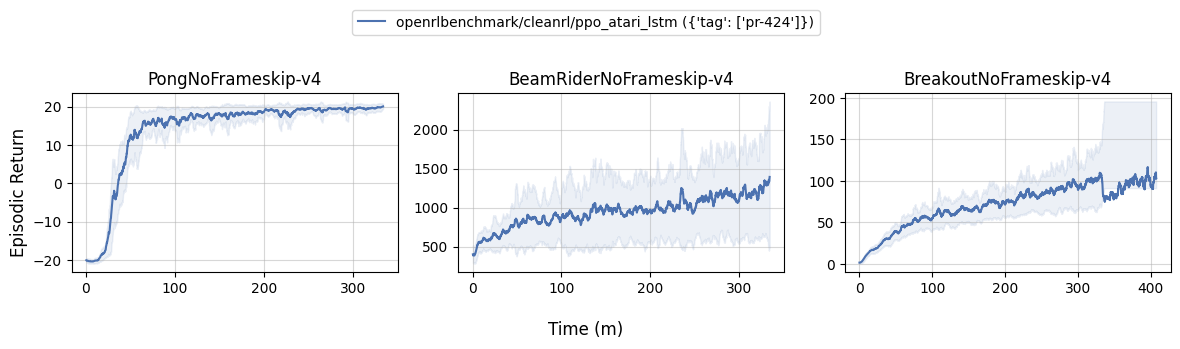

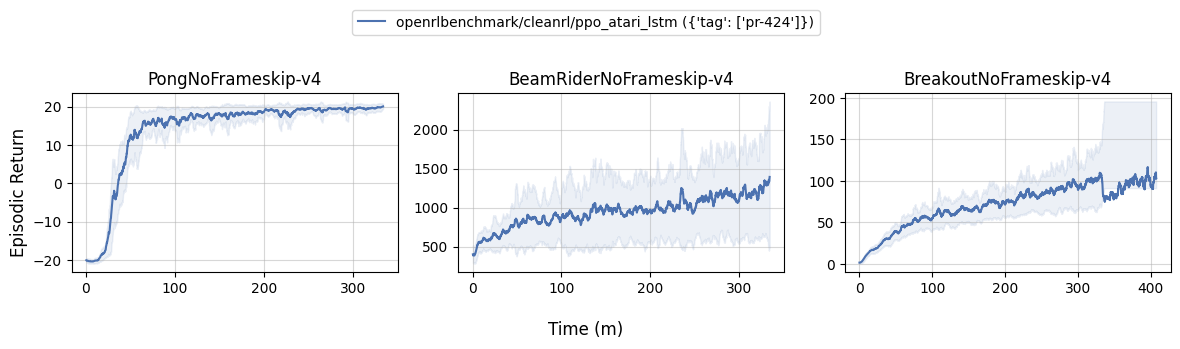

Below are the average episodic returns for `ppo_atari_lstm.py`. To ensure the quality of the implementation, we compared the results against `openai/baselines`' PPO.

@@ -499,14 +472,12 @@ Below are the average episodic returns for `ppo_atari_lstm.py`. To ensure the qu

Learning curves:

-

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:11:19"

+```

+

+

+

Tracked experiments and game play videos:

@@ -568,34 +539,22 @@ See [related docs](/rl-algorithms/ppo/#explanation-of-the-logged-metrics) for `p

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

-

-

-Below are the average episodic returns for `ppo_atari_envpool.py`. Notice it has the same sample efficiency as `ppo_atari.py`, but runs about 3x faster.

-

-

-

-| Environment | `ppo_atari_envpool.py` (~80 mins) | `ppo_atari.py` (~220 mins)

-| ----------- | ----------- | ----------- |

-| BreakoutNoFrameskip-v4 | 389.57 ± 29.62 | 416.31 ± 43.92

-| PongNoFrameskip-v4 | 20.55 ± 0.37 | 20.59 ± 0.35

-| BeamRiderNoFrameskip-v4 | 2039.83 ± 1146.62 | 2445.38 ± 528.91

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:58:63"

+```

+{!benchmark/ppo_atari_envpool.md!}

Learning curves:

-

-

-

-

-

-

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:51:62"

+```

-

-

-

+

+

Tracked experiments and game play videos:

@@ -684,96 +643,25 @@ Additionally, we record the following metric:

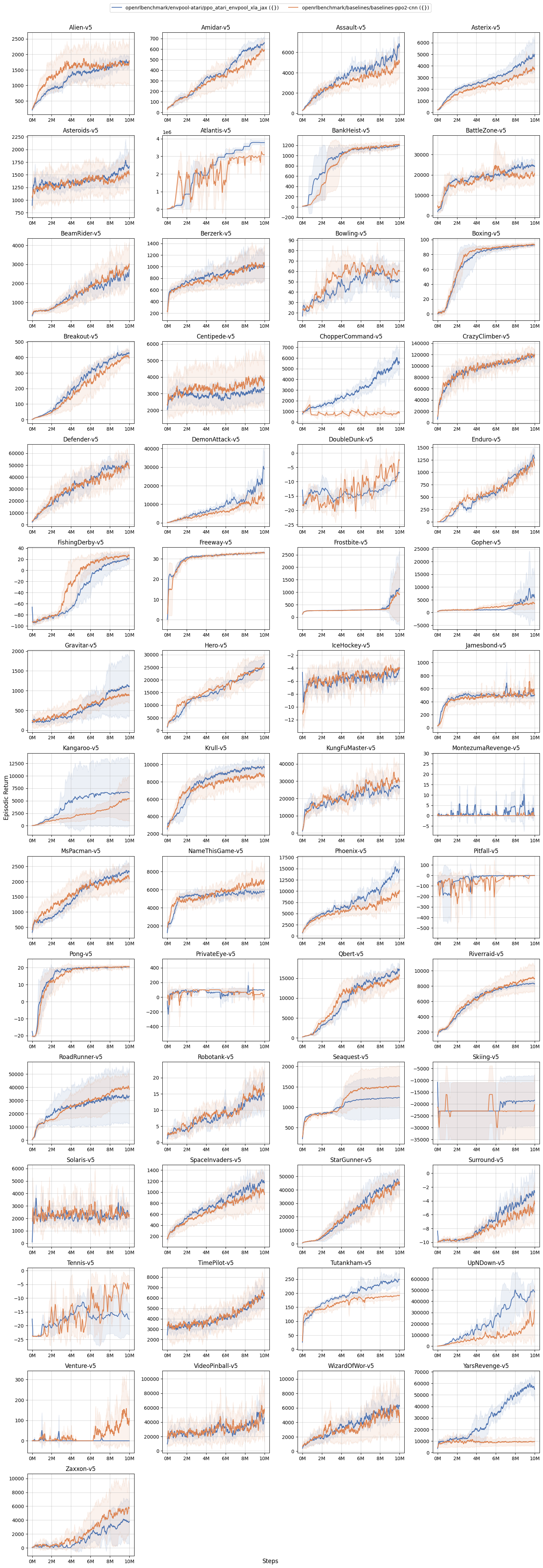

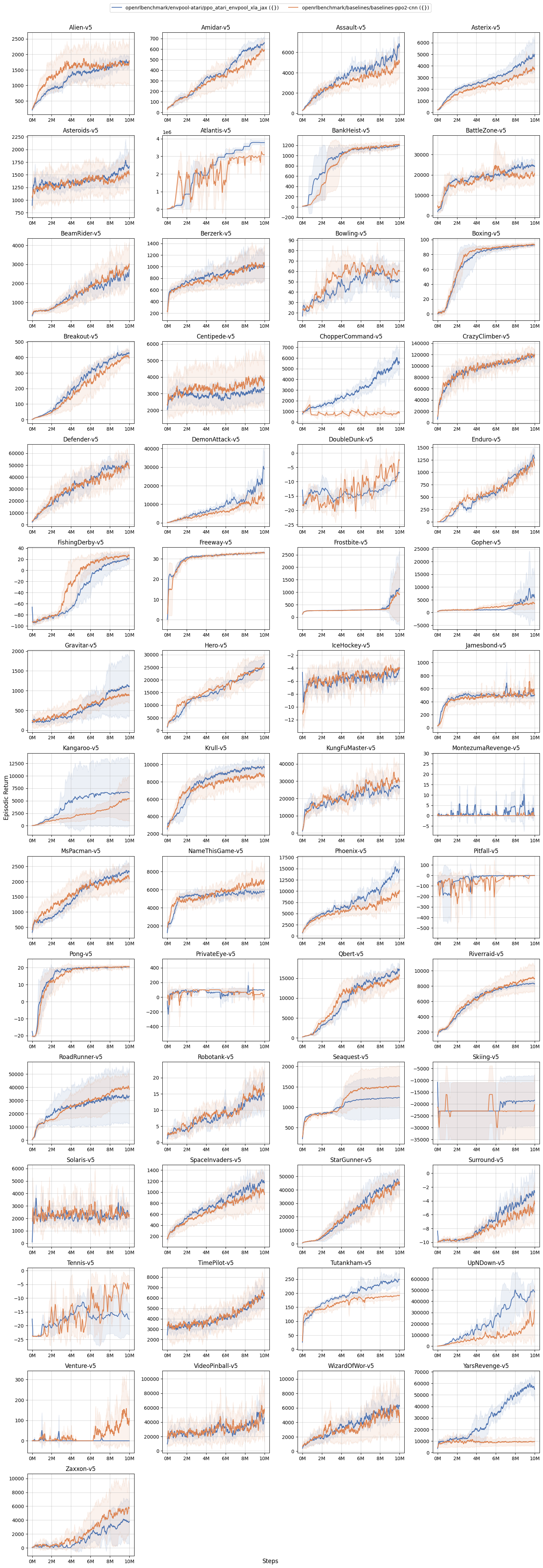

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

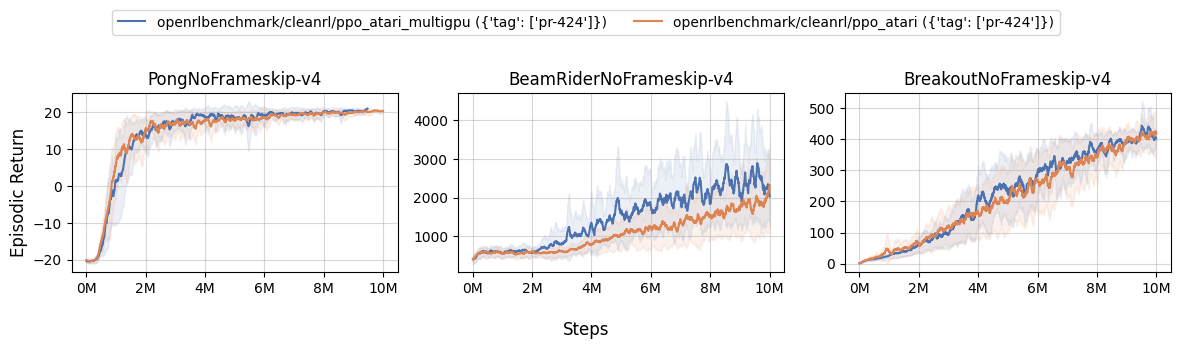

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:69:74"

+```

-Below are the average episodic returns for `ppo_atari_envpool_xla_jax.py`. Notice it has the same sample efficiency as `ppo_atari.py`, but runs about 3x faster.

-???+ info

+{!benchmark/ppo_atari_envpool_xla_jax.md!}

- The following table and charts are generated by [atari_hns_new.py](https://github.com/openrlbenchmark/openrlbenchmark/blob/0c16fda7d7873143a632865010c74263ea487339/atari_hns_new.py), [ours_vs_baselines_hns.py](https://github.com/openrlbenchmark/openrlbenchmark/blob/0c16fda7d7873143a632865010c74263ea487339/ours_vs_baselines_hns.py), and [ours_vs_seedrl_hns.py](https://github.com/openrlbenchmark/openrlbenchmark/blob/0c16fda7d7873143a632865010c74263ea487339/ours_vs_seedrl_hns.py).

+Learning curves:

+

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:64:85"

+```

+

+ +

+ +

+ -

-| Environment | CleanRL ppo_atari_envpool_xla_jax.py | openai/baselines' PPO |

-|:--------------------|---------------------------------------:|------------------------:|

-| Alien-v5 | 1744.76 | 1549.42 |

-| Amidar-v5 | 617.137 | 546.406 |

-| Assault-v5 | 5734.04 | 4050.78 |

-| Asterix-v5 | 3341.9 | 3459.9 |

-| Asteroids-v5 | 1669.3 | 1467.19 |

-| Atlantis-v5 | 3.92929e+06 | 3.09748e+06 |

-| BankHeist-v5 | 1192.68 | 1195.34 |

-| BattleZone-v5 | 24937.9 | 20314.3 |

-| BeamRider-v5 | 2447.84 | 2740.02 |

-| Berzerk-v5 | 1082.72 | 887.019 |

-| Bowling-v5 | 44.0681 | 62.2634 |

-| Boxing-v5 | 92.0554 | 93.3596 |

-| Breakout-v5 | 431.795 | 388.891 |

-| Centipede-v5 | 2910.69 | 3688.16 |

-| ChopperCommand-v5 | 5555.84 | 933.333 |

-| CrazyClimber-v5 | 116114 | 111675 |

-| Defender-v5 | 51439.2 | 50045.1 |

-| DemonAttack-v5 | 22824.8 | 12173.9 |

-| DoubleDunk-v5 | -8.56781 | -9 |

-| Enduro-v5 | 1262.79 | 1061.12 |

-| FishingDerby-v5 | 21.6222 | 23.8876 |

-| Freeway-v5 | 33.1075 | 32.9167 |

-| Frostbite-v5 | 904.346 | 924.5 |

-| Gopher-v5 | 11369.6 | 2899.57 |

-| Gravitar-v5 | 1141.95 | 870.755 |

-| Hero-v5 | 24628.3 | 25984.5 |

-| IceHockey-v5 | -4.91917 | -4.71505 |

-| Jamesbond-v5 | 504.105 | 516.489 |

-| Kangaroo-v5 | 7281.59 | 3791.5 |

-| Krull-v5 | 9384.7 | 8672.95 |

-| KungFuMaster-v5 | 26594.5 | 29116.1 |

-| MontezumaRevenge-v5 | 0.240385 | 0 |

-| MsPacman-v5 | 2461.62 | 2113.44 |

-| NameThisGame-v5 | 5442.67 | 5713.89 |

-| Phoenix-v5 | 14008.5 | 8693.21 |

-| Pitfall-v5 | -0.0801282 | -1.47059 |

-| Pong-v5 | 20.309 | 20.4043 |

-| PrivateEye-v5 | 99.5283 | 21.2121 |

-| Qbert-v5 | 16430.7 | 14283.4 |

-| Riverraid-v5 | 8297.21 | 9267.48 |

-| RoadRunner-v5 | 19342.2 | 40325 |

-| Robotank-v5 | 15.45 | 16 |

-| Seaquest-v5 | 1230.02 | 1754.44 |

-| Skiing-v5 | -14684.3 | -13901.7 |

-| Solaris-v5 | 2353.62 | 2088.12 |

-| SpaceInvaders-v5 | 1162.16 | 1017.65 |

-| StarGunner-v5 | 53535.9 | 40906 |

-| Surround-v5 | -2.94558 | -6.08095 |

-| Tennis-v5 | -15.0446 | -9.71429 |

-| TimePilot-v5 | 6224.87 | 5775.53 |

-| Tutankham-v5 | 238.419 | 197.929 |

-| UpNDown-v5 | 430177 | 129459 |

-| Venture-v5 | 0 | 115.278 |

-| VideoPinball-v5 | 42975.3 | 32777.4 |

-| WizardOfWor-v5 | 6247.83 | 5024.03 |

-| YarsRevenge-v5 | 56696.7 | 8238.44 |

-| Zaxxon-v5 | 6015.8 | 6379.79 |

-

-

-

-Median Human Normalized Score (HNS) compared to openai/baselines.

-

-

-

-

-Learning curves (left y-axis is the return and right y-axis is the human normalized score):

-

-

-

-

-Percentage of human normalized score (HNS) for each game.

-

???+ info

@@ -839,15 +727,23 @@ See [related docs](/rl-algorithms/ppo/#explanation-of-the-logged-metrics) for `p

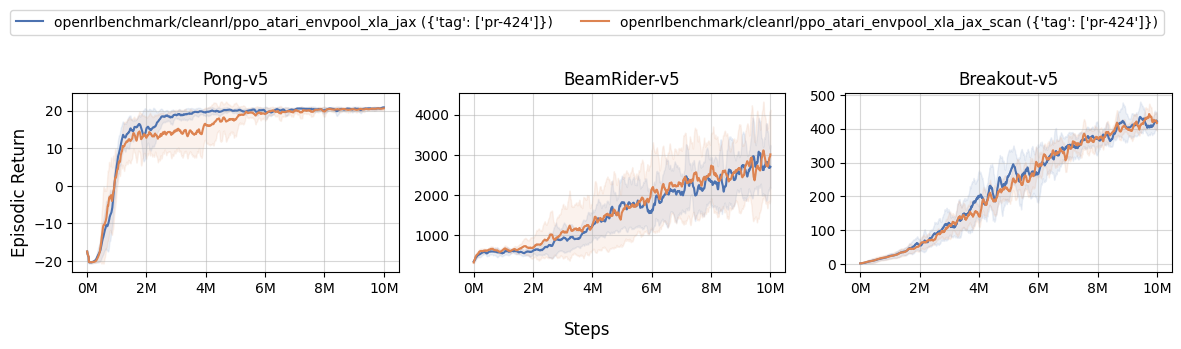

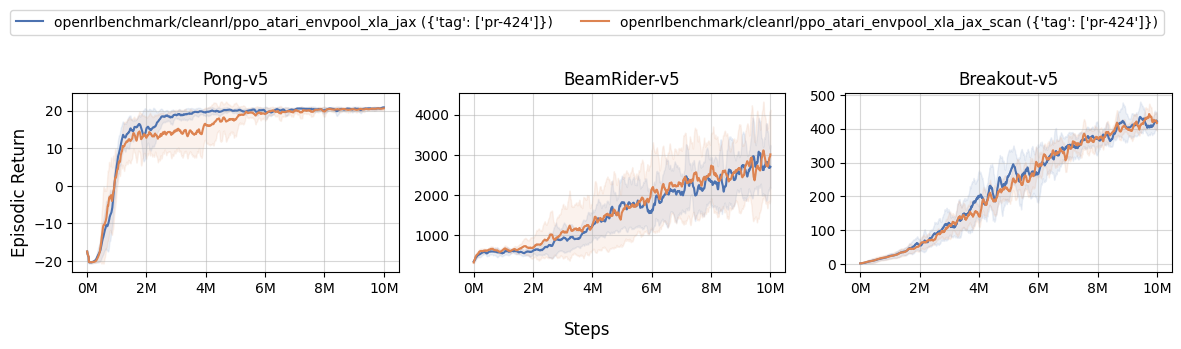

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

-Below are the average episodic returns for `ppo_atari_envpool_xla_jax_scan.py` in 3 atari games. It has the same sample efficiency as `ppo_atari_envpool_xla_jax.py`.

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:80:85"

+```

+

+

+{!benchmark/ppo_atari_envpool_xla_jax_scan.md!}

+

-| | ppo_atari_envpool_xla_jax_scan ({'tag': ['pr-328'], 'user': ['51616']}) | ppo_atari_envpool_xla_jax ({'tag': ['pr-328'], 'user': ['51616']}) | baselines-ppo2-cnn ({}) | ppo_atari_envpool_xla_jax_truncation ({'user': ['costa-huang']}) |

-|:-------------|:--------------------------------------------------------------------------|:---------------------------------------------------------------------|:--------------------------|:-------------------------------------------------------------------|

-| BeamRider-v5 | 2899.62 ± 482.12 | 2222.09 ± 1047.86 | 2835.71 ± 387.92 | 3133.78 ± 293.02 |

-| Breakout-v5 | 451.27 ± 45.52 | 424.97 ± 18.37 | 405.73 ± 11.47 | 465.90 ± 14.30 |

-| Pong-v5 | 20.37 ± 0.20 | 20.59 ± 0.40 | 20.45 ± 0.81 | 20.62 ± 0.18 |

+Learning curves:

+

+``` title="benchmark/ppo_plot.sh" linenums="1"

+--8<-- "benchmark/ppo_plot.sh:87:96"

+```

+

+

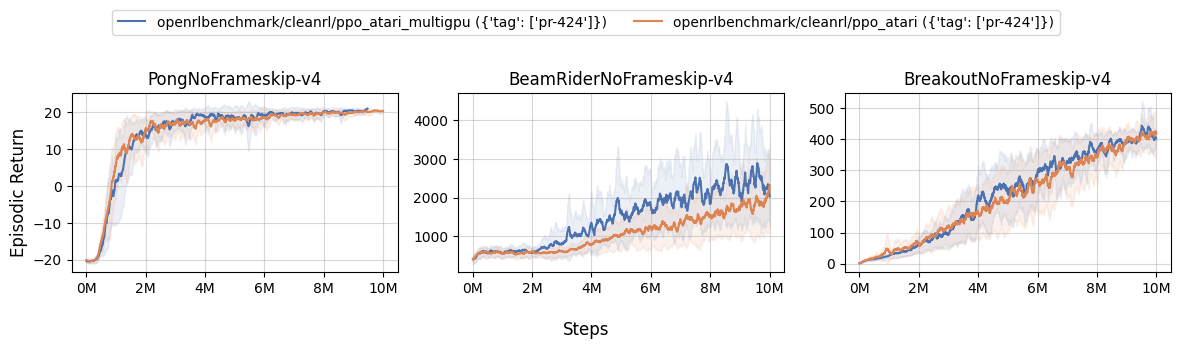

+ Learning curves:

@@ -855,15 +751,15 @@ Learning curves:

The training time of this variant and that of [ppo_atari_envpool_xla_jax.py](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/ppo_atari_envpool_xla_jax.py) are very similar but the compilation time is reduced significantly (see [vwxyzjn/cleanrl#328](https://github.com/vwxyzjn/cleanrl/pull/328#issuecomment-1340474894)). Note that the hardware also affects the speed in the learning curve below. Runs from [`costa-huang`](https://github.com/vwxyzjn/) (red) are slower than those of [`51616`](https://github.com/51616/) (blue and orange) because of hardware differences.
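
As a rough illustration of why compilation time can drop while run time stays similar, the sketch below compares a Python-unrolled loop with `jax.lax.scan`. It assumes the `_scan` suffix of this variant refers to `jax.lax.scan`; the `step` function and the loop length are hypothetical stand-ins, not code from `ppo_atari_envpool_xla_jax_scan.py`.

```python
import jax
import jax.numpy as jnp


def step(carry, _):
    # Hypothetical per-step update standing in for one rollout or update step.
    new_carry = carry * 0.5 + 1.0
    return new_carry, new_carry


def rollout_unrolled(x, num_steps):
    # A Python loop is unrolled during tracing, so the traced program
    # contains num_steps copies of `step`.
    outputs = []
    for _ in range(num_steps):
        x, y = step(x, None)
        outputs.append(y)
    return x, jnp.stack(outputs)


def rollout_scan(x, num_steps):
    # jax.lax.scan traces `step` once and emits a single loop construct,
    # which usually keeps the compiled program (and compile time) smaller.
    return jax.lax.scan(step, x, None, length=num_steps)


x0 = jnp.float32(0.0)
final_a, ys_a = jax.jit(rollout_unrolled, static_argnums=1)(x0, 8)
final_b, ys_b = jax.jit(rollout_scan, static_argnums=1)(x0, 8)
print(bool(jnp.allclose(ys_a, ys_b)))
```

Both versions compute the same values, so sample efficiency should be unaffected; only the size of the traced program differs.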

-

-

+

+

+

Tracked experiments:

-

## `ppo_procgen.py`

The [ppo_procgen.py](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/ppo_procgen.py) has the following features:

@@ -908,8 +804,10 @@ See [related docs](/rl-algorithms/ppo/#explanation-of-the-logged-metrics) for `p

To run benchmark experiments, see :material-github: [benchmark/ppo.sh](https://github.com/vwxyzjn/cleanrl/blob/master/benchmark/ppo.sh). Specifically, execute the following command:

-

+``` title="benchmark/ppo.sh" linenums="1"

+--8<-- "benchmark/ppo.sh:91:100"

+```

We try to match the default settings in [openai/train-procgen](https://github.com/openai/train-procgen) except that we use the `easy` distribution mode and `total_timesteps=25e6` to save compute. Note that [openai/train-procgen](https://github.com/openai/train-procgen) uses the following settings:

@@ -921,24 +819,25 @@ Below are the average episodic returns for `ppo_procgen.py`. To ensure the quali

| Environment | `ppo_procgen.py` | `openai/baselines`' PPO (Huang et al., 2022)[^1] |

| ----------- | ----------- | ----------- |

-| StarPilot (easy) | 32.47 ± 11.21 | 33.97 ± 7.86 |

-| BossFight (easy) | 9.63 ± 2.35 | 9.35 ± 2.04 |

-| BigFish (easy) | 16.80 ± 9.49 | 20.06 ± 5.34 |

-

+| StarPilot (easy) | 30.99 ± 1.96 | 33.97 ± 7.86 |

+| BossFight (easy) | 8.85 ± 0.33 | 9.35 ± 2.04 |

+| BigFish (easy) | 16.46 ± 2.71 | 20.06 ± 5.34 |
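
Entries of the form `mean ± spread` can be produced from per-seed results along the following lines. This is a minimal sketch: it assumes the spread is the sample standard deviation across seeds, which the table does not state, and the `summarize` helper and the input numbers are hypothetical rather than real benchmark data.

```python
from statistics import mean, stdev


def summarize(returns_per_seed):
    # Format per-seed average returns as "mean ± std".
    # Assumption: sample standard deviation (n - 1 in the denominator).
    return f"{mean(returns_per_seed):.2f} ± {stdev(returns_per_seed):.2f}"


# Hypothetical average episodic returns from three seeds.
hypothetical_returns = [10.0, 12.0, 14.0]
print(summarize(hypothetical_returns))  # → 12.00 ± 2.00
```

With only three seeds the spread estimate is itself noisy, so small differences between columns should be read with care.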

-???+ info

- Note that we have run the procgen experiments using the `easy` distribution for reducing the computational cost.

Learning curves:

-
