A Python framework for autonomous agent networks that handle task automation with multi-step reasoning.

Contents:

- Key Features

- Quick Start

- Technologies Used

- Project Structure

- Setting Up Your Project

- Contributing

- Troubleshooting

- Frequently Asked Questions (FAQ)

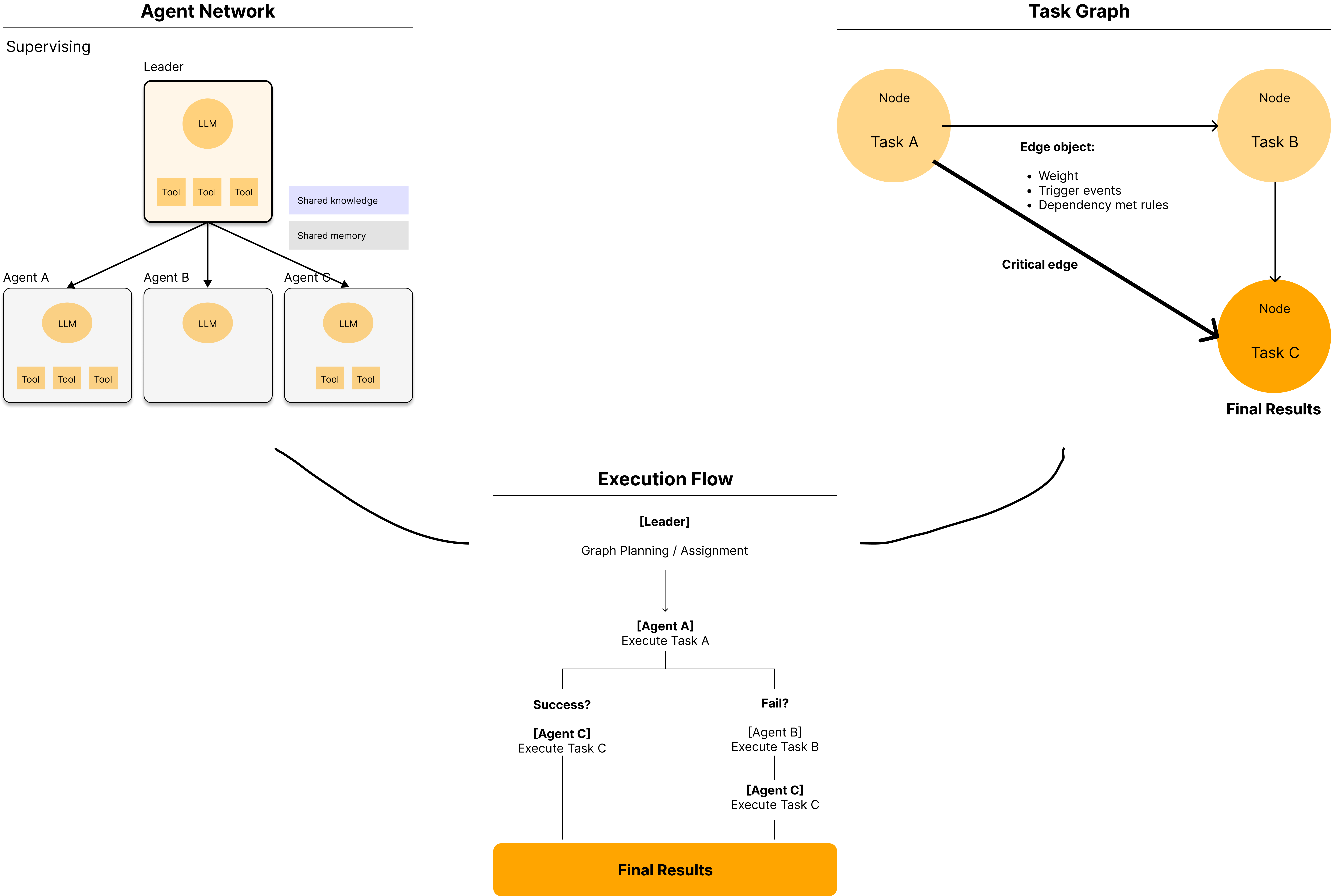

versionhq is a Python framework designed for automating complex, multi-step tasks using autonomous agent networks.

Users can either configure their agents and network manually or allow the system to automatically manage the process based on provided task goals.

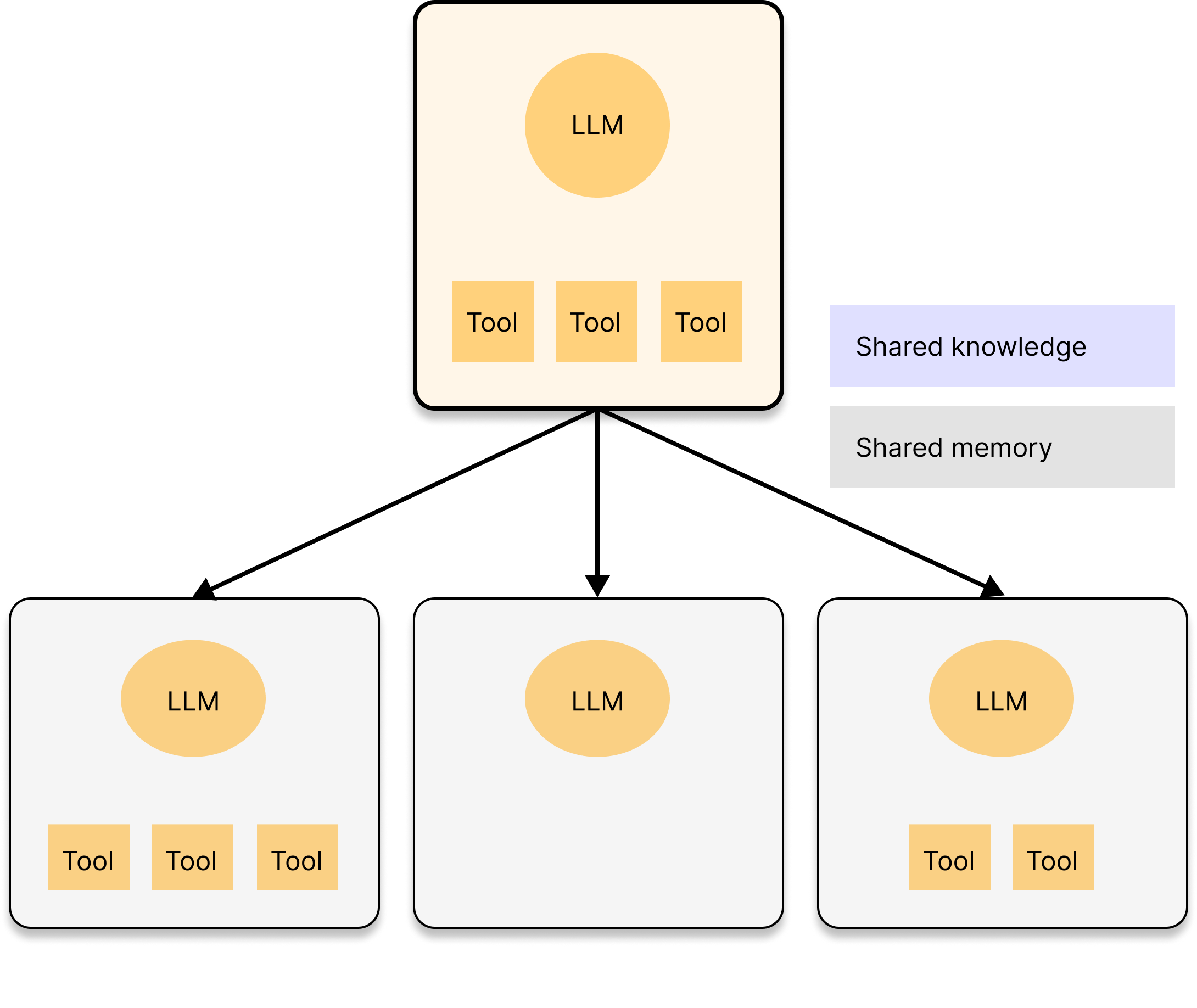

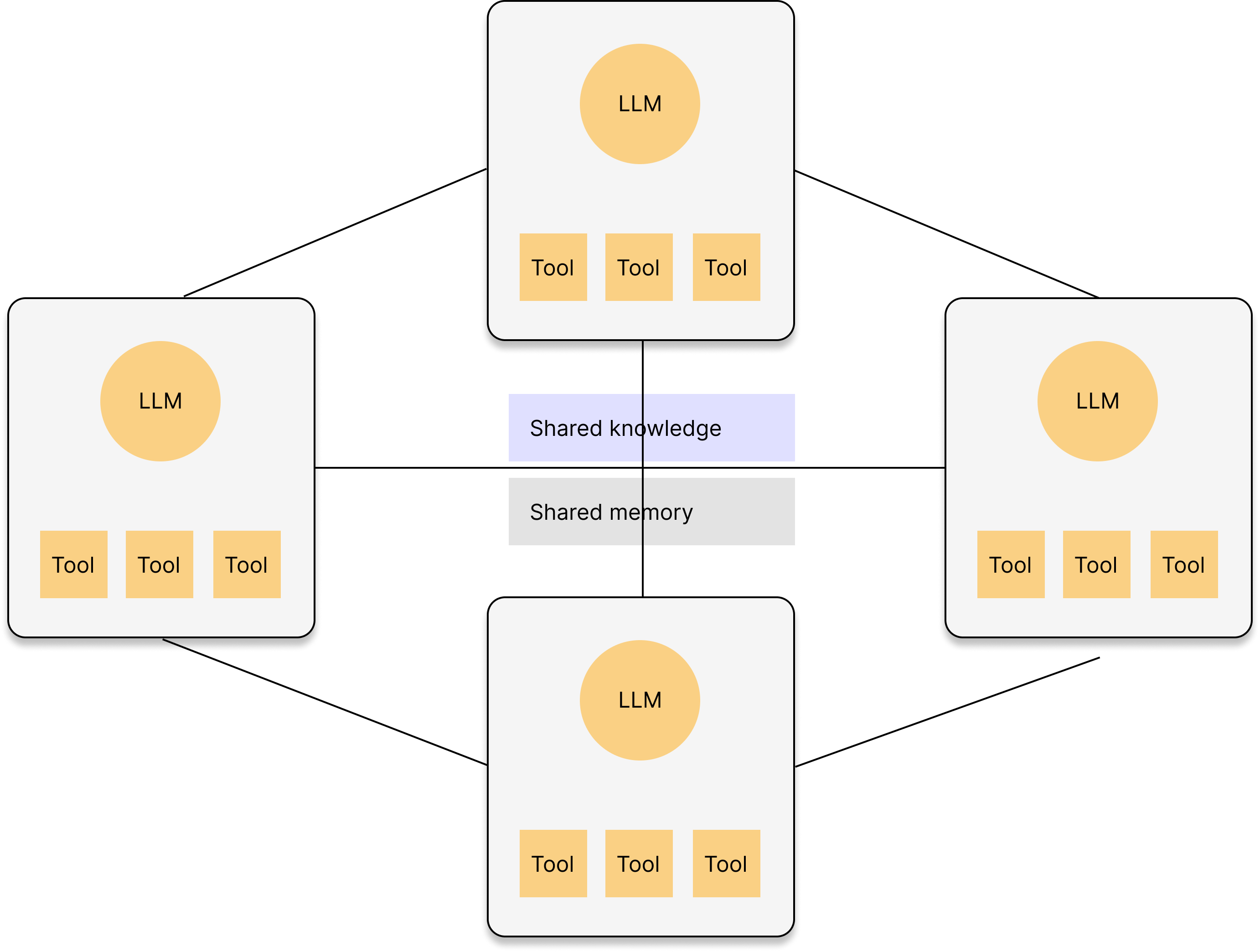

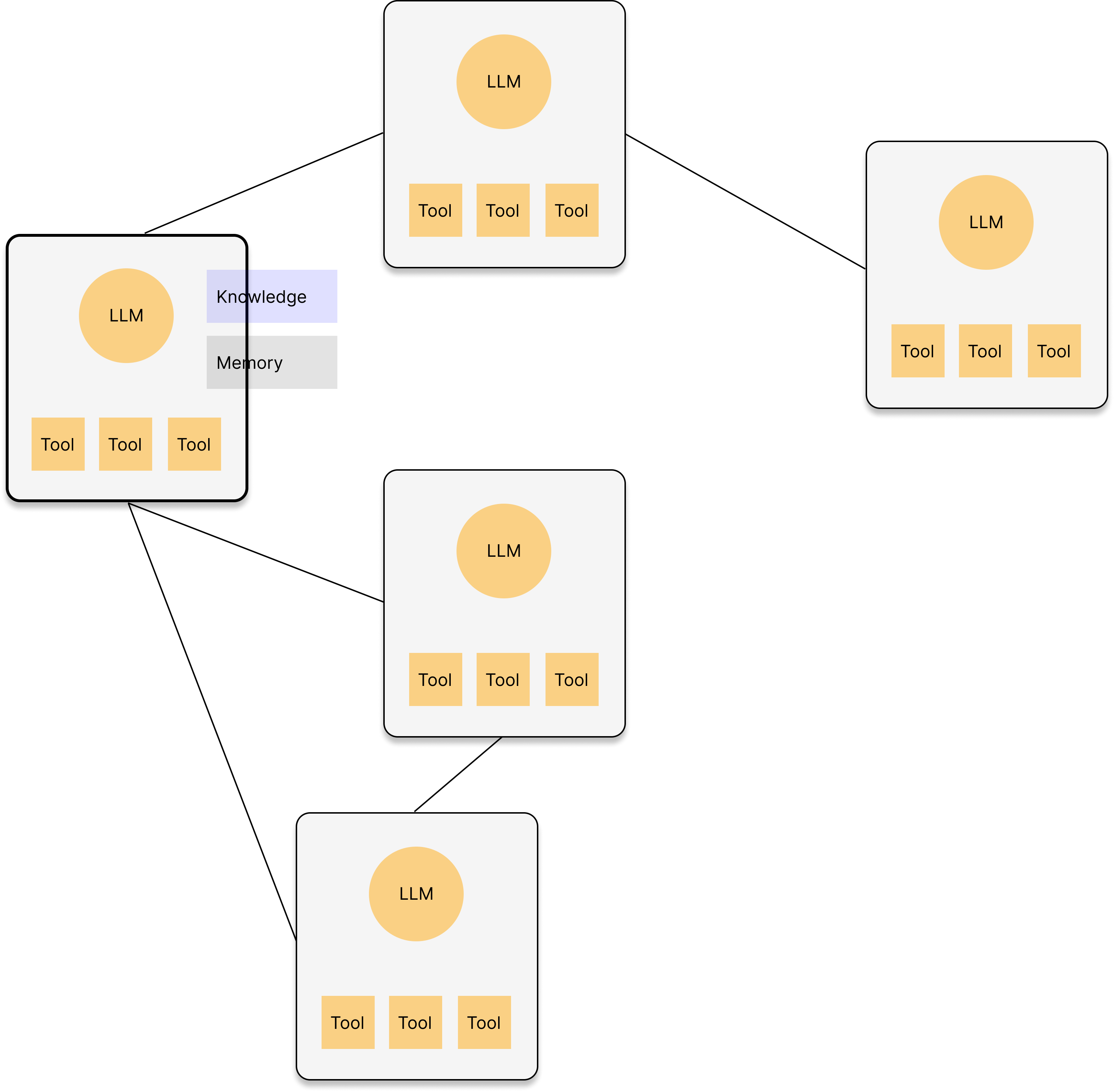

Agents adapt their formation based on task complexity.

You can specify a desired formation or allow the agents to determine it autonomously (default).

To completely automate task workflows, agents will build a task-oriented network by generating nodes that represent tasks and connecting them with dependency-defining edges.

Each node is triggered by specific events and executed by an assigned agent once all dependencies are met.
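The execution rule described above (a node runs once all of its dependencies are met) amounts to processing the graph in topological order. Here is a minimal sketch using Python's standard-library `graphlib`. It is not versionhq's scheduler, and `run_in_dependency_order` and its `execute` callback are hypothetical names chosen for illustration.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_in_dependency_order(
    deps: dict[str, set[str]],
    execute: Callable[[str, dict[str, str]], str],
) -> list[str]:
    """Run every node once all of the nodes it depends on have finished.

    deps maps a node id to the set of node ids it depends on (its incoming edges).
    execute receives a node id plus the outputs of its dependencies and returns an output.
    Returns the node ids in the order they were executed.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    outputs: dict[str, str] = {}
    order: list[str] = []
    while sorter.is_active():
        # get_ready() returns every node whose dependencies have all been marked done
        for node in sorter.get_ready():
            upstream = {dep: outputs[dep] for dep in deps.get(node, set())}
            outputs[node] = execute(node, upstream)
            order.append(node)
            sorter.done(node)  # unblocks the nodes that depend on this one
    return order


# Example: "outline" and "draft" both depend on "research".
order = run_in_dependency_order(
    {"outline": {"research"}, "draft": {"research"}},
    lambda node, upstream: f"{node} done",
)
print(order)  # "research" comes first; the other two follow in an unspecified order
```

Because `get_ready()` can hand back several nodes at once, independent nodes such as "outline" and "draft" could also be dispatched in parallel; this sketch runs them one after another for simplicity.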

While the network reconfigures itself automatically, you can still direct the agents with the `should_reform` parameter.

The following code snippet builds a TaskGraph explicitly and visualizes it, saving the diagram to the uploads directory.

```python
import versionhq as vhq

task_graph = vhq.TaskGraph(directed=False, should_reform=True)  # triggering auto formation

task_a = vhq.Task(description="Research Topic")
task_b = vhq.Task(description="Outline Post")
task_c = vhq.Task(description="Write First Draft")

node_a = task_graph.add_task(task=task_a)
node_b = task_graph.add_task(task=task_b)
node_c = task_graph.add_task(task=task_c)

# B depends on A (finish-to-start)
task_graph.add_dependency(
    node_a.identifier, node_b.identifier,
    dependency_type=vhq.DependencyType.FINISH_TO_START, weight=5, description="B depends on A"
)

# C depends on A (finish-to-finish, optional, with a lag of 1)
task_graph.add_dependency(
    node_a.identifier, node_c.identifier,
    dependency_type=vhq.DependencyType.FINISH_TO_FINISH, lag=1, required=False, weight=3
)

# To visualize the graph:
task_graph.visualize()

# To start executing nodes:
latest_output, outputs = task_graph.activate()

assert isinstance(latest_output, vhq.TaskOutput)
assert all(k in task_graph.nodes and isinstance(v, vhq.TaskOutput) for k, v in outputs.items())
```

A TaskGraph represents tasks as nodes and their execution dependencies as edges, automating rule-based execution.
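The two dependency types in the snippet above read most naturally with their usual project-scheduling meanings: finish-to-start means the successor may start only after the predecessor finishes, and finish-to-finish means the successor may finish only after the predecessor finishes. The plain-Python sketch below models just those two rules on integer time steps. `DepType`, `Dep`, and `allows` are hypothetical names; treating `lag` as a delay in time steps and `required=False` as "never blocks" are assumptions about versionhq's semantics, and `weight` is not modeled.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DepType(Enum):
    FINISH_TO_START = "FS"   # successor may START only after the predecessor finishes
    FINISH_TO_FINISH = "FF"  # successor may FINISH only after the predecessor finishes


@dataclass
class Dep:
    dep_type: DepType
    lag: int = 0           # assumed: extra time steps to wait after the predecessor finishes
    required: bool = True  # assumed: an optional dependency (required=False) never blocks


def allows(dep: Dep, action: str, now: int, pred_finished_at: Optional[int]) -> bool:
    """Return True if dep lets the successor perform action ("start" or "finish") at time now.

    pred_finished_at is the time step at which the predecessor finished, or None if it has not.
    """
    if not dep.required:
        return True
    constrained = "start" if dep.dep_type is DepType.FINISH_TO_START else "finish"
    if action != constrained:
        return True  # this dependency type does not restrict the other action
    return pred_finished_at is not None and now >= pred_finished_at + dep.lag


# With finish-to-finish and lag=1, the successor may start at any time but may
# only finish one step after the predecessor has finished.
ff = Dep(DepType.FINISH_TO_FINISH, lag=1)
print(allows(ff, "start", now=0, pred_finished_at=None))  # True
print(allows(ff, "finish", now=2, pred_finished_at=2))    # False
print(allows(ff, "finish", now=3, pred_finished_at=2))    # True
```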

Agent Networks can handle TaskGraph objects by optimizing their formations.

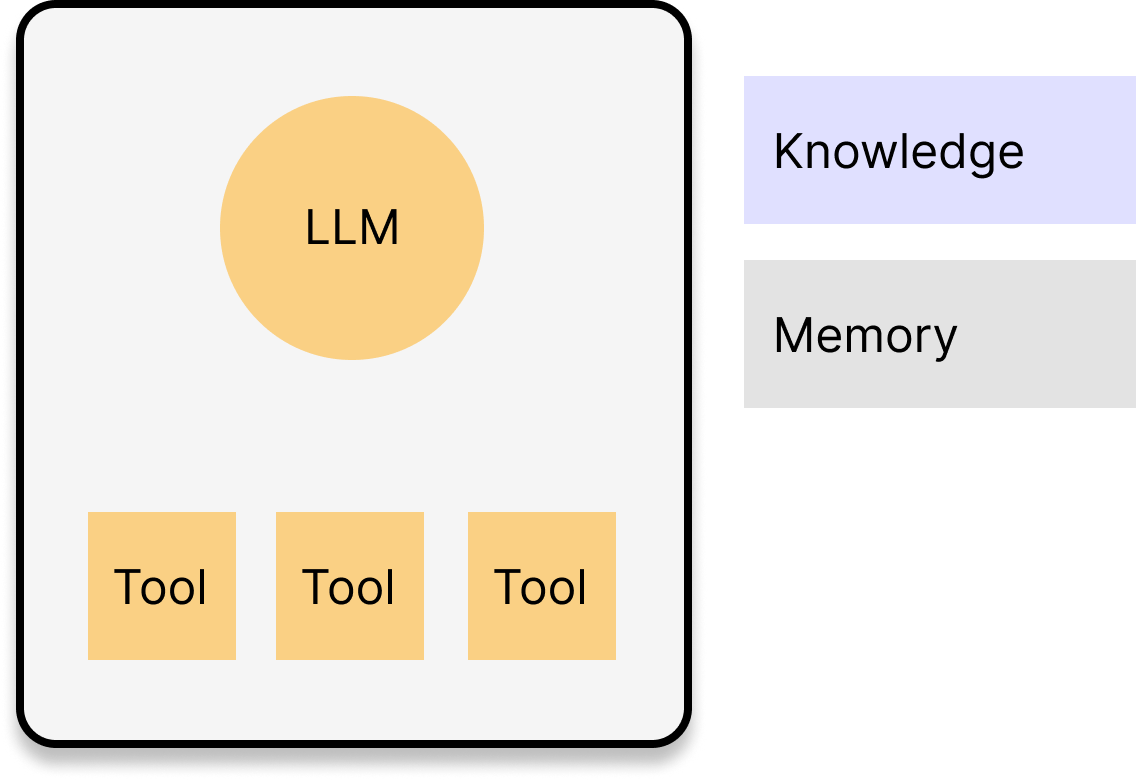

Autonomous agents are model-agnostic and can leverage their own and their peers' knowledge sources, memories, and tools.

Agents are optimized during network formation, but customization is possible before or after.

The following code snippet demonstrates agent customization:

```python
import versionhq as vhq

agent = vhq.Agent(role="Marketing Analyst")

# update the agent
agent.update(
    llm="gemini-2.0",  # updating the LLM (a valid llm_config is inherited by the new LLM)
    tools=[vhq.Tool(func=lambda x: x)],  # adding tools
    max_rpm=3,  # maximum requests per minute
    knowledge_sources=["<KS1>", "<KS2>"],  # adding knowledge sources; this triggers storage creation
    memory_config={"user_id": "0001"},  # adding memories
    dummy="I am dummy"  # <- invalid fields are ignored automatically
)
```

```bash
pip install versionhq
```

(Python 3.11 | 3.12 | 3.13)

```python
import versionhq as vhq

agent = vhq.Agent(role="Marketer")
res = agent.start()

# contains the agent's response in text, JSON, and Pydantic formats, with usage records and eval scores
assert isinstance(res, vhq.TaskOutput)
```

```python
import versionhq as vhq

network = vhq.form_agent_network(
    task="draft a promo plan",
    expected_outcome="marketing plan, budget, KPI targets",
)
res, tg = network.launch()

assert isinstance(res, vhq.TaskOutput)  # the latest output from the workflow
assert isinstance(tg, vhq.TaskGraph)  # contains task nodes and the edges that connect them with dependency-met conditions
```

You can also build and execute a single task directly with the Task class.

```python
import versionhq as vhq
from pydantic import BaseModel


class CustomOutput(BaseModel):
    test1: str
    test2: list[str]


def dummy_func(message: str, **kwargs) -> str:
    # test1 and test2 mirror the CustomOutput fields and are read from kwargs when present
    test1 = kwargs.get("test1", "")
    test2 = kwargs.get("test2", [])
    if test1 and test2:
        return f"{message}: {test1}, {', '.join(test2)}"
    return message  # always return a str, as the signature promises


task = vhq.Task(
    description="Amazing task",
    response_schema=CustomOutput,
    callback=dummy_func,
    callback_kwargs=dict(message="Hi! Here is the result: ")
)
res = task.execute(context="testing a task function")

assert isinstance(res, vhq.TaskOutput)
```

To create an agent network with one or more manager agents, mark those members with `is_manager=True`.

```python
import versionhq as vhq

agent_a = vhq.Agent(role="Member", llm="gpt-4o")
agent_b = vhq.Agent(role="Leader", llm="gemini-2.0")

task_1 = vhq.Task(
    description="Analyze the client's business model.",
    response_schema=[vhq.ResponseField(title="test1", data_type=str, required=True),],
    allow_delegation=True
)

task_2 = vhq.Task(
    description="Define a cohort.",
    response_schema=[vhq.ResponseField(title="test1", data_type=int, required=True),],
    allow_delegation=False
)

network = vhq.AgentNetwork(
    members=[
        vhq.Member(agent=agent_a, is_manager=False, tasks=[task_1]),
        vhq.Member(agent=agent_b, is_manager=True, tasks=[task_2]),  # Agent B as a manager
    ],
)
res, tg = network.launch()

assert isinstance(res, vhq.NetworkOutput)
assert "vhq-Delegated-Agent" not in task_1.processed_agents  # not handed to a newly created agent
assert "agent b" in task_1.processed_agents  # handled by the manager
```

This returns a list of dictionaries whose keys are defined by the ResponseField of each task.
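To show what "keys defined in the ResponseField" means in practice, here is a plain-Python sketch that shapes a raw response into one dictionary per task. `FieldSpec` is a hypothetical stand-in for `vhq.ResponseField(title=..., data_type=..., required=...)`, and `to_response_dict` is a hypothetical helper; dropping undeclared keys and raising on missing or mistyped fields are design assumptions, not versionhq's documented behavior.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class FieldSpec:
    """Hypothetical stand-in for vhq.ResponseField(title=..., data_type=..., required=...)."""
    title: str
    data_type: type
    required: bool = True


def to_response_dict(raw: dict[str, Any], fields: list[FieldSpec]) -> dict[str, Any]:
    """Keep only the declared keys, checking that required ones exist and types match."""
    result: dict[str, Any] = {}
    for spec in fields:
        if spec.title not in raw:
            if spec.required:
                raise ValueError(f"missing required field: {spec.title}")
            continue  # optional field absent: leave it out
        value = raw[spec.title]
        if not isinstance(value, spec.data_type):
            raise TypeError(
                f"{spec.title} should be {spec.data_type.__name__}, got {type(value).__name__}"
            )
        result[spec.title] = value
    return result


# One dictionary per task, shaped like task_1 (str) and task_2 (int) above.
outputs = [
    to_response_dict({"test1": "B2B SaaS", "notes": "dropped"}, [FieldSpec("test1", str)]),
    to_response_dict({"test1": 3}, [FieldSpec("test1", int)]),
]
print(outputs)  # [{'test1': 'B2B SaaS'}, {'test1': 3}]
```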

Tasks can be delegated to a manager, peers within the agent network, or a completely new agent.
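These three delegation targets can be pictured as a simple fallback chain. In the plain-Python sketch below, the preference order (a manager first, then a peer, then a new agent) is an assumption made for illustration; the network example above only confirms that a task owned by agent A can end up with the manager, agent B, rather than a new "vhq-Delegated-Agent". `MemberInfo` and `pick_delegate` are hypothetical names.

```python
from dataclasses import dataclass

NEW_AGENT = "vhq-Delegated-Agent"  # name used for a newly created agent in this sketch


@dataclass
class MemberInfo:
    """Hypothetical, simplified stand-in for vhq.Member."""
    name: str
    is_manager: bool = False


def pick_delegate(owner: str, members: list[MemberInfo]) -> str:
    """Pick who takes over a delegated task: a manager, else a peer, else a new agent."""
    others = [m for m in members if m.name != owner]
    managers = [m for m in others if m.is_manager]
    if managers:
        return managers[0].name
    if others:
        return others[0].name
    return NEW_AGENT


members = [MemberInfo("agent a"), MemberInfo("agent b", is_manager=True)]
print(pick_delegate("agent a", members))  # agent b
```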

Schema, Data Validation

- Pydantic: Data validation and serialization library for Python.

- Upstage: Document processor for ML tasks. (Use the Document Parser API to extract data from documents.)

- Docling: Document parsing

Workflow, Task Graph

- NetworkX: A Python package to analyze, create, and manipulate complex graph networks. Ref. Gallery

- Matplotlib: For graph visualization.

- Graphviz: For graph visualization.

LLM Curation

- LiteLLM: LLM orchestration platform

Tools

- Composio: Connect RAG agents with external tools, apps, and APIs to perform actions and receive triggers. We use tools and RAG tools from the Composio toolset.

Storage

- mem0ai: Agents' memory storage and management.

- Chroma DB: Vector database for storing and querying usage data.

- SQLite: C-language library that implements a small SQL database engine.

Deployment

- uv: Python package installer and resolver

- pre-commit: Manage and maintain pre-commit hooks

- setuptools: Build Python modules

```
.
.github
└── workflows/                # GitHub Actions
│
docs/                         # Documentation
mkdocs.yml                    # MkDocs config
│
src/
└── versionhq/                # Orchestration framework package
│     ├── agent/              # Core components
│     └── llm/
│     └── task/
│     └── tool/
│     └── ...
│
└── tests/                    # Pytest - by core component and use cases in the docs
│     └── agent/
│     └── llm/
│     └── ...
│
└── .diagrams/ [.gitignore]   # Local directory to store graph diagrams
│
└── .logs/ [.gitignore]       # Local directory to store error/warning logs for debugging
│
│
pyproject.toml                # Project config
.env.sample                   # Sample .env file
```

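For macOS:

```bash
brew install uv
```

For Ubuntu/Debian:

```bash
sudo apt-get install uv
```

Then create and activate the virtual environment and install the dependencies:

```bash
uv venv
source .venv/bin/activate
uv lock --upgrade
uv sync --all-extras
```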

- AssertionError/module mismatch errors: Set up the default Python version using pyenv:

```bash
pyenv install 3.12.8
pyenv global 3.12.8  # optional: `pyenv global system` switches back to the system default version
uv python pin 3.12.8
echo 3.12.8 >> .python-version
```

- pygraphviz-related errors: Run the following commands:

```bash
brew install graphviz
uv pip install --config-settings="--global-option=build_ext" \
  --config-settings="--global-option=-I$(brew --prefix graphviz)/include/" \
  --config-settings="--global-option=-L$(brew --prefix graphviz)/lib/" \
  pygraphviz
```

- If the error persists, skip the pygraphviz installation:

```bash
uv sync --all-extras --no-extra pygraphviz
```

Create a `.env` file in the project root and add the secret variables listed in `.env.sample`.
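To confirm that `.env` defines everything listed in `.env.sample`, a short standard-library check is enough. This is a hypothetical helper, not part of the repository, and it assumes both files use simple `KEY=value` lines (optionally prefixed with `export`).

```python
from pathlib import Path


def env_keys(path: Path) -> set[str]:
    """Return the variable names defined in a KEY=value style env file (empty if absent)."""
    if not path.exists():
        return set()
    keys: set[str] = set()
    for line in path.read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and lines without an assignment
        key = line.split("=", 1)[0].strip()
        if key.startswith("export "):
            key = key[len("export "):].strip()  # tolerate shell-style `export KEY=...`
        keys.add(key)
    return keys


def missing_env_keys(root: Path = Path(".")) -> set[str]:
    """Names listed in .env.sample that .env does not define yet."""
    return env_keys(root / ".env.sample") - env_keys(root / ".env")
```

Calling `missing_env_keys()` from the project root returns an empty set once every variable from `.env.sample` is present in `.env`.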

versionhq is an open-source project.

- Create your feature branch (`git checkout -b feature/your-amazing-feature`).

- Create amazing features.

- Add a test function to the `tests` directory and run pytest.

  - Add the secret values defined in `.github/workflows/run_test.yml` to your GitHub repository secrets, located at Settings > Secrets and variables > Actions.

  - Run the following command:

    ```bash
    uv run pytest tests -vv --cache-clear
    ```

  Building a new pytest function:

  - Files added to the `tests` directory must end in `_test.py`.

  - Test functions within the files must begin with `test_`.

  - Pytest priorities are: 1. playground > 2. docs use cases > 3. other features

- Update `docs` accordingly.

- Pull the latest version of the source code from the main branch (`git pull origin main`) and address conflicts, if any.

- Commit your changes (`git add .` and `git commit -m 'Add your-amazing-feature'`).

- Push to the branch (`git push origin feature/your-amazing-feature`).

- Open a pull request.
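The file and function naming rules from the contribution steps above can be checked before running pytest. The following standard-library sketch is hypothetical (`convention_problems` is not part of the repository): it flags files under `tests` that do not end in `_test.py`, and `_test.py` files that define no module-level function starting with `test_`. Helper functions without the prefix are allowed, and test classes are not inspected.

```python
import ast
from pathlib import Path

SUPPORT_FILES = {"__init__.py", "conftest.py"}  # pytest support files, not test modules


def convention_problems(tests_dir: Path) -> list[str]:
    """Report files under tests_dir that break the naming rules for test files."""
    problems: list[str] = []
    for path in sorted(tests_dir.rglob("*.py")):
        if path.name in SUPPORT_FILES:
            continue
        if not path.name.endswith("_test.py"):
            problems.append(f"{path.name}: file name should end in _test.py")
            continue
        tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        # Only module-level functions are inspected; helpers without the prefix are fine.
        test_funcs = [
            node.name
            for node in tree.body
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
            and node.name.startswith("test_")
        ]
        if not test_funcs:
            problems.append(f"{path.name}: no function names start with test_")
    return problems
```

Running `convention_problems(Path("tests"))` from the project root returns an empty list when every file follows the rules.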

Optional

- Flag code with `#! REFINEME` for any improvements needed and `#! FIXME` for any errors.

- The playground is available at https://versi0n.io.
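To review the `#! REFINEME` and `#! FIXME` flags before opening a pull request, a small scan of the source tree is enough. This is a hypothetical helper, not a repository script, and it assumes the flags appear verbatim in `.py` files.

```python
from pathlib import Path

FLAGS = ("#! REFINEME", "#! FIXME")


def find_flags(root: Path) -> list[tuple[str, int, str]]:
    """Return (relative file path, line number, flag) for every flagged line in .py files."""
    hits: list[tuple[str, int, str]] = []
    for path in sorted(root.rglob("*.py")):
        text = path.read_text(encoding="utf-8")
        for lineno, line in enumerate(text.splitlines(), start=1):
            for flag in FLAGS:
                if flag in line:
                    hits.append((path.relative_to(root).as_posix(), lineno, flag))
    return hits
```

For example, `find_flags(Path("src"))` lists every flagged line under `src/`.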

- Add a package: `uv add <package>`

- Remove a package: `uv remove <package>`

- Run a command in the virtual environment: `uv run <command>`

- After updating dependencies, update `requirements.txt` accordingly or run `uv pip freeze > requirements.txt`.

- Install pre-commit hooks: `uv run pre-commit install`

- Run pre-commit checks manually: `uv run pre-commit run --all-files`

Pre-commit hooks help maintain code quality by running checks for formatting, linting, and other issues before each commit.

- To skip pre-commit hooks: `git commit --no-verify -m "your-commit-message"`

- To edit the documentation, go to the `docs` directory and edit the respective component.

- We use MkDocs to build the docs. Run them locally with `uv run python3 -m mkdocs serve --clean`, then open http://127.0.0.1:8000/.

- To add a new page, update `mkdocs.yml` in the root. Refer to the MkDocs documentation for more details.

Common issues and solutions:

- API key errors: Ensure all API keys in the `.env` file are correct and up to date. Add `load_dotenv()` at the top of the Python file so that the latest environment values are applied.

- Database connection issues: Check whether Chroma DB is properly initialized and accessible.

- Memory errors: If processing large contracts, you may need to increase the available memory for the Python process.

- Issues related to dependencies: Run `rm -rf uv.lock`, `uv cache clean`, and `uv venv`, then run `uv pip install -r requirements.txt -v`.

- Issues related to torch installation: Add the optional dependencies with `uv add "versionhq[torch]"`.

- Issues related to agents and other systems: Check the `.logs` directory at the root of the project for error messages and stack traces.

- Issues related to "Python quit unexpectedly": Check this Stack Overflow article.

- `reportMissingImports` error from Pyright after installing the package: This can occur when new libraries are installed while VS Code is running. Open the command palette (Ctrl + Shift + P) and run the "Python: Restart Language Server" command.

Q. Where can I see if the agent is working?

A. Visit the playground at https://versi0n.io.