We're collecting (an admittedly opinionated) list of data annotated datasets in high quality. Most of the data sets listed below are free, however, some are not. They are classified by use case.

We're only at the beginning, and you can help by contributing to this GitHub!

If you're interested in this area and would like to hear more, join our Slack community (coming soon)! We'd also appreciate if you could fill out this short form (coming soon) to help us better understand what your interests might be.

If you have ideas on how we can make this repository better, feel free to submit an issue with suggestions.

We want this resource to grow with contributions from readers and data enthusiasts. If you'd like to make contributions to this Github repository, please read our contributing guidelines.

Documents are an essential part of many businesses in many fields such as law, finance and technology, among others. Automatic processing of documents such as invoices, contracts and resumes is lucrative and opens up many new business avenues. The fields of natural language processing and computer vision have seen considerable progress with the development of deep learning, so these methods have begun to be incorporated into contemporary document understanding systems.

Here is a curated list of datasets for intelligent document processing.

-

GHEGA contains two groups of documents: 110 data-sheets of electronic components and 136 patents. Each group is further divided in classes: data-sheets classes share the component type and producer; patents classes share the patent source.

-

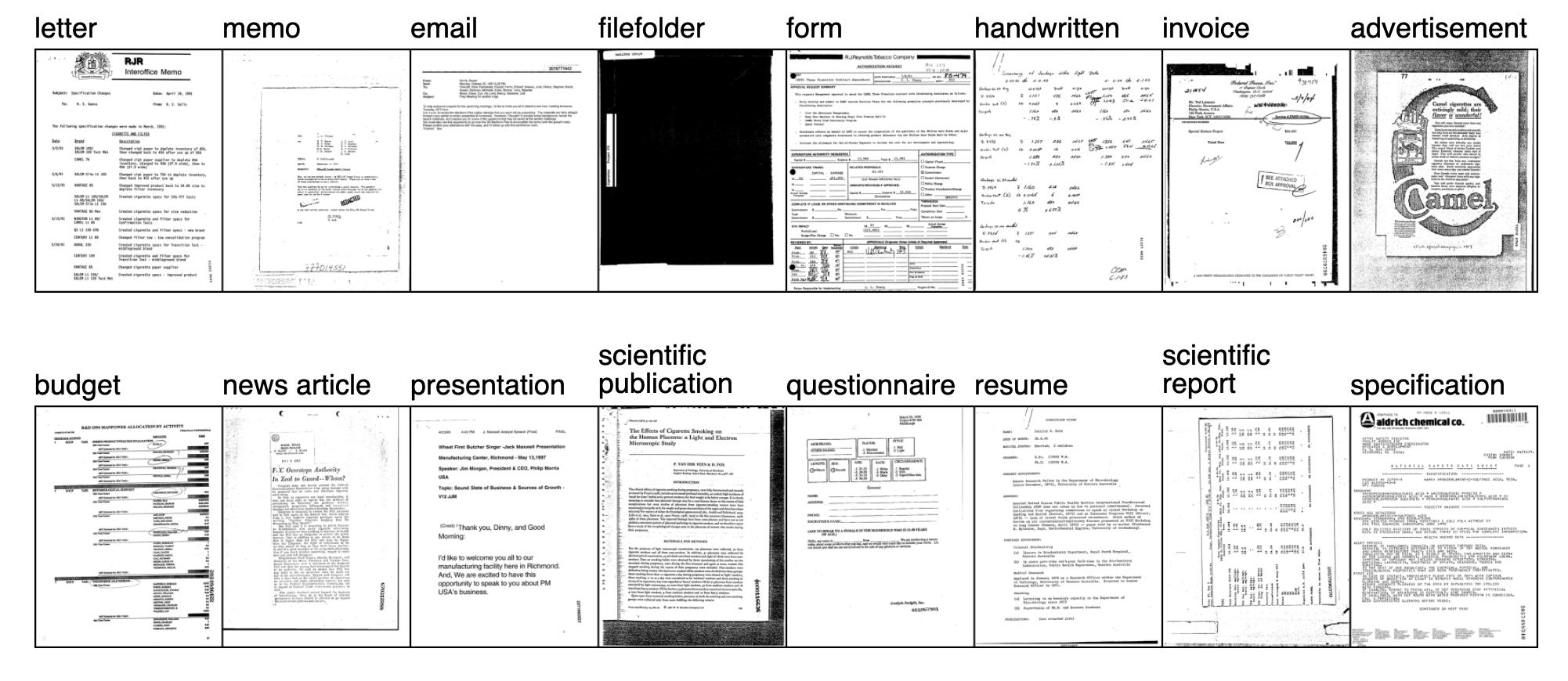

RVL-CDIP Dataset The RVL-CDIP dataset consists of 400,000 grayscale images in 16 classes (letter, memo, email, file folder, form, handwritten, invoice, advertisement, budget, news article, presentation, scientific publication, questionnaire, resume, scientific report, specification), with 25,000 images per class.

-

CORD The dataset consists of thousands of Indonesian receipts, which contains images and box/text annotations for OCR, and multi-level semantic labels for parsing. Labels are the bouding box position and the text of the key informations.

-

FUNSD A dataset for Text Detection, Optical Character Recognition, Spatial Layout Analysis and Form Understanding. Its consists of 199 fully annotated forms, 31485 words, 9707 semantic entities, 5304 relations.

-

NIST The NIST Structured Forms Database consists of 5,590 pages of binary, black-and-white images of synthesized documents: 900 simulated tax submissions, 5,590 images of completed structured form faces, 5,590 text files containing entry field answers.

-

SROIE Consists of a dataset with 1000 whole scanned receipt images and annotations for the competition on scanned receipts OCR and key information extraction (SROIE). Labels are the bouding box position and the text of the key informations.

-

GHEGA contains two groups of documents: 110 data-sheets of electronic components and 136 patents. Each group is further divided in classes: data-sheets classes share the component type and producer; patents classes share the patent source.

-

XFUND is a multilingual form understanding benchmark dataset that includes human-labeled forms with key-value pairs in 7 languages (Chinese, Japanese, Spanish, French, Italian, German, Portuguese).

-

FUNSD for Text Detection, Optical Character Recognition, Spatial Layout Analysis and Form Understanding. Its consists of 199 fully annotated forms, 31485 words, 9707 semantic entities, 5304 relations.

-

RDCL2019 contains scanned pages from contemporary magazines and technical articles.

-

SROIE consists of a dataset with 1000 whole scanned receipt images and annotations for the competition on scanned receipts OCR and key information extraction (SROIE). Labels are the bouding box position and the text of the key informations.

-

Synth90k consists of 9 million images covering 90k English words.

-

Total Text Dataset consists of 1555 images with more than 3 different text orientations: Horizontal, Multi-Oriented, and Curved, one of a kind.

-

DocBank includes 500K document pages, with 12 types of semantic units: abstract, author, caption, date, equation, figure, footer, list, paragraph, reference, section, table, title.

-

Layout Analysis Dataset contains realistic documents with a wide variety of layouts, reflecting the various challenges in layout analysis. Particular emphasis is placed on magazines and technical/scientific publications which are likely to be the focus of digitisation efforts.

-

PubLayNet is a large dataset of document images, of which the layout is annotated with both bounding boxes and polygonal segmentations.

-

TableBank is a new image-based table detection and recognition dataset built with novel weak supervision from Word and Latex documents on the internet, contains 417K high-quality labeled tables.

-

HJDataset contains over 250,000 layout element annotations of seven types. In addition to bounding boxes and masks of the content regions, it also includes the hierarchical structures and reading orders for layout elements for advanced analysis.

-

DocVQA contains 50 K questions and 12K Images in the dataset. Images are collected from UCSF Industry Documents Library. Questions and answers are manually annotated.

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc.

State-of-the-art NER systems for English produce near-human performance. For example, the best system entering MUC-7 scored 93.39% of F-measure while human annotators scored 97.60% and 96.95%.

-

re3d focuses on entity and relationship extraction relevant to somebody operating in the role of a defence and security intelligence analyst.

-

Airbus Aircraft Detection can be used to detect the number, size and type of aircrafts present on an airport. In turn, this can provide information about the activity of any airport.

-

Malware consists of texts about malware. It was developed by researchers at the Singapore University of Technology and Design and DSO National Laboratories.

-

SEC-filings is generated using CoNll2003 data and financial documents obtained from U.S. Security and Exchange Commission (SEC) filings.

-

AnEM consists of abstracts and full-text biomedical papers.

-

CADEC is a corpus of adverse drug event annotations.

-

i2b2-2006 is the Deidentification and Smoking Challenge dataset.

-

i2b2-2014 is the 2014 De-identification and Heart Disease Risk Factors Challenge.

-

CONLL 2003 is an annotated dataset for Named Entity Recognition. The tokens are labeled under one of the 9 possible tags.

-

MUC-6 contains the 318 annotated Wall Street Journal articles, the scoring software and the corresponding documentation used in the MUC6 evaluation.

- Kolector surface is a dataset to detect steel defect.

-

MITMovie is a semantically tagged training and test corpus in BIO format.

-

MITRestaurant is a semantically tagged training and test corpus in BIO format.

-

WNUT17 is the dataset for the WNUT 17 Emerging Entities task. It contains text from Twitter, Stack Overflow responses, YouTube comments, and Reddit comments.

-

Assembly is a dataset for Named Entity Recognition (NER) from assembly operations text manuals.

-

BTC is the Broad Twitter corpus, a dataset of tweets collected over stratified times, places and social uses.

-

Ritter is the same as the training portion of WNUT16 (though with sentences in a different order).

-

BBN contains approximately 24,000 pronoun coreferences as well as entity and numeric annotation for approximately 2,300 documents.

-

Groningen Meaning Bank (GMB) comprises thousands of texts in raw and tokenised format, tags for part of speech, named entities and lexical categories, and discourse representation structures compatible with first-order logic.

-

OntoNotes 5 is a large corpus comprising various genres of text (news, conversational telephone speech, weblogs, usenet newsgroups, broadcast, talk shows) in three languages (English, Chinese, and Arabic) with structural information (syntax and predicate argument structure) and shallow semantics (word sense linked to an ontology and coreference).

-

GUM-3.1.0 is the Georgetown University Multilayer Corpus.

-

wikigold is a manually annotated collection of Wikipedia text.

-

WikiNEuRal is a high-quality automatically-generated dataset for Multilingual Named Entity Recognition.

-

QUAERO French Medical Corpus has been initially developed as a resource for named entity recognition and normalization.

-

Europeana Newspapers (Dutch, French, German) is a Named Entity Recognition corpora for Dutch, French, German from Europeana Newspapers.

-

CAp 2017 - (Twitter data) concerns the problem of Named Entity Recognition (NER) for tweets written in French.

-

DBpedia abstract corpus contains a conversion of Wikipedia abstracts in seven languages (dutch, english, french, german, italian, japanese and spanish) into the NLP Interchange Format (NIF).

-

WikiNER is a multilingual named entity recognition dataset from Wikipedia.

-

WikiNEuRal is a high-quality automatically-generated dataset for Multilingual Named Entity Recognition.

Coming soon! 😘

.png)

.png)

.png)