- Operating System Concepts

- Operating System Concepts: Essentials

- Modern Operating Systems

- Operating Systems: Three Easy Pieces (free from the authors)

- Requests to the kernel to perform some privileged operation

- "A system call is the programmatic way in which a computer program requests a service from the kernel of the operating system it is executed on. This may include hardware-related services (for example, accessing a hard disk drive), creation and execution of new processes, and communication with integral kernel services such as process scheduling. System calls provide an essential interface between a process and the operating system." [Wikipedia - System Call]

- On x86, it is usually performed using the

intinstruction, which generates a software trap, switching the processor to "ring-0" (allows priveleged instructions).- Linux uses

int 0x80

- Linux uses

- Most platforms wrap these system calls in standard libraries, such as libc on *nix systems.

- "A process is an instance of a computer program that is being executed. It contains the program code and its current activity. Depending on the operating system (OS), a process may be made up of multiple threads of execution that execute instructions concurrently" [Wikipedia - Process].

- "A thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system." [Wikipedia - Thread]

- Often, threads of the same process share the same address space and system resources.

- "Concurrent computing is a form of computing in which several computations are executing during overlapping time periods—concurrently—instead of sequentially (one completing before the next starts)." [Wikipedia - Concurrent Computing]

- The most common sources of concurrency are multiprocessing and multithreading.

- Concurrency can significantly improve performance because multiple computations are happening at the same time.

- Unfortunately, concurrency can be a source of nondeterministic bugs that are hard to find or debug.

- Concurrency control primitives, such as locks, are used to ensure that use of common resources by multiple threads or processes is done correctly. Several concurrency control primitives are described below.

- University of Wisconson - Locks

- "A lock or mutex (from mutual exclusion) is a synchronization mechanism for enforcing limits on access to a resource in an environment where there are many threads of execution. A lock is designed to enforce a mutual exclusion concurrency control policy." [Wikiepedia - Lock]

- "A semaphore is a variable or abstract data type that is used for controlling access, by multiple processes, to a common resource in a concurrent system such as a multiprogramming operating system." [Wikipedia - Semaphore]

- "A semaphore is hardware or a software tag variable whose value indicates the status of a common resource. Its purpose is to lock the resource being used" [CareerRide - Semaphore]

- A simple implementation is to have an atomic counter in memory (e.g. using atomic compare and swap assembly instructions provided by most modern architectures).

- The counter begins at some initially value X, signifying that X threads can use the resource at a time.

- When a thread requests the resource, the counter is decremented.

- If the counter is greater than 0, the thread can proceed. Otherwise, it blocks until the counter becomes greater than 0.

- When a thread releases the resource, the counter is incremented.

- A semaphore in which X=1 is a mutex since only one thread can use the resource at a time.

- Wikipedia - Monitor

- "In concurrent programming, a monitor is a synchronization construct that allows threads to have both mutual exclusion and the ability to wait (block) for a certain condition to become true. Monitors also have a mechanism for signalling other threads that their condition has been met. "

- In concurrent programs, there may be executions (resulting from bugs) in which threads cease to make progress. Deadlock and livelock are two such cases.

- Deadlock usually occur in the context of locks.

- A deadlock occurs when two processes become mutually dependent. That is, process A cannot make progress until B finishes, and B cannot make progress until A finishes.

- For example, process A locks access to the printer and tries to get access to file

foo.txt, which it wants to print. Meanwhile, process B locks access to the file and then tries to get access to the printer. Imagine the following sequence of events:- A locks printer

- B locks file

- A attempts to lock file, but blocks because B has already locked it

- B attempts to lock printer, but blocks because A has already locked it

- How to avoid deadlock

- Careful design: if all threads acquire locks in the same order, they cannot deadlock. In the example above, if A and B try to lock the file first, neither can deadlock, though one may block temporarily.

- Detection schemes: Some systems, such as database systems, try to detect deadlocks when they happen. Then, they kill one of the processes deadlocking and release its resources so the others can proceed.

- Dependency graphs can be constructed by representing each thread as a node. If A needs a resource that B has currently, a directed edge is drawn from A to B. If this graph has a cycle the processes in the cycle are deadlocked.

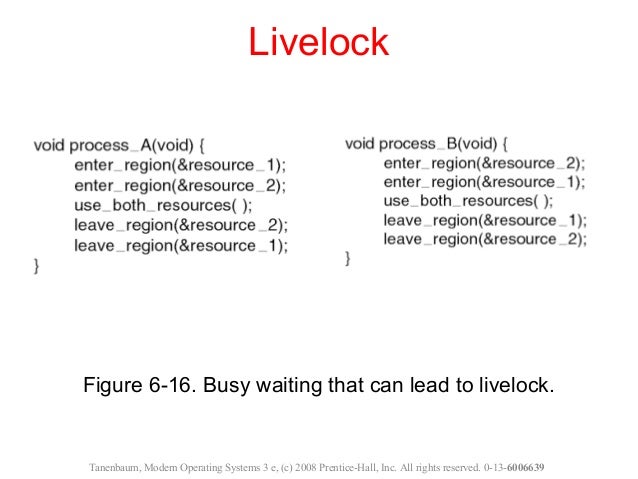

- A livelock occurs when two processes continually change state but do not make progress.

- For example,

- A locks printer

- B locks file

- A attempts to lock file, but cannot because B has already locked it

- B attempts to lock printer, but blocks because A has already locked it

- A releases the printer and waits for the file to be unlocked

- B releases the file and waits for the printer to be unlocked

- A notices that the file is unlocked and tries again

- B notices that the printer is unlocked and tries again

- Repeat forever

- How to avoid livelock

- Variable timeouts