+

+```shell

+$ dstack apply -f .dstack.yml

+

+Active run my-service already exists. Detected changes that can be updated in-place:

+- Repo state (branch, commit, or other)

+- File archives

+- Configuration properties:

+ - env

+ - files

+

+Update the run? [y/n]: y

+⠋ Launching my-service...

+

+ NAME                      BACKEND          PRICE    STATUS       SUBMITTED

+ my-service deployment=1                             running      11 mins ago

+   replica=0 deployment=0  aws (us-west-2)  $0.0026  terminating  11 mins ago

+   replica=1 deployment=1  aws (us-west-2)  $0.0026  running      1 min ago

+```

+

+

+

+

+

+#### Secrets

+

+Secrets let you centrally manage sensitive data like API keys and credentials. They’re scoped to a project, managed by project admins, and can be [securely referenced](../../docs/concepts/secrets.md) in run configurations.

+

+

+

+```yaml hl_lines="7"

+type: task

+name: train

+

+image: nvcr.io/nvidia/pytorch:25.05-py3

+registry_auth:

+  username: $oauthtoken

+  password: ${{ secrets.ngc_api_key }}

+

+commands:

+  - git clone https://github.com/pytorch/examples.git pytorch-examples

+  - cd pytorch-examples/distributed/ddp-tutorial-series

+  - pip install -r requirements.txt

+  - |

+    torchrun \

+      --nproc-per-node=$DSTACK_GPUS_PER_NODE \

+      --nnodes=$DSTACK_NODES_NUM \

+      multinode.py 50 10

+

+resources:

+  gpu: H100:1..2

+  shm_size: 24GB

+```

+

+

+

+#### Files

+

+By default, `dstack` mounts the repo directory (where you ran `dstack init`) to all runs.

+

+If the directory is large or you need files outside of it, use the new [files](../../docs/concepts/dev-environments/#files) property to map specific local paths into the container.

+

+

+

+```yaml

+type: task

+name: trl-sft

+

+files:

+  - .:examples # Maps the directory containing `.dstack.yml` to `/workflow/examples`

+  - ~/.ssh/id_rsa:/root/.ssh/id_rsa # Maps `~/.ssh/id_rsa` to `/root/.ssh/id_rsa`

+

+python: 3.12

+

+env:

+  - HF_TOKEN

+  - HF_HUB_ENABLE_HF_TRANSFER=1

+  - MODEL=Qwen/Qwen2.5-0.5B

+  - DATASET=stanfordnlp/imdb

+

+commands:

+  - uv pip install trl hf_transfer

+  - |

+    trl sft \

+      --model_name_or_path $MODEL --dataset_name $DATASET \

+      --num_processes $DSTACK_GPUS_PER_NODE

+

+resources:

+  gpu: H100:1

+```
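The two `files` entries above use a `local:container` form, and the comment on `.:examples` indicates that a relative container path ends up under `/workflow`. The following is a minimal sketch of that resolution rule as read from the example's comments, not from `dstack`'s source. `parse_file_mapping` and `WORKFLOW_DIR` are hypothetical names, and the sketch handles only the explicit `local:container` form (no `~` expansion, no Windows drive letters).

```python
import posixpath

# Assumption: relative container paths are resolved against /workflow,
# as the comment on `.:examples` in the example above indicates.
WORKFLOW_DIR = "/workflow"


def parse_file_mapping(entry: str) -> tuple[str, str]:
    """Split a `local:container` entry into (local_path, container_path).

    Hypothetical helper for illustration only: the local path is returned
    unchanged (no `~` expansion), and only the first ':' is treated as the
    separator, so Windows drive letters are not supported.
    """
    local, sep, container = entry.partition(":")
    if not sep or not local or not container:
        raise ValueError(f"expected 'local:container', got {entry!r}")
    if not posixpath.isabs(container):
        container = posixpath.join(WORKFLOW_DIR, container)
    # normpath tidies paths such as "out/./logs" into "out/logs"
    return local, posixpath.normpath(container)


if __name__ == "__main__":
    for entry in [".:examples", "~/.ssh/id_rsa:/root/.ssh/id_rsa"]:
        print(entry, "->", parse_file_mapping(entry))
```

Absolute container paths such as `/root/.ssh/id_rsa` pass through unchanged, while relative ones are anchored under the working directory.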

+

+

+

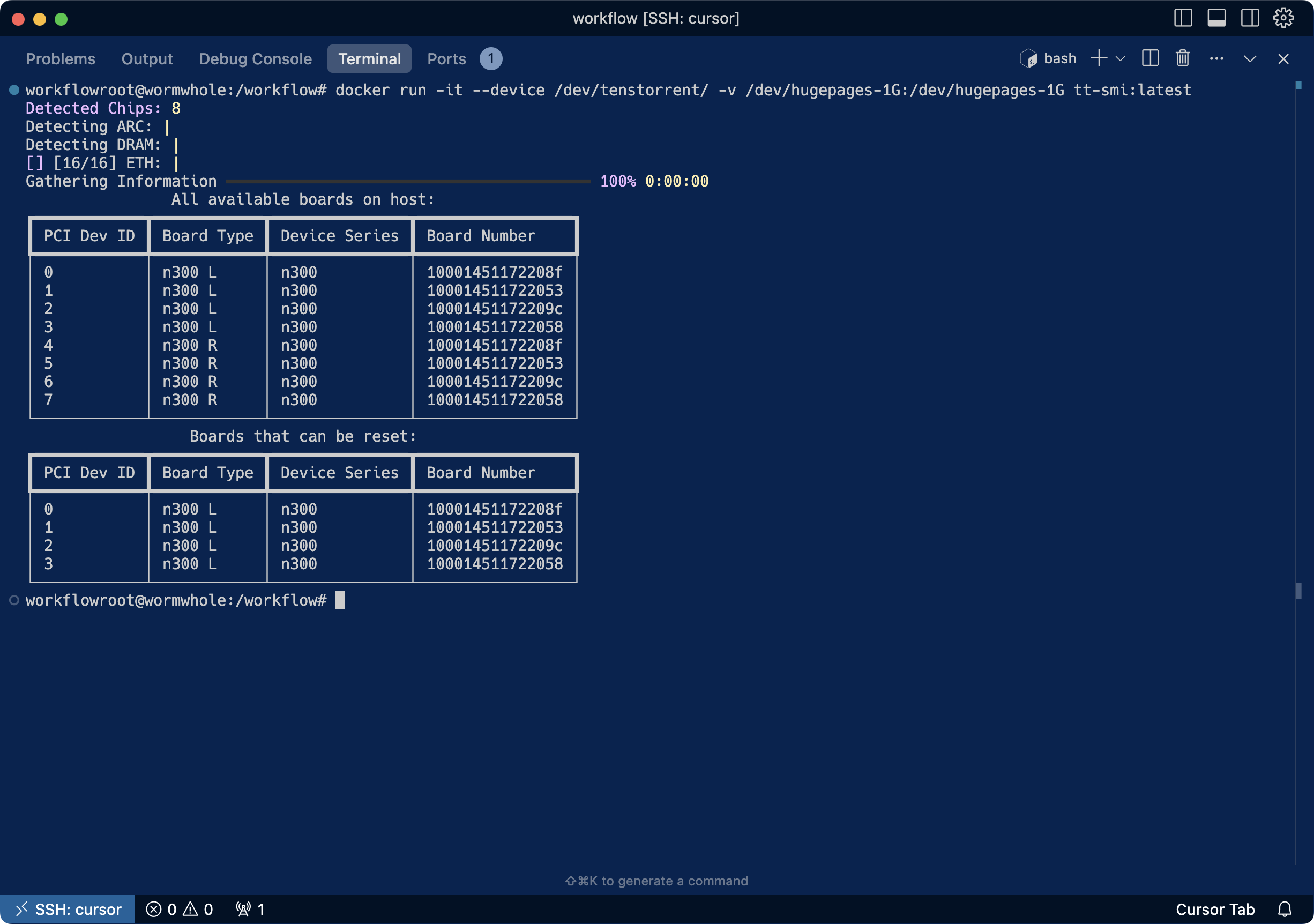

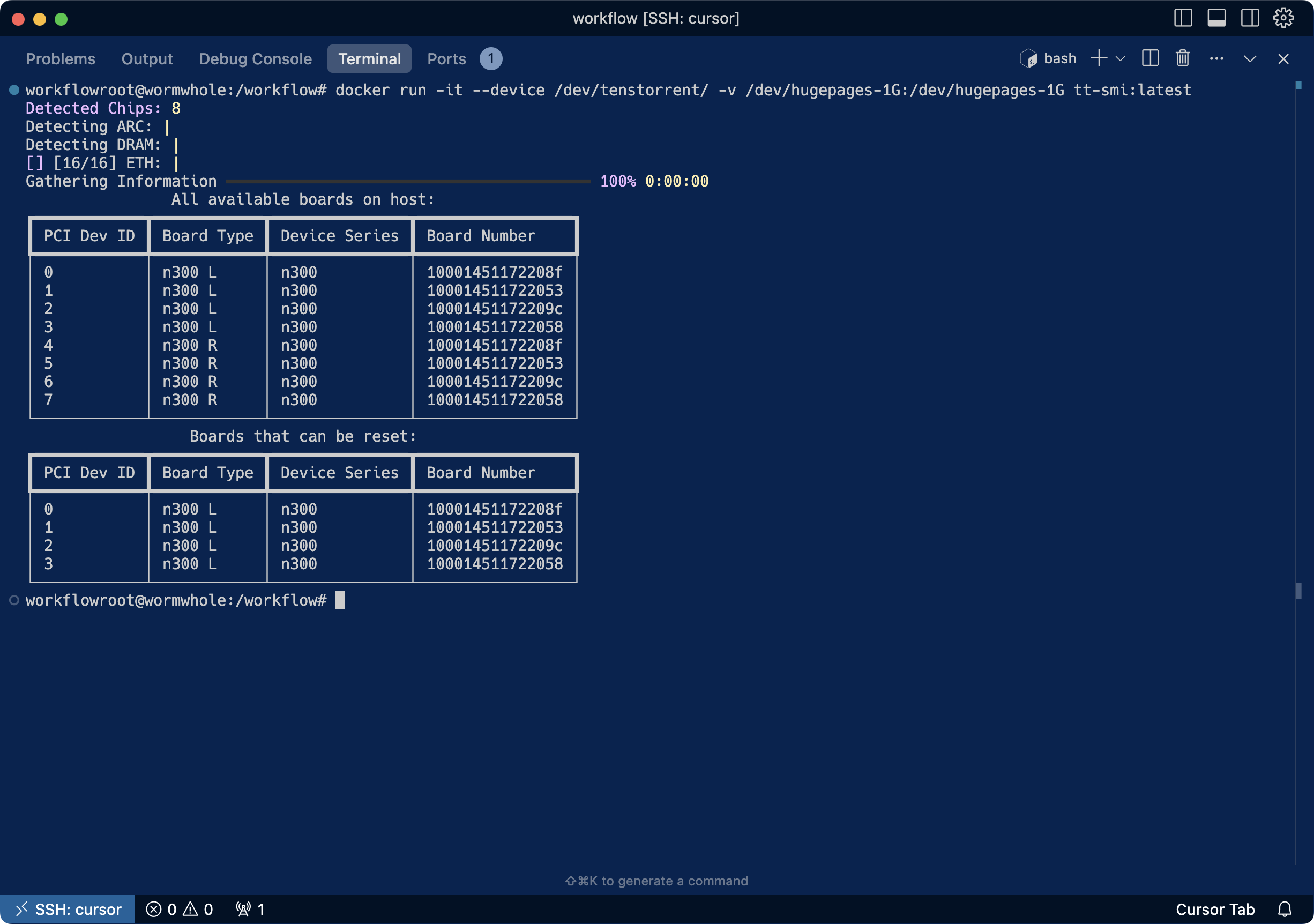

+#### Tenstorrent

+

+`dstack` remains committed to supporting multiple accelerator vendors, including NVIDIA, AMD, Google TPUs, and, more recently, [Tenstorrent :material-arrow-top-right-thin:{ .external }](https://tenstorrent.com/){:target="_blank"}. The latest release improves Tenstorrent support: it now handles hosts with multiple N300 cards and supports Docker-in-Docker.

+

+ +

+Huge thanks to the Tenstorrent community for testing these improvements!

+

+#### Docker in Docker

+

+Using Docker inside `dstack` run configurations is now even simpler. Just set `docker` to `true` to [enable the Docker CLI](../../docs/concepts/tasks.md#docker-in-docker) in your runs, which lets you build images, run containers, use Docker Compose, and more.

+

+

+

+

+

+

+```yaml

+type: task

+name: docker-nvidia-smi

+

+docker: true

+

+commands:

+  - |

+    docker run --gpus all \

+      nvidia/cuda:12.3.0-base-ubuntu22.04 \

+      nvidia-smi

+

+resources:

+  gpu: H100:1

+```

+

+

+

+#### AWS EFA

+

+EFA (Elastic Fabric Adapter) is a network interface for EC2 instances that enables low-latency, high-bandwidth communication between nodes, which is crucial for scaling distributed deep learning. With `dstack`, EFA is enabled automatically when fleets use supported instance types. Check out our [example](../../examples/clusters/efa/index.md).

+

+#### Default Docker images

+

+If no `image` is specified, `dstack` uses a base Docker image that now comes pre-configured with `uv`, `python`, `pip`, essential CUDA drivers, InfiniBand, and NCCL tests (located at `/opt/nccl-tests/build`).

+

+

+

+```yaml

+type: task

+name: nccl-tests

+

+nodes: 2

+

+startup_order: workers-first

+stop_criteria: master-done

+

+env:

+  - NCCL_DEBUG=INFO

+

+commands:

+  - |

+    if [ $DSTACK_NODE_RANK -eq 0 ]; then

+      mpirun \

+        --allow-run-as-root \

+        --hostfile $DSTACK_MPI_HOSTFILE \

+        -n $DSTACK_GPUS_NUM \

+        -N $DSTACK_GPUS_PER_NODE \

+        --bind-to none \

+        /opt/nccl-tests/build/all_reduce_perf -b 8 -e 8G -f 2 -g 1

+    else

+      sleep infinity

+    fi

+

+resources:

+  gpu: nvidia:1..8

+  shm_size: 16GB

+```

+

+

+

+These images are optimized for common use cases and kept lightweight—ideal for everyday development, training, and inference.

+

+#### Server performance

+

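+Server-side performance has been improved. Thanks to optimized handling and background processing, each server replica can now handle more runs. If you need a replica to process resources faster, increase `DSTACK_SERVER_BACKGROUND_PROCESSING_FACTOR` (defaults to `1`).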

+

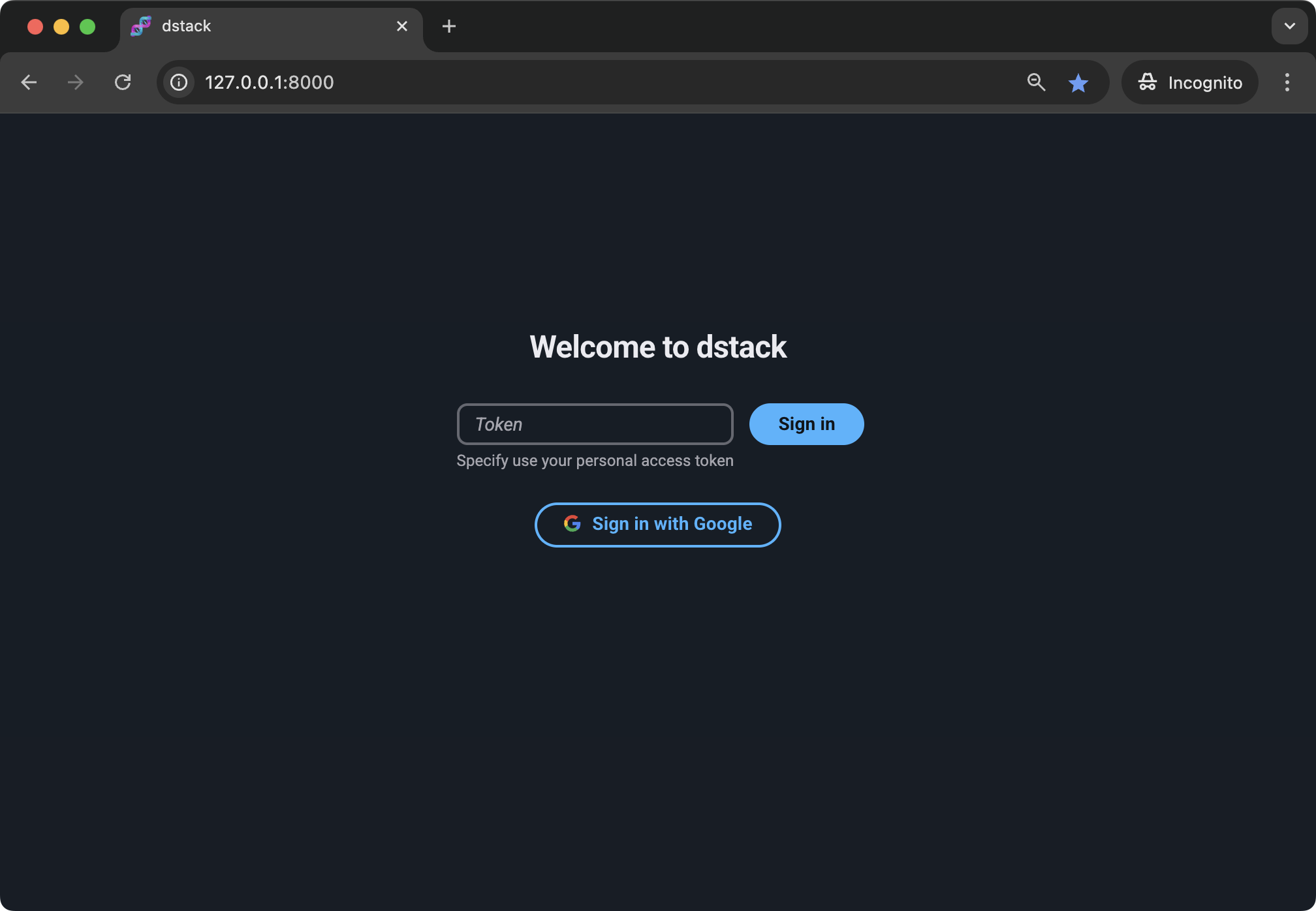

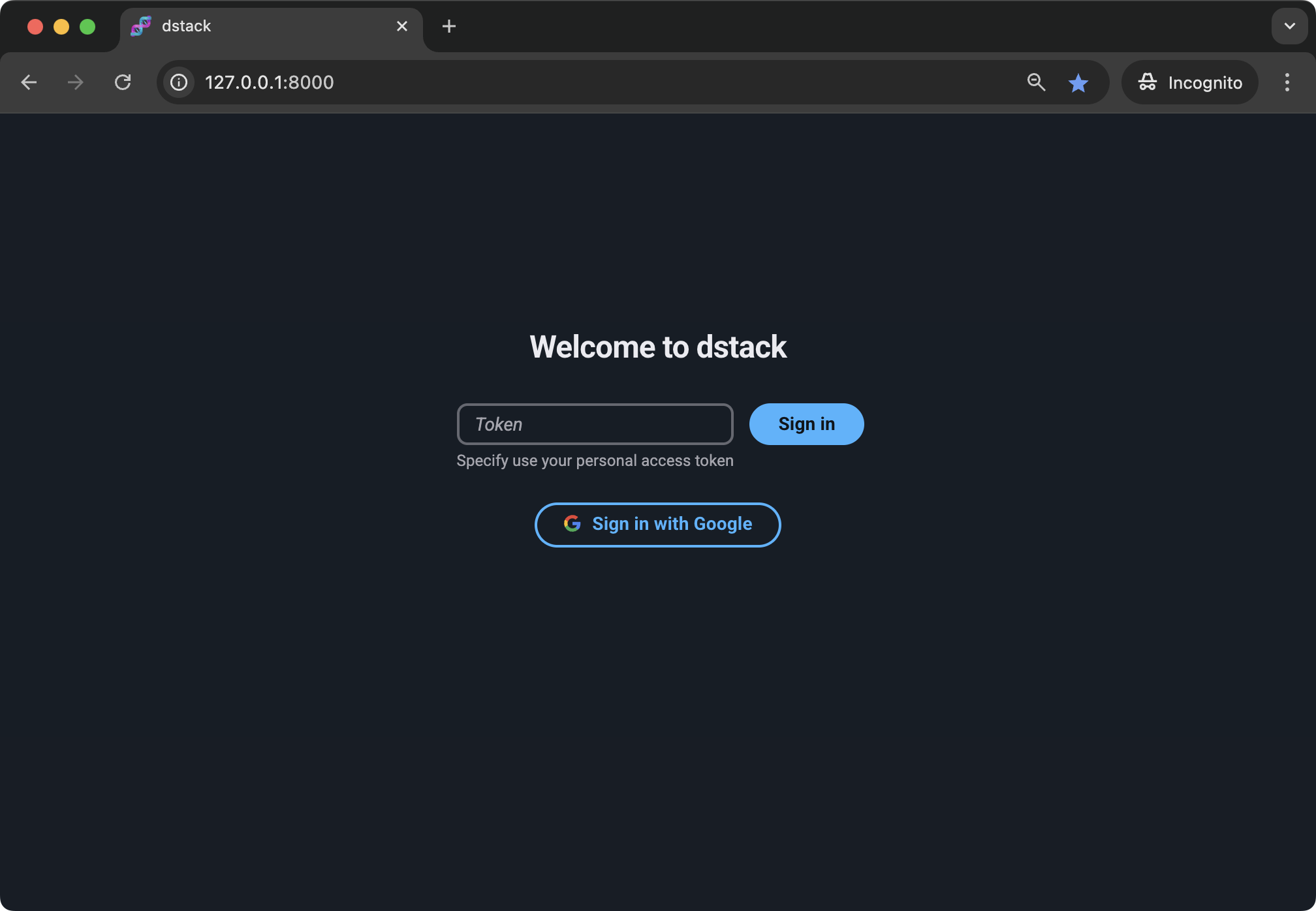

+#### Google SSO

+

+Alongside the open-source version, `dstack` also offers [dstack Enterprise :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack-enterprise){:target="_blank"}, which adds dedicated support and extra integrations such as Single Sign-On (SSO). The latest release adds support for authenticating users with your company's Google accounts.

+

+ +

+If you’d like to learn more about `dstack` Enterprise, [let us know](https://calendly.com/dstackai/discovery-call).

+

+That’s all for now.

+

+!!! info "What's next?"

+ Give dstack a try, and share your feedback—whether it’s [GitHub :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack){:target="_blank"} issues, PRs, or questions on [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd){:target="_blank"}. We’re eager to hear from you!

diff --git a/docs/blog/posts/cursor.md b/docs/blog/posts/cursor.md

index bdc1e4a61..a5f960469 100644

--- a/docs/blog/posts/cursor.md

+++ b/docs/blog/posts/cursor.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: cursor

image: https://dstack.ai/static-assets/static-assets/images/dstack-cursor-v2.png

categories:

- - Releases

+ - Changelog

---

# Accessing dev environments with Cursor

diff --git a/docs/blog/posts/dstack-metrics.md b/docs/blog/posts/dstack-metrics.md

index f06ff3151..f4647d782 100644

--- a/docs/blog/posts/dstack-metrics.md

+++ b/docs/blog/posts/dstack-metrics.md

@@ -5,7 +5,7 @@ description: "dstack introduces a new CLI command (and API) for monitoring conta

slug: dstack-metrics

image: https://dstack.ai/static-assets/static-assets/images/dstack-stats-v2.png

categories:

- - Releases

+ - Changelog

---

# Monitoring essential GPU metrics via CLI

diff --git a/docs/blog/posts/dstack-sky-own-cloud-accounts.md b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

index ff0b8d182..16b68867c 100644

--- a/docs/blog/posts/dstack-sky-own-cloud-accounts.md

+++ b/docs/blog/posts/dstack-sky-own-cloud-accounts.md

@@ -4,7 +4,7 @@ date: 2024-06-11

description: "With today's release, dstack Sky supports both options: accessing the GPU marketplace and using your own cloud accounts."

slug: dstack-sky-own-cloud-accounts

categories:

- - Releases

+ - Changelog

---

# dstack Sky now supports your own cloud accounts

diff --git a/docs/blog/posts/dstack-sky.md b/docs/blog/posts/dstack-sky.md

index 7cfe80097..78d35641c 100644

--- a/docs/blog/posts/dstack-sky.md

+++ b/docs/blog/posts/dstack-sky.md

@@ -3,7 +3,7 @@ date: 2024-03-11

description: A managed service that enables you to get GPUs at competitive rates from a wide pool of providers.

slug: dstack-sky

categories:

- - Releases

+ - Changelog

---

# Introducing dstack Sky

diff --git a/docs/blog/posts/gh200-on-lambda.md b/docs/blog/posts/gh200-on-lambda.md

index 970c87b12..1741e6f2e 100644

--- a/docs/blog/posts/gh200-on-lambda.md

+++ b/docs/blog/posts/gh200-on-lambda.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: gh200-on-lambda

image: https://dstack.ai/static-assets/static-assets/images/dstack-arm--gh200-lambda-min.png

categories:

- - Releases

+ - Changelog

---

# Supporting ARM and NVIDIA GH200 on Lambda

diff --git a/docs/blog/posts/gpu-blocks-and-proxy-jump.md b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

index dc8bea1df..cbf9ab7dc 100644

--- a/docs/blog/posts/gpu-blocks-and-proxy-jump.md

+++ b/docs/blog/posts/gpu-blocks-and-proxy-jump.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: gpu-blocks-and-proxy-jump

image: https://dstack.ai/static-assets/static-assets/images/data-centers-and-private-clouds.png

categories:

- - Releases

+ - Changelog

---

# Introducing GPU blocks and proxy jump for SSH fleets

diff --git a/docs/blog/posts/inactivity-duration.md b/docs/blog/posts/inactivity-duration.md

index 28ea3f5d7..d04a8eba4 100644

--- a/docs/blog/posts/inactivity-duration.md

+++ b/docs/blog/posts/inactivity-duration.md

@@ -5,7 +5,7 @@ description: "dstack introduces a new feature that automatically detects and shu

slug: inactivity-duration

image: https://dstack.ai/static-assets/static-assets/images/inactive-dev-environments-auto-shutdown.png

categories:

- - Releases

+ - Changelog

---

# Auto-shutdown for inactive dev environments—no idle GPUs

diff --git a/docs/blog/posts/instance-volumes.md b/docs/blog/posts/instance-volumes.md

index c4f5e3b1b..95b48cee1 100644

--- a/docs/blog/posts/instance-volumes.md

+++ b/docs/blog/posts/instance-volumes.md

@@ -5,7 +5,7 @@ description: "To simplify caching across runs and the use of NFS, we introduce a

image: https://dstack.ai/static-assets/static-assets/images/dstack-instance-volumes.png

slug: instance-volumes

categories:

- - Releases

+ - Changelog

---

# Introducing instance volumes to persist data on instances

diff --git a/docs/blog/posts/intel-gaudi.md b/docs/blog/posts/intel-gaudi.md

index 7abc69f41..6f95f49d0 100644

--- a/docs/blog/posts/intel-gaudi.md

+++ b/docs/blog/posts/intel-gaudi.md

@@ -5,7 +5,7 @@ description: "dstack now supports Intel Gaudi accelerators with SSH fleets, simp

slug: intel-gaudi

image: https://dstack.ai/static-assets/static-assets/images/dstack-intel-gaudi-and-intel-tiber-cloud.png-v2

categories:

- - Releases

+ - Changelog

---

# Supporting Intel Gaudi AI accelerators with SSH fleets

diff --git a/docs/blog/posts/metrics-ui.md b/docs/blog/posts/metrics-ui.md

index 74719af2d..b15bbffc5 100644

--- a/docs/blog/posts/metrics-ui.md

+++ b/docs/blog/posts/metrics-ui.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: metrics-ui

image: https://dstack.ai/static-assets/static-assets/images/dstack-metrics-ui-v3-min.png

categories:

- - Releases

+ - Changelog

---

# Built-in UI for monitoring essential GPU metrics

diff --git a/docs/blog/posts/mpi.md b/docs/blog/posts/mpi.md

index ef5d68582..5473d64a2 100644

--- a/docs/blog/posts/mpi.md

+++ b/docs/blog/posts/mpi.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: mpi

image: https://dstack.ai/static-assets/static-assets/images/dstack-mpi-v2.png

categories:

- - Releases

+ - Changelog

---

# Supporting MPI and NCCL/RCCL tests

diff --git a/docs/blog/posts/nebius.md b/docs/blog/posts/nebius.md

index c6f4374db..ef484b0f3 100644

--- a/docs/blog/posts/nebius.md

+++ b/docs/blog/posts/nebius.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: nebius

image: https://dstack.ai/static-assets/static-assets/images/dstack-nebius-v2.png

categories:

- - Releases

+ - Changelog

---

# Supporting GPU provisioning and orchestration on Nebius

diff --git a/docs/blog/posts/nvidia-and-amd-on-vultr.md b/docs/blog/posts/nvidia-and-amd-on-vultr.md

index eda4519b4..2fb30ebbc 100644

--- a/docs/blog/posts/nvidia-and-amd-on-vultr.md

+++ b/docs/blog/posts/nvidia-and-amd-on-vultr.md

@@ -5,7 +5,7 @@ description: "Introducing integration with Vultr: The new integration allows Vul

slug: nvidia-and-amd-on-vultr

image: https://dstack.ai/static-assets/static-assets/images/dstack-vultr.png

categories:

- - Releases

+ - Changelog

---

# Supporting NVIDIA and AMD accelerators on Vultr

diff --git a/docs/blog/posts/prometheus.md b/docs/blog/posts/prometheus.md

index 58299dfb4..5482d0c13 100644

--- a/docs/blog/posts/prometheus.md

+++ b/docs/blog/posts/prometheus.md

@@ -5,7 +5,7 @@ description: "TBA"

slug: prometheus

image: https://dstack.ai/static-assets/static-assets/images/dstack-prometheus-v3.png

categories:

- - Releases

+ - Changelog

---

# Exporting GPU, cost, and other metrics to Prometheus

diff --git a/docs/blog/posts/tpu-on-gcp.md b/docs/blog/posts/tpu-on-gcp.md

index c38bea20a..8fff83cb4 100644

--- a/docs/blog/posts/tpu-on-gcp.md

+++ b/docs/blog/posts/tpu-on-gcp.md

@@ -4,7 +4,7 @@ date: 2024-09-10

description: "Learn how to use TPUs with dstack for fine-tuning and deploying LLMs, leveraging open-source tools like Hugging Face’s Optimum TPU and vLLM."

slug: tpu-on-gcp

categories:

- - Releases

+ - Changelog

---

# Using TPUs for fine-tuning and deploying LLMs

diff --git a/docs/blog/posts/volumes-on-runpod.md b/docs/blog/posts/volumes-on-runpod.md

index a6d436790..de0c8d6d0 100644

--- a/docs/blog/posts/volumes-on-runpod.md

+++ b/docs/blog/posts/volumes-on-runpod.md

@@ -4,7 +4,7 @@ date: 2024-08-13

description: "Learn how to use volumes with dstack to optimize model inference cold start times on RunPod."

slug: volumes-on-runpod

categories:

- - Releases

+ - Changelog

---

# Using volumes to optimize cold starts on RunPod

diff --git a/docs/docs/concepts/services.md b/docs/docs/concepts/services.md

index 5dd92f19c..a93cacf0d 100644

--- a/docs/docs/concepts/services.md

+++ b/docs/docs/concepts/services.md

@@ -679,6 +679,61 @@ utilization_policy:

[`max_price`](../reference/dstack.yml/service.md#max_price), and

among [others](../reference/dstack.yml/service.md).

+## Rolling deployment

+

+To deploy a new version of a service that is already `running`, use `dstack apply`. `dstack` will automatically detect changes and suggest a rolling deployment update.

+

+

+

+```shell

+$ dstack apply -f my-service.dstack.yml

+

+Active run my-service already exists. Detected changes that can be updated in-place:

+- Repo state (branch, commit, or other)

+- File archives

+- Configuration properties:

+ - env

+ - files

+

+Update the run? [y/n]:

+```

+

+

+

+If approved, `dstack` gradually updates the service replicas. To update a replica, `dstack` starts a new replica, waits for it to become `running`, then terminates the old replica. This process is repeated for each replica, one at a time.

+

+You can track the progress of a rolling deployment in both `dstack apply` and `dstack ps`.

+Older replicas have lower `deployment` numbers; newer ones have higher.
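To make the replacement order concrete, here is a simplified model of the process described above. It is not `dstack`'s implementation: `Replica`, `rolling_update`, and the `should_stop` hook are hypothetical names, provisioning is treated as instantaneous, and new replicas simply take the next free replica number, which is consistent with the output below where the new replica appears as `replica=1`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Replica:
    replica_num: int
    deployment_num: int
    status: str = "running"


def rolling_update(
    replicas: list[Replica],
    new_deployment: int,
    should_stop: Callable[[], bool] = lambda: False,
) -> list[str]:
    """Replace outdated replicas one at a time (a simplified model only)."""
    events: list[str] = []
    next_num = max((r.replica_num for r in replicas), default=-1) + 1
    # Snapshot the outdated replicas before new ones are appended.
    outdated = [r for r in replicas if r.deployment_num < new_deployment]
    for old in outdated:
        if should_stop():  # e.g. a newer deployment has been submitted
            events.append("stopped")
            break
        new = Replica(next_num, new_deployment, status="provisioning")
        next_num += 1
        replicas.append(new)
        events.append(f"start replica={new.replica_num} deployment={new_deployment}")
        # A real orchestrator waits asynchronously; here we mark it running.
        new.status = "running"
        events.append(f"replica={new.replica_num} running")
        # Only once the new replica is running is the old one terminated.
        old.status = "terminating"
        events.append(f"terminate replica={old.replica_num} deployment={old.deployment_num}")
    return events


if __name__ == "__main__":
    replicas = [Replica(0, 0), Replica(1, 0)]
    for event in rolling_update(replicas, new_deployment=1):
        print(event)
```

Because each old replica is terminated only after its replacement is running, the number of running replicas in this model never drops below the original count during the update.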

+

+

+

+```shell

+$ dstack apply -f my-service.dstack.yml

+

+⠋ Launching my-service...

+ NAME                            BACKEND          PRICE    STATUS       SUBMITTED

+ my-service deployment=1                                   running      11 mins ago

+   replica=0 job=0 deployment=0  aws (us-west-2)  $0.0026  terminating  11 mins ago

+   replica=1 job=0 deployment=1  aws (us-west-2)  $0.0026  running      1 min ago

+```

+

+The rolling deployment stops when all replicas are updated or when a new deployment is submitted.

+

+??? info "Supported properties"

+

+

+ Rolling deployment supports changes to the following properties: `port`, `resources`, `volumes`, `docker`, `files`, `image`, `user`, `privileged`, `entrypoint`, `working_dir`, `python`, `nvcc`, `single_branch`, `env`, `shell`, `commands`, as well as changes to [repo](repos.md) or [file](#files) contents.

+

+ Changes to `replicas` and `scaling` can be applied without redeploying replicas.

+

+ Changes to other properties require a full service restart.

+

+ To trigger a rolling deployment when no properties have changed (e.g., after updating [secrets](secrets.md) or to restart all replicas),

+ make a minor config change, such as adding a dummy [environment variable](#environment-variables).
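The rules in the note above can be read as a small decision procedure. This sketch encodes them with hypothetical names (`update_strategy`, `contents_changed`); it reflects only the documented property lists, not how `dstack` actually computes the difference between two configurations.

```python
# Property groups copied from the "Supported properties" note above.
ROLLING_PROPERTIES = frozenset({
    "port", "resources", "volumes", "docker", "files", "image", "user",
    "privileged", "entrypoint", "working_dir", "python", "nvcc",
    "single_branch", "env", "shell", "commands",
})
NO_REDEPLOY_PROPERTIES = frozenset({"replicas", "scaling"})


def update_strategy(changed: set[str], contents_changed: bool = False) -> str:
    """Classify changed top-level service properties (illustrative only).

    `contents_changed` stands in for changes to repo or file contents,
    which the note lists as triggering a rolling deployment.
    """
    if changed - ROLLING_PROPERTIES - NO_REDEPLOY_PROPERTIES:
        return "full restart"
    if contents_changed or changed & ROLLING_PROPERTIES:
        return "rolling deployment"
    if changed:
        return "in-place, no redeploy"
    return "no changes"


if __name__ == "__main__":
    print(update_strategy({"env"}))
    print(update_strategy({"replicas"}))
```

Note that a change with nothing to redeploy maps to `"no changes"`, which is why the note suggests a dummy environment variable to force a rolling deployment.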

+

--8<-- "docs/concepts/snippets/manage-runs.ext"

!!! info "What's next?"

diff --git a/docs/docs/guides/server-deployment.md b/docs/docs/guides/server-deployment.md

index 8ff4034e6..ee47f481a 100644

--- a/docs/docs/guides/server-deployment.md

+++ b/docs/docs/guides/server-deployment.md

@@ -124,7 +124,11 @@ Postgres has no such limitation and is recommended for production deployment.

### PostgreSQL

-To store the server state in Postgres, set the `DSTACK_DATABASE_URL` environment variable.

+To store the server state in Postgres, set the `DSTACK_DATABASE_URL` environment variable:

+

+```shell

+$ DSTACK_DATABASE_URL=postgresql+asyncpg://user:password@db-host:5432/dstack dstack server

+```

??? info "Migrate from SQLite to PostgreSQL"

You can migrate the existing state from SQLite to PostgreSQL using `pgloader`:

diff --git a/docs/docs/reference/environment-variables.md b/docs/docs/reference/environment-variables.md

index 4c5d44bd5..3c28ba333 100644

--- a/docs/docs/reference/environment-variables.md

+++ b/docs/docs/reference/environment-variables.md

@@ -123,6 +123,7 @@ For more details on the options below, refer to the [server deployment](../guide

- `DSTACK_DB_POOL_SIZE`{ #DSTACK_DB_POOL_SIZE } - The client DB connections pool size. Defaults to `20`,

- `DSTACK_DB_MAX_OVERFLOW`{ #DSTACK_DB_MAX_OVERFLOW } - The client DB connections pool allowed overflow. Defaults to `20`.

- `DSTACK_SERVER_BACKGROUND_PROCESSING_FACTOR`{ #DSTACK_SERVER_BACKGROUND_PROCESSING_FACTOR } - The number of background jobs for processing server resources. Increase if you need to process more resources per server replica quickly. Defaults to `1`.

+- `DSTACK_SERVER_BACKGROUND_PROCESSING_DISABLED`{ #DSTACK_SERVER_BACKGROUND_PROCESSING_DISABLED } - Disables background processing if set to any value. Useful for running a server replica that serves only the web frontend and API.

??? info "Internal environment variables"

The following environment variables are intended for development purposes:

diff --git a/docs/overrides/header-2.html b/docs/overrides/header-2.html

index 2c7e67679..4f8542d38 100644

--- a/docs/overrides/header-2.html

+++ b/docs/overrides/header-2.html

@@ -62,7 +62,7 @@

{% if "navigation.tabs.sticky" in features %}

diff --git a/docs/overrides/home.html b/docs/overrides/home.html

index 8c306dd36..b7c3ee994 100644

--- a/docs/overrides/home.html

+++ b/docs/overrides/home.html

@@ -509,20 +509,20 @@ FAQ

-

+

@@ -539,8 +539,8 @@ dstack Enterprise

diff --git a/docs/overrides/main.html b/docs/overrides/main.html

index 4a74fb3a8..8db019610 100644

--- a/docs/overrides/main.html

+++ b/docs/overrides/main.html

@@ -102,38 +102,42 @@

+

+

+

+

+

+

+

+

+

+

+

+

{% endblock %}

diff --git a/frontend/src/pages/Runs/Details/Logs/index.tsx b/frontend/src/pages/Runs/Details/Logs/index.tsx

index aaffbf41c..6fc550103 100644

--- a/frontend/src/pages/Runs/Details/Logs/index.tsx

+++ b/frontend/src/pages/Runs/Details/Logs/index.tsx

@@ -31,7 +31,7 @@ export const Logs: React.FC = ({ className, projectName, runName, jobSub

const writeDataToTerminal = (logs: ILogItem[]) => {

logs.forEach((logItem) => {

- terminalInstance.current.write(logItem.message);

+ terminalInstance.current.write(logItem.message.replace(/(? {

const { data, isLoading, refreshList, isLoadingMore } = useInfiniteScroll({

useLazyQuery: useLazyGetRunsQuery,

- args: { ...filteringRequestParams, limit: DEFAULT_TABLE_PAGE_SIZE },

+ args: { ...filteringRequestParams, limit: DEFAULT_TABLE_PAGE_SIZE, job_submissions_limit: 1 },

getPaginationParams: (lastRun) => ({ prev_submitted_at: lastRun.submitted_at }),

});

diff --git a/frontend/src/services/project.ts b/frontend/src/services/project.ts

index 9e05e444a..c7559784e 100644

--- a/frontend/src/services/project.ts

+++ b/frontend/src/services/project.ts

@@ -4,6 +4,8 @@ import { createApi, fetchBaseQuery } from '@reduxjs/toolkit/query/react';

import { base64ToArrayBuffer } from 'libs';

import fetchBaseQueryHeaders from 'libs/fetchBaseQueryHeaders';

+const decoder = new TextDecoder('utf-8');

+

// Helper function to transform backend response to frontend format

// eslint-disable-next-line @typescript-eslint/no-explicit-any

const transformProjectResponse = (project: any): IProject => ({

@@ -131,7 +133,7 @@ export const projectApi = createApi({

transformResponse: (response: { logs: ILogItem[]; next_token: string }) => {

const logs = response.logs.map((logItem) => ({

...logItem,

- message: base64ToArrayBuffer(logItem.message as string),

+ message: decoder.decode(base64ToArrayBuffer(logItem.message)),

}));

return {

diff --git a/frontend/src/types/log.d.ts b/frontend/src/types/log.d.ts

index eec182c1e..99e9532c8 100644

--- a/frontend/src/types/log.d.ts

+++ b/frontend/src/types/log.d.ts

@@ -1,7 +1,7 @@

declare interface ILogItem {

log_source: 'stdout' | 'stderr';

timestamp: string;

- message: string | Uint8Array;

+ message: string;

}

declare type TRequestLogsParams = {

diff --git a/frontend/src/types/run.d.ts b/frontend/src/types/run.d.ts

index eae9ebacc..2e613defb 100644

--- a/frontend/src/types/run.d.ts

+++ b/frontend/src/types/run.d.ts

@@ -7,6 +7,7 @@ declare type TRunsRequestParams = {

prev_run_id?: string;

limit?: number;

ascending?: boolean;

+ job_submissions_limit?: number;

};

declare type TDeleteRunsRequestParams = {

diff --git a/mkdocs.yml b/mkdocs.yml

index e6fac58ed..7d93d9623 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -172,7 +172,7 @@ markdown_extensions:

- pymdownx.tasklist:

custom_checkbox: true

- toc:

- toc_depth: 5

+ toc_depth: 3

permalink: true

- attr_list

- md_in_html

@@ -292,8 +292,9 @@ nav:

- TPU: examples/accelerators/tpu/index.md

- Intel Gaudi: examples/accelerators/intel/index.md

- Tenstorrent: examples/accelerators/tenstorrent/index.md

- - Benchmarks: blog/benchmarks.md

+ - Changelog: blog/changelog.md

- Case studies: blog/case-studies.md

+ - Benchmarks: blog/benchmarks.md

- Blog:

- blog/index.md

# - Discord: https://discord.gg/u8SmfwPpMd" target="_blank

diff --git a/pyproject.toml b/pyproject.toml

index 47353886b..736ba6768 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -35,6 +35,7 @@ dependencies = [

"gpuhunt==0.1.6",

"argcomplete>=3.5.0",

"ignore-python>=0.2.0",

+ "orjson",

]

[project.urls]

diff --git a/runner/go.mod b/runner/go.mod

index 22dad6466..850ea8253 100644

--- a/runner/go.mod

+++ b/runner/go.mod

@@ -1,6 +1,6 @@

module github.com/dstackai/dstack/runner

-go 1.23

+go 1.23.8

require (

github.com/alexellis/go-execute/v2 v2.2.1

@@ -10,6 +10,7 @@ require (

github.com/docker/docker v26.0.0+incompatible

github.com/docker/go-connections v0.5.0

github.com/docker/go-units v0.5.0

+ github.com/dstackai/ansistrip v0.0.6

github.com/go-git/go-git/v5 v5.12.0

github.com/golang/gddo v0.0.0-20210115222349-20d68f94ee1f

github.com/gorilla/websocket v1.5.1

@@ -62,6 +63,7 @@ require (

github.com/russross/blackfriday/v2 v2.1.0 // indirect

github.com/sergi/go-diff v1.3.2-0.20230802210424-5b0b94c5c0d3 // indirect

github.com/skeema/knownhosts v1.2.2 // indirect

+ github.com/tidwall/btree v1.7.0 // indirect

github.com/tklauser/go-sysconf v0.3.12 // indirect

github.com/tklauser/numcpus v0.6.1 // indirect

github.com/ulikunitz/xz v0.5.12 // indirect

@@ -77,10 +79,11 @@ require (

golang.org/x/mod v0.17.0 // indirect

golang.org/x/net v0.24.0 // indirect

golang.org/x/sync v0.7.0 // indirect

+ golang.org/x/time v0.5.0 // indirect

golang.org/x/tools v0.20.0 // indirect

google.golang.org/genproto/googleapis/api v0.0.0-20240401170217-c3f982113cda // indirect

google.golang.org/genproto/googleapis/rpc v0.0.0-20240401170217-c3f982113cda // indirect

gopkg.in/warnings.v0 v0.1.2 // indirect

gopkg.in/yaml.v3 v3.0.1 // indirect

- gotest.tools/v3 v3.5.0 // indirect

+ gotest.tools/v3 v3.5.1 // indirect

)

diff --git a/runner/go.sum b/runner/go.sum

index 41e133c46..1222fcac8 100644

--- a/runner/go.sum

+++ b/runner/go.sum

@@ -47,6 +47,8 @@ github.com/docker/go-connections v0.5.0 h1:USnMq7hx7gwdVZq1L49hLXaFtUdTADjXGp+uj

github.com/docker/go-connections v0.5.0/go.mod h1:ov60Kzw0kKElRwhNs9UlUHAE/F9Fe6GLaXnqyDdmEXc=

github.com/docker/go-units v0.5.0 h1:69rxXcBk27SvSaaxTtLh/8llcHD8vYHT7WSdRZ/jvr4=

github.com/docker/go-units v0.5.0/go.mod h1:fgPhTUdO+D/Jk86RDLlptpiXQzgHJF7gydDDbaIK4Dk=

+github.com/dstackai/ansistrip v0.0.6 h1:6qqeDNWt8NoqfkY1CxKUvdHpJzBl89LOE3wMwptVpaI=

+github.com/dstackai/ansistrip v0.0.6/go.mod h1:w3ejXI0twxDv6bPXhkOaPeYdbwz2nwcrcvFoZGqi9F0=

github.com/ebitengine/purego v0.8.1 h1:sdRKd6plj7KYW33EH5As6YKfe8m9zbN9JMrOjNVF/BE=

github.com/ebitengine/purego v0.8.1/go.mod h1:iIjxzd6CiRiOG0UyXP+V1+jWqUXVjPKLAI0mRfJZTmQ=

github.com/elazarl/goproxy v0.0.0-20230808193330-2592e75ae04a h1:mATvB/9r/3gvcejNsXKSkQ6lcIaNec2nyfOdlTBR2lU=

@@ -171,6 +173,8 @@ github.com/stretchr/testify v1.4.0/go.mod h1:j7eGeouHqKxXV5pUuKE4zz7dFj8WfuZ+81P

github.com/stretchr/testify v1.7.0/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

github.com/stretchr/testify v1.10.0 h1:Xv5erBjTwe/5IxqUQTdXv5kgmIvbHo3QQyRwhJsOfJA=

github.com/stretchr/testify v1.10.0/go.mod h1:r2ic/lqez/lEtzL7wO/rwa5dbSLXVDPFyf8C91i36aY=

+github.com/tidwall/btree v1.7.0 h1:L1fkJH/AuEh5zBnnBbmTwQ5Lt+bRJ5A8EWecslvo9iI=

+github.com/tidwall/btree v1.7.0/go.mod h1:twD9XRA5jj9VUQGELzDO4HPQTNJsoWWfYEL+EUQ2cKY=

github.com/tklauser/go-sysconf v0.3.12 h1:0QaGUFOdQaIVdPgfITYzaTegZvdCjmYO52cSFAEVmqU=

github.com/tklauser/go-sysconf v0.3.12/go.mod h1:Ho14jnntGE1fpdOqQEEaiKRpvIavV0hSfmBq8nJbHYI=

github.com/tklauser/numcpus v0.6.1 h1:ng9scYS7az0Bk4OZLvrNXNSAO2Pxr1XXRAPyjhIx+Fk=

@@ -281,8 +285,9 @@ golang.org/x/text v0.7.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=

golang.org/x/text v0.8.0/go.mod h1:e1OnstbJyHTd6l/uOt8jFFHp6TRDWZR/bV3emEE/zU8=

golang.org/x/text v0.14.0 h1:ScX5w1eTa3QqT8oi6+ziP7dTV1S2+ALU0bI+0zXKWiQ=

golang.org/x/text v0.14.0/go.mod h1:18ZOQIKpY8NJVqYksKHtTdi31H5itFRjB5/qKTNYzSU=

-golang.org/x/time v0.0.0-20170424234030-8be79e1e0910 h1:bCMaBn7ph495H+x72gEvgcv+mDRd9dElbzo/mVCMxX4=

golang.org/x/time v0.0.0-20170424234030-8be79e1e0910/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=

+golang.org/x/time v0.5.0 h1:o7cqy6amK/52YcAKIPlM3a+Fpj35zvRj2TP+e1xFSfk=

+golang.org/x/time v0.5.0/go.mod h1:3BpzKBy/shNhVucY/MWOyx10tF3SFh9QdLuxbVysPQM=

golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=

golang.org/x/tools v0.0.0-20191119224855-298f0cb1881e/go.mod h1:b+2E5dAYhXwXZwtnZ6UAqBI28+e2cm9otk0dWdXHAEo=

golang.org/x/tools v0.0.0-20200619180055-7c47624df98f/go.mod h1:EkVYQZoAsY45+roYkvgYkIh4xh/qjgUK9TdY2XT94GE=

@@ -318,5 +323,5 @@ gopkg.in/yaml.v2 v2.4.0/go.mod h1:RDklbk79AGWmwhnvt/jBztapEOGDOx6ZbXqjP6csGnQ=

gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

gopkg.in/yaml.v3 v3.0.1 h1:fxVm/GzAzEWqLHuvctI91KS9hhNmmWOoWu0XTYJS7CA=

gopkg.in/yaml.v3 v3.0.1/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

-gotest.tools/v3 v3.5.0 h1:Ljk6PdHdOhAb5aDMWXjDLMMhph+BpztA4v1QdqEW2eY=

-gotest.tools/v3 v3.5.0/go.mod h1:isy3WKz7GK6uNw/sbHzfKBLvlvXwUyV06n6brMxxopU=

+gotest.tools/v3 v3.5.1 h1:EENdUnS3pdur5nybKYIh2Vfgc8IUNBjxDPSjtiJcOzU=

+gotest.tools/v3 v3.5.1/go.mod h1:isy3WKz7GK6uNw/sbHzfKBLvlvXwUyV06n6brMxxopU=

diff --git a/runner/internal/executor/base.go b/runner/internal/executor/base.go

index 2163ca920..554bd7646 100644

--- a/runner/internal/executor/base.go

+++ b/runner/internal/executor/base.go

@@ -10,7 +10,7 @@ import (

type Executor interface {

GetHistory(timestamp int64) *schemas.PullResponse

- GetJobLogsHistory() []schemas.LogEvent

+ GetJobWsLogsHistory() []schemas.LogEvent

GetRunnerState() string

Run(ctx context.Context) error

SetCodePath(codePath string)

diff --git a/runner/internal/executor/executor.go b/runner/internal/executor/executor.go

index e14ce540d..d2ced5dc3 100644

--- a/runner/internal/executor/executor.go

+++ b/runner/internal/executor/executor.go

@@ -11,6 +11,7 @@ import (

"os/exec"

osuser "os/user"

"path/filepath"

+ "runtime"

"strconv"

"strings"

"sync"

@@ -18,6 +19,7 @@ import (

"time"

"github.com/creack/pty"

+ "github.com/dstackai/ansistrip"

"github.com/dstackai/dstack/runner/consts"

"github.com/dstackai/dstack/runner/internal/connections"

"github.com/dstackai/dstack/runner/internal/gerrors"

@@ -27,6 +29,24 @@ import (

"github.com/prometheus/procfs"

)

+// TODO: Tune these parameters for optimal experience/performance

+const (

+ // Output is flushed when the cursor doesn't move for this duration

+ AnsiStripFlushInterval = 500 * time.Millisecond

+

+ // Output is flushed regardless of cursor activity after this maximum delay

+ AnsiStripMaxDelay = 3 * time.Second

+

+ // Maximum buffer size for ansistrip

+ MaxBufferSize = 32 * 1024 // 32KB

+)

+

+type ConnectionTracker interface {

+ GetNoConnectionsSecs() int64

+ Track(ticker <-chan time.Time)

+ Stop()

+}

+

type RunExecutor struct {

tempDir string

homeDir string

@@ -47,13 +67,21 @@ type RunExecutor struct {

state string

jobStateHistory []schemas.JobStateEvent

jobLogs *appendWriter

+ jobWsLogs *appendWriter

runnerLogs *appendWriter

timestamp *MonotonicTimestamp

killDelay time.Duration

- connectionTracker *connections.ConnectionTracker

+ connectionTracker ConnectionTracker

}

+// stubConnectionTracker is a no-op implementation for when procfs is not available (only required for tests on darwin)

+type stubConnectionTracker struct{}

+

+func (s *stubConnectionTracker) GetNoConnectionsSecs() int64 { return 0 }

+func (s *stubConnectionTracker) Track(ticker <-chan time.Time) {}

+func (s *stubConnectionTracker) Stop() {}

+

func NewRunExecutor(tempDir string, homeDir string, workingDir string, sshPort int) (*RunExecutor, error) {

mu := &sync.RWMutex{}

timestamp := NewMonotonicTimestamp()

@@ -65,15 +93,25 @@ func NewRunExecutor(tempDir string, homeDir string, workingDir string, sshPort i

if err != nil {

return nil, fmt.Errorf("failed to parse current user uid: %w", err)

}

- proc, err := procfs.NewDefaultFS()

- if err != nil {

- return nil, fmt.Errorf("failed to initialize procfs: %w", err)

+

+ // Try to initialize procfs, but don't fail if it's not available (e.g., on macOS)

+ var connectionTracker ConnectionTracker

+

+ if runtime.GOOS == "linux" {

+ proc, err := procfs.NewDefaultFS()

+ if err != nil {

+ return nil, fmt.Errorf("failed to initialize procfs: %w", err)

+ }

+ connectionTracker = connections.NewConnectionTracker(connections.ConnectionTrackerConfig{

+ Port: uint64(sshPort),

+ MinConnDuration: 10 * time.Second, // shorter connections are likely from dstack-server

+ Procfs: proc,

+ })

+ } else {

+ // Use stub connection tracker (only required for tests on darwin)

+ connectionTracker = &stubConnectionTracker{}

}

- connectionTracker := connections.NewConnectionTracker(connections.ConnectionTrackerConfig{

- Port: uint64(sshPort),

- MinConnDuration: 10 * time.Second, // shorter connections are likely from dstack-server

- Procfs: proc,

- })

+

return &RunExecutor{

tempDir: tempDir,

homeDir: homeDir,

@@ -86,6 +124,7 @@ func NewRunExecutor(tempDir string, homeDir string, workingDir string, sshPort i

state: WaitSubmit,

jobStateHistory: make([]schemas.JobStateEvent, 0),

jobLogs: newAppendWriter(mu, timestamp),

+ jobWsLogs: newAppendWriter(mu, timestamp),

runnerLogs: newAppendWriter(mu, timestamp),

timestamp: timestamp,

@@ -129,7 +168,9 @@ func (ex *RunExecutor) Run(ctx context.Context) (err error) {

}

}()

- logger := io.MultiWriter(runnerLogFile, os.Stdout, ex.runnerLogs)

+ stripper := ansistrip.NewWriter(ex.runnerLogs, AnsiStripFlushInterval, AnsiStripMaxDelay, MaxBufferSize)

+ defer stripper.Close()

+ logger := io.MultiWriter(runnerLogFile, os.Stdout, stripper)

ctx = log.WithLogger(ctx, log.NewEntry(logger, int(log.DefaultEntry.Logger.Level))) // todo loglevel

log.Info(ctx, "Run job", "log_level", log.GetLogger(ctx).Logger.Level.String())

@@ -431,7 +472,9 @@ func (ex *RunExecutor) execJob(ctx context.Context, jobLogFile io.Writer) error

defer func() { _ = ptm.Close() }()

defer func() { _ = cmd.Wait() }() // release resources if copy fails

- logger := io.MultiWriter(jobLogFile, ex.jobLogs)

+ stripper := ansistrip.NewWriter(ex.jobLogs, AnsiStripFlushInterval, AnsiStripMaxDelay, MaxBufferSize)

+ defer stripper.Close()

+ logger := io.MultiWriter(jobLogFile, ex.jobWsLogs, stripper)

_, err = io.Copy(logger, ptm)

if err != nil && !isPtyError(err) {

return gerrors.Wrap(err)

diff --git a/runner/internal/executor/executor_test.go b/runner/internal/executor/executor_test.go

index 8d275b137..e13184513 100644

--- a/runner/internal/executor/executor_test.go

+++ b/runner/internal/executor/executor_test.go

@@ -9,6 +9,7 @@ import (

"os"

"os/exec"

"path/filepath"

+ "strings"

"testing"

"time"

@@ -17,8 +18,6 @@ import (

"github.com/stretchr/testify/require"

)

-// todo test get history

-

func TestExecutor_WorkingDir_Current(t *testing.T) {

var b bytes.Buffer

ex := makeTestExecutor(t)

@@ -28,7 +27,8 @@ func TestExecutor_WorkingDir_Current(t *testing.T) {

err := ex.execJob(context.TODO(), io.Writer(&b))

assert.NoError(t, err)

- assert.Equal(t, ex.workingDir+"\r\n", b.String())

+ // Normalize line endings for cross-platform compatibility.

+ assert.Equal(t, ex.workingDir+"\n", strings.ReplaceAll(b.String(), "\r\n", "\n"))

}

func TestExecutor_WorkingDir_Nil(t *testing.T) {

@@ -39,7 +39,7 @@ func TestExecutor_WorkingDir_Nil(t *testing.T) {

err := ex.execJob(context.TODO(), io.Writer(&b))

assert.NoError(t, err)

- assert.Equal(t, ex.workingDir+"\r\n", b.String())

+ assert.Equal(t, ex.workingDir+"\n", strings.ReplaceAll(b.String(), "\r\n", "\n"))

}

func TestExecutor_HomeDir(t *testing.T) {

@@ -49,7 +49,7 @@ func TestExecutor_HomeDir(t *testing.T) {

err := ex.execJob(context.TODO(), io.Writer(&b))

assert.NoError(t, err)

- assert.Equal(t, ex.homeDir+"\r\n", b.String())

+ assert.Equal(t, ex.homeDir+"\n", strings.ReplaceAll(b.String(), "\r\n", "\n"))

}

func TestExecutor_NonZeroExit(t *testing.T) {

@@ -61,7 +61,7 @@ func TestExecutor_NonZeroExit(t *testing.T) {

assert.Error(t, err)

assert.NotEmpty(t, ex.jobStateHistory)

exitStatus := ex.jobStateHistory[len(ex.jobStateHistory)-1].ExitStatus

- assert.NotNil(t, exitStatus, ex.jobStateHistory)

+ assert.NotNil(t, exitStatus)

assert.Equal(t, 100, *exitStatus)

}

@@ -96,7 +96,7 @@ func TestExecutor_LocalRepo(t *testing.T) {

err = ex.execJob(context.TODO(), io.Writer(&b))

assert.NoError(t, err)

- assert.Equal(t, "bar\r\n", b.String())

+ assert.Equal(t, "bar\n", strings.ReplaceAll(b.String(), "\r\n", "\n"))

}

func TestExecutor_Recover(t *testing.T) {

@@ -148,8 +148,8 @@ func TestExecutor_RemoteRepo(t *testing.T) {

err = ex.execJob(context.TODO(), io.Writer(&b))

assert.NoError(t, err)

- expected := fmt.Sprintf("%s\r\n%s\r\n%s\r\n", ex.getRepoData().RepoHash, ex.getRepoData().RepoConfigName, ex.getRepoData().RepoConfigEmail)

- assert.Equal(t, expected, b.String())

+ expected := fmt.Sprintf("%s\n%s\n%s\n", ex.getRepoData().RepoHash, ex.getRepoData().RepoConfigName, ex.getRepoData().RepoConfigEmail)

+ assert.Equal(t, expected, strings.ReplaceAll(b.String(), "\r\n", "\n"))

}

/* Helpers */

@@ -236,3 +236,98 @@ func TestWriteDstackProfile(t *testing.T) {

assert.Equal(t, value, string(out))

}

}

+

+func TestExecutor_Logs(t *testing.T) {

+ var b bytes.Buffer

+ ex := makeTestExecutor(t)

+ // Use printf to generate ANSI control codes.

+ // \033[31m = red text, \033[1;32m = bold green text, \033[0m = reset

+ ex.jobSpec.Commands = append(ex.jobSpec.Commands, "printf '\\033[31mRed Hello World\\033[0m\\n' && printf '\\033[1;32mBold Green Line 2\\033[0m\\n' && printf 'Line 3\\n'")

+

+ err := ex.execJob(context.TODO(), io.Writer(&b))

+ assert.NoError(t, err)

+

+ logHistory := ex.GetHistory(0).JobLogs

+ assert.NotEmpty(t, logHistory)

+

+ logString := combineLogMessages(logHistory)

+ normalizedLogString := strings.ReplaceAll(logString, "\r\n", "\n")

+

+ expectedOutput := "Red Hello World\nBold Green Line 2\nLine 3\n"

+ assert.Equal(t, expectedOutput, normalizedLogString, "Should strip ANSI codes from regular logs")

+

+ // Verify timestamps are in order

+ assert.Greater(t, len(logHistory), 0)

+ for i := 1; i < len(logHistory); i++ {

+ assert.GreaterOrEqual(t, logHistory[i].Timestamp, logHistory[i-1].Timestamp)

+ }

+}

+

+func TestExecutor_LogsWithErrors(t *testing.T) {

+ var b bytes.Buffer

+ ex := makeTestExecutor(t)

+ ex.jobSpec.Commands = append(ex.jobSpec.Commands, "echo 'Success message' && echo 'Error message' >&2 && exit 1")

+

+ err := ex.execJob(context.TODO(), io.Writer(&b))

+ assert.Error(t, err)

+

+ logHistory := ex.GetHistory(0).JobLogs

+ assert.NotEmpty(t, logHistory)

+

+ logString := combineLogMessages(logHistory)

+ normalizedLogString := strings.ReplaceAll(logString, "\r\n", "\n")

+

+ expectedOutput := "Success message\nError message\n"

+ assert.Equal(t, expectedOutput, normalizedLogString)

+}

+

+func TestExecutor_LogsAnsiCodeHandling(t *testing.T) {

+ var b bytes.Buffer

+ ex := makeTestExecutor(t)

+

+ // Test a variety of ANSI escape sequences on stdout and stderr.

+ cmd := "printf '\\033[31mRed\\033[0m \\033[32mGreen\\033[0m\\n' && " +

+ "printf '\\033[1mBold\\033[0m \\033[4mUnderline\\033[0m\\n' && " +

+ "printf '\\033[s\\033[uPlain text\\n' >&2"

+

+ ex.jobSpec.Commands = append(ex.jobSpec.Commands, cmd)

+

+ err := ex.execJob(context.TODO(), io.Writer(&b))

+ assert.NoError(t, err)

+

+ // 1. Check WebSocket logs, which should preserve ANSI codes.

+ wsLogHistory := ex.GetJobWsLogsHistory()

+ assert.NotEmpty(t, wsLogHistory)

+ wsLogString := combineLogMessages(wsLogHistory)

+ normalizedWsLogString := strings.ReplaceAll(wsLogString, "\r\n", "\n")

+

+ expectedWsOutput := "\033[31mRed\033[0m \033[32mGreen\033[0m\n" +

+ "\033[1mBold\033[0m \033[4mUnderline\033[0m\n" +

+ "\033[s\033[uPlain text\n"

+ assert.Equal(t, expectedWsOutput, normalizedWsLogString, "Websocket logs should preserve ANSI codes")

+

+ // 2. Check regular job logs, which should have ANSI codes stripped.

+ regularLogHistory := ex.GetHistory(0).JobLogs

+ assert.NotEmpty(t, regularLogHistory)

+ regularLogString := combineLogMessages(regularLogHistory)

+ normalizedRegularLogString := strings.ReplaceAll(regularLogString, "\r\n", "\n")

+

+ expectedRegularOutput := "Red Green\n" +

+ "Bold Underline\n" +

+ "Plain text\n"

+ assert.Equal(t, expectedRegularOutput, normalizedRegularLogString, "Regular logs should have ANSI codes stripped")

+

+ // Verify timestamps are ordered for both log types.

+ assert.Greater(t, len(wsLogHistory), 0)

+ for i := 1; i < len(wsLogHistory); i++ {

+ assert.GreaterOrEqual(t, wsLogHistory[i].Timestamp, wsLogHistory[i-1].Timestamp)

+ }

+}

+

+func combineLogMessages(logHistory []schemas.LogEvent) string {

+ var logOutput bytes.Buffer

+ for _, logEvent := range logHistory {

+ logOutput.Write(logEvent.Message)

+ }

+ return logOutput.String()

+}

diff --git a/runner/internal/executor/query.go b/runner/internal/executor/query.go

index 1dff4e330..6678e5f8d 100644

--- a/runner/internal/executor/query.go

+++ b/runner/internal/executor/query.go

@@ -4,8 +4,8 @@ import (

"github.com/dstackai/dstack/runner/internal/schemas"

)

-func (ex *RunExecutor) GetJobLogsHistory() []schemas.LogEvent {

- return ex.jobLogs.history

+func (ex *RunExecutor) GetJobWsLogsHistory() []schemas.LogEvent {

+ return ex.jobWsLogs.history

}

func (ex *RunExecutor) GetHistory(timestamp int64) *schemas.PullResponse {

diff --git a/runner/internal/metrics/metrics_test.go b/runner/internal/metrics/metrics_test.go

index 7f280da25..d547e2e33 100644

--- a/runner/internal/metrics/metrics_test.go

+++ b/runner/internal/metrics/metrics_test.go

@@ -1,6 +1,7 @@

package metrics

import (

+ "runtime"

"testing"

"github.com/dstackai/dstack/runner/internal/schemas"

@@ -8,6 +9,9 @@ import (

)

func TestGetAMDGPUMetrics_OK(t *testing.T) {

+ if runtime.GOOS == "darwin" {

+ t.Skip("Skipping on macOS")

+ }

collector, err := NewMetricsCollector()

assert.NoError(t, err)

@@ -39,6 +43,9 @@ func TestGetAMDGPUMetrics_OK(t *testing.T) {

}

func TestGetAMDGPUMetrics_ErrorGPUUtilNA(t *testing.T) {

+ if runtime.GOOS == "darwin" {

+ t.Skip("Skipping on macOS")

+ }

collector, err := NewMetricsCollector()

assert.NoError(t, err)

metrics, err := collector.getAMDGPUMetrics("gpu,gfx,gfx_clock,vram_used,vram_total\n0,N/A,N/A,283,196300\n")

diff --git a/runner/internal/runner/api/ws.go b/runner/internal/runner/api/ws.go

index cade1170a..ebb0caea2 100644

--- a/runner/internal/runner/api/ws.go

+++ b/runner/internal/runner/api/ws.go

@@ -34,23 +34,23 @@ func (s *Server) streamJobLogs(conn *websocket.Conn) {

for {

s.executor.RLock()

- jobLogsHistory := s.executor.GetJobLogsHistory()

+ jobLogsWsHistory := s.executor.GetJobWsLogsHistory()

select {

case <-s.shutdownCh:

- if currentPos >= len(jobLogsHistory) {

+ if currentPos >= len(jobLogsWsHistory) {

s.executor.RUnlock()

close(s.wsDoneCh)

return

}

default:

- if currentPos >= len(jobLogsHistory) {

+ if currentPos >= len(jobLogsWsHistory) {

s.executor.RUnlock()

time.Sleep(100 * time.Millisecond)

continue

}

}

- for currentPos < len(jobLogsHistory) {

- if err := conn.WriteMessage(websocket.BinaryMessage, jobLogsHistory[currentPos].Message); err != nil {

+ for currentPos < len(jobLogsWsHistory) {

+ if err := conn.WriteMessage(websocket.BinaryMessage, jobLogsWsHistory[currentPos].Message); err != nil {

s.executor.RUnlock()

log.Error(context.TODO(), "Failed to write message", "err", err)

return

diff --git a/runner/internal/shim/docker.go b/runner/internal/shim/docker.go

index 19462c9d7..1834188ae 100644

--- a/runner/internal/shim/docker.go

+++ b/runner/internal/shim/docker.go

@@ -274,6 +274,13 @@ func (d *DockerRunner) Run(ctx context.Context, taskID string) error {

cfg := task.config

var err error

+ runnerDir, err := d.dockerParams.MakeRunnerDir(task.containerName)

+ if err != nil {

+ return tracerr.Wrap(err)

+ }

+ task.runnerDir = runnerDir

+ log.Debug(ctx, "runner dir", "task", task.ID, "path", runnerDir)

+

if cfg.GPU != 0 {

gpuIDs, err := d.gpuLock.Acquire(ctx, cfg.GPU)

if err != nil {

@@ -335,7 +342,10 @@ func (d *DockerRunner) Run(ctx context.Context, taskID string) error {

if err := d.tasks.Update(task); err != nil {

return tracerr.Errorf("%w: failed to update task %s: %w", ErrInternal, task.ID, err)

}

- if err = pullImage(pullCtx, d.client, cfg); err != nil {

+ // Although it's called "runner dir", we also use it for shim task-related data.

+ // Maybe we should rename it to "task dir" (including the `/root/.dstack/runners` dir on the host).

+ pullLogPath := filepath.Join(runnerDir, "pull.log")

+ if err = pullImage(pullCtx, d.client, cfg, pullLogPath); err != nil {

errMessage := fmt.Sprintf("pullImage error: %s", err.Error())

log.Error(ctx, errMessage)

task.SetStatusTerminated(string(types.TerminationReasonCreatingContainerError), errMessage)

@@ -655,7 +665,7 @@ func mountDisk(ctx context.Context, deviceName, mountPoint string, fsRootPerms o

return nil

}

-func pullImage(ctx context.Context, client docker.APIClient, taskConfig TaskConfig) error {

+func pullImage(ctx context.Context, client docker.APIClient, taskConfig TaskConfig, logPath string) error {

if !strings.Contains(taskConfig.ImageName, ":") {

taskConfig.ImageName += ":latest"

}

@@ -685,51 +695,70 @@ func pullImage(ctx context.Context, client docker.APIClient, taskConfig TaskConf

if err != nil {

return tracerr.Wrap(err)

}

- defer func() { _ = reader.Close() }()

+ defer reader.Close()

+

+ logFile, err := os.OpenFile(logPath, os.O_CREATE|os.O_TRUNC|os.O_WRONLY, 0o644)

+ if err != nil {

+ return tracerr.Wrap(err)

+ }

+ defer logFile.Close()

+

+ teeReader := io.TeeReader(reader, logFile)

current := make(map[string]uint)

total := make(map[string]uint)

- type ProgressDetail struct {

- Current uint `json:"current"`

- Total uint `json:"total"`

- }

- type Progress struct {

- Id string `json:"id"`

- Status string `json:"status"`

- ProgressDetail ProgressDetail `json:"progressDetail"` //nolint:tagliatelle

- Error string `json:"error"`

+ // dockerd reports pulling progress as a stream of JSON Lines. The format of records is not documented in the API documentation,

+ // although it's occasionally mentioned, e.g., https://docs.docker.com/reference/api/engine/version-history/#v148-api-changes

+

+ // https://github.com/moby/moby/blob/e77ff99ede5ee5952b3a9227863552ae6e5b6fb1/pkg/jsonmessage/jsonmessage.go#L144

+ // All fields are optional

+ type PullMessage struct {

+ Id string `json:"id"` // layer id

+ Status string `json:"status"`

+ ProgressDetail struct {

+ Current uint `json:"current"` // bytes

+ Total uint `json:"total"` // bytes

+ } `json:"progressDetail"`

+ ErrorDetail struct {

+ Message string `json:"message"`

+ } `json:"errorDetail"`

}

- var status bool

+ var pullCompleted bool

pullErrors := make([]string, 0)

- scanner := bufio.NewScanner(reader)

+ scanner := bufio.NewScanner(teeReader)

for scanner.Scan() {

line := scanner.Bytes()

- var progressRow Progress

- if err := json.Unmarshal(line, &progressRow); err != nil {

+ var pullMessage PullMessage

+ if err := json.Unmarshal(line, &pullMessage); err != nil {

continue

}

- if progressRow.Status == "Downloading" {

- current[progressRow.Id] = progressRow.ProgressDetail.Current

- total[progressRow.Id] = progressRow.ProgressDetail.Total

+ if pullMessage.Status == "Downloading" {

+ current[pullMessage.Id] = pullMessage.ProgressDetail.Current

+ total[pullMessage.Id] = pullMessage.ProgressDetail.Total

}

- if progressRow.Status == "Download complete" {

- current[progressRow.Id] = total[progressRow.Id]

+ if pullMessage.Status == "Download complete" {

+ current[pullMessage.Id] = total[pullMessage.Id]

}

- if progressRow.Error != "" {

- log.Error(ctx, "error pulling image", "name", taskConfig.ImageName, "err", progressRow.Error)

- pullErrors = append(pullErrors, progressRow.Error)

+ if pullMessage.ErrorDetail.Message != "" {

+ log.Error(ctx, "error pulling image", "name", taskConfig.ImageName, "err", pullMessage.ErrorDetail.Message)

+ pullErrors = append(pullErrors, pullMessage.ErrorDetail.Message)

}

- if strings.HasPrefix(progressRow.Status, "Status:") {

- status = true

- log.Debug(ctx, progressRow.Status)

+ // If the pull is successful, the last two entries must be:

+ // "Digest: sha256:<digest>"

+ // "Status: <message>"

+ // where <message> is either "Downloaded newer image for <image>" or "Image is up to date for <image>".

+ // See: https://github.com/moby/moby/blob/e77ff99ede5ee5952b3a9227863552ae6e5b6fb1/daemon/containerd/image_pull.go#L134-L152

+ // See: https://github.com/moby/moby/blob/e77ff99ede5ee5952b3a9227863552ae6e5b6fb1/daemon/containerd/image_pull.go#L257-L263

+ if strings.HasPrefix(pullMessage.Status, "Status:") {

+ pullCompleted = true

+ log.Debug(ctx, pullMessage.Status)

}

}

duration := time.Since(startTime)

-

var currentBytes uint

var totalBytes uint

for _, v := range current {

@@ -738,9 +767,13 @@ func pullImage(ctx context.Context, client docker.APIClient, taskConfig TaskConf

for _, v := range total {

totalBytes += v

}

-

speed := bytesize.New(float64(currentBytes) / duration.Seconds())

- if status && currentBytes == totalBytes {

+

+ if err := ctx.Err(); err != nil {

+ return tracerr.Errorf("image pull interrupted: downloaded %d bytes out of %d (%s/s): %w", currentBytes, totalBytes, speed, err)

+ }

+

+ if pullCompleted {

log.Debug(ctx, "image successfully pulled", "bytes", currentBytes, "bps", speed)

} else {

return tracerr.Errorf(

@@ -749,21 +782,11 @@ func pullImage(ctx context.Context, client docker.APIClient, taskConfig TaskConf

)

}

- err = ctx.Err()

- if err != nil {

- return tracerr.Errorf("imagepull interrupted: downloaded %d bytes out of %d (%s/s): %w", currentBytes, totalBytes, speed, err)

- }

return nil

}

func (d *DockerRunner) createContainer(ctx context.Context, task *Task) error {

- runnerDir, err := d.dockerParams.MakeRunnerDir(task.containerName)

- if err != nil {

- return tracerr.Wrap(err)

- }

- task.runnerDir = runnerDir

-

- mounts, err := d.dockerParams.DockerMounts(runnerDir)

+ mounts, err := d.dockerParams.DockerMounts(task.runnerDir)

if err != nil {

return tracerr.Wrap(err)

}

diff --git a/runner/internal/shim/docker_test.go b/runner/internal/shim/docker_test.go

index 35c8cbab6..29a5e1afd 100644

--- a/runner/internal/shim/docker_test.go

+++ b/runner/internal/shim/docker_test.go

@@ -26,8 +26,9 @@ func TestDocker_SSHServer(t *testing.T) {

t.Parallel()

params := &dockerParametersMock{

- commands: []string{"echo 1"},

- sshPort: nextPort(),

+ commands: []string{"echo 1"},

+ sshPort: nextPort(),

+ runnerDir: t.TempDir(),

}

timeout := 180 // seconds

@@ -58,6 +59,7 @@ func TestDocker_SSHServerConnect(t *testing.T) {

commands: []string{"sleep 5"},

sshPort: nextPort(),

publicSSHKey: string(publicBytes),

+ runnerDir: t.TempDir(),

}

timeout := 180 // seconds

@@ -103,7 +105,8 @@ func TestDocker_ShmNoexecByDefault(t *testing.T) {

t.Parallel()

params := &dockerParametersMock{

- commands: []string{"mount | grep '/dev/shm .*size=65536k' | grep noexec"},

+ commands: []string{"mount | grep '/dev/shm .*size=65536k' | grep noexec"},

+ runnerDir: t.TempDir(),

}

timeout := 180 // seconds

@@ -125,7 +128,8 @@ func TestDocker_ShmExecIfSizeSpecified(t *testing.T) {

t.Parallel()

params := &dockerParametersMock{

- commands: []string{"mount | grep '/dev/shm .*size=1024k' | grep -v noexec"},

+ commands: []string{"mount | grep '/dev/shm .*size=1024k' | grep -v noexec"},

+ runnerDir: t.TempDir(),

}

timeout := 180 // seconds

@@ -148,6 +152,7 @@ type dockerParametersMock struct {

commands []string

sshPort int

publicSSHKey string

+ runnerDir string

}

func (c *dockerParametersMock) DockerPrivileged() bool {

@@ -184,7 +189,7 @@ func (c *dockerParametersMock) DockerMounts(string) ([]mount.Mount, error) {

}

func (c *dockerParametersMock) MakeRunnerDir(string) (string, error) {

- return "", nil

+ return c.runnerDir, nil

}

/* Utilities */

diff --git a/src/dstack/_internal/cli/services/configurators/fleet.py b/src/dstack/_internal/cli/services/configurators/fleet.py

index b501f0b9d..2a7eeb4d5 100644

--- a/src/dstack/_internal/cli/services/configurators/fleet.py

+++ b/src/dstack/_internal/cli/services/configurators/fleet.py

@@ -25,6 +25,7 @@

ServerClientError,

URLNotFoundError,

)

+from dstack._internal.core.models.common import ApplyAction

from dstack._internal.core.models.configurations import ApplyConfigurationType

from dstack._internal.core.models.fleets import (

Fleet,

@@ -72,7 +73,104 @@ def apply_configuration(

spec=spec,

)

_print_plan_header(plan)

+ if plan.action is not None:

+ self._apply_plan(plan, command_args)

+ else:

+ # Old servers don't support spec update

+ self._apply_plan_on_old_server(plan, command_args)

+

+ def _apply_plan(self, plan: FleetPlan, command_args: argparse.Namespace):

+ delete_fleet_name: Optional[str] = None

+ action_message = ""

+ confirm_message = ""

+ if plan.current_resource is None:

+ if plan.spec.configuration.name is not None:

+ action_message += (

+ f"Fleet [code]{plan.spec.configuration.name}[/] does not exist yet."

+ )

+ confirm_message += "Create the fleet?"

+ else:

+ action_message += f"Found fleet [code]{plan.spec.configuration.name}[/]."

+ if plan.action == ApplyAction.CREATE:

+ delete_fleet_name = plan.current_resource.name

+ action_message += (

+ " Configuration changes detected. Cannot update the fleet in-place."

+ )

+ confirm_message += "Re-create the fleet?"

+ elif plan.current_resource.spec == plan.effective_spec:

+ if command_args.yes and not command_args.force:

+ # --force is required only with --yes,

+ # otherwise we may ask for force apply interactively.

+ console.print(

+ "No configuration changes detected. Use --force to apply anyway."

+ )

+ return

+ delete_fleet_name = plan.current_resource.name

+ action_message += " No configuration changes detected."

+ confirm_message += "Re-create the fleet?"

+ else:

+ action_message += " Configuration changes detected."

+ confirm_message += "Update the fleet in-place?"

+

+ console.print(action_message)

+ if not command_args.yes and not confirm_ask(confirm_message):

+ console.print("\nExiting...")

+ return

+

+ if delete_fleet_name is not None:

+ with console.status("Deleting existing fleet..."):

+ self.api.client.fleets.delete(

+ project_name=self.api.project, names=[delete_fleet_name]

+ )

+ # Fleet deletion is async. Wait for fleet to be deleted.

+ while True:

+ try:

+ self.api.client.fleets.get(

+ project_name=self.api.project, name=delete_fleet_name

+ )

+ except ResourceNotExistsError:

+ break

+ else:

+ time.sleep(1)

+

+ try:

+ with console.status("Applying plan..."):

+ fleet = self.api.client.fleets.apply_plan(project_name=self.api.project, plan=plan)

+ except ServerClientError as e:

+ raise CLIError(e.msg)

+ if command_args.detach:

+ console.print("Fleet configuration submitted. Exiting...")

+ return

+ try:

+ with MultiItemStatus(

+ f"Provisioning [code]{fleet.name}[/]...", console=console

+ ) as live:

+ while not _finished_provisioning(fleet):

+ table = get_fleets_table([fleet])

+ live.update(table)

+ time.sleep(LIVE_TABLE_PROVISION_INTERVAL_SECS)

+ fleet = self.api.client.fleets.get(self.api.project, fleet.name)

+ except KeyboardInterrupt:

+ if confirm_ask("Delete the fleet before exiting?"):

+ with console.status("Deleting fleet..."):

+ self.api.client.fleets.delete(

+ project_name=self.api.project, names=[fleet.name]

+ )

+ else:

+ console.print("Exiting... Fleet provisioning will continue in the background.")

+ return

+ console.print(

+ get_fleets_table(

+ [fleet],

+ verbose=_failed_provisioning(fleet),

+ format_date=local_time,

+ )

+ )

+ if _failed_provisioning(fleet):

+ console.print("\n[error]Some instances failed. Check the table above for errors.[/]")

+ exit(1)

+ def _apply_plan_on_old_server(self, plan: FleetPlan, command_args: argparse.Namespace):

action_message = ""

confirm_message = ""

if plan.current_resource is None:

@@ -86,7 +184,7 @@ def apply_configuration(

diff = diff_models(

old=plan.current_resource.spec.configuration,

new=plan.spec.configuration,

- ignore={

+ reset={

"ssh_config": {

"ssh_key": True,

"proxy_jump": {"ssh_key"},

diff --git a/src/dstack/_internal/cli/services/profile.py b/src/dstack/_internal/cli/services/profile.py

index 23bbe55ad..d57ea2e13 100644

--- a/src/dstack/_internal/cli/services/profile.py

+++ b/src/dstack/_internal/cli/services/profile.py

@@ -159,7 +159,7 @@ def apply_profile_args(

if args.idle_duration is not None:

profile_settings.idle_duration = args.idle_duration

elif args.dont_destroy:

- profile_settings.idle_duration = False

+ profile_settings.idle_duration = "off"

if args.creation_policy_reuse:

profile_settings.creation_policy = CreationPolicy.REUSE

diff --git a/src/dstack/_internal/core/compatibility/runs.py b/src/dstack/_internal/core/compatibility/runs.py

index 1deea7ee0..5eaf10ed2 100644

--- a/src/dstack/_internal/core/compatibility/runs.py

+++ b/src/dstack/_internal/core/compatibility/runs.py

@@ -3,7 +3,16 @@

from dstack._internal.core.models.common import IncludeExcludeDictType, IncludeExcludeSetType

from dstack._internal.core.models.configurations import ServiceConfiguration

from dstack._internal.core.models.runs import ApplyRunPlanInput, JobSpec, JobSubmission, RunSpec

-from dstack._internal.server.schemas.runs import GetRunPlanRequest

+from dstack._internal.server.schemas.runs import GetRunPlanRequest, ListRunsRequest

+

+

+def get_list_runs_excludes(list_runs_request: ListRunsRequest) -> IncludeExcludeSetType:

+ excludes = set()

+ if list_runs_request.include_jobs:

+ excludes.add("include_jobs")

+ if list_runs_request.job_submissions_limit is None:

+ excludes.add("job_submissions_limit")

+ return excludes

def get_apply_plan_excludes(plan: ApplyRunPlanInput) -> Optional[IncludeExcludeDictType]:

@@ -139,6 +148,8 @@ def get_job_spec_excludes(job_specs: list[JobSpec]) -> IncludeExcludeDictType:

spec_excludes["repo_data"] = True

if all(not s.file_archives for s in job_specs):

spec_excludes["file_archives"] = True

+ if all(s.service_port is None for s in job_specs):

+ spec_excludes["service_port"] = True

return spec_excludes
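The compatibility helpers above return exclude specs (`True` to drop a field, a nested dict or set to drop sub-fields) so that a newer client omits fields an older server would not recognize, such as `service_port` when no job spec sets it. The sketch below applies such a spec to a plain dict payload to show the effect. It is a simplified, hypothetical stand-in for pydantic's own `exclude` handling: it only supports string keys, not the integer list indices that `IncludeExcludeFieldType` also allows.

```python
from typing import Any, Dict, Set, Union

Excludes = Union[Set[str], Dict[str, Any]]


def apply_excludes(data: Dict[str, Any], excludes: Excludes) -> Dict[str, Any]:
    """Return a copy of `data` without the fields named in `excludes`.

    A set drops the listed keys; in a dict, `True` drops the key and a nested
    set/dict is applied recursively to that key's (dict) value.
    """
    if isinstance(excludes, set):
        return {k: v for k, v in data.items() if k not in excludes}
    result: Dict[str, Any] = {}
    for key, value in data.items():
        rule = excludes.get(key)
        if rule is True:
            continue  # drop the whole field
        if rule and isinstance(value, dict):
            result[key] = apply_excludes(value, rule)
        else:
            result[key] = value
    return result


def job_spec_excludes(job_specs: list) -> Dict[str, Any]:
    """Hypothetical mirror of the new rule: omit service_port if no spec sets it."""
    excludes: Dict[str, Any] = {}
    if all(spec.get("service_port") is None for spec in job_specs):
        excludes["service_port"] = True
    return excludes
```

Excluding a field only when it holds its default keeps requests from new clients byte-compatible with what old servers expect, while still sending the field whenever a user actually sets it.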

diff --git a/src/dstack/_internal/core/compatibility/volumes.py b/src/dstack/_internal/core/compatibility/volumes.py

index 7395674f9..4b7be6bb0 100644

--- a/src/dstack/_internal/core/compatibility/volumes.py

+++ b/src/dstack/_internal/core/compatibility/volumes.py

@@ -30,4 +30,6 @@ def _get_volume_configuration_excludes(

configuration_excludes: IncludeExcludeDictType = {}

if configuration.tags is None:

configuration_excludes["tags"] = True

+ if configuration.auto_cleanup_duration is None:

+ configuration_excludes["auto_cleanup_duration"] = True

return configuration_excludes
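The volume change follows the same pattern: each configuration field added in a newer release is excluded when it is `None`. The sketch below builds that exclude dict from an explicit list of newer optional fields. `VolumeConfig` is a hypothetical dataclass stand-in for `VolumeConfiguration` (the real model is a pydantic model with more fields), and the `str` type for `auto_cleanup_duration` is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class VolumeConfig:  # hypothetical stand-in for VolumeConfiguration
    name: str
    backend: str
    tags: Optional[Dict[str, str]] = None
    auto_cleanup_duration: Optional[str] = None  # type assumed for illustration


# Fields that older servers may not know about, in the order they were added.
NEWER_OPTIONAL_FIELDS = ("tags", "auto_cleanup_duration")


def configuration_excludes(config: VolumeConfig) -> Dict[str, bool]:
    """Exclude each newer optional field that the user left unset."""
    return {
        name: True
        for name in NEWER_OPTIONAL_FIELDS
        if getattr(config, name) is None
    }
```

Listing the fields explicitly, rather than excluding every `None`, keeps the exclusions tied to fields that actually postdate older server versions.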

diff --git a/src/dstack/_internal/core/models/common.py b/src/dstack/_internal/core/models/common.py

index c347cf0d3..a13922671 100644

--- a/src/dstack/_internal/core/models/common.py

+++ b/src/dstack/_internal/core/models/common.py

@@ -1,11 +1,14 @@

import re

from enum import Enum

-from typing import Union

+from typing import Any, Callable, Optional, Union

+import orjson

from pydantic import Field

from pydantic_duality import DualBaseModel

from typing_extensions import Annotated

+from dstack._internal.utils.json_utils import pydantic_orjson_dumps

+

IncludeExcludeFieldType = Union[int, str]

IncludeExcludeSetType = set[IncludeExcludeFieldType]

IncludeExcludeDictType = dict[

@@ -20,7 +23,40 @@

# This allows to use the same model both for a strict parsing of the user input and

# for a permissive parsing of the server responses.

class CoreModel(DualBaseModel):

- pass

+ class Config:

+ json_loads = orjson.loads

+ json_dumps = pydantic_orjson_dumps

+

+ def json(

+ self,

+ *,

+ include: Optional[IncludeExcludeType] = None,

+ exclude: Optional[IncludeExcludeType] = None,

+ by_alias: bool = False,

+ skip_defaults: Optional[bool] = None, # ignore as it's deprecated

+ exclude_unset: bool = False,

+ exclude_defaults: bool = False,

+ exclude_none: bool = False,

+ encoder: Optional[Callable[[Any], Any]] = None,

+ models_as_dict: bool = True, # does not seem to be needed by dstack or dependencies

+ **dumps_kwargs: Any,

+ ) -> str:

+ """

+ Override `json()` method so that it calls `dict()`.

+ Allows changing how models are serialized by overriding `dict()` only.

+ By default, `json()` won't call `dict()`, so changes applied in `dict()` won't take place.

+ """

+ data = self.dict(

+ by_alias=by_alias,

+ include=include,

+ exclude=exclude,

+ exclude_unset=exclude_unset,

+ exclude_defaults=exclude_defaults,

+ exclude_none=exclude_none,

+ )

+ if self.__custom_root_type__:

+ data = data["__root__"]

+ return self.__config__.json_dumps(data, default=encoder, **dumps_kwargs)

class Duration(int):
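The `CoreModel.json()` override routes serialization through `dict()`, so a subclass that customizes `dict()` changes its JSON output too, and custom-root models are unwrapped from `__root__`. The sketch below reproduces that design with a plain Python class and the standard `json` module instead of pydantic v1 and orjson; `Model`, `Secret`, `Names`, and the `custom_root_type` attribute are hypothetical names for illustration only.

```python
import json
from typing import Any, Dict


class Model:
    """Minimal stand-in for a pydantic v1 model (hypothetical)."""

    custom_root_type = False  # plays the role of pydantic's __custom_root_type__

    def __init__(self, **fields: Any) -> None:
        self.__dict__.update(fields)

    def dict(self) -> Dict[str, Any]:
        # Inside a method, `dict` still resolves to the builtin.
        return dict(self.__dict__)

    def json(self, **dumps_kwargs: Any) -> str:
        # Serialize whatever dict() returns, so overriding dict() is enough.
        data: Any = self.dict()
        if self.custom_root_type:
            data = data["__root__"]
        return json.dumps(data, **dumps_kwargs)


class Secret(Model):
    def dict(self) -> Dict[str, Any]:
        data = super().dict()
        data["token"] = "***"  # redaction applies to dict() and json() alike
        return data


class Names(Model):
    custom_root_type = True
```

For example, `Secret(user="a", token="t").json()` yields JSON with `"token": "***"` without `Secret` touching `json()` at all.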

diff --git a/src/dstack/_internal/core/models/configurations.py b/src/dstack/_internal/core/models/configurations.py

index 97be403ca..a22db9e36 100644

--- a/src/dstack/_internal/core/models/configurations.py

+++ b/src/dstack/_internal/core/models/configurations.py

@@ -4,6 +4,7 @@

from pathlib import PurePosixPath

from typing import Any, Dict, List, Optional, Union

+import orjson

from pydantic import Field, ValidationError, conint, constr, root_validator, validator

from typing_extensions import Annotated, Literal

@@ -18,6 +19,9 @@

from dstack._internal.core.models.services import AnyModel, OpenAIChatModel

from dstack._internal.core.models.unix import UnixUser

from dstack._internal.core.models.volumes import MountPoint, VolumeConfiguration, parse_mount_point

+from dstack._internal.utils.json_utils import (

+ pydantic_orjson_dumps_with_indent,

+)

CommandsList = List[str]

ValidPort = conint(gt=0, le=65536)

@@ -394,8 +398,9 @@ class TaskConfiguration(

class ServiceConfigurationParams(CoreModel):

port: Annotated[

+ # NOTE: it's a PortMapping for historical reasons. Only `port.container_port` is used.

Union[ValidPort, constr(regex=r"^[0-9]+:[0-9]+$"), PortMapping],

- Field(description="The port, that application listens on or the mapping"),

+ Field(description="The port the application listens on"),

]

gateway: Annotated[

Optional[Union[bool, str]],

@@ -573,6 +578,9 @@ class DstackConfiguration(CoreModel):

]

class Config:

+ json_loads = orjson.loads

+ json_dumps = pydantic_orjson_dumps_with_indent

+

@staticmethod

def schema_extra(schema: Dict[str, Any]):

schema["$schema"] = "http://json-schema.org/draft-07/schema#"
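The service `port` field still accepts an integer, a `"N:M"` string, or a `PortMapping`, but the new comment notes that only the container port is used. The sketch below normalizes all three forms to a container port. `PortMapping` here is a hypothetical dataclass stand-in, and treating the second number of `"N:M"` as the container port (local first, container second) is an assumption. The sketch checks the valid TCP range 1 to 65535.

```python
import re
from dataclasses import dataclass
from typing import Union

MIN_PORT, MAX_PORT = 1, 65535  # valid TCP port range


@dataclass
class PortMapping:  # hypothetical stand-in for dstack's PortMapping
    local_port: int
    container_port: int


def _check(port: int) -> int:
    if not MIN_PORT <= port <= MAX_PORT:
        raise ValueError(f"port out of range: {port}")
    return port


def container_port(spec: Union[int, str, PortMapping]) -> int:
    """Return the port the application listens on inside the container."""
    if isinstance(spec, PortMapping):
        return _check(spec.container_port)
    if isinstance(spec, bool):
        raise ValueError("port must not be a boolean")
    if isinstance(spec, int):
        return _check(spec)
    # Assumed "local:container" order; fullmatch rejects trailing newlines too.
    match = re.fullmatch(r"([0-9]+):([0-9]+)", spec)
    if match is None:
        raise ValueError(f"invalid port spec: {spec!r}")
    return _check(int(match.group(2)))
```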

diff --git a/src/dstack/_internal/core/models/fleets.py b/src/dstack/_internal/core/models/fleets.py

index 6cf970a95..fd616b754 100644

--- a/src/dstack/_internal/core/models/fleets.py

+++ b/src/dstack/_internal/core/models/fleets.py

@@ -8,7 +8,7 @@

from typing_extensions import Annotated, Literal

from dstack._internal.core.models.backends.base import BackendType

-from dstack._internal.core.models.common import CoreModel

+from dstack._internal.core.models.common import ApplyAction, CoreModel

from dstack._internal.core.models.envs import Env

from dstack._internal.core.models.instances import Instance, InstanceOfferWithAvailability, SSHKey

from dstack._internal.core.models.profiles import (

@@ -324,6 +324,7 @@ class FleetPlan(CoreModel):

offers: List[InstanceOfferWithAvailability]

total_offers: int

max_offer_price: Optional[float] = None

+ action: Optional[ApplyAction] = None # default value for backward compatibility

def get_effective_spec(self) -> FleetSpec: