|

| 1 | +# Metrics |

| 2 | + |

| 3 | +A cortex cluster includes a deployment of Prometheus for metrics collections and a deployment of Grafana for |

| 4 | +visualization. You can monitor your APIs with the Grafana dashboards that ship with Cortex, or even add custom metrics |

| 5 | +and dashboards. |

| 6 | + |

| 7 | +## Accessing the dashboard |

| 8 | + |

| 9 | +The dashboard URL is displayed once you run a `cortex get <api_name>` command. |

| 10 | + |

| 11 | +Alternatively, you can access it on `http://<operator_url>/dashboard`. Run the following command to get the operator |

| 12 | +URL: |

| 13 | + |

| 14 | +```shell |

| 15 | +cortex env list |

| 16 | +``` |

| 17 | + |

| 18 | +If your operator load balancer is configured to be internal, there are a few options for accessing the dashboard: |

| 19 | + |

| 20 | +1. Access the dashboard from a machine that has VPC Peering configured to your cluster's VPC, or which is inside of your |

| 21 | + cluster's VPC |

| 22 | +1. Run `kubectl port-forward -n default grafana-0 3000:3000` to forward Grafana's port to your local machine, and access |

| 23 | + the dashboard on [http://localhost:3000/](http://localhost:3000/) (see instructions for setting up `kubectl` |

| 24 | + on [AWS](../../clusters/aws/kubectl.md) or [GCP](../../clusters/gcp/kubectl.md)) |

| 25 | +1. Set up VPN access to your cluster's |

| 26 | + VPC ([AWS docs](https://docs.aws.amazon.com/vpc/latest/userguide/vpn-connections.html)) |

| 27 | + |

| 28 | +### Default credentials |

| 29 | + |

| 30 | +The dashboard is protected with username / password authentication, which by default are: |

| 31 | + |

| 32 | +- Username: admin |

| 33 | +- Password: admin |

| 34 | + |

| 35 | +You will be prompted to change the admin user password in the first time you log in. |

| 36 | + |

| 37 | +Grafana allows managing the access of several users and managing teams. For more information on this topic check |

| 38 | +the [grafana documentation](https://grafana.com/docs/grafana/latest/manage-users/). |

| 39 | + |

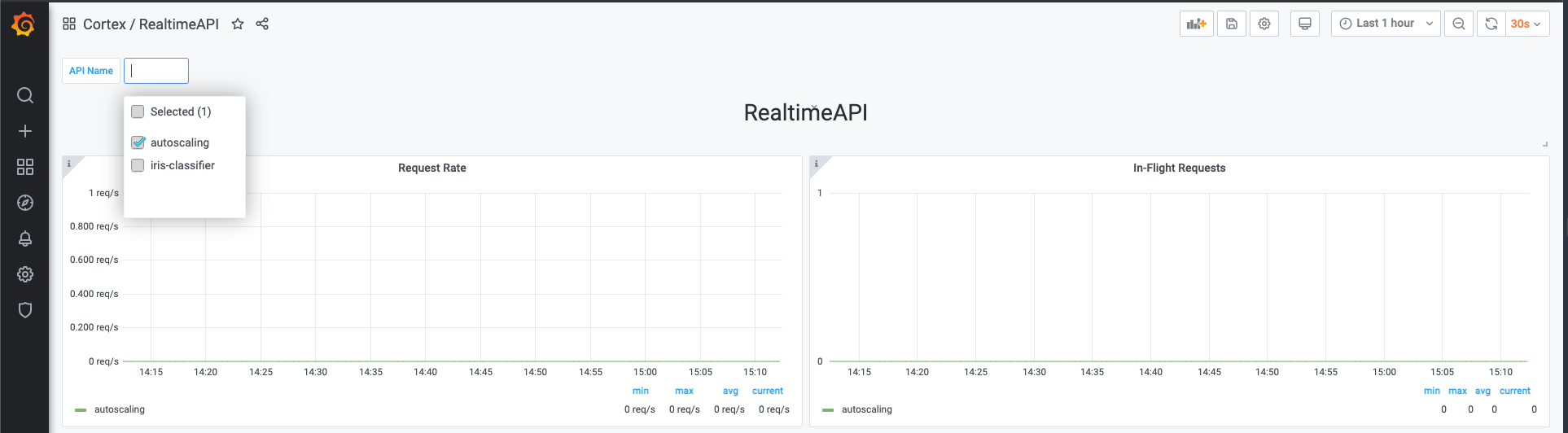

| 40 | +### Selecting an API |

| 41 | + |

| 42 | +You can select one or more APIs to visualize in the top left corner of the dashboard. |

| 43 | + |

| 44 | + |

| 45 | + |

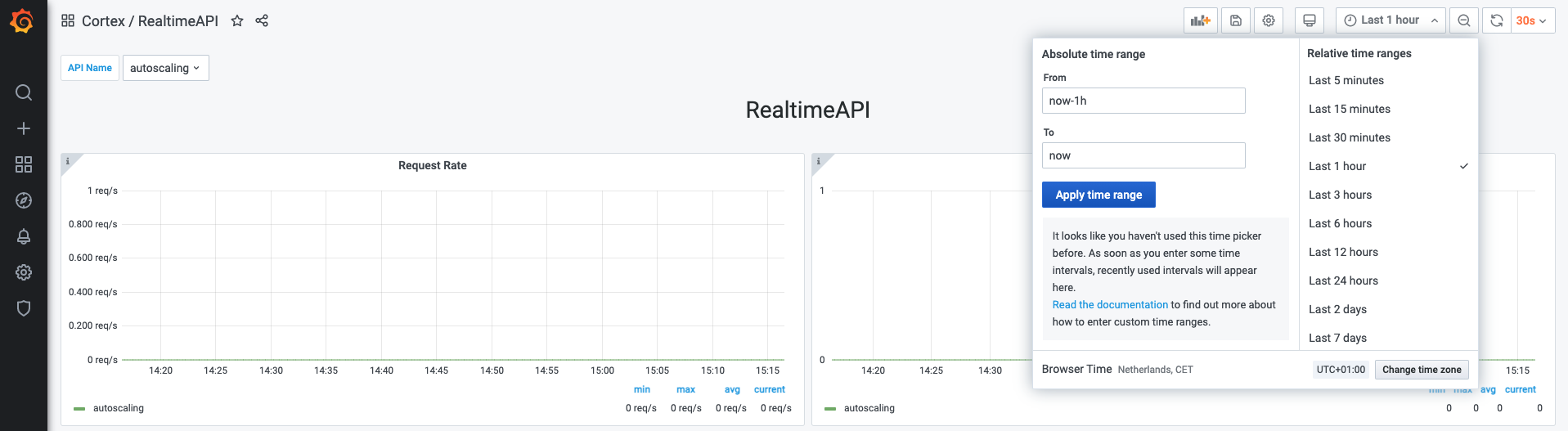

| 46 | +### Selecting a time range |

| 47 | + |

| 48 | +Grafana allows you to select a time range on which the metrics will be visualized. You can do so in the top right corner |

| 49 | +of the dashboard. |

| 50 | + |

| 51 | + |

| 52 | + |

| 53 | +**Note: Cortex only retains a maximum of 2 weeks worth of data at any moment in time** |

| 54 | + |

| 55 | +### Available dashboards |

| 56 | + |

| 57 | +There are more than one dashboard available by default. You can view the available dashboards by accessing the Grafana |

| 58 | +menu: `Dashboards -> Manage -> Cortex folder`. |

| 59 | + |

| 60 | +The dashboards that Cortex ships with are the following: |

| 61 | + |

| 62 | +- RealtimeAPI |

| 63 | +- BatchAPI |

| 64 | +- Cluster resources |

| 65 | +- Node resources |

| 66 | + |

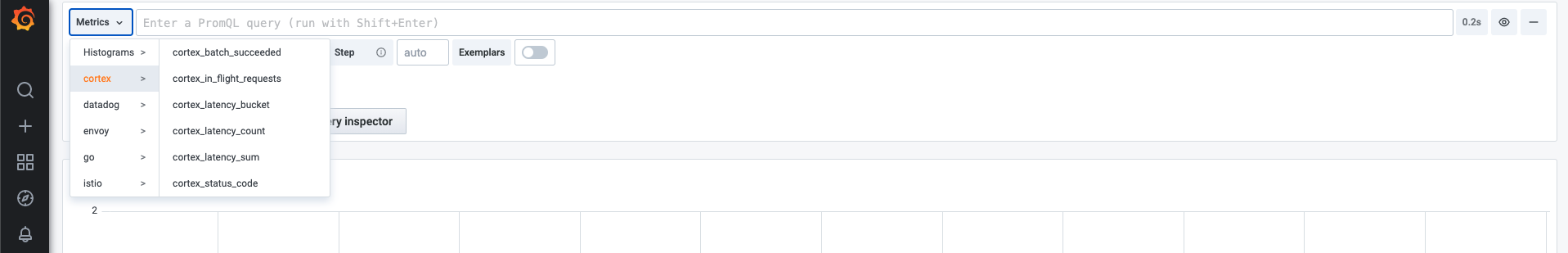

| 67 | +## Exposed metrics |

| 68 | + |

| 69 | +Cortex exposes more metrics with Prometheus, that can be potentially useful. To check the available metrics, access |

| 70 | +the `Explore` menu in grafana and press the `Metrics` button. |

| 71 | + |

| 72 | + |

| 73 | + |

| 74 | +You can use any of these metrics to set up your own dashboards. |

| 75 | + |

| 76 | +## Custom user metrics |

| 77 | + |

| 78 | +It is possible to export your own custom metrics by using the `MetricsClient` class in your predictor code. This allows |

| 79 | +you to create a custom metrics from your deployed API that can be later be used on your own custom dashboards. |

| 80 | + |

| 81 | +Code examples on how to use custom metrics for each API kind can be found here: |

| 82 | + |

| 83 | +- [RealtimeAPI](../realtime/metrics.md#custom-user-metrics) |

| 84 | +- [BatchAPI](../batch/metrics.md#custom-user-metrics) |

| 85 | +- [TaskAPI](../task/metrics.md#custom-user-metrics) |

| 86 | + |

| 87 | +### Metric types |

| 88 | + |

| 89 | +Currently, we only support 3 different metric types that will be converted to its respective Prometheus type: |

| 90 | + |

| 91 | +- [Counter](https://prometheus.io/docs/concepts/metric_types/#counter) - a cumulative metric that represents a single |

| 92 | + monotonically increasing counter whose value can only increase or be reset to zero on restart. |

| 93 | +- [Gauge](https://prometheus.io/docs/concepts/metric_types/#gauge) - a single numerical value that can arbitrarily go up |

| 94 | + and down. |

| 95 | +- [Histogram](https://prometheus.io/docs/concepts/metric_types/#histogram) - samples observations (usually things like |

| 96 | + request durations or response sizes) and counts them in configurable buckets. It also provides a sum of all observed |

| 97 | + values. |

| 98 | + |

| 99 | +### Pushing metrics |

| 100 | + |

| 101 | + - Counter |

| 102 | + |

| 103 | + ```python |

| 104 | + metrics.increment('my_counter', value=1, tags={"tag": "tag_name"}) |

| 105 | + ``` |

| 106 | + |

| 107 | + - Gauge |

| 108 | + |

| 109 | + ```python |

| 110 | + metrics.gauge('active_connections', value=1001, tags={"tag": "tag_name"}) |

| 111 | + ``` |

| 112 | + |

| 113 | + - Histogram |

| 114 | + |

| 115 | + ```python |

| 116 | + metrics.histogram('inference_time_milliseconds', 120, tags={"tag": "tag_name"}) |

| 117 | + ``` |

| 118 | + |

| 119 | +### Metrics client class reference |

| 120 | + |

| 121 | +```python |

| 122 | +class MetricsClient: |

| 123 | + |

| 124 | + def gauge(self, metric: str, value: float, tags: Dict[str, str] = None): |

| 125 | + """ |

| 126 | + Record the value of a gauge. |

| 127 | +

|

| 128 | + Example: |

| 129 | + >>> metrics.gauge('active_connections', 1001, tags={"protocol": "http"}) |

| 130 | + """ |

| 131 | + pass |

| 132 | + |

| 133 | + def increment(self, metric: str, value: float = 1, tags: Dict[str, str] = None): |

| 134 | + """ |

| 135 | + Increment the value of a counter. |

| 136 | +

|

| 137 | + Example: |

| 138 | + >>> metrics.increment('model_calls', 1, tags={"model_version": "v1"}) |

| 139 | + """ |

| 140 | + pass |

| 141 | + |

| 142 | + def histogram(self, metric: str, value: float, tags: Dict[str, str] = None): |

| 143 | + """ |

| 144 | + Set the value in a histogram metric |

| 145 | +

|

| 146 | + Example: |

| 147 | + >>> metrics.histogram('inference_time_milliseconds', 120, tags={"model_version": "v1"}) |

| 148 | + """ |

| 149 | + pass |

| 150 | +``` |

0 commit comments