Add metrics for Limit and Projection, and CoalesceBatches #1004

Which issue does this PR close?

Next part of #866 (following the same model as #960).

Rationale for this change

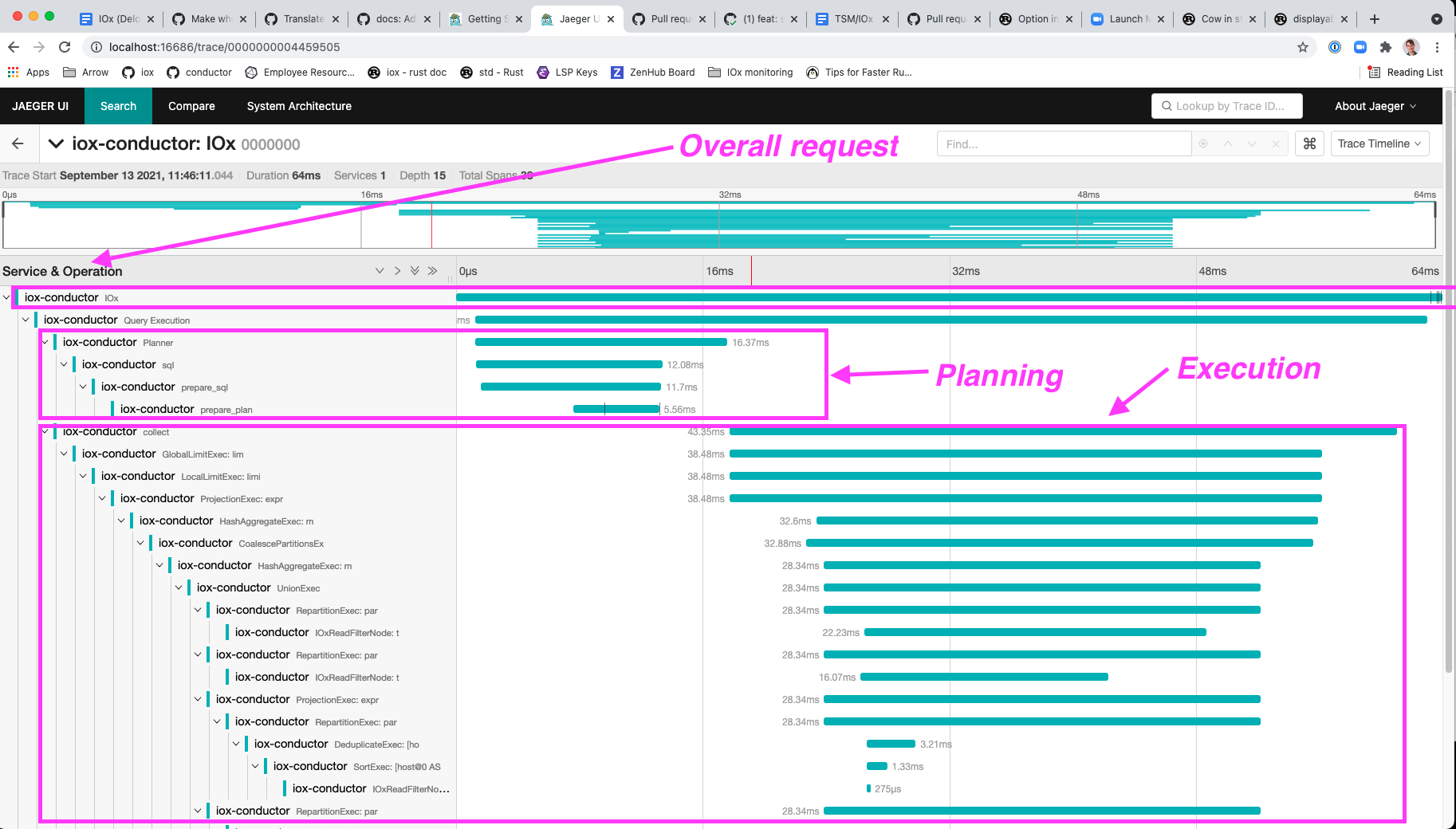

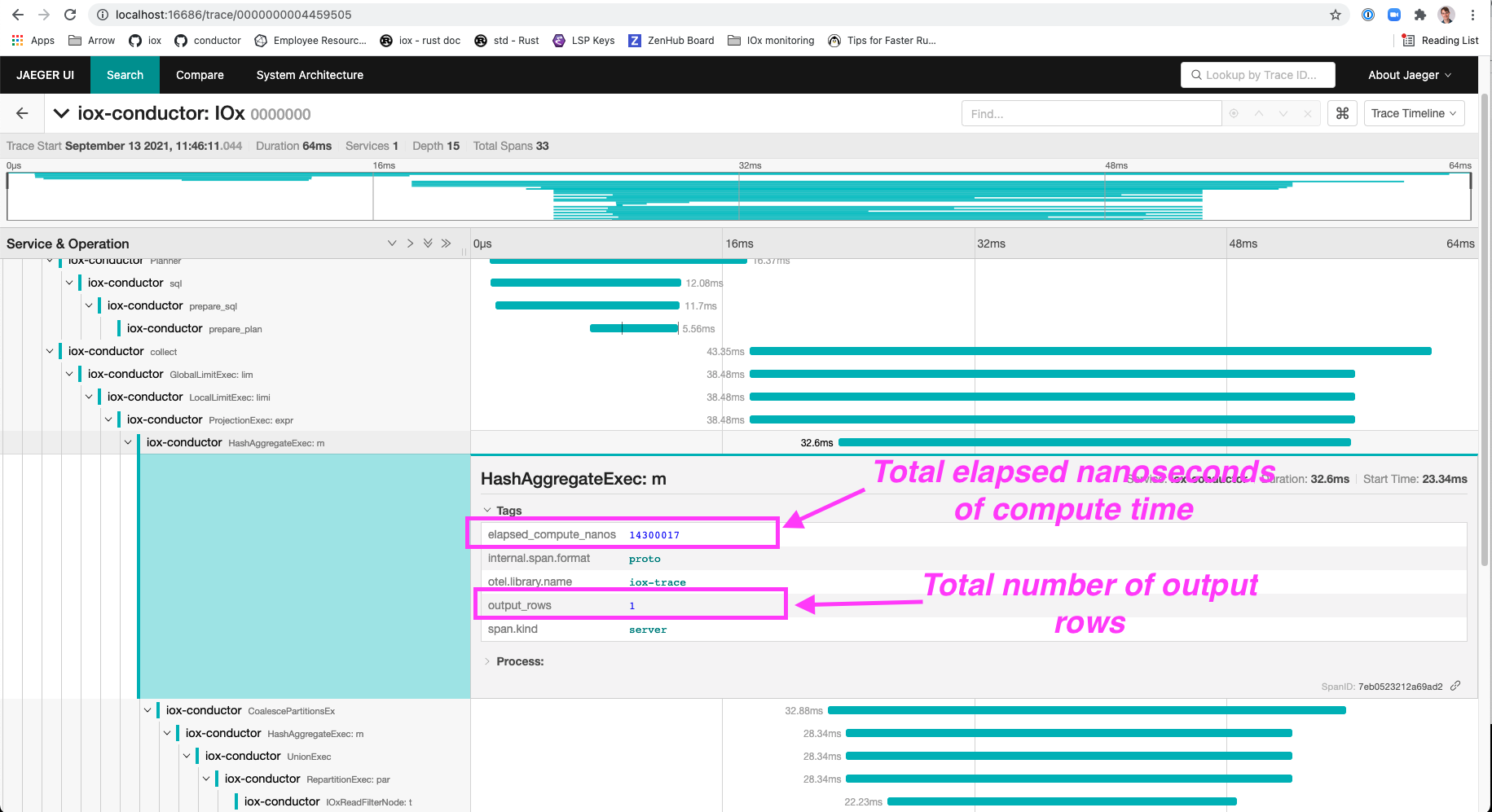

We want a basic understanding of where a plan's time is spent and in which operators. See #866 for more details.

What changes are included in this PR?

Add metrics to `ProjectionExec`, `GlobalLimitExec`, `LocalLimitExec`, and `CoalesceBatchesExec` using the API from "Add BaselineMetrics, Timestamp metrics, add for `CoalescePartitionsExec`, rename output_time -> elapsed_compute" (#909).

Are there any user-facing changes?

More fields in `EXPLAIN ANALYZE` are now filled out.

Example of how the `EXPLAIN ANALYZE` output now looks (dense but packed with good info):