There is no "universal debugging method"; just use whatever you usually use to debug Python memory leaks. You have not said which version of Airflow you are using, but we do not see widespread memory issues reported by others on the latest version of Airflow, so this is likely something in your code. Ideally, to narrow down the root cause, you should run a series of experiments: run a bare scheduler with no DAGs and no external scripts, then add very simple example DAGs and let them run, then add any customisations you have, and finally add your own DAGs. That's what I'd do. This way you can pinpoint the root cause, which should help narrow down further investigation. Once you know what causes the problem, you can either use more sophisticated techniques (see below) or gather more evidence, such as logs and more traces. Knowing the cause will also help you decide which evidence is worth gathering. I am afraid you are the only one who can carry out such an investigation; no one can help without access to your system. Regarding more sophisticated tracing: I searched and found, for example, this link on how to trace memory issues. I am not sure whether it is right for you, but it is a reasonable place to start: https://stackoverflow.com/questions/1435415/python-memory-leaks
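
One common technique for this kind of investigation is the standard library's `tracemalloc` module. You take a snapshot, run the suspect code a few more times, take another snapshot, and see which source lines gained memory. The sketch below assumes the code under suspicion can be called in-process as a plain function. `top_growth` and `leaky_workload` are hypothetical names used for illustration; they are not Airflow APIs.

```python
import tracemalloc


def top_growth(workload, iterations=3, limit=10):
    """Run `workload` repeatedly and return the source lines whose traced
    allocations grew the most between a baseline and a final snapshot."""
    tracemalloc.start()
    try:
        workload()  # warm-up run, so one-off caches and lazy imports land in the baseline
        baseline = tracemalloc.take_snapshot()
        for _ in range(iterations):
            workload()
        final = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    # compare_to() returns StatisticDiff objects, largest absolute change first
    return final.compare_to(baseline, "lineno")[:limit]


# Hypothetical stand-in for the code you suspect; it deliberately keeps every buffer.
_retained = []


def leaky_workload():
    _retained.append(bytearray(100_000))


if __name__ == "__main__":
    for stat in top_growth(leaky_workload):
        print(stat)
```

Each printed entry shows a `file:line` location and how much the memory allocated there grew between the two snapshots. `tracemalloc` only sees allocations made through Python's allocators, so memory that C extensions allocate on their own may not show up.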

Thanks for the reply. I will try various experiments. I use Airflow 2.2.5 with Helm chart 1.6.0. I also tried Airflow 2.3.2, but the problem persists.

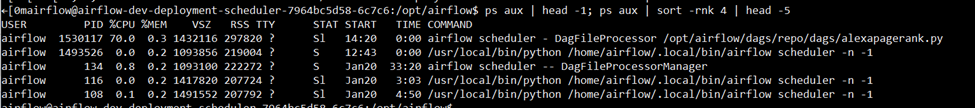

@potiuk Here are my Helm values:

- `defaultAirflowTag: "2.3.2-python3.8"`
- `airflowVersion: "2.3.2"`
- `fernetKeySecretName: airflow-fernet-key`
- `webserverSecretKeySecretName: airflow-webserver-secret`
- `executor: "KubernetesExecutor"`

Even with no DAGs, memory keeps increasing. I am now using EKS. Do I have to investigate the EKS cluster?
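
One way to tell whether the growth is in the scheduler process itself or elsewhere in the pod is to sample the process's resident set size directly. You can then compare it with the pod-level memory graph. The sketch below is Linux-only, since it reads `/proc/<pid>/status`, and is meant to be run inside the scheduler container. All function names are hypothetical.

If the process RSS stays flat while the pod's graph keeps climbing, the growth is probably outside the scheduler's Python process. Possible places are other processes in the container, or file cache that container-level metrics may include.

```python
import time


def parse_vm_rss_kb(status_text):
    """Return VmRSS (resident set size, in kB) from /proc/<pid>/status contents."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    raise ValueError("no VmRSS line found")


def read_rss_kb(pid):
    """Linux only: read the current resident set size of process `pid`."""
    with open(f"/proc/{pid}/status") as f:
        return parse_vm_rss_kb(f.read())


def sample_rss(pid, count=10, interval_s=60.0):
    """Collect (monotonic_seconds, rss_kb) pairs at a fixed interval."""
    samples = []
    for i in range(count):
        if i:
            time.sleep(interval_s)
        samples.append((time.monotonic(), read_rss_kb(pid)))
    return samples


def growth_mib_per_hour(samples):
    """Least-squares slope of RSS over time, converted from kB/s to MiB/hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_kb = sum(kb for _, kb in samples) / n
    cov = sum((t - mean_t) * (kb - mean_kb) for t, kb in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    return cov / var * 3600 / 1024
```

For example, `growth_mib_per_hour(sample_rss(pid, count=30, interval_s=60))` gives an approximate growth rate over roughly half an hour. Here `pid` is the scheduler's process ID, looked up inside the container.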

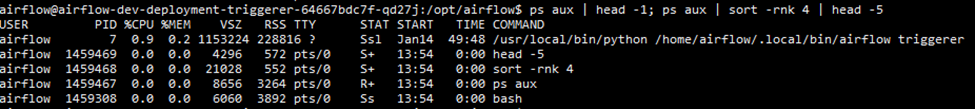

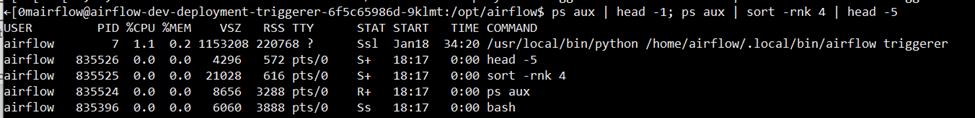

+1 here, we're also seeing a memory leak in the Triggerer on Airflow 2.2.5. This is a fresh setup based on the configs from our previous Airflow 1.10.13 installation.

Just commenting here so everybody knows: we are having the same problem on Airflow 2.5. I will write a more in-depth post once I have finished debugging. We are currently going back to a default Airflow 2.5 image to see whether the same leaks appear there. After that, we will add back the customisations specific to our environments.

Super late reply, but I suspect I know what's going on here. It has to do with the log retention settings, e.g.
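
The comment above is cut off before naming the settings, so the following does not reproduce them. One plausible reading is that log files accumulate on the scheduler's volume without being cleaned up. Below is a minimal sketch of an age-based cleanup, assuming logs are plain files under a single directory. `prune_old_logs`, the directory, and the retention period are all hypothetical, and this is not Airflow's own cleanup mechanism.

```python
import time
from pathlib import Path


def prune_old_logs(log_dir, retention_days, now=None):
    """Delete regular files under log_dir whose modification time is older
    than retention_days. Returns the list of deleted paths."""
    now = time.time() if now is None else now
    cutoff = now - retention_days * 86400  # seconds per day
    deleted = []
    # Materialise the listing first so we are not deleting while iterating.
    for path in list(Path(log_dir).rglob("*")):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            deleted.append(path)
    return deleted
```

For example, `prune_old_logs("/path/to/scheduler/logs", retention_days=7)` (hypothetical path) deletes files last modified more than a week ago and returns their paths. Whether running something like this periodically helps depends on whether log accumulation is actually the cause.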

Not sure if this is related, but at my company we just upgraded to 2.7.2. Since then we have seen an abnormal number of error code -9 failures caused by the MWAA workers running out of memory.
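
Assume the `-9` reported here follows Python's `subprocess` convention, where a negative return code `-N` means the child was terminated by signal `N` (POSIX only). In that case `-9` corresponds to `SIGKILL`. That is the signal the Linux OOM killer sends, which fits the out-of-memory explanation, although other sources can also send `SIGKILL`. Below is a small sketch that decodes such codes; `describe_returncode` is a hypothetical helper.

```python
import signal
import subprocess
import sys


def describe_returncode(returncode):
    """Translate a subprocess return code into a human-readable reason.
    On POSIX, a negative code -N means the child was killed by signal N."""
    if returncode < 0:
        return f"killed by {signal.Signals(-returncode).name}"
    return f"exited with status {returncode}"


# Simulate a process being killed with SIGKILL (here the child signals itself).
child = subprocess.run(
    [sys.executable, "-c", "import os, signal; os.kill(os.getpid(), signal.SIGKILL)"]
)
print(child.returncode, describe_returncode(child.returncode))  # prints: -9 killed by SIGKILL
```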

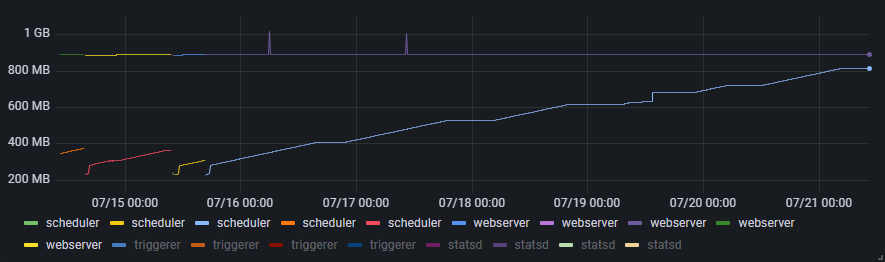

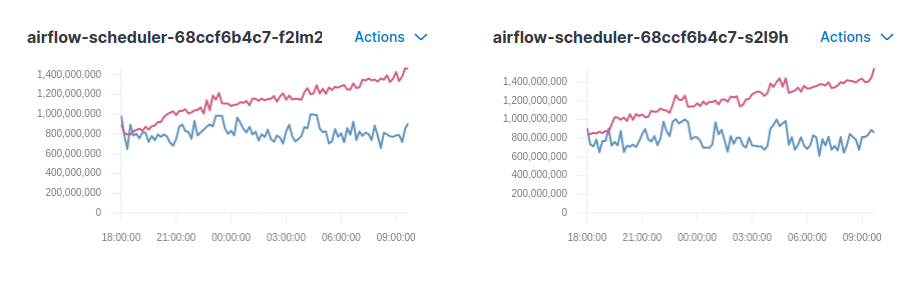

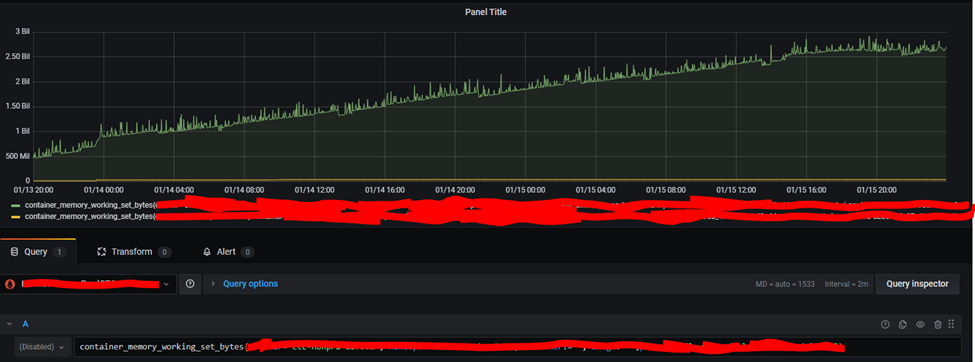

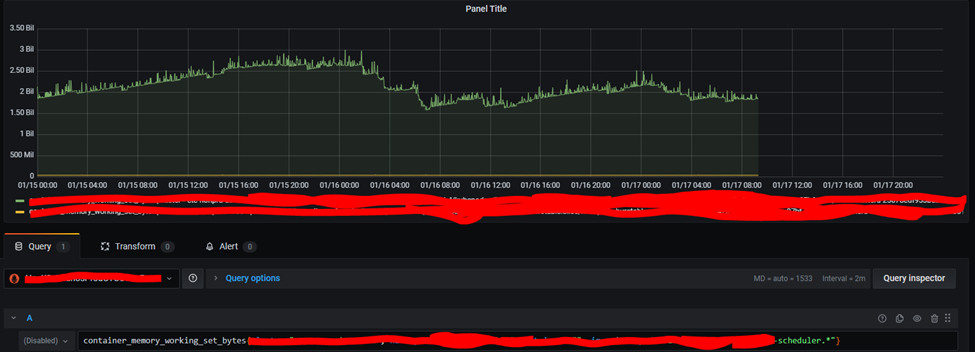

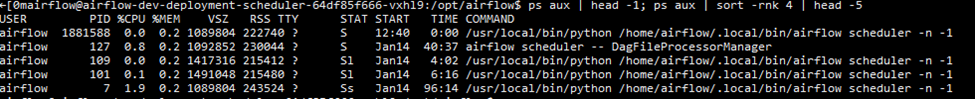

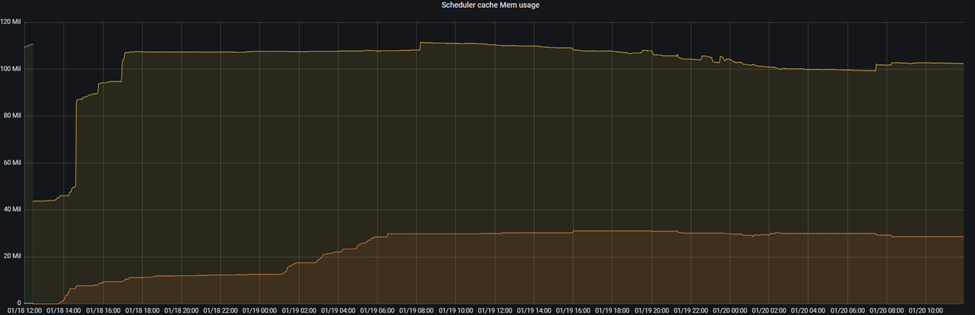

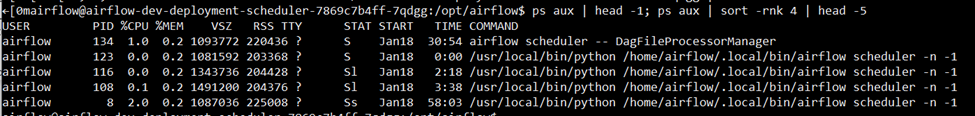

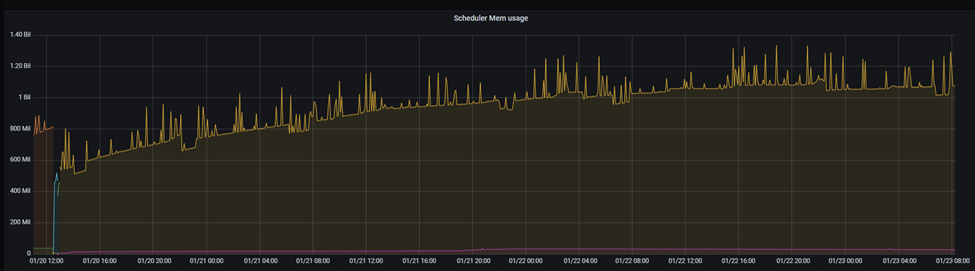

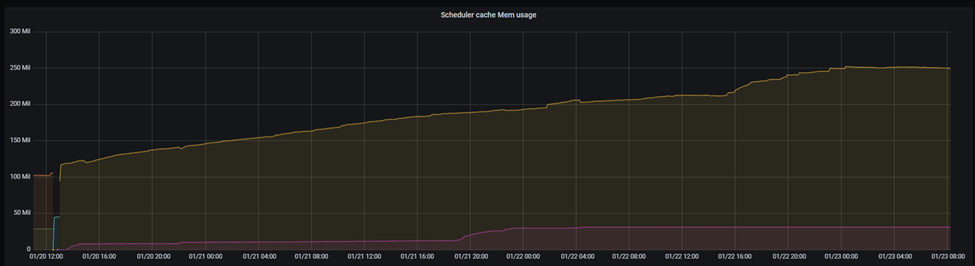

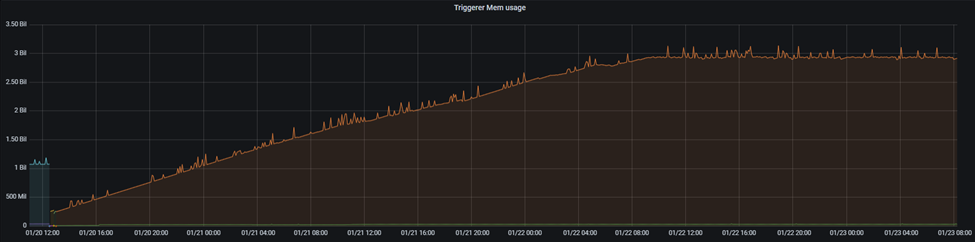

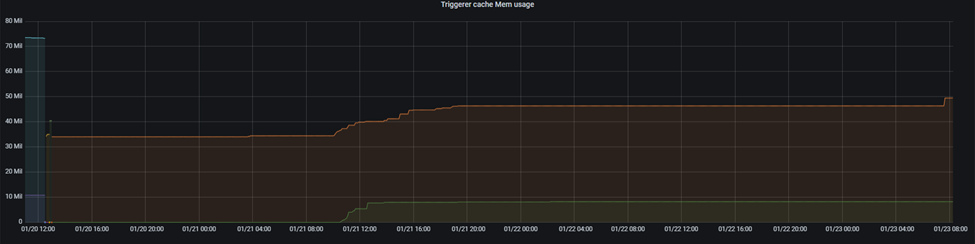

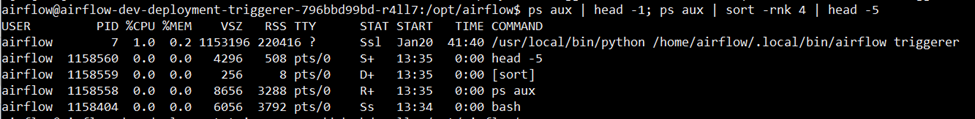

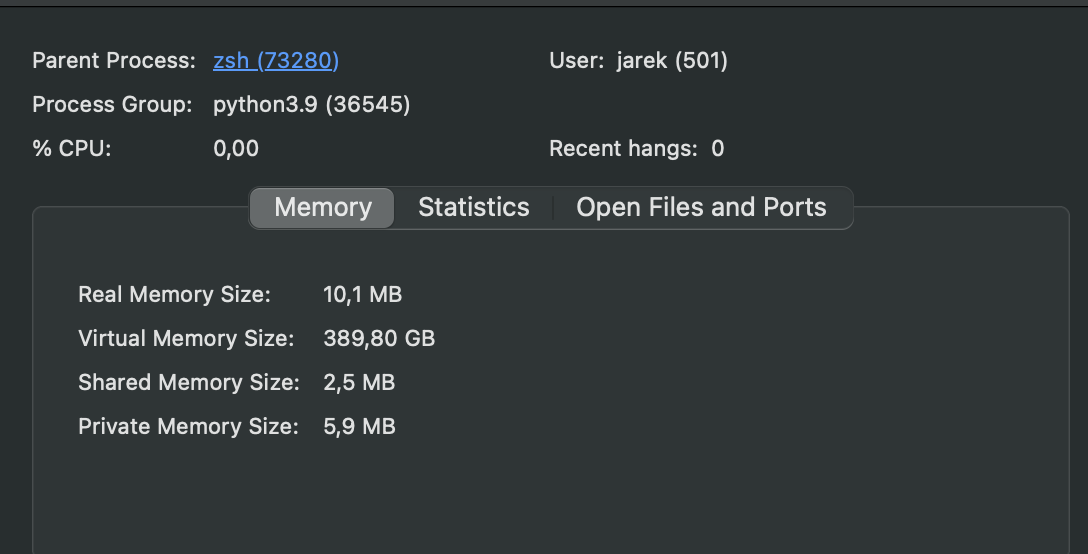

I'm experiencing a memory leak in the scheduler and triggerer, but I don't know the cause. I would like to know how to fix it and how to debug it.

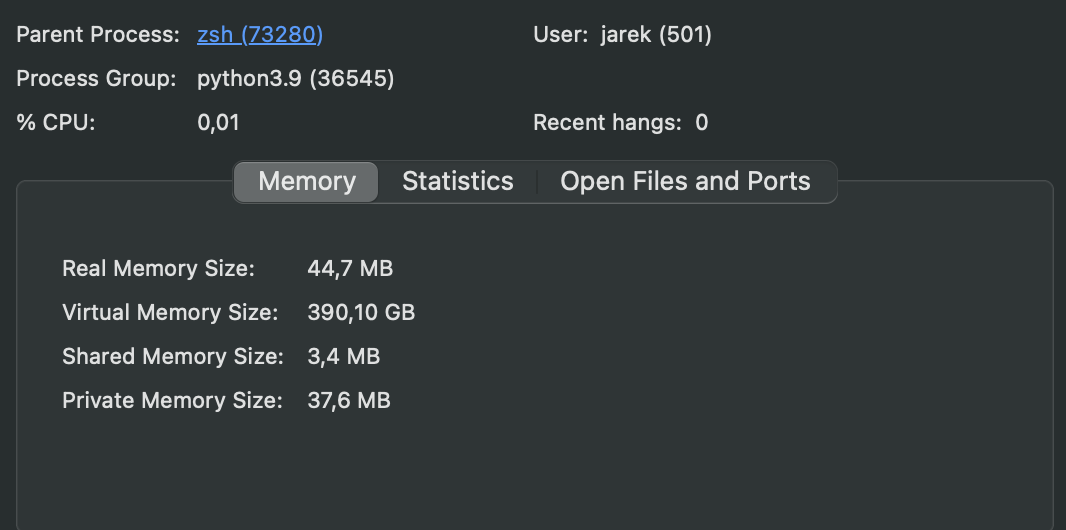

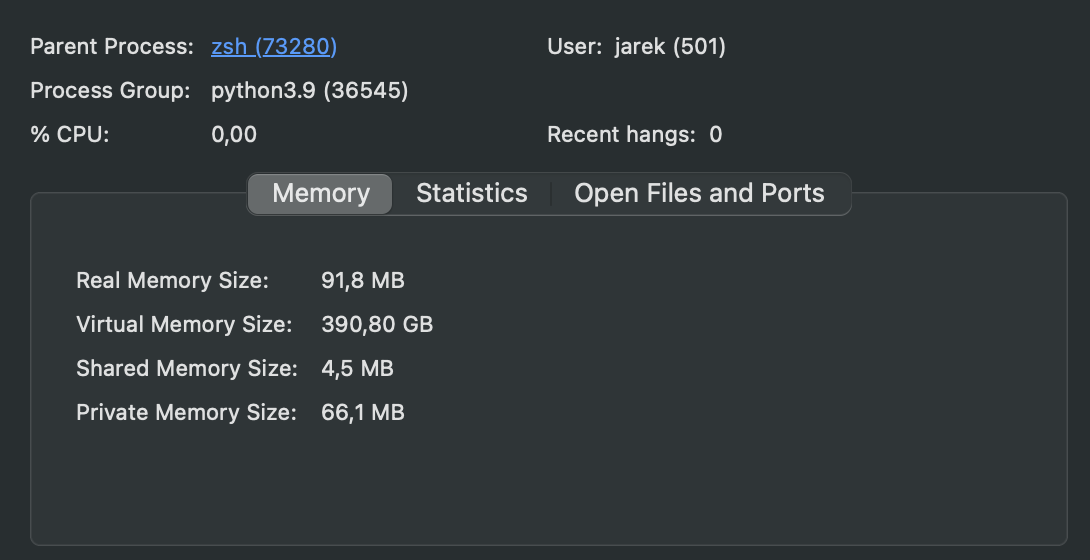

Over the same interval, the scheduler's cache increased by 150MB and the scheduler logs total 149MB, but the scheduler's memory increased by 600MB.

Also, I experienced this problem with the community Helm chart, so I switched to the official chart to solve it, but it still occurs. Could it be caused by DAG parsing? Is my DAG structure wrong?

Please help....🙏
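
One way to test whether DAG parsing is the cause is to execute a DAG file repeatedly in a single process and watch whether traced memory keeps climbing. The sketch below uses `runpy.run_path` as a rough stand-in for parsing. Airflow's own DAG loading works differently, so treat the result as a hint rather than a reproduction. `parse_memory_trend` is a hypothetical helper, and you supply the DAG path yourself.

```python
import gc
import runpy
import tracemalloc


def parse_memory_trend(dag_file, rounds=5):
    """Execute `dag_file` `rounds` times and record traced Python memory
    (in bytes) after each round. Steadily rising values suggest that
    executing the file retains objects; values that level off suggest not."""
    tracemalloc.start()
    try:
        readings = []
        for _ in range(rounds):
            runpy.run_path(dag_file)  # the returned globals dict is discarded
            gc.collect()  # drop unreachable cycles before measuring
            current, _peak = tracemalloc.get_traced_memory()
            readings.append(current)
        return readings
    finally:
        tracemalloc.stop()
```

The first reading includes one-off costs such as importing Airflow itself, so compare the later readings against each other.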
