# Integrating the Kylin service into HDP 2.6.5

## Environment

```shell script
HDP-2.6.5
kylin-3.1.1
```

## Preparing the Kylin deployment package

Download the Kylin 3.1.1 binary package built for HBase 1.x (`hbase1x`), which matches the HBase 1.x release shipped with HDP 2.6:

```shell script
https://mirror.jframeworks.com/apache/kylin/apache-kylin-3.1.1/apache-kylin-3.1.1-bin-hbase1x.tar.gz
```
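On a machine with internet access, a small helper like the one below can fetch the package. This is a minimal sketch. It assumes that other Kylin versions use the same path layout on the mirror as the URL above. The function names `kylin_pkg_url` and `download_kylin` and the `KYLIN_MIRROR` variable are hypothetical. Mirrors often drop older releases, so you may need to change the base URL.

```shell
#!/usr/bin/env bash
# Minimal sketch: build and fetch the Kylin binary package URL.
# Assumption: the mirror keeps the same path layout as the URL above.
KYLIN_MIRROR="${KYLIN_MIRROR:-https://mirror.jframeworks.com/apache/kylin}"

# kylin_pkg_url VERSION [FLAVOR] -> prints the tarball URL
kylin_pkg_url() {
  local version="$1" flavor="${2:-hbase1x}"
  printf '%s/apache-kylin-%s/apache-kylin-%s-bin-%s.tar.gz\n' \
    "$KYLIN_MIRROR" "$version" "$version" "$flavor"
}

# download_kylin VERSION [FLAVOR] [DEST_DIR] -> downloads the tarball
download_kylin() {
  local url dest="${3:-.}"
  url="$(kylin_pkg_url "$1" "${2:-hbase1x}")"
  # -f: fail on HTTP errors, -L: follow redirects, -O: keep the remote file name
  (cd "$dest" && curl -fL -O "$url")
}
```

Example: `download_kylin 3.1.1 hbase1x /tmp`. For an offline cluster, copy the resulting tarball to the cluster afterwards.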

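## Create the KYLIN service directory in the HDP stack

Derive the stack version (for example `2.6`) from the installed `hadoop-client`, then create the service directory:

```
# e.g. "hadoop-client - 2.6.5.0-292" -> "2.6"
VERSION=$(hdp-select status hadoop-client | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/')
mkdir -p "/var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/KYLIN"
```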
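The `sed` expression above matches only single-digit version components, and it prints its input unchanged when the pattern does not match. The minimal sketch below is a slightly stricter variant. It assumes that `hdp-select status hadoop-client` prints a line of the form `hadoop-client - 2.6.5.0-292`. The helper names and the `HDP_SELECT`/`STACKS_ROOT` overrides are hypothetical and exist only to make the functions easy to test.

```shell
#!/usr/bin/env bash
# Sketch: derive the HDP stack version (e.g. "2.6") and create the KYLIN
# service directory. HDP_SELECT and STACKS_ROOT are hypothetical overrides.
HDP_SELECT="${HDP_SELECT:-hdp-select}"
STACKS_ROOT="${STACKS_ROOT:-/var/lib/ambari-server/resources/stacks/HDP}"

# Reads "hadoop-client - 2.6.5.0-292" on stdin and prints "2.6";
# prints nothing if the line does not match.
hdp_stack_version() {
  sed -n 's/^hadoop-client - \([0-9][0-9]*\.[0-9][0-9]*\)\..*/\1/p'
}

# Creates <STACKS_ROOT>/<version>/services/KYLIN and prints its path.
create_kylin_service_dir() {
  local version
  version="$("$HDP_SELECT" status hadoop-client | hdp_stack_version)"
  if [ -z "$version" ]; then
    echo "could not determine the HDP stack version" >&2
    return 1
  fi
  mkdir -p "$STACKS_ROOT/$version/services/KYLIN"
  echo "$STACKS_ROOT/$version/services/KYLIN"
}
```

On the Ambari server host, `create_kylin_service_dir` prints the directory it created. An empty version makes it fail instead of creating a wrong path.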

## Upload the ambari-kylin-service package to the HDP cluster

Run the build script `build.sh` from the project root:

```shell script
sh build.sh
```

A successful build produces `service.tar.gz`. Upload this archive to the Ambari server host, copy it into the Ambari stack directory, and extract it there:

```shell script
# Copy the package into the KYLIN service directory and extract it there
cp service.tar.gz /var/lib/ambari-server/resources/stacks/HDP/2.6/services/KYLIN
cd /var/lib/ambari-server/resources/stacks/HDP/2.6/services/KYLIN
tar zxvf service.tar.gz
```

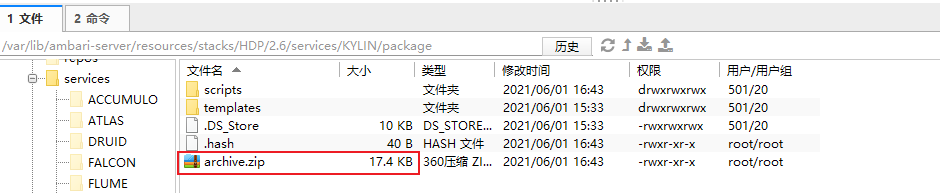

| 30 | +可以看到如下目录。 |

| 31 | + |

| 32 | + |

| 33 | + |
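After extraction, a quick sanity check confirms that the files Ambari needs for a custom service are present. This sketch assumes that `service.tar.gz` holds `metainfo.xml` and a `package/scripts` directory at its top level. That is the usual Ambari custom-service layout, but the actual archive contents appear only in the screenshot. `install_kylin_service_def` is a hypothetical helper. It uses `tar -C` so the result does not depend on the current directory.

```shell
#!/usr/bin/env bash
# Sketch: extract service.tar.gz into the KYLIN service directory and check
# for the files an Ambari custom service normally contains.
# Assumption: metainfo.xml and package/ sit at the top level of the archive.
install_kylin_service_def() {
  local tarball="$1"
  local service_dir="${2:-/var/lib/ambari-server/resources/stacks/HDP/2.6/services/KYLIN}"
  local missing=0 p
  mkdir -p "$service_dir" || return 1
  # -C extracts into service_dir regardless of the current directory
  tar -zxf "$tarball" -C "$service_dir" || return 1
  for p in metainfo.xml package/scripts; do
    if [ ! -e "$service_dir/$p" ]; then
      echo "missing: $service_dir/$p" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `install_kylin_service_def ./service.tar.gz`. A non-zero exit status means an expected file is missing.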

## Restart Ambari

```
ambari-server restart
```

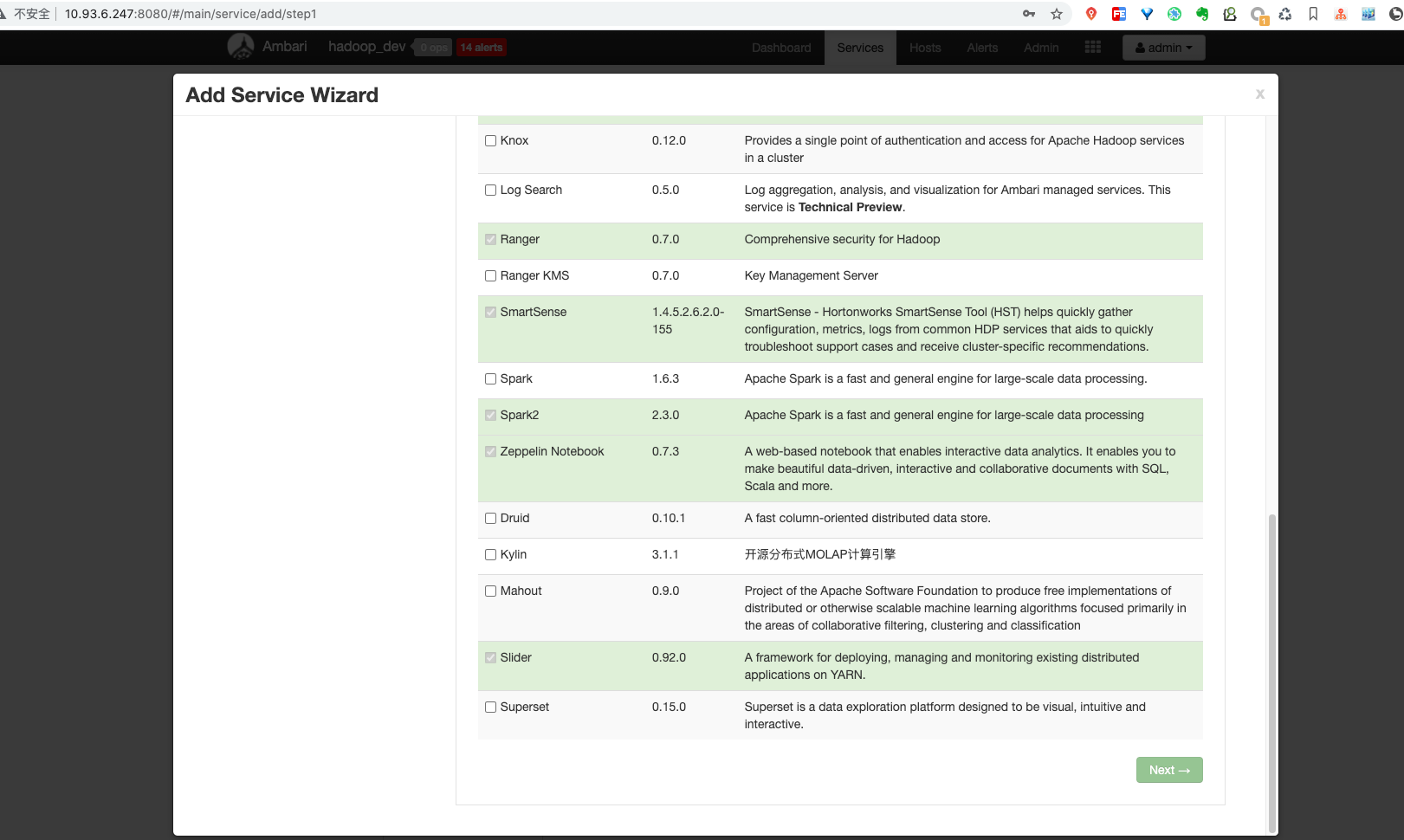

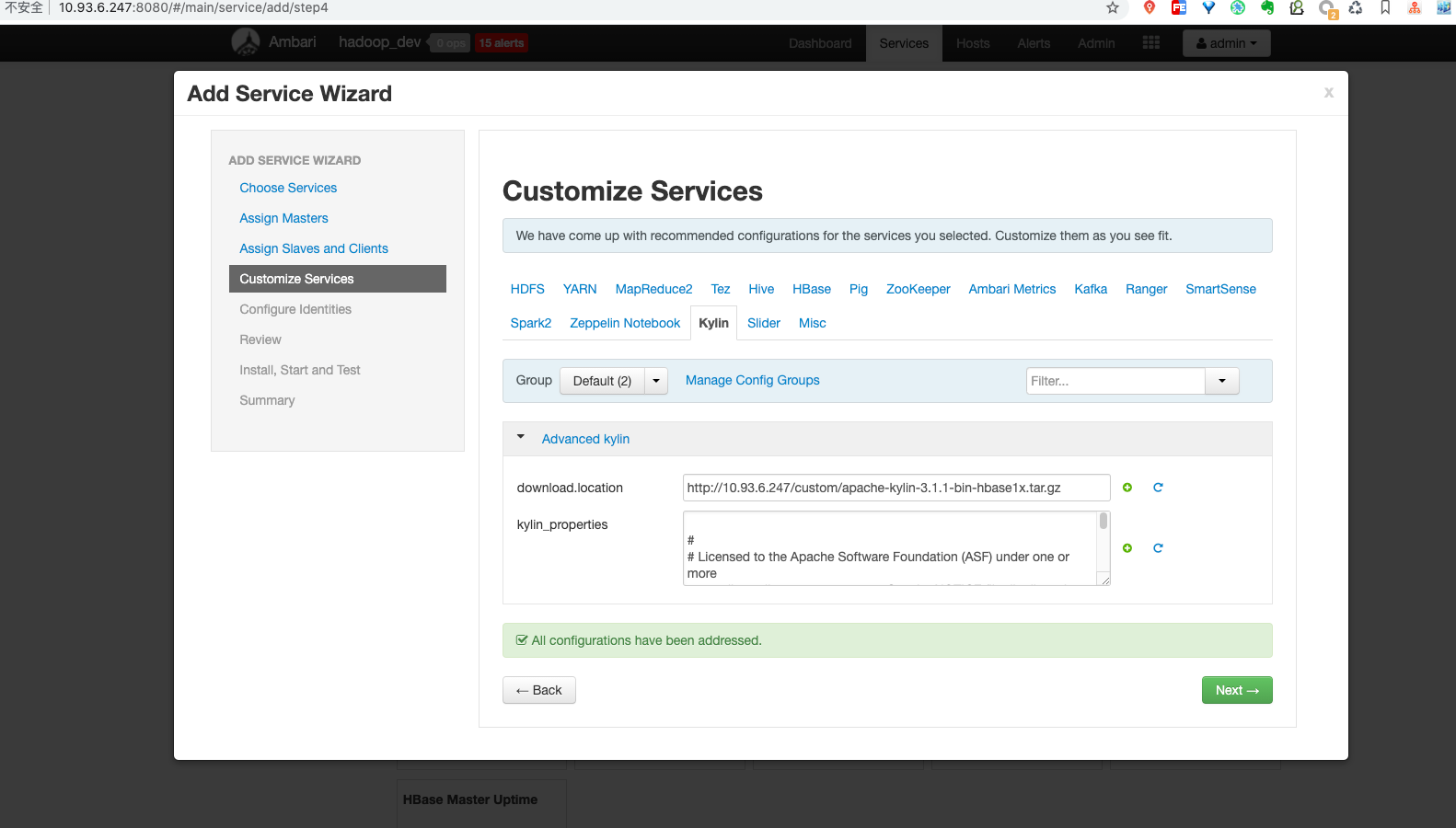

| 38 | +重启ambari后,打开ambari页面增加服务,可以看到kylin服务。 |

| 39 | + |

| 40 | + |

| 41 | + |
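You can also script this check against the Ambari REST API, which lists the services of each stack version under `/api/v1/stacks/HDP/versions/<version>/services`. This is a sketch: the host, port, and `admin:admin` credentials are placeholders. `kylin_in_stack` is a hypothetical helper that looks for `KYLIN` as the `service_name` in the response.

```shell
#!/usr/bin/env bash
# Sketch: ask the Ambari REST API whether the HDP stack now exposes KYLIN.
# Placeholder defaults; set AMBARI_URL and AMBARI_AUTH for your cluster.
AMBARI_URL="${AMBARI_URL:-http://localhost:8080}"
AMBARI_AUTH="${AMBARI_AUTH:-admin:admin}"

# kylin_in_stack [STACK_VERSION] -> exit 0 if the stack lists KYLIN
kylin_in_stack() {
  local stack_version="${1:-2.6}"
  curl -s -u "$AMBARI_AUTH" \
    "$AMBARI_URL/api/v1/stacks/HDP/versions/$stack_version/services/KYLIN" \
    | grep -q '"service_name" *: *"KYLIN"'
}
```

Example: `kylin_in_stack 2.6 && echo "KYLIN is registered"`.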

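## How Ambari installs the Kylin service

When you install Kylin from the Ambari web UI, ambari-server compresses the `package` directory of the KYLIN service in the stack directory into `archive.zip`.

The ambari-agent then caches this archive and extracts it to its cache directory, `/var/lib/ambari-agent/cache/stacks/HDP/2.6/services/KYLIN/`. Every later install or start action triggered from the Ambari UI runs the scripts in this cache directory.

As a result, changes made under the stack directory have no effect while the agent cache exists. Delete the cache first. Ambari loads the latest scripts only after it rebuilds the cache.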
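Below is a minimal sketch of the cache cleanup on one agent host. It assumes the default cache location given above. `clear_kylin_agent_cache` and `AGENT_CACHE_ROOT` are hypothetical names. Run it on each host with a Kylin component, then retry the action in the UI. Restarting the agent with `ambari-agent restart` is a common extra step, but that is an assumption, not a requirement of the steps above.

```shell
#!/usr/bin/env bash
# Sketch: drop the agent's cached copy of the KYLIN service scripts so the
# next install/start uses the current definition from ambari-server.
AGENT_CACHE_ROOT="${AGENT_CACHE_ROOT:-/var/lib/ambari-agent/cache}"

# clear_kylin_agent_cache [STACK_VERSION] -> removes the cached KYLIN dir
clear_kylin_agent_cache() {
  local stack_version="${1:-2.6}"
  local dir="$AGENT_CACHE_ROOT/stacks/HDP/$stack_version/services/KYLIN"
  if [ -d "$dir" ]; then
    rm -rf -- "$dir"
    echo "removed $dir"
  else
    echo "no cache at $dir"
  fi
}
```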

| 48 | +## 离线环境下安装故障处理 |

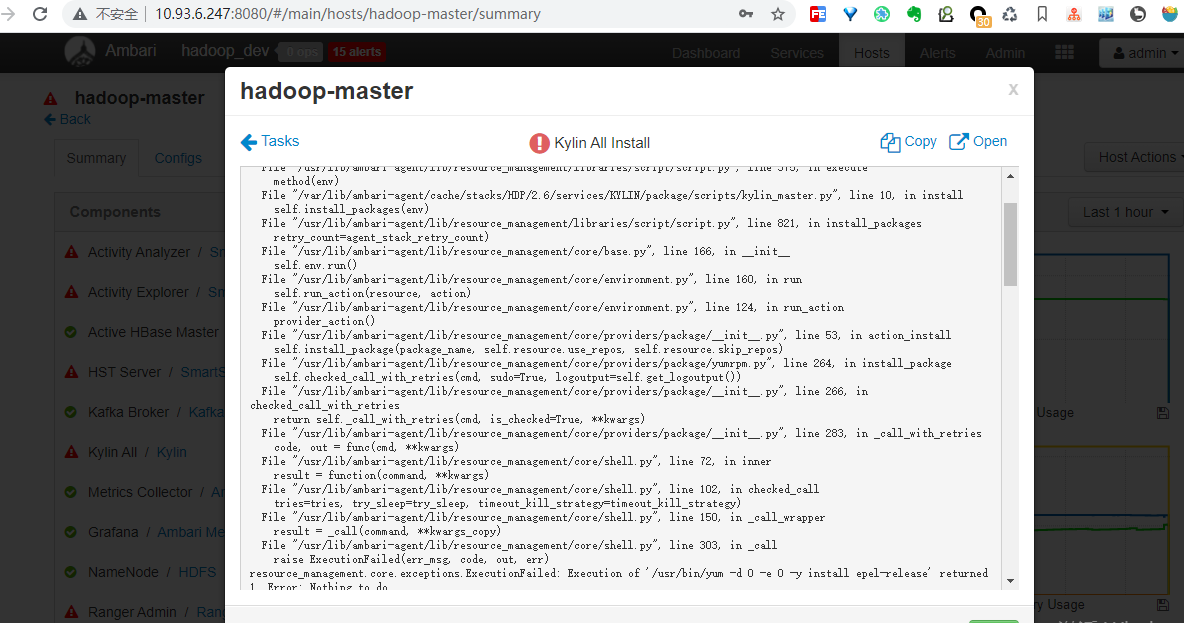

| 49 | +在ambari中对自定义添加的kylin服务,一直无法安装。每次安装都在执行安装 epel-release(yum的拓展包)时报错。 |

Solution:

Download `epel-release` manually and install it on the server. The download URL is https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

Once the RPM is on the server, install it with `rpm -Uvh epel-release*rpm`.

With `epel-release` installed, retry the Kylin service installation in Ambari. It now completes normally.
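Because the cluster is offline, you have to download the RPM on a machine with internet access and copy it to each host where Kylin will be installed. The sketch below makes the install step idempotent by checking `rpm -q epel-release` first. `ensure_epel_release` is a hypothetical helper, and the RPM path is wherever you copied the file.

```shell
#!/usr/bin/env bash
# Sketch: install a locally copied epel-release RPM only if it is missing.
ensure_epel_release() {
  local rpm_file="$1"
  # rpm -q exits non-zero when the package is not installed
  if rpm -q epel-release >/dev/null 2>&1; then
    echo "epel-release already installed"
    return 0
  fi
  if [ ! -f "$rpm_file" ]; then
    echo "RPM not found: $rpm_file" >&2
    return 1
  fi
  rpm -Uvh "$rpm_file"
}
```

Example: `ensure_epel_release /tmp/epel-release-latest-7.noarch.rpm` (run as root).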

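However, once `epel-release` is installed, installing services bundled with HDP fails in the offline environment, for example `yum install flume_2_6_5_0_292`. The cause is that yum cannot reach the EPEL repository. The error is: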

```shell script
stderr:
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/FLUME/1.4.0.2.0/package/scripts/flume_handler.py", line 122, in <module>
    FlumeHandler().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 375, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/FLUME/1.4.0.2.0/package/scripts/flume_handler.py", line 45, in install
    self.install_packages(env)
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 821, in install_packages
    retry_count=agent_stack_retry_count)
  File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__
    self.env.run()
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/package/__init__.py", line 53, in action_install
    self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/package/yumrpm.py", line 264, in install_package
    self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/package/__init__.py", line 266, in checked_call_with_retries
    return self._call_with_retries(cmd, is_checked=True, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/package/__init__.py", line 283, in _call_with_retries
    code, out = func(cmd, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 72, in inner
    result = function(command, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 102, in checked_call
    tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 150, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 303, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/bin/yum -d 0 -e 0 -y install flume_2_6_5_0_292' returned 1. One of the configured repositories failed (Unknown),
and yum doesn't have enough cached data to continue. At this point the only
safe thing yum can do is fail. There are a few ways to work "fix" this:

    1. Contact the upstream for the repository and get them to fix the problem.

    2. Reconfigure the baseurl/etc. for the repository, to point to a working
       upstream. This is most often useful if you are using a newer
       distribution release than is supported by the repository (and the
       packages for the previous distribution release still work).

    3. Run the command with the repository temporarily disabled
           yum --disablerepo=<repoid> ...

    4. Disable the repository permanently, so yum won't use it by default. Yum
       will then just ignore the repository until you permanently enable it
       again or use --enablerepo for temporary usage:

           yum-config-manager --disable <repoid>
       or
           subscription-manager repos --disable=<repoid>

    5. Configure the failing repository to be skipped, if it is unavailable.
       Note that yum will try to contact the repo. when it runs most commands,
       so will have to try and fail each time (and thus. yum will be be much
       slower). If it is a very temporary problem though, this is often a nice
       compromise:

           yum-config-manager --save --setopt=<repoid>.skip_if_unavailable=true

Cannot retrieve metalink for repository: epel/x86_64. Please verify its path and try again
stdout:
2021-06-03 15:08:55,823 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=None -> 2.6
2021-06-03 15:08:55,828 - Using hadoop conf dir: /usr/hdp/2.6.5.0-292/hadoop/conf
2021-06-03 15:08:55,829 - Group['livy'] {}
2021-06-03 15:08:55,831 - Group['spark'] {}
2021-06-03 15:08:55,831 - Group['ranger'] {}
2021-06-03 15:08:55,831 - Group['hdfs'] {}
2021-06-03 15:08:55,831 - Group['zeppelin'] {}
2021-06-03 15:08:55,831 - Group['hadoop'] {}
2021-06-03 15:08:55,832 - Group['users'] {}
2021-06-03 15:08:55,832 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,833 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,834 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,834 - User['ranger'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'ranger'], 'uid': None}
2021-06-03 15:08:55,835 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2021-06-03 15:08:55,836 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'zeppelin', u'hadoop'], 'uid': None}
2021-06-03 15:08:55,837 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,837 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,838 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2021-06-03 15:08:55,839 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,839 - Adding user User['flume']
2021-06-03 15:08:55,914 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,915 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs'], 'uid': None}
2021-06-03 15:08:55,916 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,916 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,917 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,918 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,919 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2021-06-03 15:08:55,919 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2021-06-03 15:08:55,921 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2021-06-03 15:08:55,927 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if
2021-06-03 15:08:55,927 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}
2021-06-03 15:08:55,929 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2021-06-03 15:08:55,930 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2021-06-03 15:08:55,931 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}
2021-06-03 15:08:55,939 - call returned (0, '1014')
2021-06-03 15:08:55,940 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1014'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}
2021-06-03 15:08:55,945 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1014'] due to not_if
2021-06-03 15:08:55,945 - Group['hdfs'] {}
2021-06-03 15:08:55,946 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', u'hdfs']}
2021-06-03 15:08:55,946 - FS Type:
2021-06-03 15:08:55,946 - Directory['/etc/hadoop'] {'mode': 0755}
2021-06-03 15:08:55,959 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2021-06-03 15:08:55,960 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}
2021-06-03 15:08:55,975 - Repository['HDP-2.6-repo-1'] {'append_to_file': False, 'base_url': 'http://10.93.6.247/hdp/HDP/centos7/2.6.5.0-292/', 'action': ['create'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}
2021-06-03 15:08:55,983 - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-2.6-repo-1]\nname=HDP-2.6-repo-1\nbaseurl=http://10.93.6.247/hdp/HDP/centos7/2.6.5.0-292/\n\npath=/\nenabled=1\ngpgcheck=0'}
2021-06-03 15:08:55,983 - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match
2021-06-03 15:08:55,983 - Repository with url is not created due to its tags: set([u'GPL'])
2021-06-03 15:08:55,984 - Repository['HDP-UTILS-1.1.0.22-repo-1'] {'append_to_file': True, 'base_url': 'http://10.93.6.247/hdp/HDP-UTILS/centos7/1.1.0.22/', 'action': ['create'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}
2021-06-03 15:08:55,987 - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-2.6-repo-1]\nname=HDP-2.6-repo-1\nbaseurl=http://10.93.6.247/hdp/HDP/centos7/2.6.5.0-292/\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-1]\nname=HDP-UTILS-1.1.0.22-repo-1\nbaseurl=http://10.93.6.247/hdp/HDP-UTILS/centos7/1.1.0.22/\n\npath=/\nenabled=1\ngpgcheck=0'}
2021-06-03 15:08:55,987 - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match
2021-06-03 15:08:55,988 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2021-06-03 15:08:56,345 - Skipping installation of existing package unzip
2021-06-03 15:08:56,345 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2021-06-03 15:08:56,440 - Skipping installation of existing package curl
2021-06-03 15:08:56,440 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2021-06-03 15:08:56,536 - Skipping installation of existing package hdp-select
2021-06-03 15:08:56,540 - The repository with version 2.6.5.0-292 for this command has been marked as resolved. It will be used to report the version of the component which was installed
2021-06-03 15:08:56,780 - Command repositories: HDP-2.6-repo-1, HDP-2.6-GPL-repo-1, HDP-UTILS-1.1.0.22-repo-1
2021-06-03 15:08:56,780 - Applicable repositories: HDP-2.6-repo-1, HDP-2.6-GPL-repo-1, HDP-UTILS-1.1.0.22-repo-1
2021-06-03 15:08:56,781 - Looking for matching packages in the following repositories: HDP-2.6-repo-1, HDP-2.6-GPL-repo-1, HDP-UTILS-1.1.0.22-repo-1
2021-06-03 15:08:59,747 - Adding fallback repositories: HDP-UTILS-1.1.0.22, HDP-2.6.5.0
2021-06-03 15:09:01,831 - Package['flume_2_6_5_0_292'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2021-06-03 15:09:02,010 - Installing package flume_2_6_5_0_292 ('/usr/bin/yum -d 0 -e 0 -y install flume_2_6_5_0_292')
2021-06-03 15:09:42,203 - Execution of '/usr/bin/yum -d 0 -e 0 -y install flume_2_6_5_0_292' returned 1. One of the configured repositories failed (Unknown),
and yum doesn't have enough cached data to continue. At this point the only
safe thing yum can do is fail. There are a few ways to work "fix" this:

    1. Contact the upstream for the repository and get them to fix the problem.

    2. Reconfigure the baseurl/etc. for the repository, to point to a working
       upstream. This is most often useful if you are using a newer
       distribution release than is supported by the repository (and the
       packages for the previous distribution release still work).

    3. Run the command with the repository temporarily disabled
           yum --disablerepo=<repoid> ...

    4. Disable the repository permanently, so yum won't use it by default. Yum
       will then just ignore the repository until you permanently enable it
       again or use --enablerepo for temporary usage:

           yum-config-manager --disable <repoid>
       or
           subscription-manager repos --disable=<repoid>

    5. Configure the failing repository to be skipped, if it is unavailable.
       Note that yum will try to contact the repo. when it runs most commands,
       so will have to try and fail each time (and thus. yum will be be much
       slower). If it is a very temporary problem though, this is often a nice
       compromise:

           yum-config-manager --save --setopt=<repoid>.skip_if_unavailable=true

Cannot retrieve metalink for repository: epel/x86_64. Please verify its path and try again
2021-06-03 15:09:42,203 - Failed to install package flume_2_6_5_0_292. Executing '/usr/bin/yum clean metadata'
2021-06-03 15:09:42,360 - Retrying to install package flume_2_6_5_0_292 after 30 seconds
2021-06-03 15:10:52,581 - The repository with version 2.6.5.0-292 for this command has been marked as resolved. It will be used to report the version of the component which was installed

Command failed after 1 tries
```

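In that case, disable the EPEL repository and rebuild the local yum cache with the commands below. Note that `yum-config-manager` is provided by the `yum-utils` package.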

```shell script
# Disable the EPEL repo so yum stops trying to contact it
yum-config-manager --disable epel
# Drop stale metadata and rebuild the cache from the remaining (local) repos
yum clean all
yum makecache
```
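On an offline host, `yum-config-manager` may itself be missing if `yum-utils` was never installed. As a fallback, you can disable the `[epel]` section of the repo file directly. This sketch assumes the stock `/etc/yum.repos.d/epel.repo` layout with `enabled=1` lines. `disable_epel_repo` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: disable the [epel] repo by editing its .repo file directly,
# for hosts where yum-config-manager (yum-utils) is not installed.
# Assumption: stock epel.repo layout with "enabled=1" lines.
disable_epel_repo() {
  local repo_file="${1:-/etc/yum.repos.d/epel.repo}"
  [ -f "$repo_file" ] || { echo "not found: $repo_file" >&2; return 1; }
  # Limit the edit to the range from the [epel] header to the next header,
  # so sections such as [epel-debuginfo] and [epel-source] stay untouched.
  sed -i '/^\[epel\]/,/^\[/ s/^enabled[[:space:]]*=[[:space:]]*1/enabled=0/' "$repo_file"
}
```

Example: `disable_epel_repo && yum clean all && yum makecache`. Afterwards, `yum repolist enabled` should no longer list `epel`.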
