diff --git a/README.en.md b/README.en.md

new file mode 100644

index 00000000..17bffce8

--- /dev/null

+++ b/README.en.md

@@ -0,0 +1,169 @@

+English | [Chinese](./README.md)

+

+# Build a Large Language Model (From Scratch)

+

+This repository contains the code for developing, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book [Build a Large Language Model (From Scratch)](https://amzn.to/4fqvn0D).

+

+

+

+

+ +

+

+

+

+

+In [*Build a Large Language Model (From Scratch)*](http://mng.bz/orYv), you'll learn and understand how large language models (LLMs) work from the inside out by coding them from the ground up, step by step. In this book, I'll guide you through creating your own LLM, explaining each stage with clear text, diagrams, and examples.

+

+The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT. In addition, this book includes code for loading the weights of larger pretrained models for finetuning.

+

+- Link to the official [source code repository](https://github.com/rasbt/LLMs-from-scratch)

+- [Link to the book at Manning (the publisher's website)](http://mng.bz/orYv)

+- [Link to the book page on Amazon.com](https://www.amazon.com/gp/product/1633437167)

+- ISBN 9781633437166

+

+ +

+

+

+

+

+

+

+

+To download a copy of this repository, click on the [Download ZIP](https://github.com/rasbt/LLMs-from-scratch/archive/refs/heads/main.zip) button or execute the following command in your terminal:

+

+```bash

+git clone --depth 1 https://github.com/rasbt/LLMs-from-scratch.git

+```

+

+

+

+(If you downloaded the code bundle from the Manning website, please consider visiting the official code repository on GitHub at [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) for the latest updates.)

+

+

+

+

+

+# Table of Contents

+

+Please note that this `README.en.md` file is a Markdown (`.md`) file. If you have downloaded this code bundle from the Manning website and are viewing it on your local computer, I recommend using a Markdown editor or previewer for proper viewing. If you haven't installed a Markdown editor yet, [MarkText](https://www.marktext.cc) is a good free option.

+

+You can alternatively view this and other files on GitHub at [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) in your browser, which renders Markdown automatically.

+

+

+

+

+

+> [!TIP]

+> If you're seeking guidance on installing Python and Python packages and setting up your code environment, I suggest reading the [README.md](setup/README.md) file located in the [setup](setup) directory.

+

+

+

+

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-linux.yml)

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-windows.yml)

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-macos.yml)

+

+

+

+

+

+| Chapter Title | Main Code (for Quick Access) | All Code + Supplementary |

+|------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------|-------------------------------|

+| [Setup recommendations](setup) | - | - |

+| Ch 1: Understanding Large Language Models | No code | - |

+| Ch 2: Working with Text Data | - [ch02.ipynb](ch02/01_main-chapter-code/ch02.ipynb)<br/>- [dataloader.ipynb](ch02/01_main-chapter-code/dataloader.ipynb) (summary)<br/>- [exercise-solutions.ipynb](ch02/01_main-chapter-code/exercise-solutions.ipynb) | [./ch02](./ch02) |

+| Ch 3: Coding Attention Mechanisms | - [ch03.ipynb](ch03/01_main-chapter-code/ch03.ipynb)<br/>- [multihead-attention.ipynb](ch03/01_main-chapter-code/multihead-attention.ipynb) (summary)<br/>- [exercise-solutions.ipynb](ch03/01_main-chapter-code/exercise-solutions.ipynb) | [./ch03](./ch03) |

+| Ch 4: Implementing a GPT Model from Scratch | - [ch04.ipynb](ch04/01_main-chapter-code/ch04.ipynb)<br/>- [gpt.py](ch04/01_main-chapter-code/gpt.py) (summary)<br/>- [exercise-solutions.ipynb](ch04/01_main-chapter-code/exercise-solutions.ipynb) | [./ch04](./ch04) |

+| Ch 5: Pretraining on Unlabeled Data | - [ch05.ipynb](ch05/01_main-chapter-code/ch05.ipynb)<br/>- [gpt_train.py](ch05/01_main-chapter-code/gpt_train.py) (summary)<br/>- [gpt_generate.py](ch05/01_main-chapter-code/gpt_generate.py) (summary)<br/>- [exercise-solutions.ipynb](ch05/01_main-chapter-code/exercise-solutions.ipynb) | [./ch05](./ch05) |

+| Ch 6: Finetuning for Text Classification | - [ch06.ipynb](ch06/01_main-chapter-code/ch06.ipynb)<br/>- [gpt_class_finetune.py](ch06/01_main-chapter-code/gpt_class_finetune.py)<br/>- [exercise-solutions.ipynb](ch06/01_main-chapter-code/exercise-solutions.ipynb) | [./ch06](./ch06) |

+| Ch 7: Finetuning to Follow Instructions | - [ch07.ipynb](ch07/01_main-chapter-code/ch07.ipynb)<br/>- [gpt_instruction_finetuning.py](ch07/01_main-chapter-code/gpt_instruction_finetuning.py) (summary)<br/>- [ollama_evaluate.py](ch07/01_main-chapter-code/ollama_evaluate.py) (summary)<br/>- [exercise-solutions.ipynb](ch07/01_main-chapter-code/exercise-solutions.ipynb) | [./ch07](./ch07) |

+| Appendix A: Introduction to PyTorch | - [code-part1.ipynb](appendix-A/01_main-chapter-code/code-part1.ipynb)<br/>- [code-part2.ipynb](appendix-A/01_main-chapter-code/code-part2.ipynb)<br/>- [DDP-script.py](appendix-A/01_main-chapter-code/DDP-script.py)<br/>- [exercise-solutions.ipynb](appendix-A/01_main-chapter-code/exercise-solutions.ipynb) | [./appendix-A](./appendix-A) |

+| Appendix B: References and Further Reading | No code | - |

+| Appendix C: Exercise Solutions | No code | - |

+| Appendix D: Adding Bells and Whistles to the Training Loop | - [appendix-D.ipynb](appendix-D/01_main-chapter-code/appendix-D.ipynb) | [./appendix-D](./appendix-D) |

+| Appendix E: Parameter-efficient Finetuning with LoRA | - [appendix-E.ipynb](appendix-E/01_main-chapter-code/appendix-E.ipynb) | [./appendix-E](./appendix-E) |

+

+

+

+

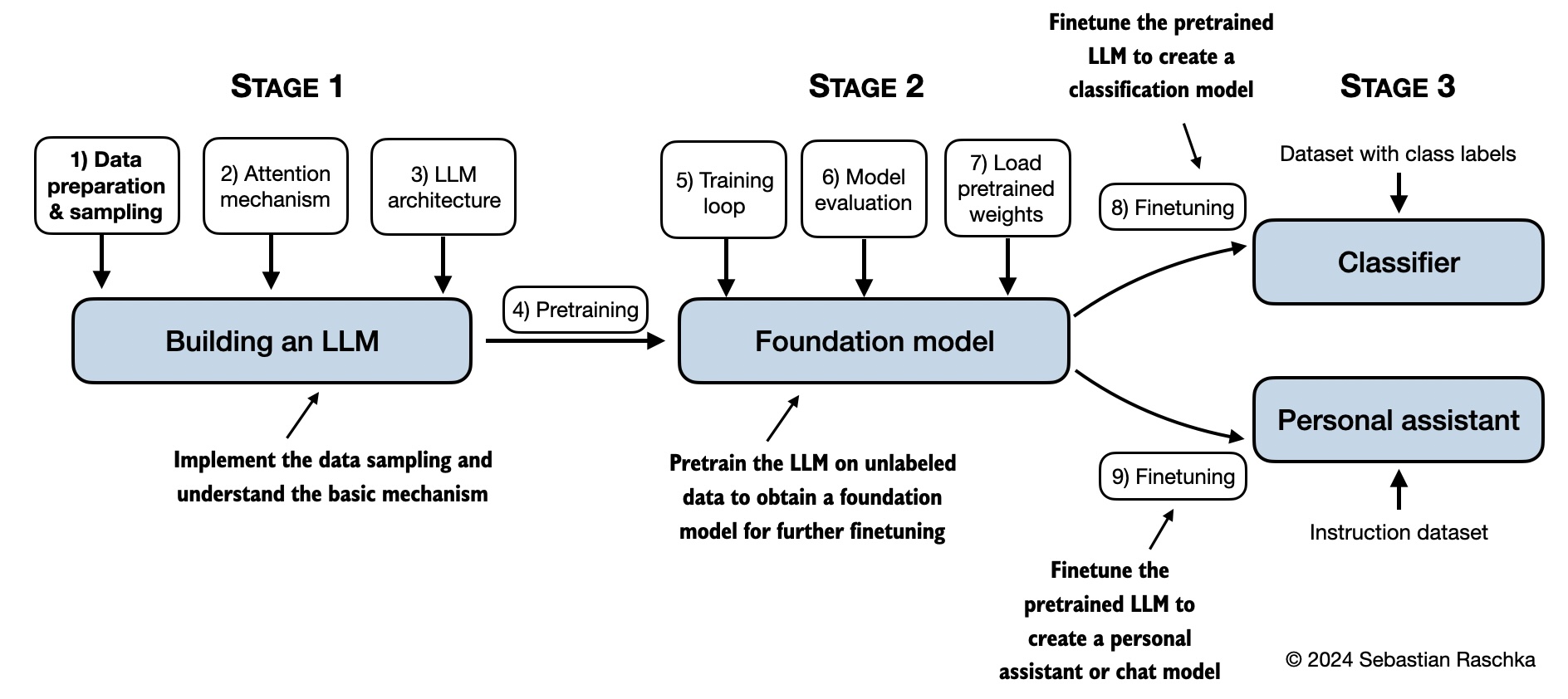

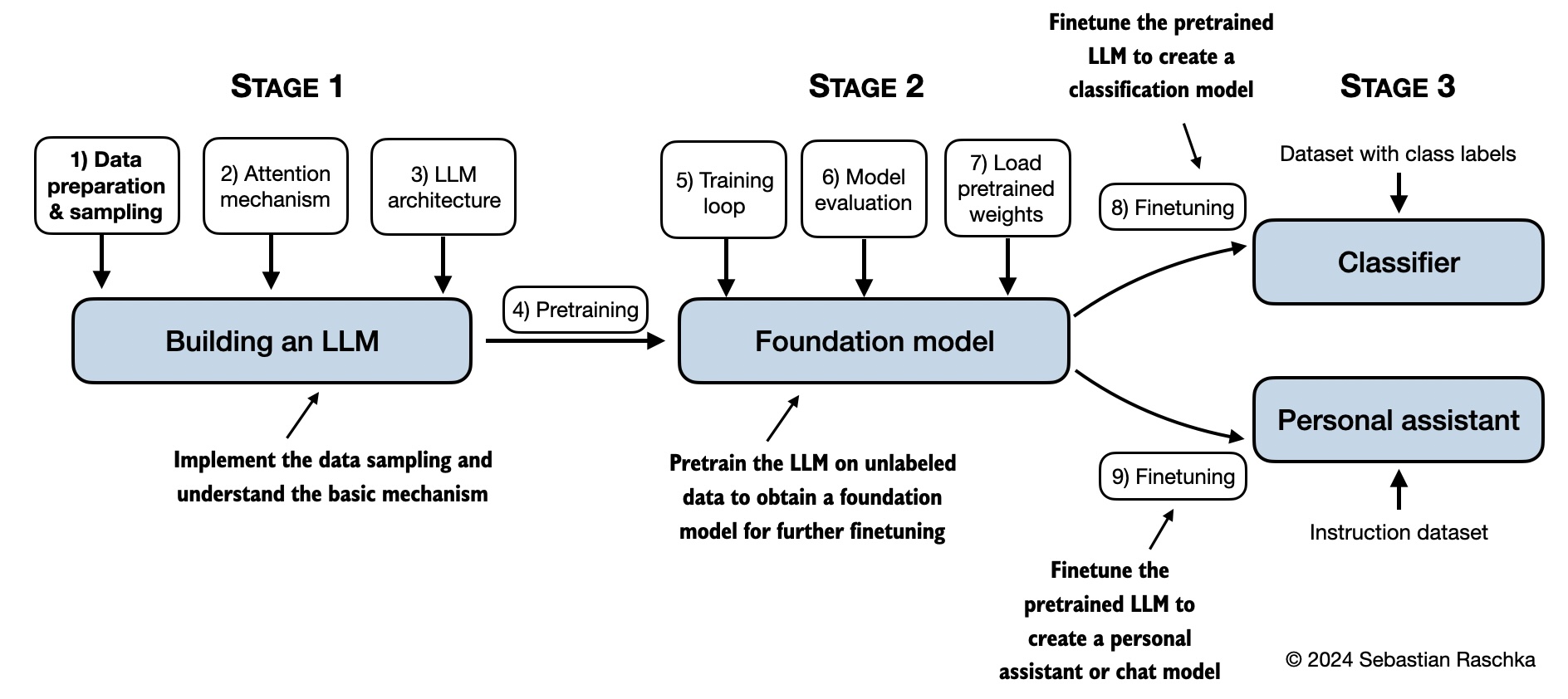

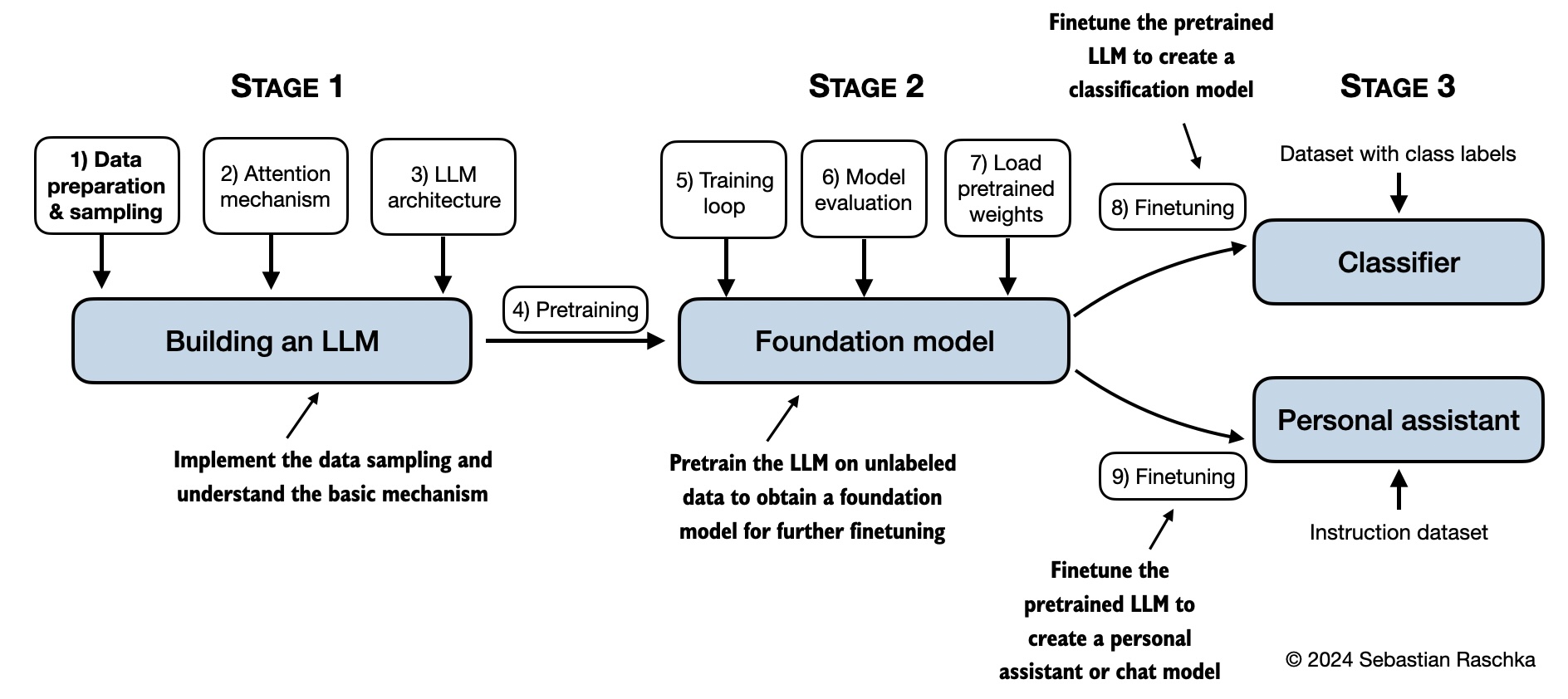

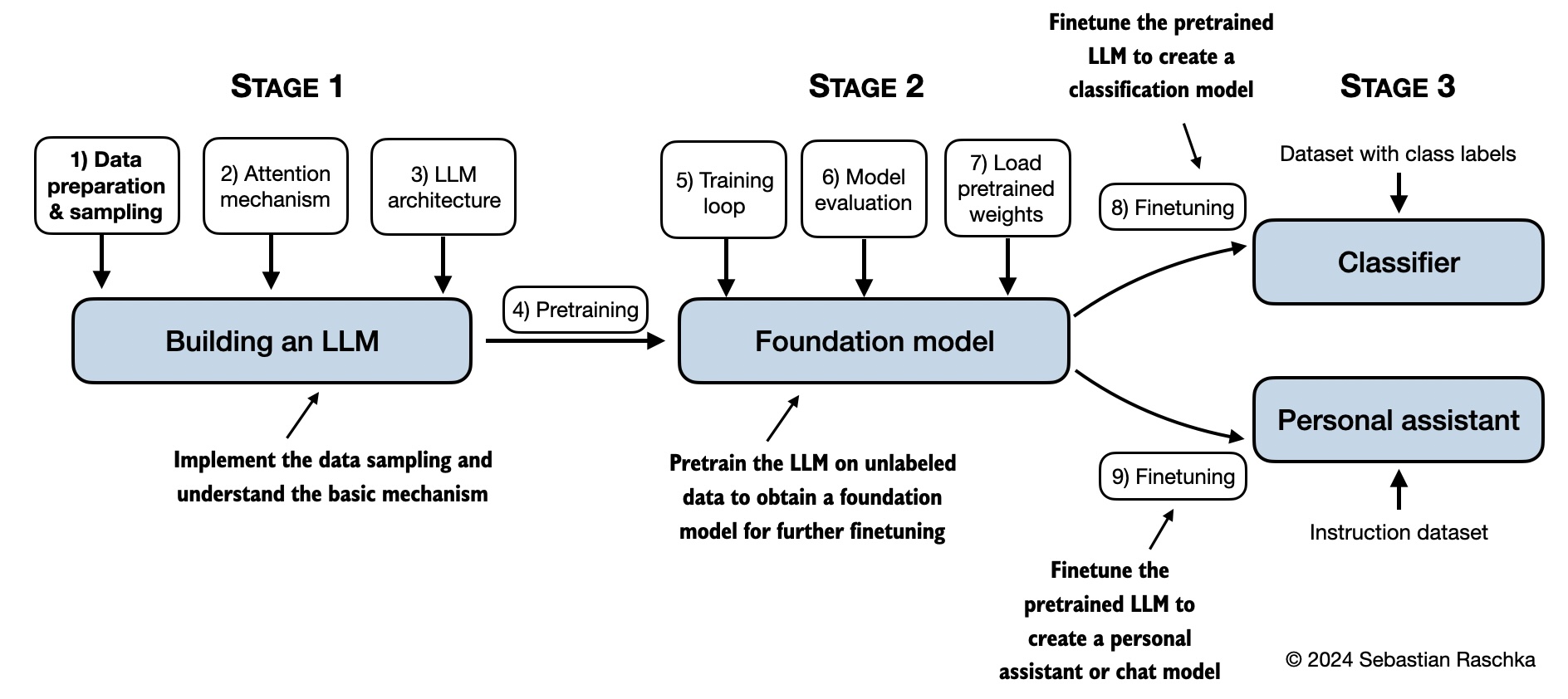

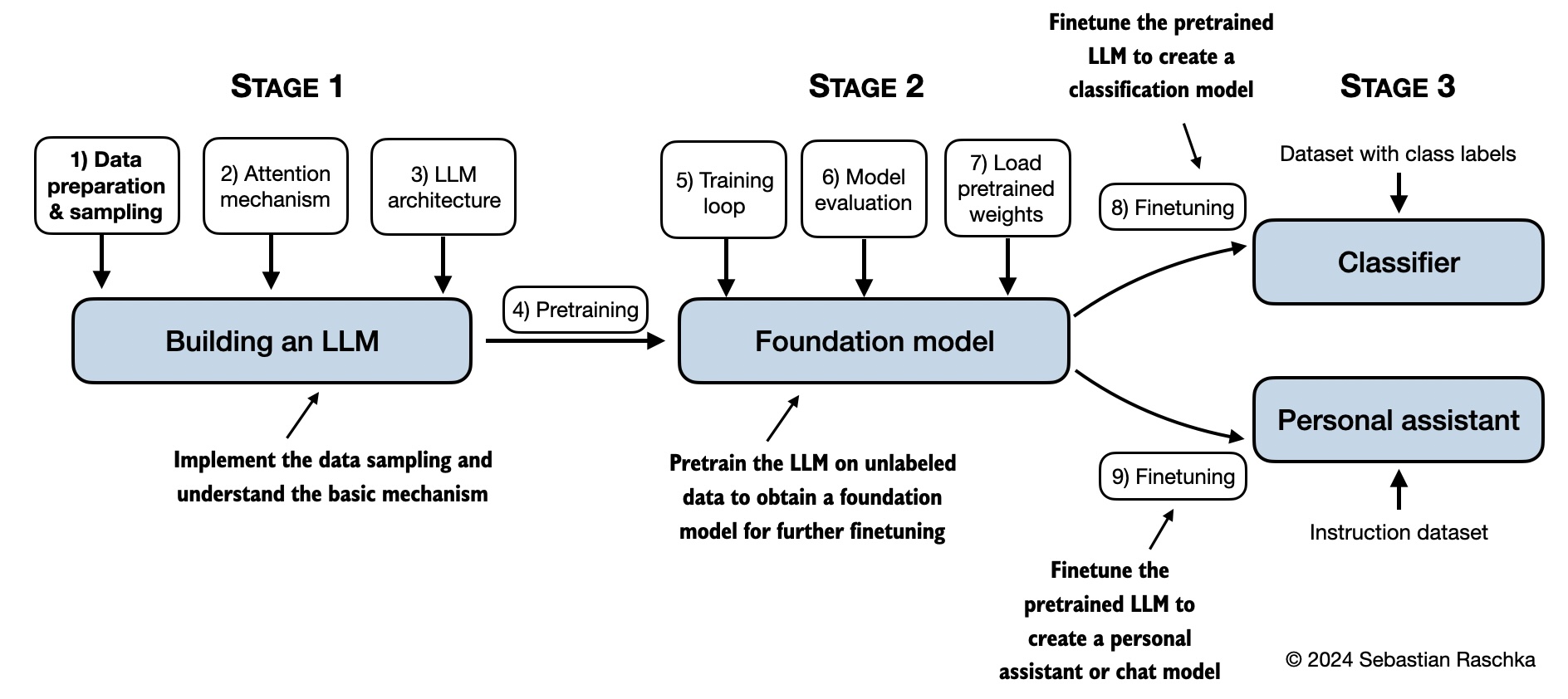

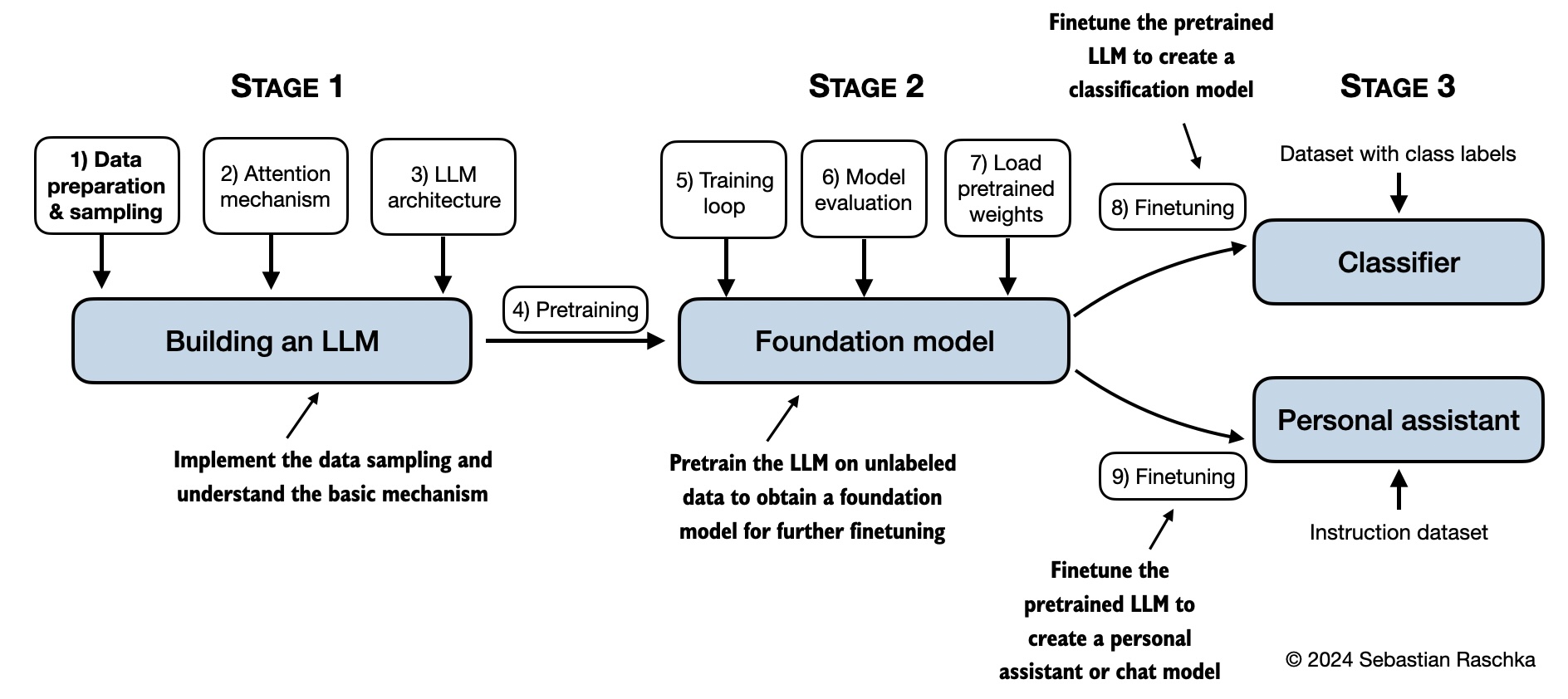

+The mental model below summarizes the contents covered in this book.

+

+ +

+

+

+

+

+

+## Hardware Requirements

+

+The code in the main chapters of this book is designed to run on conventional laptops within a reasonable timeframe and does not require specialized hardware. This approach ensures that a wide audience can engage with the material. Additionally, the code automatically utilizes GPUs if they are available. (Please see the [setup](https://github.com/rasbt/LLMs-from-scratch/blob/main/setup/README.md) doc for additional recommendations.)

+

+

+## Bonus Material

+

+Several folders contain optional materials as a bonus for interested readers:

+

+- **Setup**

+ - [Python Setup Tips](setup/01_optional-python-setup-preferences)

+ - [Installing Python Packages and Libraries Used In This Book](setup/02_installing-python-libraries)

+ - [Docker Environment Setup Guide](setup/03_optional-docker-environment)

+- **Chapter 2: Working with text data**

+ - [Comparing Various Byte Pair Encoding (BPE) Implementations](ch02/02_bonus_bytepair-encoder)

+ - [Understanding the Difference Between Embedding Layers and Linear Layers](ch02/03_bonus_embedding-vs-matmul)

+ - [Dataloader Intuition with Simple Numbers](ch02/04_bonus_dataloader-intuition)

+- **Chapter 3: Coding attention mechanisms**

+ - [Comparing Efficient Multi-Head Attention Implementations](ch03/02_bonus_efficient-multihead-attention/mha-implementations.ipynb)

+ - [Understanding PyTorch Buffers](ch03/03_understanding-buffers/understanding-buffers.ipynb)

+- **Chapter 4: Implementing a GPT model from scratch**

+ - [FLOPS Analysis](ch04/02_performance-analysis/flops-analysis.ipynb)

+- **Chapter 5: Pretraining on unlabeled data**

+ - [Alternative Weight Loading from Hugging Face Model Hub using Transformers](ch05/02_alternative_weight_loading/weight-loading-hf-transformers.ipynb)

+ - [Pretraining GPT on the Project Gutenberg Dataset](ch05/03_bonus_pretraining_on_gutenberg)

+ - [Adding Bells and Whistles to the Training Loop](ch05/04_learning_rate_schedulers)

+ - [Optimizing Hyperparameters for Pretraining](ch05/05_bonus_hparam_tuning)

+ - [Building a User Interface to Interact With the Pretrained LLM](ch05/06_user_interface)

+ - [Converting GPT to Llama](ch05/07_gpt_to_llama)

+ - [Llama 3.2 From Scratch](ch05/07_gpt_to_llama/standalone-llama32.ipynb)

+ - [Memory-efficient Model Weight Loading](ch05/08_memory_efficient_weight_loading/memory-efficient-state-dict.ipynb)

+- **Chapter 6: Finetuning for classification**

+ - [Additional experiments finetuning different layers and using larger models](ch06/02_bonus_additional-experiments)

+ - [Finetuning different models on 50k IMDB movie review dataset](ch06/03_bonus_imdb-classification)

+ - [Building a User Interface to Interact With the GPT-based Spam Classifier](ch06/04_user_interface)

+- **Chapter 7: Finetuning to follow instructions**

+ - [Dataset Utilities for Finding Near Duplicates and Creating Passive Voice Entries](ch07/02_dataset-utilities)

+ - [Evaluating Instruction Responses Using the OpenAI API and Ollama](ch07/03_model-evaluation)

+ - [Generating a Dataset for Instruction Finetuning](ch07/05_dataset-generation/llama3-ollama.ipynb)

+ - [Improving a Dataset for Instruction Finetuning](ch07/05_dataset-generation/reflection-gpt4.ipynb)

+ - [Generating a Preference Dataset with Llama 3.1 70B and Ollama](ch07/04_preference-tuning-with-dpo/create-preference-data-ollama.ipynb)

+ - [Direct Preference Optimization (DPO) for LLM Alignment](ch07/04_preference-tuning-with-dpo/dpo-from-scratch.ipynb)

+ - [Building a User Interface to Interact With the Instruction Finetuned GPT Model](ch07/06_user_interface)

+

+

+

+

+## Questions, Feedback, and Contributing to This Repository

+

+

+I welcome all sorts of feedback, best shared via the [Manning Forum](https://livebook.manning.com/forum?product=raschka&page=1) or [GitHub Discussions](https://github.com/rasbt/LLMs-from-scratch/discussions). Likewise, if you have any questions or just want to bounce ideas off others, please don't hesitate to post these in the forum as well.

+

+Please note that since this repository contains the code corresponding to a print book, I currently cannot accept contributions that would extend the contents of the main chapter code, as it would introduce deviations from the physical book. Keeping it consistent helps ensure a smooth experience for everyone.

+

+

+

+## Citation

+

+If you find this book or code useful for your research, please consider citing it.

+

+Chicago-style citation:

+

+> Raschka, Sebastian. *Build A Large Language Model (From Scratch)*. Manning, 2024. ISBN: 978-1633437166.

+

+BibTeX entry:

+

+```

+@book{build-llms-from-scratch-book,

+ author = {Sebastian Raschka},

+ title = {Build A Large Language Model (From Scratch)},

+ publisher = {Manning},

+ year = {2024},

+ isbn = {978-1633437166},

+ url = {https://www.manning.com/books/build-a-large-language-model-from-scratch},

+ github = {https://github.com/rasbt/LLMs-from-scratch}

+}

+```

diff --git a/README.md b/README.md

index bf0e645d..afb35bdb 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,8 @@

-English | [Chinese](./README.zh.md)

+[English](./README.en.md) | 中文

-# Build a Large Language Model (From Scratch)

+# 构建大型语言模型(从头开始)

-This repository contains the code for developing, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book [Build a Large Language Model (From Scratch)](https://amzn.to/4fqvn0D).

+此存储库包含用于开发、预训练和微调类似 GPT 的 LLM 的代码,是本书 [构建大型语言模型(从头开始)](https://amzn.to/4fqvn0D) 的官方代码存储库。

@@ -11,13 +11,13 @@ This repository contains the code for developing, pretraining, and finetuning a

-In [*Build a Large Language Model (From Scratch)*](http://mng.bz/orYv), you'll learn and understand how large language models (LLMs) work from the inside out by coding them from the ground up, step by step. In this book, I'll guide you through creating your own LLM, explaining each stage with clear text, diagrams, and examples.

+在 [*构建大型语言模型(从头开始)*](http://mng.bz/orYv) 中,您将通过从头开始逐步编码大型语言模型 (LLM),从内到外学习和了解大型语言模型 (LLM) 的工作原理。在本书中,我将指导您创建自己的 LLM,并用清晰的文本、图表和示例解释每个阶段。

-The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT. In addition, this book includes code for loading the weights of larger pretrained models for finetuning.

+本书中描述的用于训练和开发您自己的小型但功能齐全的教育模型的方法反映了创建大型基础模型(例如 ChatGPT 背后的模型)所使用的方法。此外,本书还包括用于加载较大预训练模型的权重以进行微调的代码。

-- Link to the official [source code repository](https://github.com/rasbt/LLMs-from-scratch)

-- [Link to the book at Manning (the publisher's website)](http://mng.bz/orYv)

-- [Link to the book page on Amazon.com](https://www.amazon.com/gp/product/1633437167)

+- 链接到官方[源代码存储库](https://github.com/rasbt/LLMs-from-scratch)

+- [链接到 Manning(出版商网站)上的书籍](http://mng.bz/orYv)

+- [链接到 Amazon.com 上的书籍页面](https://www.amazon.com/gp/product/1633437167)

- ISBN 9781633437166

@@ -26,7 +26,7 @@ The method described in this book for training and developing your own small-but

-To download a copy of this repository, click on the [Download ZIP](https://github.com/rasbt/LLMs-from-scratch/archive/refs/heads/main.zip) button or execute the following command in your terminal:

+要下载此存储库的副本,请单击[下载ZIP](https://github.com/rasbt/LLMs-from-scratch/archive/refs/heads/main.zip) 按钮或在终端中执行以下命令:

```bash

git clone --depth 1 https://github.com/rasbt/LLMs-from-scratch.git

@@ -34,127 +34,122 @@ git clone --depth 1 https://github.com/rasbt/LLMs-from-scratch.git

-(If you downloaded the code bundle from the Manning website, please consider visiting the official code repository on GitHub at [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) for the latest updates.)

+(如果您从 Manning 网站下载了代码包,请考虑访问 GitHub 上的官方代码存储库 [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) 以获取最新更新。)

+# 目录

-# Table of Contents

+请注意,此 `README.md` 文件是 Markdown(`.md`)文件。如果您已从 Manning 网站下载此代码包并在本地计算机上查看它,我建议使用 Markdown 编辑器或预览器进行正确查看。如果您尚未安装 Markdown 编辑器,[MarkText](https://www.marktext.cc) 是一个不错的免费选择。

-Please note that this `README.md` file is a Markdown (`.md`) file. If you have downloaded this code bundle from the Manning website and are viewing it on your local computer, I recommend using a Markdown editor or previewer for proper viewing. If you haven't installed a Markdown editor yet, [MarkText](https://www.marktext.cc) is a good free option.

-

-You can alternatively view this and other files on GitHub at [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) in your browser, which renders Markdown automatically.

+您也可以在浏览器中通过 [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) 在 GitHub 上查看此文件和其他文件,GitHub 会自动渲染 Markdown。

-

+

> [!TIP]

-> If you're seeking guidance on installing Python and Python packages and setting up your code environment, I suggest reading the [README.md](setup/README.md) file located in the [setup](setup) directory.

+> 如果您正在寻求有关安装 Python 和 Python 包以及设置代码环境的指导,我建议您阅读位于 [setup](setup) 目录中的 [README.zh.md](setup/README.zh.md) 文件。

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-linux.yml)

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-windows.yml)

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-macos.yml)

-

-

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-linux.yml)

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-windows.yml)

+[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-macos.yml)

-| Chapter Title | Main Code (for Quick Access) | All Code + Supplementary |

-|------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------|-------------------------------|

-| [Setup recommendations](setup) | - | - |

-| Ch 1: Understanding Large Language Models | No code | - |

-| Ch 2: Working with Text Data | - [ch02.ipynb](ch02/01_main-chapter-code/ch02.ipynb)<br/>- [dataloader.ipynb](ch02/01_main-chapter-code/dataloader.ipynb) (summary)<br/>- [exercise-solutions.ipynb](ch02/01_main-chapter-code/exercise-solutions.ipynb) | [./ch02](./ch02) |

-| Ch 3: Coding Attention Mechanisms | - [ch03.ipynb](ch03/01_main-chapter-code/ch03.ipynb)<br/>- [multihead-attention.ipynb](ch03/01_main-chapter-code/multihead-attention.ipynb) (summary)<br/>- [exercise-solutions.ipynb](ch03/01_main-chapter-code/exercise-solutions.ipynb)| [./ch03](./ch03) |

-| Ch 4: Implementing a GPT Model from Scratch | - [ch04.ipynb](ch04/01_main-chapter-code/ch04.ipynb)<br/>- [gpt.py](ch04/01_main-chapter-code/gpt.py) (summary)<br/>- [exercise-solutions.ipynb](ch04/01_main-chapter-code/exercise-solutions.ipynb) | [./ch04](./ch04) |

-| Ch 5: Pretraining on Unlabeled Data | - [ch05.ipynb](ch05/01_main-chapter-code/ch05.ipynb)<br/>- [gpt_train.py](ch05/01_main-chapter-code/gpt_train.py) (summary)<br/>- [gpt_generate.py](ch05/01_main-chapter-code/gpt_generate.py) (summary)<br/>- [exercise-solutions.ipynb](ch05/01_main-chapter-code/exercise-solutions.ipynb) | [./ch05](./ch05) |

-| Ch 6: Finetuning for Text Classification | - [ch06.ipynb](ch06/01_main-chapter-code/ch06.ipynb)<br/>- [gpt_class_finetune.py](ch06/01_main-chapter-code/gpt_class_finetune.py)<br/>- [exercise-solutions.ipynb](ch06/01_main-chapter-code/exercise-solutions.ipynb) | [./ch06](./ch06) |

-| Ch 7: Finetuning to Follow Instructions | - [ch07.ipynb](ch07/01_main-chapter-code/ch07.ipynb)<br/>- [gpt_instruction_finetuning.py](ch07/01_main-chapter-code/gpt_instruction_finetuning.py) (summary)<br/>- [ollama_evaluate.py](ch07/01_main-chapter-code/ollama_evaluate.py) (summary)<br/>- [exercise-solutions.ipynb](ch07/01_main-chapter-code/exercise-solutions.ipynb) | [./ch07](./ch07) |

-| Appendix A: Introduction to PyTorch | - [code-part1.ipynb](appendix-A/01_main-chapter-code/code-part1.ipynb)<br/>- [code-part2.ipynb](appendix-A/01_main-chapter-code/code-part2.ipynb)<br/>- [DDP-script.py](appendix-A/01_main-chapter-code/DDP-script.py)<br/>- [exercise-solutions.ipynb](appendix-A/01_main-chapter-code/exercise-solutions.ipynb) | [./appendix-A](./appendix-A) |

-| Appendix B: References and Further Reading | No code | - |

-| Appendix C: Exercise Solutions | No code | - |

-| Appendix D: Adding Bells and Whistles to the Training Loop | - [appendix-D.ipynb](appendix-D/01_main-chapter-code/appendix-D.ipynb) | [./appendix-D](./appendix-D) |

-| Appendix E: Parameter-efficient Finetuning with LoRA | - [appendix-E.ipynb](appendix-E/01_main-chapter-code/appendix-E.ipynb) | [./appendix-E](./appendix-E) |

+| 章节标题 | 主代码(用于快速访问) | 所有代码 + 补充 |

+|------------------------------------------------------------|---------------------------------------------------------------------------------------------------------|-----------------------------------------------|

+| [设置建议](./setup/README.zh.md) | - | - |

+| 第 1 章:了解大型语言模型 | 无代码 | - |

+| 第 2 章:使用文本数据 | - [ch02.ipynb](ch02/01_main-chapter-code/ch02.ipynb)<br/>- [dataloader.ipynb](ch02/01_main-chapter-code/dataloader.ipynb) (摘要)<br/>- [exercise-solutions.ipynb](ch02/01_main-chapter-code/exercise-solutions.ipynb) | [./ch02](./ch02) |

+| 第 3 章:编码注意力机制 | - [ch03.ipynb](ch03/01_main-chapter-code/ch03.ipynb)<br/>- [multihead-attention.ipynb](ch03/01_main-chapter-code/multihead-attention.ipynb) (摘要)<br/>- [exercise-solutions.ipynb](ch03/01_main-chapter-code/exercise-solutions.ipynb) | [./ch03](./ch03) |

+| 第 4 章:从头开始实现 GPT 模型 | - [ch04.ipynb](ch04/01_main-chapter-code/ch04.ipynb)<br/>- [gpt.py](ch04/01_main-chapter-code/gpt.py) (摘要)<br/>- [exercise-solutions.ipynb](ch04/01_main-chapter-code/exercise-solutions.ipynb) | [./ch04](./ch04) |

+| 第 5 章:对未标记数据进行预训练 | - [ch05.ipynb](ch05/01_main-chapter-code/ch05.ipynb)<br/>- [gpt_train.py](ch05/01_main-chapter-code/gpt_train.py) (摘要)<br/>- [gpt_generate.py](ch05/01_main-chapter-code/gpt_generate.py) (摘要)<br/>- [exercise-solutions.ipynb](ch05/01_main-chapter-code/exercise-solutions.ipynb) | [./ch05](./ch05) |

+| 第 6 章:文本分类的微调 | - [ch06.ipynb](ch06/01_main-chapter-code/ch06.ipynb)<br/>- [gpt_class_finetune.py](ch06/01_main-chapter-code/gpt_class_finetune.py)<br/>- [exercise-solutions.ipynb](ch06/01_main-chapter-code/exercise-solutions.ipynb) | [./ch06](./ch06) |

+| 第 7 章:微调以遵循指令 | - [ch07.ipynb](ch07/01_main-chapter-code/ch07.ipynb)<br/>- [gpt_instruction_finetuning.py](ch07/01_main-chapter-code/gpt_instruction_finetuning.py) (摘要)<br/>- [ollama_evaluate.py](ch07/01_main-chapter-code/ollama_evaluate.py) (摘要)<br/>- [exercise-solutions.ipynb](ch07/01_main-chapter-code/exercise-solutions.ipynb) | [./ch07](./ch07) |

+| 附录 A:PyTorch 简介 | - [code-part1.ipynb](appendix-A/01_main-chapter-code/code-part1.ipynb)<br/>- [code-part2.ipynb](appendix-A/01_main-chapter-code/code-part2.ipynb)<br/>- [DDP-script.py](appendix-A/01_main-chapter-code/DDP-script.py)<br/>- [exercise-solutions.ipynb](appendix-A/01_main-chapter-code/exercise-solutions.ipynb) | [./appendix-A](./appendix-A) |

+| 附录 B:参考资料和进一步阅读 | 无代码 | - |

+| 附录 C:练习解决方案 | 无代码 | - |

+| 附录 D:在训练循环中添加花哨的东西 | - [appendix-D.ipynb](appendix-D/01_main-chapter-code/appendix-D.ipynb) | [./appendix-D](./appendix-D) |

+| 附录 E:使用 LoRA 进行参数高效微调 | - [appendix-E.ipynb](appendix-E/01_main-chapter-code/appendix-E.ipynb) | [./appendix-E](./appendix-E) |

-The mental model below summarizes the contents covered in this book.

+下面的心智模型总结了本书涵盖的内容。

-## Hardware Requirements

+## 硬件要求

-The code in the main chapters of this book is designed to run on conventional laptops within a reasonable timeframe and does not require specialized hardware. This approach ensures that a wide audience can engage with the material. Additionally, the code automatically utilizes GPUs if they are available. (Please see the [setup](https://github.com/rasbt/LLMs-from-scratch/blob/main/setup/README.md) doc for additional recommendations.)

+本书主要章节中的代码旨在在合理的时间范围内在传统笔记本电脑上运行,并且不需要专门的硬件。这种方法可确保广大受众能够参与其中。此外,如果 GPU 可用,代码会自动利用它们。(请参阅 [setup](https://github.com/rasbt/LLMs-from-scratch/blob/main/setup/README.zh.md) 文档以获取更多建议。)

-## Bonus Material

-

-Several folders contain optional materials as a bonus for interested readers:

-

-- **Setup**

- - [Python Setup Tips](setup/01_optional-python-setup-preferences)

- - [Installing Python Packages and Libraries Used In This Book](setup/02_installing-python-libraries)

- - [Docker Environment Setup Guide](setup/03_optional-docker-environment)

-- **Chapter 2: Working with text data**

- - [Comparing Various Byte Pair Encoding (BPE) Implementations](ch02/02_bonus_bytepair-encoder)

- - [Understanding the Difference Between Embedding Layers and Linear Layers](ch02/03_bonus_embedding-vs-matmul)

- - [Dataloader Intuition with Simple Numbers](ch02/04_bonus_dataloader-intuition)

-- **Chapter 3: Coding attention mechanisms**

- - [Comparing Efficient Multi-Head Attention Implementations](ch03/02_bonus_efficient-multihead-attention/mha-implementations.ipynb)

- - [Understanding PyTorch Buffers](ch03/03_understanding-buffers/understanding-buffers.ipynb)

-- **Chapter 4: Implementing a GPT model from scratch**

- - [FLOPS Analysis](ch04/02_performance-analysis/flops-analysis.ipynb)

-- **Chapter 5: Pretraining on unlabeled data:**

- - [Alternative Weight Loading from Hugging Face Model Hub using Transformers](ch05/02_alternative_weight_loading/weight-loading-hf-transformers.ipynb)

- - [Pretraining GPT on the Project Gutenberg Dataset](ch05/03_bonus_pretraining_on_gutenberg)

- - [Adding Bells and Whistles to the Training Loop](ch05/04_learning_rate_schedulers)

- - [Optimizing Hyperparameters for Pretraining](ch05/05_bonus_hparam_tuning)

- - [Building a User Interface to Interact With the Pretrained LLM](ch05/06_user_interface)

- - [Converting GPT to Llama](ch05/07_gpt_to_llama)

- - [Llama 3.2 From Scratch](ch05/07_gpt_to_llama/standalone-llama32.ipynb)

- - [Memory-efficient Model Weight Loading](ch05/08_memory_efficient_weight_loading/memory-efficient-state-dict.ipynb)

-- **Chapter 6: Finetuning for classification**

- - [Additional experiments finetuning different layers and using larger models](ch06/02_bonus_additional-experiments)

- - [Finetuning different models on 50k IMDB movie review dataset](ch06/03_bonus_imdb-classification)

- - [Building a User Interface to Interact With the GPT-based Spam Classifier](ch06/04_user_interface)

-- **Chapter 7: Finetuning to follow instructions**

- - [Dataset Utilities for Finding Near Duplicates and Creating Passive Voice Entries](ch07/02_dataset-utilities)

- - [Evaluating Instruction Responses Using the OpenAI API and Ollama](ch07/03_model-evaluation)

- - [Generating a Dataset for Instruction Finetuning](ch07/05_dataset-generation/llama3-ollama.ipynb)

- - [Improving a Dataset for Instruction Finetuning](ch07/05_dataset-generation/reflection-gpt4.ipynb)

- - [Generating a Preference Dataset with Llama 3.1 70B and Ollama](ch07/04_preference-tuning-with-dpo/create-preference-data-ollama.ipynb)

- - [Direct Preference Optimization (DPO) for LLM Alignment](ch07/04_preference-tuning-with-dpo/dpo-from-scratch.ipynb)

- - [Building a User Interface to Interact With the Instruction Finetuned GPT Model](ch07/06_user_interface)

+## 附加材料

+

+几个文件夹包含可选材料,供感兴趣的读者作为附加内容参考:

+

+- **设置**

+ - [Python 设置提示](setup/01_optional-python-setup-preferences/README.zh.md)

+ - [安装本书中使用的 Python 包和库](setup/02_installing-python-libraries/README.zh.md)

+ - [Docker 环境设置指南](setup/03_optional-docker-environment/README.zh.md)

+- **第 2 章:使用文本数据**

+ - [比较各种字节对编码 (BPE) 实现](ch02/02_bonus_bytepair-encoder)

+ - [理解嵌入层和线性层之间的区别](ch02/03_bonus_embedding-vs-matmul)

+ - [使用简单数字的数据加载器直觉](ch02/04_bonus_dataloader-intuition)

+- **第 3 章:编码注意力机制**

+ - [比较高效的多头注意力实现](ch03/02_bonus_efficient-multihead-attention/mha-implementations.ipynb)

+ - [理解 PyTorch 缓冲区](ch03/03_understanding-buffers/understanding-buffers.ipynb)

+- **第 4 章:从头开始实现 GPT 模型**

+ - [FLOPS 分析](ch04/02_performance-analysis/flops-analysis.ipynb)

+- **第 5 章:对未标记数据进行预训练**

+ - [使用 Transformers 从 Hugging Face Model Hub 进行替代权重加载](ch05/02_alternative_weight_loading/weight-loading-hf-transformers.ipynb)

+ - [在 Project Gutenberg 数据集上对 GPT 进行预训练](ch05/03_bonus_pretraining_on_gutenberg)

+ - [为训练循环添加花哨功能](ch05/04_learning_rate_schedulers)

+ - [优化预训练的超参数](ch05/05_bonus_hparam_tuning)

+ - [构建用户界面与预训练的 LLM 交互](ch05/06_user_interface)

+ - [将 GPT 转换为 Llama](ch05/07_gpt_to_llama)

+ - [从头开始构建 Llama 3.2](ch05/07_gpt_to_llama/standalone-llama32.ipynb)

+ - [内存高效的模型权重加载](ch05/08_memory_efficient_weight_loading/memory-efficient-state-dict.ipynb)

+- **第 6 章:分类微调**

+ - [对不同层进行微调并使用更大模型的其他实验](ch06/02_bonus_additional-experiments)

+ - [在 50k IMDB 电影评论数据集上对不同模型进行微调](ch06/03_bonus_imdb-classification)

+ - [构建用户界面以与基于 GPT 的垃圾邮件分类器交互](ch06/04_user_interface)

+- **第 7 章:微调以遵循指令**

+ - [用于查找近似重复和创建被动语态条目的数据集实用程序](ch07/02_dataset-utilities)

+ - [使用 OpenAI API 和 Ollama 评估指令响应](ch07/03_model-evaluation)

+ - [生成用于指令微调的数据集](ch07/05_dataset-generation/llama3-ollama.ipynb)

+ - [改进用于指令微调的数据集](ch07/05_dataset-generation/reflection-gpt4.ipynb)

+ - [使用 Llama 3.1 70B 和 Ollama 生成偏好数据集](ch07/04_preference-tuning-with-dpo/create-preference-data-ollama.ipynb)

+ - [LLM 对齐的直接偏好优化 (DPO)](ch07/04_preference-tuning-with-dpo/dpo-from-scratch.ipynb)

+ - [构建用户界面以与指令微调 GPT 模型交互](ch07/06_user_interface)

-## Questions, Feedback, and Contributing to This Repository

-

-

-I welcome all sorts of feedback, best shared via the [Manning Forum](https://livebook.manning.com/forum?product=raschka&page=1) or [GitHub Discussions](https://github.com/rasbt/LLMs-from-scratch/discussions). Likewise, if you have any questions or just want to bounce ideas off others, please don't hesitate to post these in the forum as well.

+## 问题、反馈和对此存储库的贡献

-Please note that since this repository contains the code corresponding to a print book, I currently cannot accept contributions that would extend the contents of the main chapter code, as it would introduce deviations from the physical book. Keeping it consistent helps ensure a smooth experience for everyone.

+我欢迎各种反馈,最好通过 [Manning 论坛](https://livebook.manning.com/forum?product=raschka&page=1) 或 [GitHub 讨论](https://github.com/rasbt/LLMs-from-scratch/discussions) 分享。同样,如果您有任何疑问或只是想与他人交流想法,请随时在论坛中发布这些内容。

+请注意,由于此存储库包含与印刷书籍相对应的代码,因此我目前无法接受扩展主要章节代码内容的贡献,因为这会引入与实体书的偏差。保持一致有助于确保每个人都能获得流畅的体验。

-## Citation

+## 引用

-If you find this book or code useful for your research, please consider citing it.

+如果您发现本书或代码对您的研究有用,请考虑引用它。

-Chicago-style citation:

+芝加哥风格引用:

> Raschka, Sebastian. *Build A Large Language Model (From Scratch)*. Manning, 2024. ISBN: 978-1633437166.

-BibTeX entry:

+BibTeX 条目:

```

@book{build-llms-from-scratch-book,

diff --git a/README.zh.md b/README.zh.md

deleted file mode 100644

index 381b0aba..00000000

--- a/README.zh.md

+++ /dev/null

@@ -1,164 +0,0 @@

-[English](./README.md) | 中文

-

-# 构建大型语言模型(从头开始)

-

-此存储库包含用于开发、预训练和微调类似 GPT 的 LLM 的代码,是本书 [构建大型语言模型(从头开始)](https://amzn.to/4fqvn0D) 的官方代码存储库。

-

-

-

-

- -

-

-

-

-

-在 [*构建大型语言模型(从头开始)*](http://mng.bz/orYv) 中,您将通过从头开始逐步编码大型语言模型 (LLM),从内到外学习和了解大型语言模型 (LLM) 的工作原理。在本书中,我将指导您创建自己的 LLM,并用清晰的文本、图表和示例解释每个阶段。

-

-本书中描述的用于训练和开发您自己的小型但功能齐全的教育模型的方法反映了创建大型基础模型(例如 ChatGPT 背后的模型)所使用的方法。此外,本书还包括用于加载较大预训练模型的权重以进行微调的代码。

-

-- 链接到官方[源代码存储库](https://github.com/rasbt/LLMs-from-scratch)

-- [链接到 Manning(出版商网站)上的书籍](http://mng.bz/orYv)

-- [链接到 Amazon.com 上的书籍页面](https://www.amazon.com/gp/product/1633437167)

-- ISBN 9781633437166

-

- -

-

-

-

-

-

-

-

-要下载此存储库的副本,请单击[下载ZIP](https://github.com/rasbt/LLMs-from-scratch/archive/refs/heads/main.zip) 按钮或在终端中执行以下命令:

-

-```bash

-git clone --depth 1 https://github.com/rasbt/LLMs-from-scratch.git

-```

-

-

-

-(如果您从 Manning 网站下载了代码包,请考虑访问 GitHub 上的官方代码存储库 [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) 以获取最新更新。)

-

-

-

-

-# 目录

-

-请注意,此 `README.md` 文件是 Markdown(`.md`)文件。如果您已从 Manning 网站下载此代码包并在本地计算机上查看它,我建议使用 Markdown 编辑器或预览器进行正确查看。如果您尚未安装 Markdown 编辑器,[MarkText](https://www.marktext.cc) 是一个不错的免费选择。

-

-您也可以在浏览器中通过 [https://github.com/rasbt/LLMs-from-scratch](https://github.com/rasbt/LLMs-from-scratch) 查看此文件和 GitHub 上的其他文件,浏览器会自动呈现 Markdown。

-

-

-

-

-

-> [!TIP]

-> 如果您正在寻求有关安装 Python 和 Python 包以及设置代码环境的指导,我建议您阅读位于 [setup](setup) 目录中的 [README.zh.md](setup/README.zh.md) 文件。

-

-

-

-

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-linux.yml)

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-windows.yml)

-[](https://github.com/rasbt/LLMs-from-scratch/actions/workflows/basic-tests-macos.yml)

-

-

-

-| 章节标题 | 主代码(用于快速访问) | 所有代码 + 补充 |

-|------------------------------------------------------------|---------------------------------------------------------------------------------------------------------|-----------------------------------------------|

-| [设置建议](./setup/README.zh.md) | - | - |

-| 第 1 章:了解大型语言模型 | 无代码 | - |

-| 第 2 章:使用文本数据 | - [ch02.ipynb](ch02/01_main-chapter-code/ch02.ipynb)<br/>- [dataloader.ipynb](ch02/01_main-chapter-code/dataloader.ipynb) (摘要)<br/>- [exercise-solutions.ipynb](ch02/01_main-chapter-code/exercise-solutions.ipynb) | [./ch02](./ch02) |

-| 第 3 章:编码注意力机制 | - [ch03.ipynb](ch03/01_main-chapter-code/ch03.ipynb)<br/>- [multihead-attention.ipynb](ch03/01_main-chapter-code/multihead-attention.ipynb) (摘要)<br/>- [exercise-solutions.ipynb](ch03/01_main-chapter-code/exercise-solutions.ipynb)| [./ch03](./ch03) |

-| 第 4 章:从头开始实现 GPT 模型 | - [ch04.ipynb](ch04/01_main-chapter-code/ch04.ipynb)<br/>- [gpt.py](ch04/01_main-chapter-code/gpt.py) (摘要)<br/>- [exercise-solutions.ipynb](ch04/01_main-chapter-code/exercise-solutions.ipynb) | [./ch04](./ch04) |

-| 第 5 章:对未标记数据进行预训练 | - [ch05.ipynb](ch05/01_main-chapter-code/ch05.ipynb)<br/>- [gpt_train.py](ch05/01_main-chapter-code/gpt_train.py) (摘要)<br/>- [gpt_generate.py](ch05/01_main-chapter-code/gpt_generate.py) (摘要)<br/>- [exercise-solutions.ipynb](ch05/01_main-chapter-code/exercise-solutions.ipynb) | [./ch05](./ch05) |

-| 第 6 章:文本分类的微调 | - [ch06.ipynb](ch06/01_main-chapter-code/ch06.ipynb)<br/>- [gpt_class_finetune.py](ch06/01_main-chapter-code/gpt_class_finetune.py)<br/>- [exercise-solutions.ipynb](ch06/01_main-chapter-code/exercise-solutions.ipynb) | [./ch06](./ch06) |

-| 第 7 章:微调以遵循说明 | - [ch07.ipynb](ch07/01_main-chapter-code/ch07.ipynb)<br/>- [gpt_instruction_finetuning.py](ch07/01_main-chapter-code/gpt_instruction_finetuning.py) (摘要)<br/>- [ollama_evaluate.py](ch07/01_main-chapter-code/ollama_evaluate.py) (摘要)<br/>- [exercise-solutions.ipynb](ch07/01_main-chapter-code/exercise-solutions.ipynb) | [./ch07](./ch07) |

-| 附录 A:PyTorch 简介 | - [code-part1.ipynb](附录 A/01_main-chapter-code/code-part1.ipynb)<br/>- [code-part2.ipynb](附录 A/01_main-chapter-code/code-part2.ipynb)<br/>- [DDP-script.py](附录 A/01_main-chapter-code/DDP-script.py)<br/>- [exercise-solutions.ipynb](附录 A/01_main-chapter-code/exercise-solutions.ipynb) | [./appendix-A](./appendix-A) |

-| 附录 B:参考资料和进一步阅读 | 无代码 | - |

-| 附录 C:练习解决方案 | 无代码 | - |

-| 附录 D:在训练循环中添加花哨的东西 | - [appendix-D.ipynb](appendix-D/01_main-chapter-code/appendix-D.ipynb) | [./appendix-D](./appendix-D) |

-| 附录 E:使用 LoRA 进行参数高效微调 | - [appendix-E.ipynb](appendix-E/01_main-chapter-code/appendix-E.ipynb) | [./appendix-E](./appendix-E) |

-

-

-

-

-The mental model below summarizes the contents covered in this book.

-

- -

-

-

-

-

-

-## Hardware Requirements

-

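-The code in the main chapters of this book is designed to run on conventional laptops within a reasonable timeframe and does not require specialized hardware, so that a wide audience can work through the material. Additionally, the code automatically uses GPUs if they are available. (Please see the [setup](https://github.com/rasbt/LLMs-from-scratch/blob/main/setup/README.zh.md) doc for additional recommendations.)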

-

-

-## Bonus Material

-

-Several folders contain optional materials as a bonus for interested readers:

-

-- **Setup**

 - [Python Setup Tips](setup/01_optional-python-setup-preferences/README.zh.md)

 - [Installing Python Packages and Libraries Used in This Book](setup/02_installing-python-libraries/README.zh.md)

 - [Docker Environment Setup Guide](setup/03_optional-docker-environment/README.zh.md)

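-- **Chapter 2: Working with Text Data**

 - [Comparing Various Byte Pair Encoding (BPE) Implementations](ch02/02_bonus_bytepair-encoder)

 - [Understanding the Difference Between Embedding Layers and Linear Layers](ch02/03_bonus_embedding-vs-matmul)

 - [Dataloader Intuition with Simple Numbers](ch02/04_bonus_dataloader-intuition)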

-- **Chapter 3: Coding Attention Mechanisms**

 - [Comparing Efficient Multi-Head Attention Implementations](ch03/02_bonus_efficient-multihead-attention/mha-implementations.ipynb)

 - [Understanding PyTorch Buffers](ch03/03_understanding-buffers/understanding-buffers.ipynb)

-- **Chapter 4: Implementing a GPT Model from Scratch**

 - [FLOPS Analysis](ch04/02_performance-analysis/flops-analysis.ipynb)

-- **Chapter 5: Pretraining on Unlabeled Data**

 - [Alternative Weight Loading from Hugging Face Model Hub Using Transformers](ch05/02_alternative_weight_loading/weight-loading-hf-transformers.ipynb)

 - [Pretraining GPT on the Project Gutenberg Dataset](ch05/03_bonus_pretraining_on_gutenberg)

 - [Adding Bells and Whistles to the Training Loop](ch05/04_learning_rate_schedulers)

 - [Optimizing Hyperparameters for Pretraining](ch05/05_bonus_hparam_tuning)

 - [Building a User Interface to Interact with the Pretrained LLM](ch05/06_user_interface)

 - [Converting GPT to Llama](ch05/07_gpt_to_llama)

 - [Llama 3.2 from Scratch](ch05/07_gpt_to_llama/standalone-llama32.ipynb)

 - [Memory-Efficient Model Weight Loading](ch05/08_memory_efficient_weight_loading/memory-efficient-state-dict.ipynb)

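-- **Chapter 6: Finetuning for Classification**

 - [Additional Experiments Finetuning Different Layers and Using Larger Models](ch06/02_bonus_additional-experiments)

 - [Finetuning Different Models on the 50k IMDB Movie Review Dataset](ch06/03_bonus_imdb-classification)

 - [Building a User Interface to Interact with the GPT-Based Spam Classifier](ch06/04_user_interface)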

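-- **Chapter 7: Finetuning to Follow Instructions**

 - [Dataset Utilities for Finding Near Duplicates and Creating Passive Voice Entries](ch07/02_dataset-utilities)

 - [Evaluating Instruction Responses Using the OpenAI API and Ollama](ch07/03_model-evaluation)

 - [Generating a Dataset for Instruction Finetuning](ch07/05_dataset-generation/llama3-ollama.ipynb)

 - [Improving a Dataset for Instruction Finetuning](ch07/05_dataset-generation/reflection-gpt4.ipynb)

 - [Generating a Preference Dataset with Llama 3.1 70B and Ollama](ch07/04_preference-tuning-with-dpo/create-preference-data-ollama.ipynb)

 - [Direct Preference Optimization (DPO) for LLM Alignment](ch07/04_preference-tuning-with-dpo/dpo-from-scratch.ipynb)

 - [Building a User Interface to Interact with the Instruction-Finetuned GPT Model](ch07/06_user_interface)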

-

-

-

-

-## Questions, Feedback, and Contributing to This Repository

-

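-I welcome all sorts of feedback, best shared via the [Manning Forum](https://livebook.manning.com/forum?product=raschka&page=1) or [GitHub Discussions](https://github.com/rasbt/LLMs-from-scratch/discussions). Likewise, if you have any questions or just want to bounce ideas off others, please don't hesitate to post these in the forum as well.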

-

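-Please note that since this repository contains the code corresponding to a print book, I currently cannot accept contributions that would extend the contents of the main chapter code, as this would introduce deviations from the physical book. Keeping the two consistent helps ensure a smooth experience for everyone.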

-

-

-## Citation

-

-If you find this book or code useful for your research, please consider citing it.

-

-Chicago-style citation:

-

-> Raschka, Sebastian. *Build A Large Language Model (From Scratch)*. Manning, 2024. ISBN: 978-1633437166.

-

-BibTeX entry:

-

-```

-@book{build-llms-from-scratch-book,

- author = {Sebastian Raschka},

- title = {Build A Large Language Model (From Scratch)},

- publisher = {Manning},

- year = {2024},

- isbn = {978-1633437166},

- url = {https://www.manning.com/books/build-a-large-language-model-from-scratch},

- github = {https://github.com/rasbt/LLMs-from-scratch}

-}

-```

+

+

+

+ +

+

+

+

+

+ +

+

+

+ @@ -26,7 +26,7 @@ The method described in this book for training and developing your own small-but

-

-

-

- -

-

-

-

-

- -

-

-

-