
By Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, Alexander C. Berg.

SSD is a unified framework for object detection with a single network. You can use the code to train and evaluate a network for the object detection task. For more details, please refer to our arXiv paper and our slides.

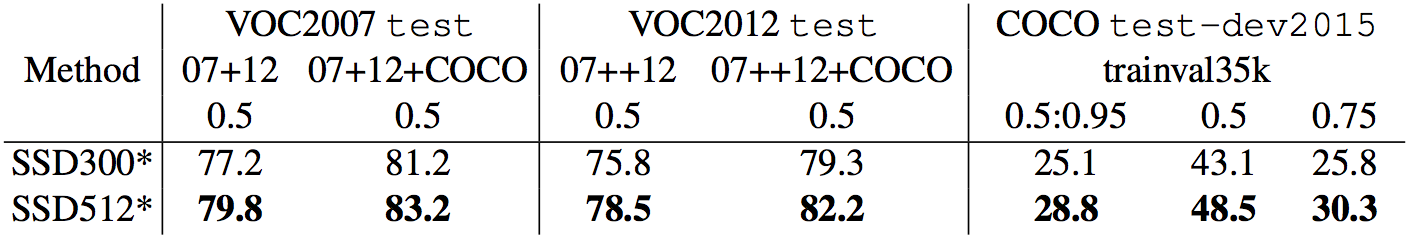

| System | VOC2007 test mAP | FPS (Titan X) | Number of Boxes | Input resolution |
|---|---|---|---|---|
| Faster R-CNN (VGG16) | 73.2 | 7 | ~6000 | ~1000 x 600 |
| YOLO (customized) | 63.4 | 45 | 98 | 448 x 448 |
| SSD300* (VGG16) | 77.2 | 46 | 8732 | 300 x 300 |
| SSD512* (VGG16) | 79.8 | 19 | 24564 | 512 x 512 |

Note: SSD300* and SSD512* are the latest models. Current code should reproduce these results.
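The "Number of Boxes" values for the two SSD rows can be reproduced by summing the default boxes over all prediction layers. The sketch below assumes the feature-map sizes and boxes-per-location of the VGG16 configurations described in the SSD paper; those values are not stated in this README, and `count_default_boxes` is a hypothetical helper name used only for illustration.

```python
# Feature-map side lengths and default boxes per location for each
# prediction layer. These values are assumptions taken from the SSD paper's
# VGG16 configurations, not from this README.
SSD300_LAYERS = [(38, 4), (19, 6), (10, 6), (5, 6), (3, 4), (1, 4)]
SSD512_LAYERS = [(64, 4), (32, 6), (16, 6), (8, 6), (4, 6), (2, 4), (1, 4)]


def count_default_boxes(layers):
    """Sum side * side * boxes_per_location over all prediction layers."""
    return sum(side * side * per_loc for side, per_loc in layers)


if __name__ == "__main__":
    print("SSD300:", count_default_boxes(SSD300_LAYERS))  # 8732
    print("SSD512:", count_default_boxes(SSD512_LAYERS))  # 24564
```

Most of the boxes come from the largest feature map, which is why the count grows quickly with input resolution.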

Please cite SSD in your publications if it helps your research:

    @inproceedings{liu2016ssd,
      title = {{SSD}: Single Shot MultiBox Detector},
      author = {Liu, Wei and Anguelov, Dragomir and Erhan, Dumitru and Szegedy, Christian and Reed, Scott and Fu, Cheng-Yang and Berg, Alexander C.},
      booktitle = {ECCV},
      year = {2016}
    }

- Get the code. We will call the directory that you cloned Caffe into `$CAFFE_ROOT`.

      git clone https://github.com/weiliu89/caffe.git
      cd caffe
      git checkout ssd

- Build the code. Please follow the Caffe instructions to install all necessary packages and build it.

      # Modify Makefile.config according to your Caffe installation.
      cp Makefile.config.example Makefile.config
      make -j8
      # Make sure to add $CAFFE_ROOT/python to your PYTHONPATH.
      make py
      make test -j8
      # (Optional)
      make runtest -j8

- Download the fully convolutional reduced (atrous) VGGNet. By default, we assume the model is stored in `$CAFFE_ROOT/models/VGGNet/`.

- Download the VOC2007 and VOC2012 datasets. By default, we assume the data is stored in `$HOME/data/`.

      # Download the data.
      cd $HOME/data
      wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar
      wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar
      wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar
      # Extract the data.
      tar -xvf VOCtrainval_11-May-2012.tar
      tar -xvf VOCtrainval_06-Nov-2007.tar
      tar -xvf VOCtest_06-Nov-2007.tar

- Create the LMDB file.

      cd $CAFFE_ROOT
      # Create the trainval.txt, test.txt, and test_name_size.txt in data/VOC0712/
      ./data/VOC0712/create_list.sh
      # You can modify the parameters in create_data.sh if needed.
      # It will create lmdb files for trainval and test with encoded original image:
      #   - $HOME/data/VOCdevkit/VOC0712/lmdb/VOC0712_trainval_lmdb
      #   - $HOME/data/VOCdevkit/VOC0712/lmdb/VOC0712_test_lmdb
      # and make soft links at examples/VOC0712/
      ./data/VOC0712/create_data.sh

- Train your model and evaluate the model on the fly.

      # It will create model definition files and save snapshot models in:
      #   - $CAFFE_ROOT/models/VGGNet/VOC0712/SSD_300x300/
      # and job file, log file, and the python script in:
      #   - $CAFFE_ROOT/jobs/VGGNet/VOC0712/SSD_300x300/
      # and save temporary evaluation results in:
      #   - $HOME/data/VOCdevkit/results/VOC2007/SSD_300x300/
      # It should reach 77.* mAP at 120k iterations.
      python examples/ssd/ssd_pascal.py

  If you don't have time to train your model, you can download a pre-trained model here.

- Evaluate the most recent snapshot.

      # If you would like to test a model you trained, you can do:
      python examples/ssd/score_ssd_pascal.py

- Test your model using a webcam. Note: press Esc to stop.

      # If you would like to attach a webcam to a model you trained, you can do:
      python examples/ssd/ssd_pascal_webcam.py

  Here is a demo video of running an SSD500 model trained on the MSCOCO dataset.

- Check out `examples/ssd_detect.ipynb` or `examples/ssd/ssd_detect.cpp` on how to detect objects using an SSD model. Check out `examples/ssd/plot_detections.py` on how to plot detection results output by `ssd_detect.cpp`.

- To train on other datasets, please refer to data/OTHERDATASET for more details. We currently support COCO and ILSVRC2016. We recommend using `examples/ssd.ipynb` to check whether the new dataset is prepared correctly.
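The steps above rely on several default locations. As a convenience, here is a minimal sketch of a check for those directories before starting a long training run. The paths are the defaults named in this README; the helper names `expected_paths` and `missing_paths` are hypothetical and not part of the SSD code.

```python
import os


def expected_paths(caffe_root, home):
    """Default locations named in the steps above, keyed by a short label."""
    lmdb_dir = os.path.join(home, "data", "VOCdevkit", "VOC0712", "lmdb")
    return {
        "pycaffe": os.path.join(caffe_root, "python"),
        "vgg_model_dir": os.path.join(caffe_root, "models", "VGGNet"),
        "trainval_lmdb": os.path.join(lmdb_dir, "VOC0712_trainval_lmdb"),
        "test_lmdb": os.path.join(lmdb_dir, "VOC0712_test_lmdb"),
    }


def missing_paths(caffe_root, home):
    """Return the sorted labels whose directories do not exist yet."""
    paths = expected_paths(caffe_root, home)
    return sorted(label for label, path in paths.items()
                  if not os.path.isdir(path))


if __name__ == "__main__":
    # Assumption: CAFFE_ROOT is exported; otherwise fall back to the cwd.
    caffe_root = os.environ.get("CAFFE_ROOT", os.getcwd())
    home = os.path.expanduser("~")
    paths = expected_paths(caffe_root, home)
    for label in missing_paths(caffe_root, home):
        print("missing:", label, "->", paths[label])
```

If you changed the defaults in `create_data.sh` or stored the data elsewhere, adjust the paths in `expected_paths` to match.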

We have provided the latest models that are trained from different datasets. To help reproduce the results in Table 6, most models contain a pretrained .caffemodel file, many .prototxt files, and python scripts.

- PASCAL VOC models:

- COCO models:

- ILSVRC models:

[1] We use `examples/convert_model.ipynb` to extract a VOC model from a pretrained COCO model.

A Caffe implementation of the MobileNet-SSD detection network, with weights pretrained on VOC0712 (mAP = 0.727).

| Network | mAP | Download | Download |
|---|---|---|---|
| MobileNet-SSD | 72.7 | train | deploy |

- Download SSD source code and compile (follow the SSD README).

- Download the pretrained deploy weights from the link above.

- Put all the files in SSD_HOME/examples/

- Run demo.py to show the detection result.

- Convert your own dataset to an LMDB database (follow the SSD README), and create symlinks to it in the current directory.

      ln -s PATH_TO_YOUR_TRAIN_LMDB trainval_lmdb
      ln -s PATH_TO_YOUR_TEST_LMDB test_lmdb

- Create the labelmap.prototxt file and put it into the current directory.

- Use gen_model.sh to generate your own training prototxt.

- Download the training weights from the link above and run train.sh. After about 30000 iterations, the loss should be between 1.5 and 2.5.

- Run test.sh to evaluate the result.

- Run merge_bn.py to generate your own deploy caffemodel.
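The last step produces a deploy model without separate batch-normalization layers. The contents of merge_bn.py are not shown here, so the following is only a sketch of the standard batch-norm folding identity in plain Python; that the script does exactly this is an assumption. The function names and the `eps` default are illustrative, and `gamma`/`beta` stand for the learned scale and shift.

```python
import math


def fold_batchnorm(weights, biases, mean, var, gamma, beta, eps=1e-5):
    """Fold y = gamma * (conv(x) - mean) / sqrt(var + eps) + beta into conv.

    weights: one flat list of kernel values per output channel.
    All other arguments: one value per output channel.
    """
    new_weights, new_biases = [], []
    for w, b, m, v, g, bt in zip(weights, biases, mean, var, gamma, beta):
        scale = g / math.sqrt(v + eps)
        new_weights.append([wi * scale for wi in w])
        new_biases.append((b - m) * scale + bt)
    return new_weights, new_biases


def conv_at_one_position(weights, biases, patch):
    """Output of every channel for a single flattened input patch."""
    return [sum(wi * xi for wi, xi in zip(w, patch)) + b
            for w, b in zip(weights, biases)]


if __name__ == "__main__":
    # Hypothetical two-channel layer, used only to show the call.
    w, b = [[1.0, -2.0], [0.5, 0.25]], [0.1, -0.3]
    folded_w, folded_b = fold_batchnorm(
        w, b, mean=[0.2, -0.1], var=[1.5, 0.7],
        gamma=[0.9, 1.1], beta=[0.05, -0.02])
    print(conv_at_one_position(folded_w, folded_b, [3.0, 4.0]))
```

Because the folded layer computes the same output as convolution followed by normalization, the deploy network needs fewer layers at inference time.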

There are two primary differences between this model and MobileNet-SSD on TensorFlow:

- ReLU6 layer is replaced by ReLU.

- For the conv11_mbox_prior layer, the anchors are [(0.2, 1.0), (0.2, 2.0), (0.2, 0.5)] vs. TensorFlow's [(0.1, 1.0), (0.2, 2.0), (0.2, 0.5)].
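To make the anchor difference concrete, the sketch below converts each pair into a box width and height. It assumes each tuple is (scale, aspect ratio) and uses the SSD paper's convention of w = s * sqrt(ar) and h = s / sqrt(ar). Neither assumption is stated in this README, and `anchor_size` is a hypothetical helper.

```python
import math


def anchor_size(scale, aspect_ratio):
    """Return (width, height) as fractions of the image size.

    Uses w = s * sqrt(ar) and h = s / sqrt(ar), so the area stays s * s.
    """
    root = math.sqrt(aspect_ratio)
    return scale * root, scale / root


# Anchor lists quoted in the text above, read as (scale, aspect_ratio).
CAFFE_ANCHORS = [(0.2, 1.0), (0.2, 2.0), (0.2, 0.5)]
TENSORFLOW_ANCHORS = [(0.1, 1.0), (0.2, 2.0), (0.2, 0.5)]

if __name__ == "__main__":
    for name, anchors in (("caffe", CAFFE_ANCHORS),
                          ("tensorflow", TENSORFLOW_ANCHORS)):
        sizes = [tuple(round(v, 3) for v in anchor_size(s, ar))
                 for s, ar in anchors]
        print(name, sizes)
```

Under these assumptions, the only difference is the square anchor: it covers 0.2 of the image side in this model and 0.1 in the TensorFlow version.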

I trained this model from a MobileNet classifier (caffemodel and prototxt) converted from TensorFlow. I first trained the model on MS-COCO and then fine-tuned it on VOC0712. Without MS-COCO pretraining, it only reaches mAP = 0.68.