Wait, ANOTHER tool to take code files and turn them into a single prompt file for Large Language Models? How many tools like this does the world need?! There are already so many:

… and others keep cropping up. Yet, Your Source to Prompt stands out with a unique set of capabilities that address many pain points these existing tools don’t fully resolve.

In fact, I built it to scratch my own itch: I was wasting too much time with other tools that made me repeat myself over and over or made it difficult to work with private repos.

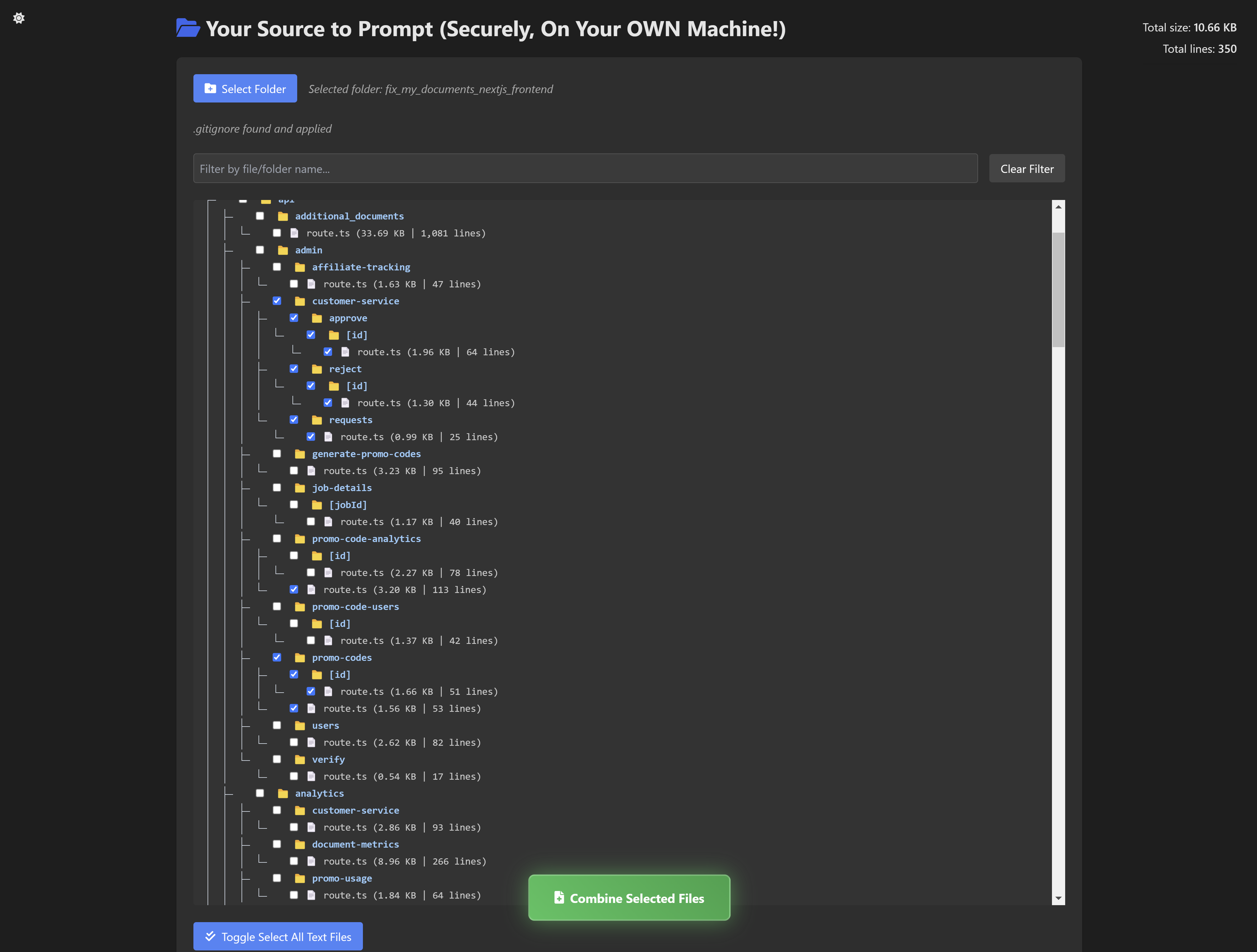

Choose files visually:

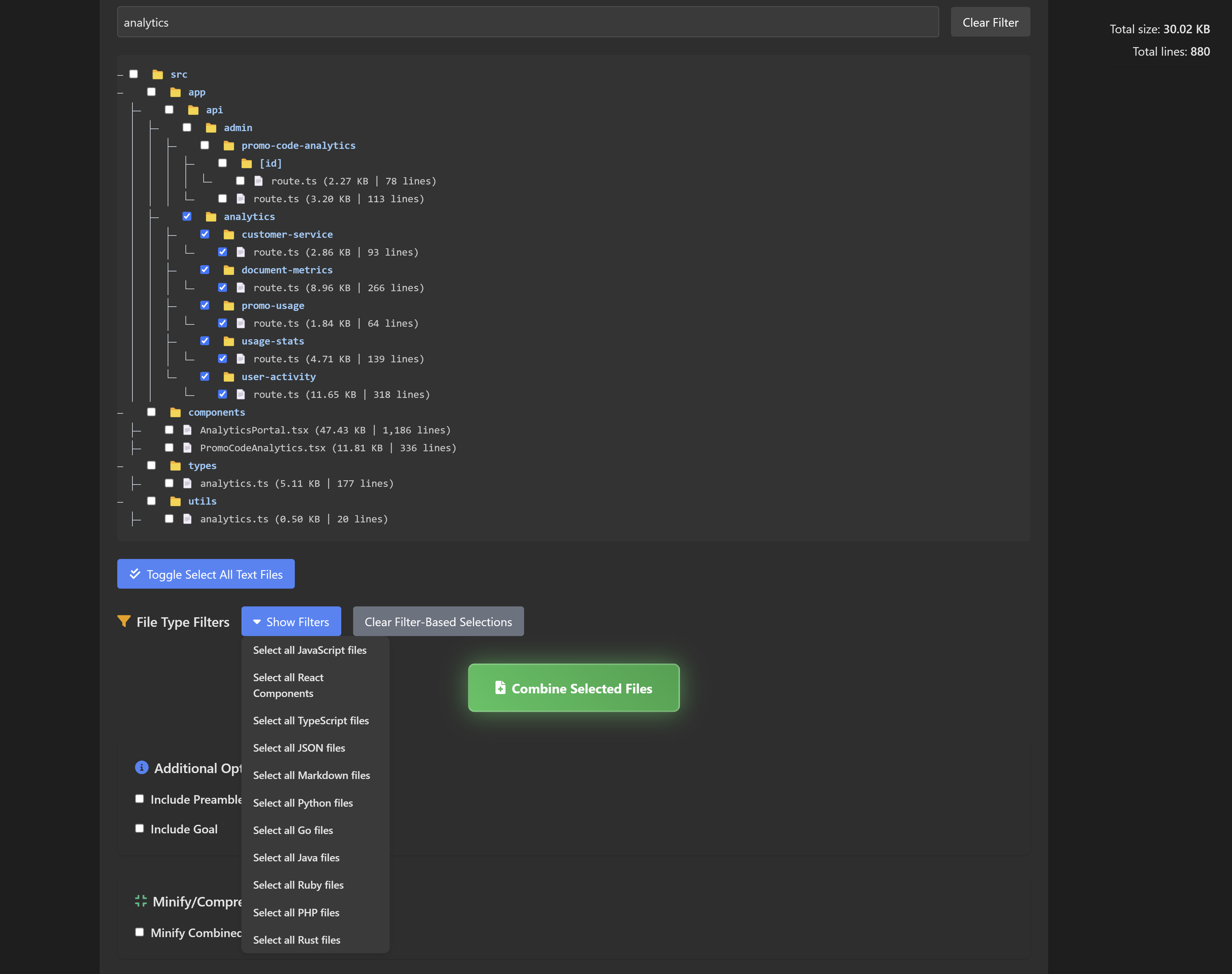

String filters and quick select options:

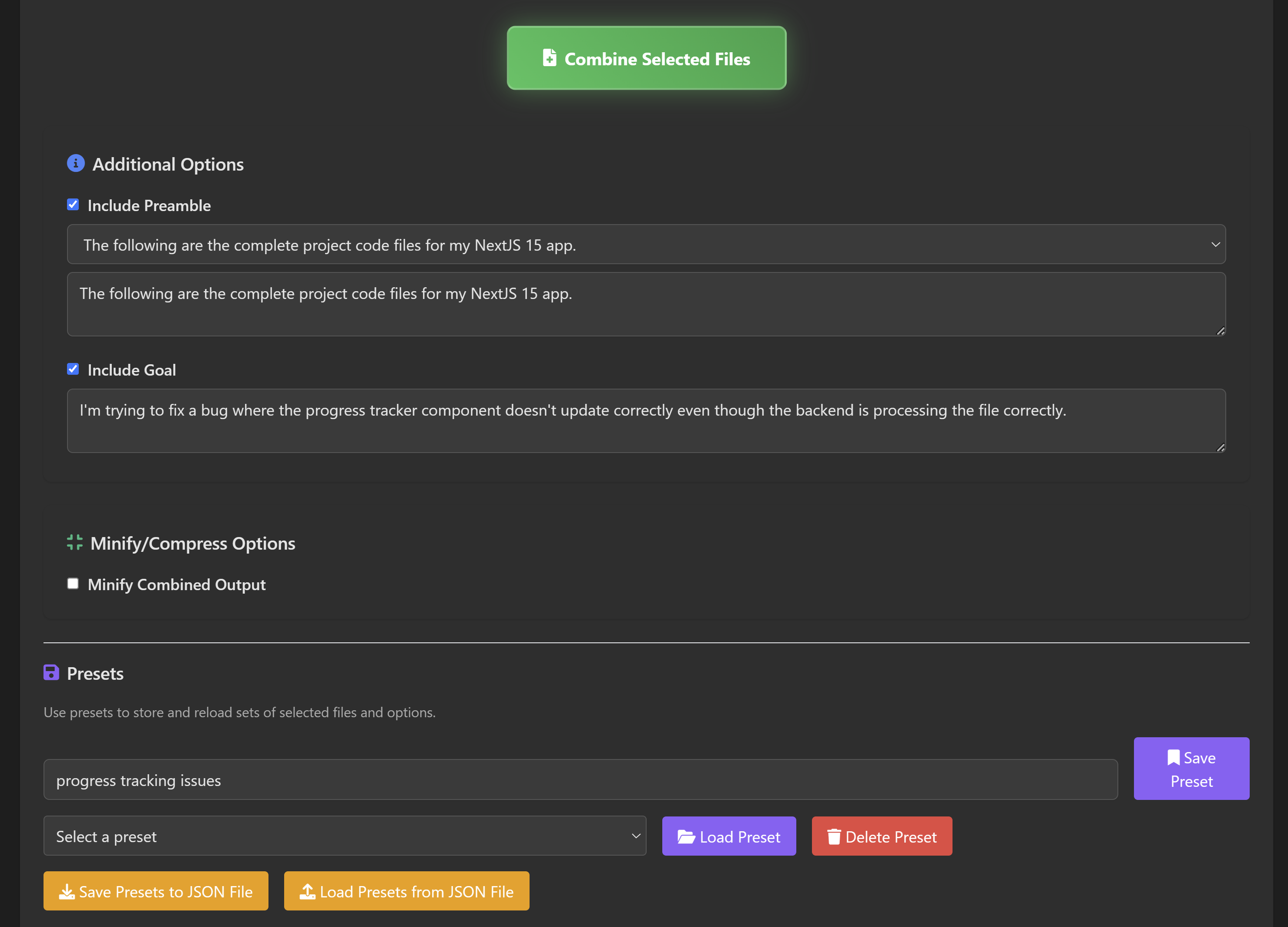

Add preamble text and goals; save and load presets:

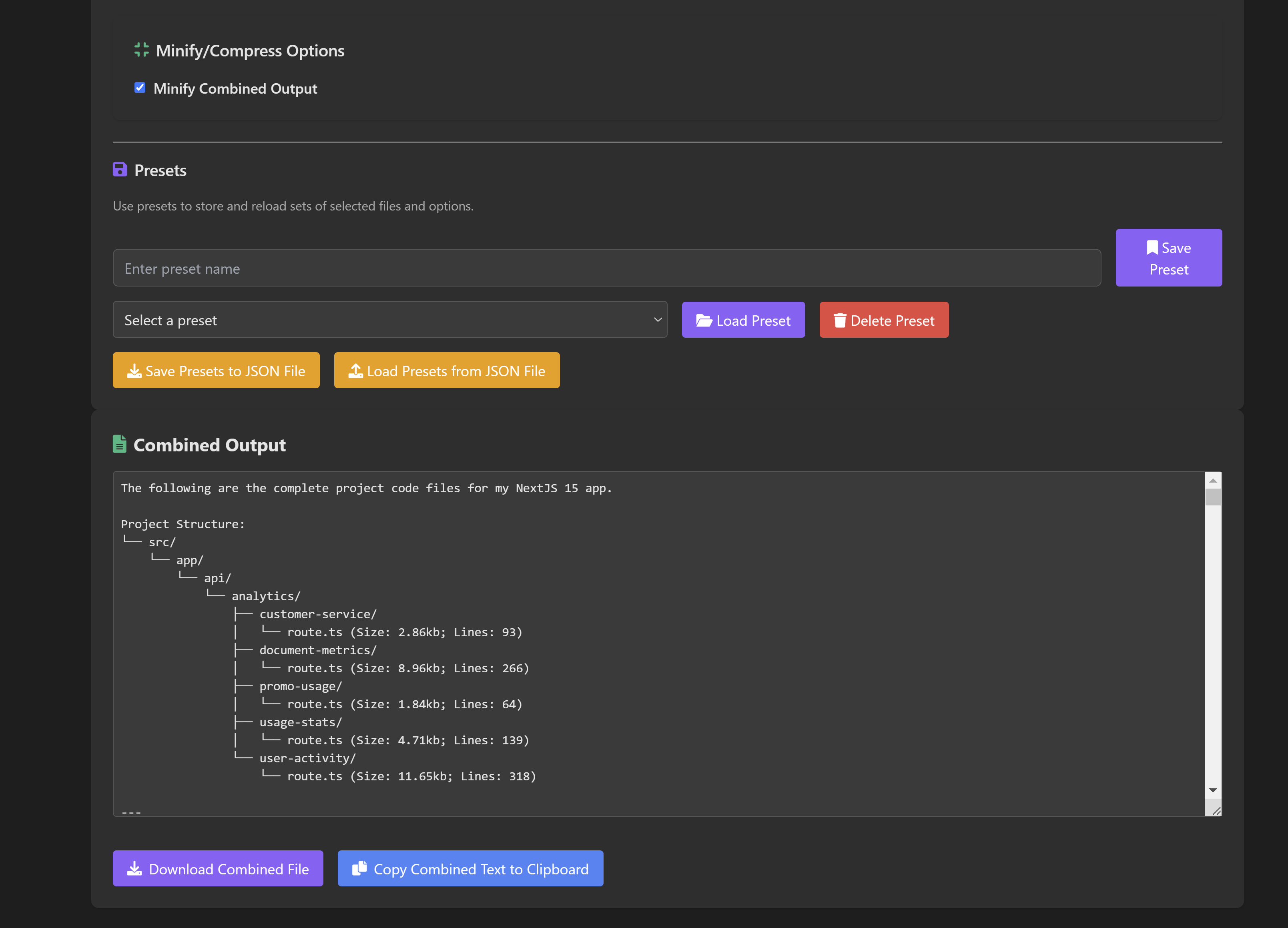

Easily save the final output text as a file or copy to clipboard:

Your Source to Prompt is a single .html file that, when opened in a modern browser (like Chrome), provides a complete GUI to easily select code files and combine them into a single text output. The key innovation is that it runs entirely in your browser, with no external dependencies or services. It’s:

- Local and Secure: Your code never leaves your machine.

- No Installation Hassles: No Python, no Node.js, no CLI fiddling. Just a single, self-contained HTML file that you open in a modern Chromium-based browser, and it "just works."

- Works with Any Folder: Not limited to Git repos.

- Optimized for Repeated Use: Save and load presets of file selections and settings.

This tool is all about letting you focus on your actual LLM-driven coding tasks without wrestling with complex pipelines or risking your code’s privacy.

- Fully Local & Secure: Just open the `.html` file. The modern File System Access API lets you read from your local drive directly. No server, no GitHub auth tokens, no privacy concerns.

- No Dependencies: Requires only a recent Chromium-based browser. No installations, no package managers.

- Use with Any Folder or Repo: Perfect for private codebases or just a random set of files you want to show the LLM.

- Presets to Save Time: Preset functionality lets you store your favorite file selections and reload them instantly. Save to `localStorage` or export/import as JSON.

- Efficient File Selection:
  - String-based filtering to quickly find files.
  - Convenient mass selection (e.g., “Select all React files”).
  - Toggle all text files with one click.

- Context Size Awareness: A tally of total size and lines is always visible, with warnings if you’re likely to exceed context windows (like GPT-4 or Claude limits).

- Hierarchical Structure Preview: Automatically includes a tree-like structure showing file sizes and line counts before the code listings, providing vital context to the LLM.

- Minification to Save Space: Optionally minify JS, CSS, HTML, JSON, and even trim other text files for maximum context efficiency.

- Custom Preamble & Goal: Prepend your combined output with a preamble and a stated goal so the LLM understands the context and your intentions before reading the code.

- Export/Import Presets: Share or back up your presets easily so you don't waste time selecting the same complex grouping of files over and over again!

- User-Friendly UI with Dark Mode: It looks nice and is easy to get started with, and comprehensive tooltips explain what everything does.

- On clicking "Select Folder," the tool uses the File System Access API to prompt you for a local directory.

- Once granted, it reads the contents of that directory (and subdirectories), filtering out ignored files (via `.gitignore` or default patterns).

- Everything happens locally, in-browser.

- The tool scans your chosen folder, recursively enumerating files and directories.

- `.gitignore` patterns are applied to skip irrelevant files.

- Files are displayed in a nested tree structure.

- Text files (based on known extensions) get checkboxes, so you can select which ones to include in the final prompt.
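The scanning step can be sketched roughly as follows. This is a minimal illustration, not the tool's actual code: `walk` and `DEFAULT_IGNORES` are hypothetical names, and the ignore check is a plain name match rather than full `.gitignore` semantics. It relies only on the `entries()` iterator and `kind` property that the File System Access API exposes on directory handles.

```javascript
// Hypothetical default ignore list; the tool's real defaults may differ.
const DEFAULT_IGNORES = ["node_modules", ".git", "dist"];

// Recursively collect file paths under a directory handle.
// `dirHandle` is expected to behave like a FileSystemDirectoryHandle:
// entries() asynchronously yields [name, handle] pairs, and each handle
// has kind === "file" or kind === "directory".
async function walk(dirHandle, ignores = DEFAULT_IGNORES, prefix = "") {
  const files = [];
  for await (const [name, handle] of dirHandle.entries()) {
    // Exact-name match only; real .gitignore rules (globs, negation) are richer.
    if (ignores.includes(name)) continue;
    const path = prefix + name;
    if (handle.kind === "directory") {
      files.push(...(await walk(handle, ignores, path + "/")));
    } else {
      files.push(path);
    }
  }
  return files.sort();
}

// In a Chromium-based browser the root handle would come from a user gesture:
//   const root = await window.showDirectoryPicker();
//   const paths = await walk(root);
```

Passing the ignore list as a parameter keeps the walker independent of where the patterns come from (a parsed `.gitignore` or built-in defaults).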

- Selections and configurations are stored in `localStorage` as JSON.

- Name and save a preset to quickly restore a known configuration.

- Export and import presets to/from a `.json` file for portability.
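As a rough illustration of how presets like these could be stored, here is a minimal sketch. The storage key, the preset shape, and all function names are assumptions made for this example; the tool's real schema may differ. The functions take a `storage` argument so they work with `window.localStorage` in the browser or with any object offering `getItem`/`setItem`.

```javascript
// Hypothetical storage key, not taken from the tool.
const PRESET_KEY = "ystp-presets";

// Read all presets as an object; a missing key yields an empty object.
function readAll(storage) {
  return JSON.parse(storage.getItem(PRESET_KEY) ?? "{}");
}

// Save (or overwrite) a named preset.
function savePreset(storage, name, preset) {
  const all = readAll(storage);
  all[name] = preset;
  storage.setItem(PRESET_KEY, JSON.stringify(all));
}

// Load a named preset, or null if it does not exist.
function loadPreset(storage, name) {
  return readAll(storage)[name] ?? null;
}

// Produce pretty-printed JSON text suitable for saving as a .json file.
function exportPresets(storage) {
  return JSON.stringify(readAll(storage), null, 2);
}

// Merge presets from exported JSON text; JSON.parse throws on malformed input.
function importPresets(storage, jsonText) {
  const incoming = JSON.parse(jsonText);
  storage.setItem(PRESET_KEY, JSON.stringify({ ...readAll(storage), ...incoming }));
}
```

In the browser, a call might look like `savePreset(window.localStorage, "api", { files: ["src/api.js"], minify: true })`.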

- The UI calculates total selected size and line count as you select files.

- Approximate warnings appear if you exceed thresholds likely to cause trouble with certain LLM contexts.
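A tally of this kind might be computed along the following lines. This is only a sketch: the function name, the `{ text }` file shape, and especially the warning threshold are illustrative assumptions, not the values the tool uses.

```javascript
// Illustrative threshold only; the tool's actual limits are not stated here.
const WARN_BYTES = 400000;

// Sum UTF-8 byte size and line count over the selected files.
// Each file is assumed to be an object with a `text` string.
function tally(files, warnBytes = WARN_BYTES) {
  const encoder = new TextEncoder();
  let bytes = 0;
  let lines = 0;
  for (const { text } of files) {
    bytes += encoder.encode(text).length;
    // Count newline characters, plus one for a final line without a newline.
    const newlines = (text.match(/\n/g) || []).length;
    lines += newlines + (text.length > 0 && !text.endsWith("\n") ? 1 : 0);
  }
  return { bytes, lines, warn: bytes > warnBytes };
}
```

Byte size is only a proxy for context usage; actual token counts depend on the model's tokenizer, which is why warnings of this sort are approximate.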

- Preamble: A custom introduction or explanation.

- Goal: A concise statement of what you want to achieve.

- These help frame the LLM prompt so that the code is contextualized and not just dropped in cold.

- Uses client-side libraries:
  - Terser for JS/TS minification.
  - csso for CSS.
  - html-minifier-terser for HTML.

- JSON is re-serialized to a single line.

- Other text files have trailing whitespace trimmed.

This process helps fit larger codebases into the LLM’s context window, and it tells you the space savings so you can decide if it's worth it.
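The two library-free cases, JSON re-serialization and whitespace trimming, along with the savings report, can be sketched like this. The function names are hypothetical and the code is an illustration of the idea rather than the tool's implementation.

```javascript
// Re-serialize JSON onto a single line; throws if the input is not valid JSON.
function minifyJson(text) {
  return JSON.stringify(JSON.parse(text));
}

// Remove trailing spaces and tabs from every line (assumes LF line endings).
function trimTrailingWhitespace(text) {
  return text.replace(/[ \t]+$/gm, "");
}

// Report how many characters were saved and the rounded percentage.
function savings(before, after) {
  const saved = before.length - after.length;
  const percent = before.length ? Math.round((saved / before.length) * 100) : 0;
  return { saved, percent };
}
```

For example, `savings(original, minifyJson(original))` gives the numbers needed to decide whether minifying a given file is worth the loss of readability.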

- Get the HTML File: Download `your-source-to-prompt.html` from this repository to your computer.

- Open it in Chrome: Just double-click it or drag it into your browser.

- Select a Folder: Click "Select Folder" and choose your code directory. The tool will load and display your files.

- Filter and Select Files: Use the search bar to quickly find files by name. Use the quick-select buttons to grab all files of a certain type. Toggle all text files if that’s easier.

- Set Up Preamble and Goal (Optional): If desired, enable the preamble and goal options and enter your custom text.

- Minify Output (Optional): Check the minify box to reduce file size. This can help when dealing with large projects.

- Check the Tally: The total selected size and line count appear in the top-right corner. Heed the warnings if any appear.

- Save a Preset (Optional): If you want to reuse these selections and settings, enter a name and click "Save Preset." You can load it next time without re-selecting everything.

- Combine Files: Click "Combine Selected Files." The tool generates a single text output containing:
  - Your preamble (if any)
  - Your goal (if any)
  - A hierarchical summary of selected files
  - Each file preceded by a header line with the filename

- Copy or Download the Result: Use the provided buttons to copy the combined text to your clipboard or download it as a `.txt` file. Paste it into your LLM prompt and start working!
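To make the shape of the combined output concrete, here is a minimal sketch of how such a prompt could be assembled. The header format, the "Project structure" label, and the function names are assumptions for illustration; the tool's actual output format may look different.

```javascript
// Render an indented tree of the selected files with their sizes.
// Each file is assumed to be { path: "dir/name.ext", text: "..." }.
function renderTree(files) {
  const root = {};
  for (const { path, text } of files) {
    const parts = path.split("/");
    let node = root;
    // Directory keys get a trailing slash so they never collide with file names.
    for (const dir of parts.slice(0, -1)) {
      node[dir + "/"] = node[dir + "/"] || {};
      node = node[dir + "/"];
    }
    node[parts[parts.length - 1]] = `${text.length} chars`;
  }
  const out = [];
  const visit = (node, depth) => {
    for (const name of Object.keys(node).sort()) {
      const value = node[name];
      const indent = "  ".repeat(depth);
      if (typeof value === "string") {
        out.push(`${indent}${name} (${value})`);
      } else {
        out.push(indent + name);
        visit(value, depth + 1);
      }
    }
  };
  visit(root, 0);
  return out.join("\n");
}

// Assemble preamble, goal, structure summary, and file bodies into one string.
function combine({ preamble, goal, files }) {
  const sections = [];
  if (preamble) sections.push(preamble);
  if (goal) sections.push(`Goal: ${goal}`);
  sections.push("Project structure:\n" + renderTree(files));
  for (const { path, text } of files) {
    sections.push(`--- ${path} ---\n${text}`); // hypothetical header format
  }
  return sections.join("\n\n");
}
```

Placing the structure summary before the file bodies gives the LLM an overview of the project before it reads any individual file.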

Scenario: You want your LLM to help refactor a Node.js API.

- Open `your-source-to-prompt.html` in Chrome.

- Select your project folder.

- Filter by "api" to find backend files quickly.

- Use file type filters to select all `.js` files.

- Enable the preamble: "Below are the main API files from my Node.js project..."

- Add a goal: "Goal: Refactor the API handlers to follow better error-handling patterns."

- Enable Minify Output to save space.

- Combine the files.

- Copy the output and paste into the LLM’s prompt.

Now the LLM sees a well-structured, minified project context along with your stated goal, and everything was prepared locally on your machine.

- Written in pure HTML/JS with TailwindCSS for styling and Tippy.js for tooltips.

- Uses browser-based libraries from CDNs for minification and UI enhancements.

- Data never leaves your machine.

- Works best in Chromium-based browsers supporting the File System Access API.

- Presets stored in `localStorage`; easily exported/imported as JSON.

For best results, use a Chromium-based browser like Chrome. This ensures full support for the File System Access API. Other browsers (Firefox, Safari) may not support the directory access features yet.

Your Source to Prompt may be one of many such tools, but it offers a unique combination of local operation, no-install convenience, powerful preset management, efficient file selection, minification, and thoughtful UI enhancements. It lets you quickly and securely create a well-structured prompt context for your LLM, making your AI-driven coding sessions more streamlined and effective.